Introduction

Technology permeates the lives of today’s college students like never before, making this generation much more technically savvy than previous ones. Nevertheless, college students are not always proficient in computer use as it relates to online learning and course management systems (CMS). Using this technology effectively is critical as online class enrollments continue to grow. In fall 2006, more than 3.5 million college students were enrolled in an online course, a 9.7% increase over the previous year and double the number enrolled just 4 years earlier (Allen & Seaman, 2007). Overall college enrollment, by contrast, rose only 1.5% during the same period, a gap that is expected to persist (Allen & Seaman, 2007).

Accompanying the increased use of CMS are problems associated with user frustration. The anxiety students experience when problems interfere with their attempts to complete online course requirements can have a ripple effect, as more serious or ongoing issues reach other stakeholders such as faculty, instructional developers, and possibly administrators (Dietz-Uhler, Fisher, & Han, 2007; Nitsch, 2003). The purpose of this study was to explore the value of implementing usability testing within a user-centered design framework to reduce the problems students experienced while interacting with an online course.

Literature Survey

Business and industry are well aware of the consequences of user frustration with products and constantly seek ways to avoid losing customers (Badre, 2002; Barnum, 2002b; Nielsen, 1993; Rosson & Carroll, 2002). Numerous approaches have been developed for improving product design, and user-centered design (UCD) is touted in the literature as one of the most effective (Badre, 2002; Barnum, 2002b). In UCD, the user is central to product design: observing users as they interact with a product gives designers a genuine view of the problems they experience, which can then be addressed to improve the product’s usability (Barnum, 2002b, 2007; Nielsen, 1993, 1997a; Rubin, 1994).

A key component of the UCD process is usability testing, which has become the industry standard for measuring usability. Studies of usability testing implemented within a UCD framework are numerous in the private sector and in some government entities, particularly the military (Gould, Boies, & Lewis, 1991; Gould & Lewis, 1985; Nielsen, 1993, 1994, 1995, 2000; Rubin, 1994; Shneiderman, 1998). Unfortunately, academia has lagged behind in this area of research, especially with regard to online learning, which is surprising given online learning’s exponential growth in recent years (Barnum, 2007; Roth, 1999; Swan, 2001). Crowther, Keller, and Waddoups (2004) assert that ensuring technology does not impede learning in an online environment is both the ethical and the moral responsibility of the university. Instructors, technical support teams, developers, and administrators can all be affected by issues that surface while students work in the online classroom (e.g., inability to access instructional materials, submit assignments, or post a message to the Discussion Board). Research has shown that students become anxious and frustrated when course design issues interfere with completing course requirements (Dietz-Uhler et al., 2007; Nitsch, 2003). While the technical support team can address many of the problems students encounter, some, particularly those serious enough to hinder student success and retention, will burden administrators (Dietz-Uhler et al., 2007).

Employing usability testing as part of a UCD framework in the design of online courses allows developers to identify and eliminate problems students are likely to encounter early in the design process, before the course is delivered (Barnum, 2002b). All stakeholders reap the benefits. One benefit is enhanced student satisfaction, which may increase student retention (Dietz-Uhler et al., 2007; Swan, 2001). Another is the opportunity to set design standards that can be reused when developing other online courses, saving time and lowering costs.

UCD in Higher Education

Few researchers have implemented usability testing as part of a UCD framework in higher education (Corry, Frick, & Hansen, 1997; Jenkins, 2004; Long, Lage, & Cronin, 2005). Jenkins (2004) compared developers’ beliefs about the value of UCD with their actual use of it and found the two to be contradictory. Long, Lage, and Cronin (2005), working within a UCD framework, redesigned a library website based on findings from another study; insight into the interface problems users encountered led to recommendations for improving the site. Corry, Frick, and Hansen (1997) also employed a UCD framework, in their case to redesign a university website. The researchers performed iterative testing and redesigned the website to eliminate the problems found, and the new site became one of the top 50 most visited sites (Corry et al., 1997). Together, these studies indicate that the user experience can be improved by implementing the UCD framework, justifying further investigation of its application to online course design.

Number of Users Needed for Testing

Nielsen (1993, 1994, 1997a) posits that not only are five or fewer users enough to detect the majority of usability problems, but testing more users may reduce the return on investment (ROI) often used to justify usability costs. Nielsen and Landauer (1993) used data from 11 studies to determine how many users or evaluators are needed to find usability problems, weighing the number of participants against the resulting cost-benefit ratio. They determined that the optimal number, where benefits outweighed costs to the largest extent, was 3.2 users for usability testing and 4.4 experts for heuristic evaluation.

Numerous studies support Nielsen and Landauer’s (1993) findings (Lewis, 1994; Virzi, 1990, 1992). According to these researchers, most problems in a product’s design can be identified and eliminated with 3 to 5 users, provided that testing is iterative: once the major issues are addressed, minor ones come to light in later rounds. Virzi’s (1990, 1992) research indicated that 80% of the usability problems encountered were uncovered with 4 to 5 users, and his findings suggested that testing additional participants did not provide a worthwhile return on investment.
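The diminishing returns described by these researchers are commonly expressed with a simple discovery model: if each user independently reveals a given problem with probability λ, then n users are expected to uncover a proportion 1 - (1 - λ)^n of the problems. The sketch below is illustrative only and was not part of this study. It assumes λ = 0.31, a frequently quoted average per-user detection rate, and its function names are hypothetical. Real detection rates vary with the product, the tasks, and the users, which is one reason the "magic number 5" remains contested (Bevan et al., 2003).

```python
import math


def proportion_found(n_users: int, detect_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n_users test users.

    Assumes each user independently reveals any given problem with
    probability detect_rate (an assumed value, not measured in this study).
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 < detect_rate < 1.0:
        raise ValueError("detect_rate must be strictly between 0 and 1")
    return 1.0 - (1.0 - detect_rate) ** n_users


def users_needed(target: float, detect_rate: float = 0.31) -> int:
    """Smallest number of users whose expected discovery reaches target."""
    if not 0.0 <= target < 1.0:
        raise ValueError("target must be in [0, 1)")
    if target == 0.0:
        return 0
    # Solve 1 - (1 - L)^n >= target for n, then round up to a whole user.
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - detect_rate))


if __name__ == "__main__":
    previous = 0.0
    for n in range(1, 9):
        found = proportion_found(n)
        # The gain from each added user shrinks: diminishing returns.
        print(f"{n} users: {found:.0%} found (+{found - previous:.0%})")
        previous = found
    print("Users needed for 80%:", users_needed(0.80))
```

Under these assumptions, four users are expected to reveal about 77% of the problems and five about 84%, which is consistent with Virzi’s 80% figure, and each additional user adds less than the one before.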

Krug (2006) also supports the use of a small sample size, asserting that some data about problems are better than none, particularly early in development, when they can prevent patterns of flawed design from becoming established. Dumas and Redish (1999) argue that while usability testing is similar to research, their objectives differ. Usability testing aims to improve a product’s design by observing users as they interact with it in order to identify and eliminate problems; research, by contrast, explores the existence of phenomena. Usability testing yields quantitative data in terms of the number of errors (Dumas & Redish, 1999) and qualitative data in terms of the nature of the problems (Barnum, 2002a).

Opposing views regarding the appropriate number of users for effective usability testing indicate that no single number is a cure-all for eliminating every problem (Bevan et al., 2003). As a result, experts offer several considerations for determining the appropriate number of participants, such as (a) the product undergoing testing, (b) the characteristics and needs of each participant, (c) return on investment, (d) the testing objectives, and (e) statistical analysis (Dumas & Redish, 1999; Palis, Davidson, & Alvarez-Pousa, 2007).

Research Design

This study took place at a major research university in the southeastern United States that, at the time of the study, offered more than 250 online courses and supported more than 40 online programs. One department within the university employed a team of six instructional developers who collaborated with subject matter experts to develop new courses and revise existing ones. The department also employed a program manager who oversaw this process, in part by reviewing newly developed courses and existing online courses nearing the end of their 3-year cycle to ensure quality. For this study, a research team was formed from the instructional design team, with individual members assigned the roles of observer, instructional developer, camera operator, and test facilitator. The primary researcher for this study served as the test administrator.

For this study, one course was selected to undergo usability testing based on three criteria: (a) a signed course authorization indicating departmental approval for revisions had been submitted; (b) the course’s 3-year review cycle had expired, prompting a review; and (c) upon review, the course failed to meet quality standards. The department assessed the quality of online courses using the Quality Matters rubric, a nationally recognized faculty peer review process. The rubric features 40 research-based standards that align with best practices and accrediting standards for online learning (Welcome to, 2006). English 101 online met all three criteria and was therefore selected for this study. The online course was originally developed collaboratively by an instructional developer and a content expert. In subsequent semesters, however, various instructors taught the course, some with little or no instructional design expertise, and each modified the design. Consequently, the course bore little resemblance to its original design. The English department agreed to have the course undergo usability testing because the department was nearing accreditation review. Additionally, the department hoped to establish consistent design standards for its online courses.

The overarching research question guiding this study was: Does the implementation of usability testing as part of a user-centered design (UCD) framework improve online course design? To investigate this question, this study explored the types of problems participants encountered while completing tasks within the course, the areas participants found pleasing, and the developers’ perceptions of reported problems and decisions regarding revisions.

A purposeful sample of 32 participants was drawn from a freshman population of nearly 5,300 students. Students were solicited through the e-mail accounts on a list provided by the registrar’s office and were informed of the two inclusion criteria: they had to be freshmen, and they could never have been enrolled in an online version of English 101 at the university. Students meeting these criteria were scheduled to participate in the order in which they responded.

The instructional design department’s recording studio served as the testing center. Instructors typically use the studio to record lectures and welcome videos for online and blended courses. The room is arranged like a traditional classroom, with rows of tables and chairs and a large desk at the head of the room; the computer that participants used during the testing sessions sat on this desk. A soundproof room at the back of the studio houses the equipment that runs the camera, lights, audio, and related devices.

Instrumentation

Several instruments were used for data collection and analysis, including checklists, a protocol, a testing schedule, and surveys. These instruments were originally developed by Barnum (2002b) for a usability study of the Hotmail interface, who granted permission to use them in this study. Each instrument was adapted as needed for the current study. Data-capture software and journals were also used for data collection and analysis. The instruments are described below in the order in which they were used in the study.

Checklists

Each team member received an individual checklist specific to his or her duties. For instance, the test facilitator’s checklist called for turning on the testing equipment, greeting participants, and explaining what to do. The camera operator’s checklist called for turning on and testing the recording equipment, adjusting and running it during recording, and saving recordings to a DVD for the test administrator.

Protocol

To ensure that usability testing went smoothly and consistently, the test facilitator was provided a protocol. The protocol included a script and described what the test facilitator was to do throughout the test session.

Schedule

A testing schedule was developed detailing the order and times of test sessions and research team meetings, as well as administrative requirements. The schedule was tried out during two mock usability test sessions to ensure that enough time was allotted for each participant.

Pretest, Task, and Exit Surveys

A pretest survey of nine forced-choice items was adapted from the Hotmail usability study to collect data on participants’ experience and satisfaction with computers and online coursework. Other surveys from the Hotmail study were adapted to collect participants’ opinions about each of the five tasks they performed during testing; each of these five task surveys included 2 to 3 forced-choice items and 3 to 5 open-ended items. A final survey of 5 open-ended items was adapted to collect participants’ opinions about the course as a whole after testing. To support content validity and reliability, the surveys were piloted and revised. The revised surveys were then entered into the MORAE study configuration, described below, so that each survey was administered automatically at the appropriate time before, during, and after testing; at the completion of the session, for example, the exit survey appeared automatically.

Software

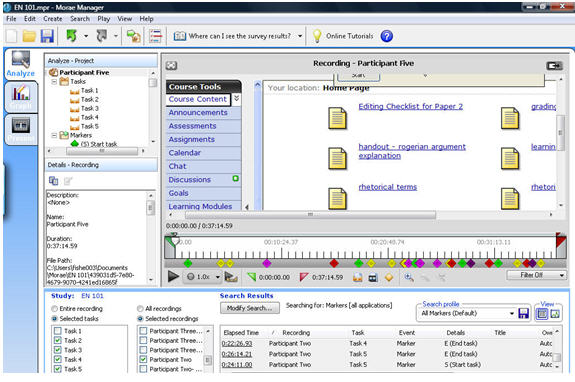

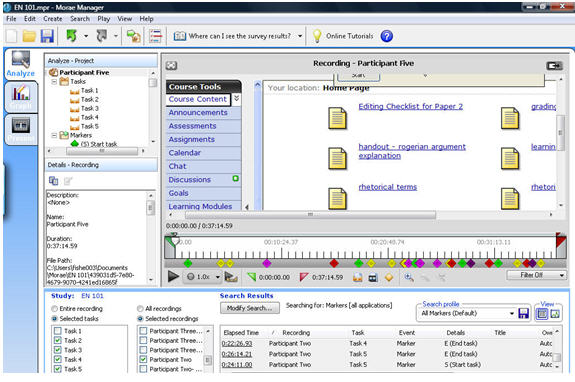

MORAE software, an application that digitally captures participants’ interaction with the computer and records audio and video of their comments and facial expressions, was also used for data collection and analysis. The tool also enables observers to connect remotely from another computer and watch the participant’s entire experience as it is recorded. While monitoring, observers enter comments and mark the times at which participants encounter problems or express satisfaction. The recorded sessions allow researchers to analyze the data, generate reports for the instructional developer, and create visual displays for presenting data, such as Excel spreadsheets and video clips. A sample of the MORAE Manager software interface is provided in Figure 1.

The test administrator developed the five tasks that participants completed during testing and entered them into MORAE. For example, Task 1, Begin the Course, instructed students to enter the course and determine how they were to begin. Task 2 instructed students to locate Assignment 1 and determine how to complete and submit it. Tasks 3 through 5 were similar. The tasks were intentionally vague in order to simulate as closely as possible the online environment, in which students must figure out what to do using the resources provided within the course.

Journals

The instructional developers assigned to the course kept a journal for each round of testing. In it, they recorded their opinions about the problems students encountered during usability testing, as identified in the reports they received from the analysis of the recordings. The journals also documented the revisions the developers made and their justifications for the revisions they chose to make or omit.

Prior to actual testing, two mock usability test sessions were held to ensure that the usability team understood their roles, had configured the equipment properly, was familiar with the MORAE software, and had established a reasonable schedule for conducting test sessions with real participants. Issues that surfaced were addressed. Data collection points were established.

Figure 1. MORAE software

Usability Testing Session

Each usability testing session consisted of one participant completing a usability test, as follows. Participants were given instructions for the session. The facilitator also asked each participant to practice the think-aloud protocol while loading a stapler to ensure that the participant understood the concept of thinking aloud. Thinking aloud was an essential part of data collection because it allowed students’ thought processes to be recorded as they completed the tasks.

Once students logged into the targeted course, the facilitator started the MORAE recording, which automatically administered the Pretest Survey. When participants finished the survey, the instructions for the first task appeared on the screen in a pop-up window. Participants controlled when each task started and ended by clicking the Start Task and End Task buttons near the bottom of the window. Each time a participant clicked End Task, a survey on that task appeared automatically, and submitting the survey brought up the next task. Participants progressed through the remaining tasks and surveys in the same manner. Once the Task 5 Survey was submitted, the exit survey appeared; completing it marked the end of the test session.

As they worked, participants were reminded to think aloud and voice their thoughts about the tasks. Observers marked the points at which participants’ comments or nonverbal cues indicated that they had encountered a problem or were satisfied. MORAE captured the beginning and ending times of each task, participants’ comments and nonverbal cues, and their navigation paths.

Usability Testing Round

One round of usability testing consisted of 3 to 5 participants each completing a test session. As mentioned previously, the literature indicates the benefits of testing with a small number of users (Krug, 2006; Nielsen & Landauer, 1993). Each round was scheduled over 1 or 2 days, depending on participant attendance. Because each round required at least 3 participants, a round continued on the next scheduled testing day if fewer than 3 participants attended on the first day. Following each round, the observers, facilitator, and researcher discussed their observations and how the problems might be addressed.

Data Analysis

Recordings of the usability testing sessions were imported into the MORAE Manager application on the researcher’s computer for analysis. The researcher coded the recordings and searched for common themes, which were compiled into a word-processed report organized by subject matter. Positive comments made by participants during testing were also included in the report so that the features participants liked would be retained in the course redesign. Participants’ responses to the pretest, post-task, and exit surveys, captured in the MORAE recordings, were exported into spreadsheets, as were observers’ markers and comments. These materials were provided to the instructional developer to guide decisions about redesigning the course. Additionally, the journals kept by the instructional developers were analyzed.

Results

A number of problems surfaced during usability testing sessions. From the problems observed, three dominant themes emerged: course tools, readability, and lack of clarity.

Theme 1: Course Tools

In Round 1, the Course Tools menu was identified as an area where participants experienced confusion. One apparent contributor was the number of menu tabs, some of which led to no content. These problems surfaced to a lesser degree in subsequent rounds as the menu was revised. A second issue was that the Course Tools menu repeatedly collapsed, hiding the tabs’ labels; this occurred throughout testing, although less often in Round 3. The research team investigated possible solutions but found no way to prevent the menu from collapsing, and the problem resurfaced in Round 4.

Theme 2: Readability

Because of formatting issues and lengthy narratives, the readability of course content was also a dominant theme throughout testing. Participants repeatedly had trouble finding information, which hindered and sometimes even prevented task completion. This is not surprising given that people read differently on the Web, scanning pages for specific information rather than reading word for word (Nielsen, 1997b).

Following each iteration of usability testing, both participants and observers recommended presenting content with obvious headings and in bulleted or outline format, an approach supported in the literature (Nielsen, 1997b). The Course Content page clearly illustrates this. At the beginning of the first round of testing, the page contained no instructions and a number of hyperlinks in no apparent order. Before the next round, navigation instructions in paragraph form were added to reduce the confusion students experienced in beginning the course (Task 1). However, participants in Round 2 ignored the instructions and continued to have difficulty completing Task 1. As participants and observers recommended, the instructional developer then reformatted the instructions as a bulleted list, and participants’ ability to complete Task 1 improved markedly in Round 3. Problems with the navigation instructions resurfaced in Round 4, apparently because the instructional developer had added informational text in response to another issue. Therefore, in the final iteration of revisions, the navigation instructions were condensed again. The recommended readability changes to other course documents, such as the Syllabus and Welcome Letter, were not applied; as a result, participants did not complete all of the assignments associated with Tasks 2-5.

Theme 3: Lack of Clarity

Lack of clarity also emerged as a theme in this study. Revisions intended to correct problems often created new ones that were indirectly related to readability. For instance, the navigation instructions added to the Course Content page to clarify how to complete assignments, described previously, were initially ignored because of readability issues, and as a result participants missed assignments. Locating the appropriate assignments, submitting them, and finding due dates remained recurring problems despite numerous attempts to resolve them.

Discussion

Several factors may have contributed to these recurring themes. One factor may have been the way in which the tasks were defined. In Tasks 2, 3, 4, and 5, participants were directed to find Assignments 1, 2, 3, and 4, respectively. Many participants appeared to go intuitively to the Assignments tab, even though some assignments were posted on the Discussion Board or buried at the bottom of the syllabus. The research team believed that participants assumed the assignments would be titled as they were in the tasks (e.g., Assignment 1, Assignment 2). For example, in Task 2, participants were instructed to locate Assignment 1. Many began the task by immediately opening the Assignments tab. When they could not find an item titled Assignment 1, some searched other areas of the course, while others incorrectly submitted Paper 1 for Assignment 1, Paper 2 for Assignment 2, and so forth.

Another possible factor was the primary instructional developer’s decision to forgo certain revisions recommended by both participants and observers. As previously mentioned, problems with the readability of content were reported but not always addressed. When the research team discussed participants’ comments about the spacing of one document, the instructional developer commented, “The spacing looks fine to me. The problems stem from students not reading.” The developer, a doctoral student in the instructional technology program, felt very strongly that the length of the documents should not change because participants needed all of the information they contained; she added that, as a student, she prefers to have more information.

Although some of the instructional developer’s decisions to forgo needed revisions were challenging, intervening would have been inappropriate and unethical given the researcher’s role as observer. It is important to note that the instructional development team associated with this study routinely discussed research on course design and modifications to current models. Even so, more discussion and training on readability issues are clearly needed at the developer level.

Conclusions

Does implementing usability testing as part of a UCD framework improve online course design? Although some problems that surfaced during test sessions persisted beyond the timeframe of this study, initial findings indicate that usability testing as part of a UCD framework is effective for developing an improved model for online course design. Several examples support this assertion. For instance, participants’ ability to complete Task 1 improved markedly in Round 3, following a major revision of the Course Content page. Moreover, usability testing of the course led to the development of a comprehensive calendar of assignments. Both the revised Course Content page and the assignment calendar have been implemented as templates for new online courses developed within the department. Numerous course documents, such as the Syllabus, Grading Protocol, and Letter of Agreement, were improved in terms of readability in the final round of testing. Finally, the creation and eventual improvement of modules enhanced the overall structure and organization of the course.

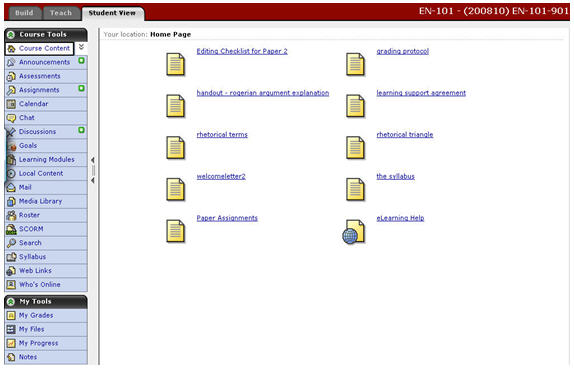

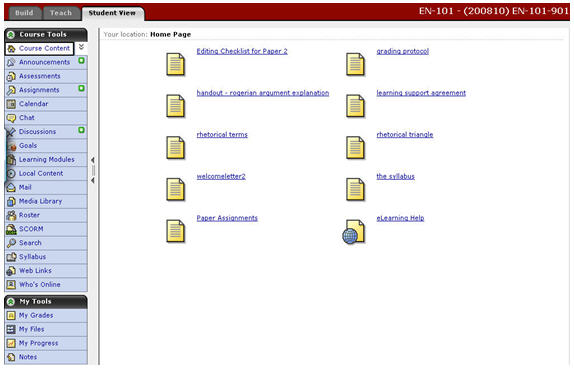

Figures 2 and 3, which show the Course Content page before and after revision, illustrate UCD principles at work. In Figure 2, the Course Tools menu is filled with empty and irrelevant tabs, there are no instructions telling students where to begin when they enter the course, and the links are not logically ordered.

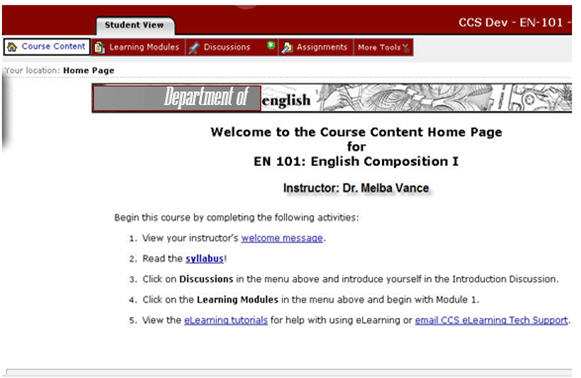

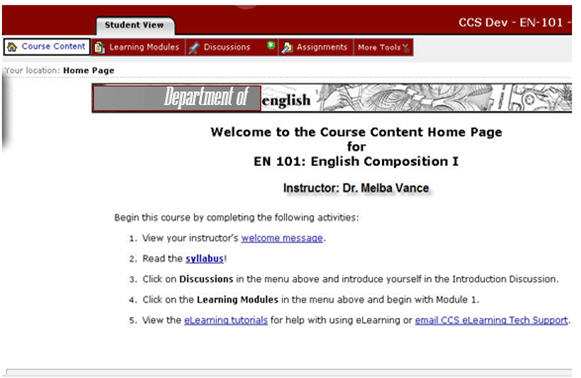

Figure 3 shows the Course Content page at the conclusion of Round 4. The Course Tools menu was relocated to the top of the page, and only essential tabs (i.e., Learning Modules, Discussions, and Assignments) were retained. The numerous links on the original Course Content page were moved into the modules, and the navigation instructions were presented as a numbered list, with critical documents boldfaced and hyperlinked. (The instructor’s name on the homepage is a pseudonym.)

Implications

The results of this study have implications for key stakeholders within the institution, particularly students, faculty, instructional developers, and administrators.

For students, usability testing should be employed as a tool to increase satisfaction and improve course design. Course design is one factor that has been shown to improve satisfaction (Swan, 2001). Moreover, poorly designed courses may cause frustration and anxiety and can hinder learning (Dietz-Uhler et al., 2007; Nitsch, 2003). For faculty, effective course design can engage students who are becoming “increasingly distracted and harder to captivate,” thereby enhancing the teaching and learning process (Hard-Working College, 2005, p. 1). Improving poorly designed courses could also reduce the time faculty spend addressing problems (Ko & Rossen, 2008).

Figure 2. Original Course Content page.

Figure 3. Revised Course Content page at the end of Round 4.

For developers, usability testing provides a candid, firsthand view of students’ experiences and perspectives, which can inform standards and guidelines for improving online course design, increasing productivity, saving time, and ensuring consistency (Barnum, 2002a). Moreover, convincing faculty to follow online course design principles can be challenging, particularly when faculty and administrators may not recognize the differences between traditional and online teaching and learning. Usability testing offers evidence to support developers’ recommendations to faculty and administrators for improving online courses. Finally, for administrators, the cost of not testing can be greater than the cost of testing (Barnum, 2002a; Nielsen, 1993) in terms of retention, accreditation, institutional reputation, and competitiveness (Nitsch, 2003).

This study contributes to the limited research on usability testing as a model for improving online course design. Further research is needed to examine the impact of usability testing on student satisfaction and student retention. Replicating the methodology of this study with other courses, in other disciplines, and with other student populations (e.g., undergraduate, graduate, postgraduate) would also be helpful. Additionally, replicating the methodology on a course deemed exemplary might yield even greater improvements in course design and could provide further indication of the effectiveness of implementing usability testing.

References

Allen, I. E., & Seaman, J. (2007, October). Online nation: Five years of growth in online learning. Retrieved April 5, 2008, from http://www.sloan-c.org/publications/survey/pdf/online_nation.pdf

Badre, A. N. (2002). Shaping web usability: Interaction design in context. Boston, MA: Pearson Education.

Barnum, C. M. (2002a). Usability testing and research. New York: Longman.

Barnum, C. M. (2002b). Tools/templates (.PDF files). Retrieved from the Usability Testing and Research website: http://www.ablongman.com/barnum/

Barnum, C. M. (2007). Show and tell: Usability testing shows us the user experience and tells us what to do about it. Poster session presented at the annual E-Learn 2007: World Conference on E-Learning in Corporate, Government, Healthcare, & Higher Education. Quebec, Canada.

Bevan, N., Barnum, C., Cockton, G., Nielsen, J., Spool, J., & Wixon, D. (2003). The “magic number 5”: Is it enough for web testing? In CHI '03 Extended Abstracts on Human Factors in Computing Systems, 698-699.

Corry, M. D., Frick, T. W., & Hansen, L. (1997). User-centered design and usability testing of a website: An illustrative case study. Educational Technology Research and Development, 45(4), 65-76.

Crowther, M. S., Keller, C. C., & Waddoups, G. L. (2004). Improving the quality and effectiveness of computer-mediated instruction through usability evaluations. British Journal of Educational Technology, 35(3), 289-303.

Dietz-Uhler, B., Fisher, A., & Han, A. (2007). Designing online courses to promote retention. Journal of Educational Technology Systems, 36(1), 105-112. Retrieved April 29, 2008, from the Baywood Journals database (DOI: 10.2190/ET.36.1.g)

Dumas, J. S., & Redish, J. C. (1999). A practical guide to usability testing. Portland, OR: Intellect Books.

Gould, J. D., Boies, S. J., & Lewis, C. (1991, January). Making usable, useful, productivity enhancing computer applications. Communications of the ACM, 34(1), 75-85.

Hard-Working College Students Generate Record Campus Wealth. (2005, August 16). Retrieved March 16, 2008, from http://www.harrisinteractive.com/news/newsletters/clientnews/Alloy_Harris_2005.pdf

Jenkins, L. R. (2004). Designing systems that make sense: What developers say about their communication with users during the usability testing cycle. Unpublished doctoral dissertation, The Ohio State University, Columbus.

Ko, S., & Rossen, S. (2008). Teaching online: A practical guide (2nd ed.). New York: Houghton Mifflin.

Krug, S. (2006). Don’t make me think! A common sense approach to web usability (2nd ed.). Indianapolis, IN: New Riders.

Lewis, J. R. (1994). Sample sizes for usability studies: Additional considerations. Human Factors, 36(2), 368-378.

Long, H., Lage, K., & Cronin, C. (2005). The flight plan of a digital initiatives project, part 2: Usability testing in the context of user-centered design. OCLC Systems and Services, 21(4), 324-345. Retrieved March 20, 2008, from Research Library database.

Nielsen, J. (1993). Usability engineering. San Diego, CA: Academic.

Nielsen, J. (1994). Guerrilla HCI: Using discount usability engineering to penetrate the intimidation barrier. Retrieved March 17, 2008, from the useit.com website: http://www.useit.com/papers/guerrilla_hci.html

Nielsen, J. (1995, May). Usability inspection methods. In I. Katz, R. Mack, & L. Marks (Eds.), Conference Companion on Human Factors in Computing Systems (CHI '95) (pp. 377-378). Denver, CO. New York: ACM. doi:10.1145/223355.223730

Nielsen, J. (1997a, July-August). Something is better than nothing. IEEE Software, 14, 27-28. doi:10.1109/MS.1997.595892

Nielsen, J. (1997b, October). How users read on the web. Retrieved February 26, 2009, from the useit.com website: http://www.useit.com/alertbox/9710a.html

Nielsen, J. (2000). Designing web usability: The practice of simplicity. Indianapolis, IN: New Riders.

Nielsen, J., & Landauer, T. K. (1993, April). A mathematical model of the finding of usability problems. In Proceedings of ACM INTERCHI’93 Conference (pp. 206-213). Amsterdam, the Netherlands: ACM Press.

Nitsch, W. B. (2003). Examination of factors leading to student retention in online graduate education. Retrieved April 30, 2008, from http://www.decadeconsulting.com/decade/papers/StudentRetention.pdf

Palis, R., Davidson, A., & Alvarez-Pousa, O. (2007). Testing the satisfaction aspect of a web application to increase its usability for users with impaired vision. In G. Richards (Ed.), Proceedings of World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education 2007 (pp. 1195-1202). Chesapeake, VA: AACE.

Rosson, M. B., & Carroll, J. M. (2002). Usability engineering: Scenario-based development of human-computer interaction. San Diego, CA: Academic.

Roth, S. (1999). The state of design research. Design Issues, 15(2), 18-26. Retrieved April 29, 2008, from JSTOR database.

Rubin, J. (1994). Handbook of usability testing: How to plan, design, and conduct effective tests. New York: Wiley.

Shneiderman, B. (1998). Designing the user interface: Strategies for effective human-computer interaction (3rd ed.). Reading, MA: Addison-Wesley Longman.

Swan, K. (2001). Virtual interaction: Design factors affecting student satisfaction and perceived learning in asynchronous online courses. Distance Education, 22(2), 306-331. Retrieved April 29, 2008, from ProQuest database. (Document ID: 98666711)

Virzi, R. (1990). Streamlining the design process: Running fewer subjects. Proceedings of the Human Factors Society 34th Annual Meeting (pp. 291-294). Orlando: Human Factors & Ergonomics Society.

Virzi, R. (1992). Refining the test phase of usability evaluation: How many subjects is enough? Human Factors, 34(4), 457-468.

Welcome to Quality Matters. (2006). Retrieved November 1, 2009, from the Quality Matters website: http://www.qualitymatters.org/index.htm

Appendix

Checklists

CHECKLIST FOR THE RESEARCHER

Before each participant comes:

____ Monitor the evaluation team members to confirm they are using their checklists.

____ Greet the test observers.

During each test session:

____ Manage any problems that arise.

____ Observe and take notes, noting real problems and big picture issues.

After each test session:

____ Make sure the computer is set up for the next participant and clear the room of any materials left behind by the participant or facilitator.

____ Collect data from the facilitator and begin analysis (surveys, notes, observation records, etc.).

____ Lead the team in a brief session to catalog results and identify any usability issues discovered during the test.

____ Lock participants’ folders in the cabinet in the researcher’s office.

After each day of testing:

____ Conduct a brief review with the other members of the evaluation team to summarize the test day's findings.

(Adapted from Usability Testing and Research sample Checklist for the Test Administrator with permission from Dr. Carol Barnum).

CHECKLIST FOR THE CAMERA OPERATOR

Before each participant comes:

____ Turn on the equipment.

____ Adjust the cameras to the proper setting for recording.

____ Check the sound both in and out of the observation room.

____ Label the CDs for the session.

During each test session:

____ Synchronize starting times with the facilitator.

____ Run the equipment.

____ Select the picture to record and handle the recording.

____ Adjust the sound as needed.

____ Change the CDs when necessary.

After the participant leaves:

____ Check to make certain the CDs are labeled properly.

____ Give the CDs to the researcher.

(Adapted from Usability Testing and Research sample Checklist for the Camera Operator with permission from Dr. Carol Barnum).

CHECKLIST FOR THE FACILITATOR

Before each participant comes:

____ Make sure the studio is properly set up. Turn on the test equipment.

____ Make sure the documentation is in place, if appropriate.

____ Have a pad and pens or pencils for taking notes.

____ Have an ink pen ready for the participant to use in signing the consent form.

At the beginning of each test session:

____ Greet the participant.

____ Check the participant's name to be sure that this is the person whom you expect.

____ Bring the participant into the studio.

____ Introduce the participant to the other team members and describe roles.

____ Let the participant see the cameras and other equipment.

____ Show the participant where to sit.

____ Have the participant practice the think-aloud protocol.

____ Ask the participant to complete the pre-test survey.

____ Give the participant a brief introduction to the test session.

____ Ask if the participant has any questions.

____ Remind the participant to think out loud.

____ Put the “Testing in Progress” sign on the door.

At the end of each test session:

____ Thank the participant for his or her help.

____ Give the participant the gift card for participating.

____ Show the participant out.

After the participant leaves:

____ Save the Morae recording on the computer and give the consent form to the researcher.

____ Turn off the equipment in the evaluation room.

(Adapted from Usability Testing and Research sample Checklist for the Briefer with permission from Dr. Carol Barnum).

PROTOCOL FOR USABILITY TEST

F = facilitator comments

P = expected participant response

Italics indicate actions, activities, or anticipated responses.

F: Complete the Before the Participant Comes In and Before the Test Session Begins tasks on the facilitator checklist.

F: I would like to thank you on behalf of the team and welcome you to our usability test of an online course. We REALLY appreciate your coming in today to help us out. Your input is going to be very valuable to us as we evaluate the course and see how easy or how difficult you find it to use.

As you can see, we have cameras and observers here in the room with you. We will be looking at the computer screen with one of the cameras, so that as you work we can see what you are clicking on, and where you are going.

Also, we are going to be recording your facial expressions to see if things seem to be going just as you expected, or if you seem surprised at where a link takes you. We will also be able to see if you seem to be getting frustrated with what we've asked you to do, and just how things go for you.

We would like for you to talk out loud during this session. It would help us so much if you could tell us what you are thinking. For example, "I am clicking on this tab because I want to find a link to an assignment". You don't have to speak loudly because this microphone is very sensitive. Just speak in your normal tone of voice.

P: Participant responds

F: OK, so that you can practice thinking out loud, I would like for you to take this stapler and load some staples into it. While you are doing that, if you would, please tell us exactly what you are doing each step of the way. OK? Great.

P: Participant loads stapler while talking out loud.

F: Facilitator praises the participant’s efforts. Camera operator adjusts the microphone if needed. Please remember, we are testing the design of the course. We are not testing you, so don't be nervous. There are no right or wrong answers. We just want to see how things go for you as you work in the course. Does that sound all right?

P: Participant acknowledges.

F: All right, if you will please, just sign our consent form here.

P: Participant signs consent form.

F: All right, great. Now do you have any questions for me?

F: Because we are testing how well the online course is designed, we want to simulate as closely as possible the environment you would be in if you were working on the course alone at home. While we ask that you voice any questions you have for recording purposes, we will not provide assistance, just as if you were at home. Instead, you will need to use whatever help resources the course itself provides. Any questions?

P: Participant responds.

F: When we click the red button to record, a survey will pop up. Complete the survey and click “Done” at the bottom of the form. After you complete the survey, a pop-up window will show the instructions for your first task. Read the instructions for the first task. When you’re ready, click “Start Task.” Once you click “Start Task,” the pop-up window will roll up and hide the instructions. To view the instructions again, click the “Show Instructions” button. When you have completed the task, click the “End Task” button. There will be five tasks in all.

P: Participant responds.

F: Click the red “Record” button.

P: Participant completes the study.

F: Your contribution to the study is now complete. Facilitator gives the participant the gift card offered for participation. Thank you so much for your participation in this study. Do you have any questions?

P: Participant may respond.

F: Facilitator prepares the room for the next participant and repeats this protocol.

(Adapted from Usability Testing and Research sample Script for the Briefer with the Participant with permission from Dr. Carol Barnum).

USABILITY TESTING SCHEDULE

8:15 am Team arrives/prepares

8:30 am Participant 1 arrives

Facilitator gets signature on consent form and goes through protocol with participant

Participant completes test session

10:00 am Participant 2 arrives

Facilitator gets signature on consent form and goes through protocol with participant

Participant completes test session

11:30 am-12:15 pm Lunch

12:30 pm Participant 3 arrives

Facilitator gets signature on consent form and goes through protocol with participant

Participant completes test session

2:00 pm Participant 4 arrives

Facilitator gets signature on consent form and goes through protocol with participant

Participant completes test session

3:30 pm Participant 5 arrives

Facilitator gets signature on consent form and goes through protocol with participant

Participant completes test session

4:45 pm Team meeting to discuss findings

(Adapted from Usability Testing and Research sample Usability Testing Sessions with permission from Dr. Carol Barnum).

PRETEST SURVEY

Please answer the following questions about your computer experience:

1. What is your level of proficiency with personal computers?

2. What kind(s) of programs have you worked with? (check all that apply)

o Word Processing o Spreadsheets o Graphics o Other(s) specify________________________

3. What type of technology equipment do you use? (check all that apply)

o PC o Laptop o Mac o Personal digital assistant (PDA) o Smartphone o Other(s) specify________________________ o None

4. What social networking tools do you use?

o Blogs o Wikis o Email o Texting o MySpace o IM o Other(s) specify________________________ o None

5. Have you ever accessed course materials online? o Yes o No

6. Have you ever taken a fully online course? o Yes o No

If you answered no, please stop here and return the survey to the test facilitator.

7. How many online courses have you successfully completed?

o 1 o 2 o 3 o More than three

8. In which course management system (CMS) was the course delivered?

o Blackboard o WebCT o Desire2Learn o Other(s) specify _________

o The online course was delivered via a website.

9. Which of the following activities have you used in online courses?

o Discussion Board o Live Chat o Assignments o Assessments

o Calendar o Web links o Student Portfolios o Podcasting o Video

o Other(s) specify ______

10. Why did you take an online course instead of a traditional face-to-face course?

Thank you for completing our survey. We greatly appreciate your time.

(Adapted from Usability Testing and Research sample Pre-Test Questionnaire with permission from Dr. Carol Barnum).

TASK 1 SURVEY

Task 1: Begin the Course

- Rate how well you understood how to begin the course

o Very clear o Moderately clear o Neither clear nor confusing o Moderately confusing o Very confusing

- Rate how easy or difficult it was to access the various course components (e.g., syllabus, calendar, discussions, assignments, etc.)

o Very difficult o Moderately difficult o Neither easy nor difficult o Moderately easy o Very easy

- What did you think about the course tools menu?

- What did you think about the Course Content page?

- What was MOST DIFFICULT to find or understand?

- What was EASIEST to find or understand?

- When you were exploring the course, what components did you explore? What were your observations about what you saw?

- Optional: Please add any additional comments you would like to make.

(Adapted from Usability Testing and Research sample Post Test Questionnaire with permission from Dr. Carol Barnum).

TASK 2 SURVEY

Task 2: Complete Assignment 1

1. Rate how easy or difficult it was to access assignments.

o Very difficult o Moderately difficult o Neither easy nor difficult o Moderately easy o Very easy

2. Rate how easy or difficult it was to submit an assignment.

o Very difficult o Moderately difficult o Neither easy nor difficult o Moderately easy o Very easy

3. Were the instructions for submitting an assignment clear (easy to understand)?

o Very clear o Moderately clear o Neither clear nor confusing o Moderately confusing o Very confusing

4. What was MOST DIFFICULT to do or understand? (If you need more room, write on the back of this page.)

5. What was EASIEST to do or understand? (If you need more room, write on the back of this page.)

6. Please add any additional comments. (If you need more room, write on the back of this page.)

(Adapted from Usability Testing and Research sample Post Test Questionnaire with permission from Dr. Carol Barnum).

TASK 3 SURVEY

Task 3: Complete Assignment 2

- Rate how easy or difficult it was to access assignments.

o Very difficult o Moderately difficult o Neither easy nor difficult o Moderately easy o Very easy

- Rate how easy or difficult it was to submit an assignment.

o Very difficult o Moderately difficult o Neither easy nor difficult o Moderately easy o Very easy

- Were the instructions for submitting an assignment clear (easy to understand)?

o Very clear o Moderately clear o Neither clear nor confusing o Moderately confusing o Very confusing

- What was MOST DIFFICULT to do or understand? (If you need more room, write on the back of this page.)

- What was EASIEST to do or understand? (If you need more room, write on the back of this page.)

- Please add any additional comments. (If you need more room, write on the back of this page.)

(Adapted from Usability Testing and Research sample Post Test Questionnaire with permission from Dr. Carol Barnum).

TASK 4 SURVEY

Task 4: Complete Assignment 3

- Rate how easy or difficult it was to access assignments.

o Very difficult o Moderately difficult o Neither easy nor difficult o Moderately easy o Very easy

- Rate how easy or difficult it was to submit an assignment.

o Very difficult o Moderately difficult o Neither easy nor difficult o Moderately easy o Very easy

- Were the instructions for submitting an assignment clear (easy to understand)?

o Very clear o Moderately clear o Neither clear nor confusing o Moderately confusing o Very confusing

- What was MOST DIFFICULT to do or understand? (If you need more room, write on the back of this page.)

- What was EASIEST to do or understand? (If you need more room, write on the back of this page.)

- Please add any additional comments. (If you need more room, write on the back of this page.)

(Adapted from Usability Testing and Research sample Post Test Questionnaire with permission from Dr. Carol Barnum).

TASK 5 SURVEY

Task 5: Complete Assignment 4

- Rate how easy or difficult it was to access assignments.

o Very difficult o Moderately difficult o Neither easy nor difficult o Moderately easy o Very easy

- Rate how easy or difficult it was to submit an assignment.

o Very difficult o Moderately difficult o Neither easy nor difficult o Moderately easy o Very easy

- Were the instructions for submitting an assignment clear (easy to understand)?

o Very clear o Moderately clear o Neither clear nor confusing o Moderately confusing o Very confusing

- What was MOST DIFFICULT to do or understand? (If you need more room, write on the back of this page.)

- What was EASIEST to do or understand? (If you need more room, write on the back of this page.)

- Please add any additional comments. (If you need more room, write on the back of this page.)

(Adapted from Usability Testing and Research sample Post Test Questionnaire with permission from Dr. Carol Barnum).

EXIT SURVEY

- What was your favorite thing about this course?

- What is your least favorite thing about this course?

- What other opinions do you have about the course?

- Would you enroll in this course?

- Would you recommend this course to others?

(Adapted from Usability Testing and Research sample Briefer’s Script Outline with Participant with permission from Dr. Carol Barnum).