Teacher Moderating and Student Engagement in Synchronous Computer Conferences


Shufang Shi

Childhood/Early Childhood Education Department

State University of New York at Cortland

Cortland, NY 13045 USA

Shufang.Shi@cortland.edu


Abstract

The purpose of this study was to develop a deeper understanding of the relationship between teacher moderating levels and student engagement in synchronous online computer conferences. To achieve this understanding, the researcher developed new constructs and measurement methods to measure teacher moderating variables (number of teacher postings and quality of teacher postings) and student engagement variables (behavioral, social-emotional, and intellectual engagement). The researcher investigated the relationships between and among the teacher moderating variables and student engagement variables, with the final goal of identifying the critical factors that influence student intellectual engagement. The data for the study consisted of 44 transcripts of automatically archived online conferences from four groups of students over 11 weeks in a synchronous online course. Quantitative analysis revealed that student intellectual engagement was a function of both students’ participation and the number and quality of teacher postings. The findings of this study contribute to a better understanding of how students can become invested behaviorally, social-emotionally, and intellectually in the collaborative discourse of a community of inquiry through the medium of synchronous computer conferencing.

Keywords: teacher moderating levels, intellectual engagement, social-emotional engagement, behavioral engagement, transcript analysis.

Introduction

As educators, we are all interested in the teacher’s role in a classroom. According to Cazden (2001), the nature of classroom discourse depends greatly on the teacher. We educators are not only interested in whether students are engaged, but also why and how. These issues have been studied and are relatively well understood in face-to-face learning environments. However, opinions on group learning dynamics and the role of online teachers in distance education environments vary greatly (Dennen, 2001; Zhang & Bonk, 2008). Despite the growing emphasis on the importance of teacher moderating and leadership in computer conferencing (Anderson, Rourke, Garrison, & Archer, 2001; Feenberg, 1989), its actual effect on student engagement is not well understood.

The purpose of this study is to examine the relationship between teacher moderating and student engagement within the context of an online college-level course. The course was based entirely on text-based (as opposed to video) synchronous computer-mediated discussion, which is also known as synchronous computer conferencing or synchronous instruction. Synchronous computer conferencing, in this study, refers to real-time instruction and discussion occurring entirely online.

Literature Survey

Synchronous Computer Conferencing

There are two modes of computer-mediated communication in distance learning: asynchronous (any time, any place) and synchronous (real-time, same place or real-time, different places, such as a chat room). Typically, online communication has depended more on asynchronous systems, with synchronous conferencing systems playing a supplementary role in socializing, brainstorming, or virtual office hours in online courses (Brannon & Essex, 2001, as cited in Park & Bonk, 2007a). With the advancement of communication technology, such as software and systems that allow a distributed group of people to communicate and work together, the use of synchronous systems for instruction has rapidly increased and is anticipated to continue increasing (Learning Circuits, 2006). As Park and Bonk said, “the role of synchronous conferencing is not limited to an optional support medium but rather has extended to an instructional tool for various knowledge domains and addressing diverse subject matters” (2007a). There is increasing evidence that synchronous instruction has a positive impact on online students’ learning by supporting the types of elements often found in face-to-face contexts (Lobel, Neubauer, & Swedburg, 2002a, 2002b; Murphy, 2005; Orvis, Wisher, Bonk, & Olson, 2002; Park & Bonk, 2007a; Rogers, Graham, Rasmussen, Campbell, & Ure, 2003; Shi, Mishra, Bonk, & Tan, 2006; Shi & Morrow, 2006; Wang & Chen, 2007). Despite the evidence that synchronous instruction has a positive impact on student learning, our understanding of what type of learning occurs or how students learn in synchronous formats is limited (Park & Bonk, 2007b; Shi, et al., 2006). An examination of synchronous conferencing is needed to provide evidence with regard to the type of learning that is taking place and how students’ knowledge construction occurs (Grant & Cheon, 2007; Romiszowski & Mason, 2004; Wang, 2008).

Teacher Moderating

One of the most difficult tasks online instructors must tackle is providing clear and visible guidance in a virtual environment (Liu, Bonk, Magjuka, Lee, & Su, 2005). In this kind of virtual environment, instructors may function as moderators, facilitators, coaches, consultants, or resource providers who provide intellectual guidance for student learning (Berge, 1995; Bonk, Wisher, & Lee, 2003; Lee, Lee, Magjuka, Liu, & Bonk, 2009; Liu, et al., 2005). Numerous authors have offered analogies for the role of the online instructor. To moderate is to preside or to lead, says Feenberg (1989). Cantor labels the instructor a “helper, guide, change agent, coordinator, and facilitator” (2001, p. 310). This list is expanded by Goodyear, Salmon, and Spector (2001) to include moderator, advisor, counselor, facilitator, assessor, researcher, content facilitator, technician, and designer. Salmon coined the name “e-moderators” to describe instructors who act as “weavers,” pulling together the participants’ contributions (2000, p. 32). Another analogy for the online instructor, provided by Heuer and King (2004), is that of a conductor or leader of a band who brings out the strengths and depths of the music. One of the most comprehensive classifications, first proposed by Berge (1995), then expanded by Ashton, Roberts, and Teles (1999) as well as Bonk, Kirkley, Hara, and Dennen (2001), describes the multiple roles of online instructors in four dimensions: pedagogical, social, managerial, and technical. There is a plethora of literature that addresses the various roles of instructors in distance learning; however, there is a lack of research investigating how much scaffolding is needed to intellectually engage students in an online classroom setting.

Student Engagement

The purpose of teacher moderating is to promote student engagement. All learning requires engagement to attain mastery (Bloom, 1956; Carroll, 1963). In the same sense, online conferencing also requires engagement to achieve ideal educational objectives (Xin, 2002; Shi, et al., 2006), although the divisions and the definitions of the components of student engagement are not the same as the classic taxonomies of learning objectives developed by Bloom (1956), Carroll (1963), or Schwab (1975). As Kaye (1992) has observed, the practical reality of collaboration is that it requires a higher order of involvement, or engagement. In this study, student engagement is defined as a phenomenon that occurs when students become invested behaviorally, social-emotionally, and intellectually in the collaborative discourse of a community of inquiry through the medium of computer conferencing (cf. Christenson & Menzel, 1998; Sanders & Wiseman, 1990). Here, engagement is used synonymously with investment, involvement, or commitment. It is used here not only in the general sense of participants interacting with each other, but also in the sense of engagement with the subject matter in a collaborative discourse (Xin, 2002). By synthesizing a broad literature beginning with Bloom’s educational objectives (1956), Carroll’s model of school-learning (1963), and Schwab’s learning community (1975), and encompassing the more current computer mediated communication field (National Survey of Student Engagement 2003; Scardamalia & Bereiter, 1996; Shneiderman, 1992, 1998), the researcher has developed constructs of student engagement in a collaborative discourse of computer conferencing. The researcher proposes that student engagement consists of three sub-constructs: behavioral, social-emotional, and intellectual engagement, and that these three variables are closely interrelated. The relationship among the three dimensions of student engagement will be tested in this investigation.

Relationship Between Teacher Moderating and Student Engagement in Synchronous Computer Conferencing

The relationship between teacher moderating and student engagement in a synchronous computer conferencing learning environment is complex (Anderson, et al., 2001). It is not surprising that some theorists in this area argue for strong teacher moderating intervention while others believe that self-direction on the part of students is more important. In a meta-analysis of the research about online learning, Tallent-Runnels, Thomas, Lan, and Cooper (2006) linked poor student contributions to deficiencies in instructors’ guidance in synchronous and asynchronous learning environments. Garrison, Anderson, and Archer (2000) indicate that the primary reason for student failure to learn in a computer conferencing learning environment is the absence of teaching presence and appropriate online leadership. However, researchers have identified problems when the instructor exclusively assumes the role of discussion leader (Duemer, et al., 2002). In examining a synchronous group discussion, Duemer et al. observed that authoritative mentors “posed questions, made judgment statements about the responses, and paced the discussion” (2002, p. 4). The authors pointed out that these strategies have a negative impact on learning community formation.

An examination of the methodology used to research asynchronous and synchronous communication reveals that most studies focus on content analysis of online discussion, that is, the archived transcripts of online conferencing (Hara, Bonk, & Angeli, 2000; Henri, 1991; Herring, 1999, 2003; Rourke, Anderson, Garrison, & Archer, 2001; Shi, et al., 2006). In the context of content analysis, a systematic analysis methodology would explore transcripts of the automatically archived online conferencing in order to examine the interaction patterns of group discussion in the learning process (Foreman, 2003; Rourke, et al., 2001). In this area, most existing research is qualitative in nature. A review of the literature also reveals that the limited amount of empirical research on the instructional effectiveness of synchronous communication consists mainly of case studies, survey studies, and other qualitative methods. These qualitative methods facilitated local clarification through observation, description, and interpretation of the features of interactions and the roles of teachers, peers, and tasks (Firestone, 1993; Pellegrino & Goldman, 2002). However, these methods lack consistency, and it is very hard for other researchers to replicate, extend, modify, and possibly refute the findings. There is a lack of research addressing the relational dimensions of computer-mediated communication, or revealing the broad patterns of conferencing activities, and so the complex web of interactions developed between and among teachers and students is missed (Herring, 1999, 2003). As a result, there is a strong need for developing systematic methodologies that can evaluate computer-mediated communication discourse not only qualitatively, but also quantitatively (Rourke & Anderson, 2004; Rourke, et al., 2001; Sher, 2009).

These gaps in the development of systematic methodologies to quantitatively evaluate the relational dimensions of teacher and student interactions will be addressed by this study. This study extends the descriptive results of content analysis to inferential hypothesis testing (Borg & Gall, 1989; Rourke, et al., 2001) and empirically tests the effect of different levels of teacher moderating on different aspects of student engagement: behavioral engagement, social-emotional engagement, and intellectual engagement.

Research Questions

The purpose of the study is to investigate the relationship between and among teacher moderating variables and student engagement variables. Student engagement consists of three different aspects: behavioral engagement, social-emotional engagement, and intellectual engagement. Among these three aspects, what matters the most in a collaborative discourse of a community of inquiry (e.g., through computer conferencing) is whether students are intellectually engaged, that is, whether they reflect deeply on the issues of the prevailing task or subject matter and undergo cognitive change and growth by interacting and debating with each other. Therefore, through the investigation of the relationships between and among the teacher moderating and student engagement variables, the focus of the study is to identify the critical factors that influence student intellectual engagement variables.

The major research question is: what really contributes to student intellectual engagement? The sub-questions are:

- How are student social-emotional engagement variables related to student intellectual engagement variables?

- How are student behavioral engagement variables related to student intellectual engagement variables?

- How are teacher moderating level variables related to student intellectual engagement variables?

Discourse is used instead of discussion because it conveys “the process or power of reasoning,” rather than the more social connotation of conversation (Clark & Schaefer, 1989, p. 265).

Method

Study Context and Participants

The study was conducted within a synchronous, online, three-credit, university-level undergraduate course titled “Interpersonal Communications and Relationships.” The course was delivered in real time to 32 students over a fall semester and consisted of eleven consecutive three-hour weekly sessions, taught from 7:00 to 10:00 p.m. (EST) on Wednesday evenings.

Using HTML-formatted text, images, and an interactive messaging system, the medium “LearningByDoing” created a virtual, real-time classroom (Lobel, et al., 2002a; 2002b). The virtual classroom was accessible from anywhere a student happened to be during class hours. All course activities and interactions took place online. There were no face-to-face meetings between the students and the instructors during the eleven weeks.

The virtual classroom consisted of a main room and four breakout rooms for small group activities and discussions that followed a highly detailed agenda. A principal instructor and three co-facilitators or moderators staffed the classroom. The whole class met in the public main room, where students received the course content for the first part of the session, which usually lasted for about two hours. At the end of this first part, students moved to one of the four breakout rooms to participate in group activities and discussions for a second part lasting approximately one hour. The 32 participants were randomly assigned to discussion groups at the beginning of the semester. A discussion moderator was assigned to each group, and the setup remained the same throughout the semester.

Here is a scenario from one of these virtual classroom sessions. The class first met in the main public room for a lecturette and theory processing and then went to breakout rooms for small group discussion. The lecturette in the main public room was about a theory, Schutz’s Inclusion Needs. The objectives of the small group discussion were to experience and observe how one would negotiate membership in a group, and to discuss strategies for improving interpersonal effectiveness through identifying effective and less effective elements. Major discussion questions were developed by the whole teaching team in their pre-class preparation. These questions were to serve as guidelines for the moderated discussion. For students in each of the four breakout rooms, there were six discussion questions. The first three discussion questions given to students were: 1) What are some of the typical behaviors you use in order to include yourself into a group? 2) What are some of the ways you include others into a group? 3) What behaviors would you like to practice more of and/or less of?

Measurements

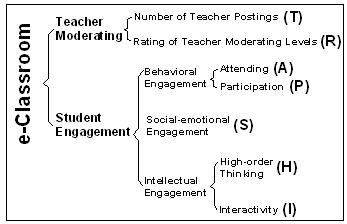

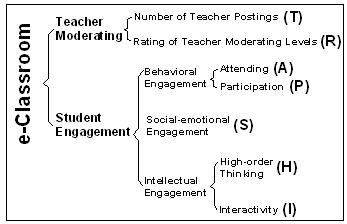

The variables in this study fall into two major categories: teacher moderating levels and student engagement. Each of these categories is further divided into sub-categories, as explained below. Figure 1 provides a schematic view of all the variables.

Figure 1. Variables and Their Subcategories

Teacher moderating variables. Two dimensions are used to measure teacher moderating levels: the number of teacher postings and the ratings of teacher moderating levels. The number of teacher postings is a tally of teacher postings from each transcript. The rating of teacher moderating levels is assessed through a model developed by Xin (2002; Appendix A). The lowest level of moderation in this model (Level 1) consists of the moderator opening discussion; establishing the computer conferencing agenda; and observing conference norms. On the other end of the scale, at Level 5, the moderator strongly weaves and summarizes participants’ ideas, in addition to performing all previous moderating levels or functions.

Student engagement variables. Student engagement is measured by means of three indicators (sub-constructs): behavioral engagement, social-emotional engagement, and intellectual engagement. Based on earlier studies in the literature (Bloom, 1956; Carroll, 1963; Schwab, 1975) and the more recent studies conducted by Lobel, et al. (2002a, 2002b), behavioral engagement in a computer conference is defined in this research as a phenomenon in which participants are attentively participating in the collaborative discourse (Lobel, et al., 2002b). From this framework, there are two aspects of student behavioral engagement: attending and participation. Attending is listening and participation is talking (Lobel, et al., 2002a). Based on Lobel et al. (2002a), attending is quantified as the frequency with which participants actively poll the server (by clicking the send/get button on their screen) for the data generated since their last request. Participation is defined as the state of being related to a larger whole (Lobel, et al., 2002a). Participation is quantified as the number of messages containing a communication sent by participants. While behavioral engagement is vital to the outcomes of online learning environments, social-emotional engagement of learners is also indicative of student involvement in collaborating in a community of critical inquiry. In this research, social-emotional engagement is defined as the phenomenon that occurs when students see themselves as part of a group rather than as individuals, and, therefore, make efforts to build cohesion, acquire a sense of belonging, and render mutual support (Rogers, 1970; Rourke, et al., 1999). The researcher adapted the model used by Rourke et al. (1999) and Garrison, Anderson, and Archer (2000) to measure social-emotional engagement (Appendix B).

Intellectual engagement is defined as the phenomenon in which participants interact and debate not only with each other, but also together or as individuals, reflect deeply on the issues of the prevailing task or subject matter, and undergo cognitive change and growth through engaging in this process (Xin, 2002). Higher order thinking and interactivity are considered key indicators of student intellectual engagement. The coding scheme for higher order thinking is adapted from Xin’s (2002) coding scheme to measure engaged collaborative discourse and Garrison et al.’s (2001) coding scheme to measure cognitive presence (Appendix C). The interactivity measure used for the current study is adapted from Rafaeli and Sudweeks (1997) and Sarlin, Geisler, and Swan (2003) (Appendix D).
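As a rough illustration of how the two count-based measures (number of teacher postings and student participation) might be tallied from a single archived transcript, the sketch below assumes a hypothetical transcript representation: a list of (speaker, role, message) tuples. The actual LearningByDoing archive format is not described in this paper, so the layout, names, and sample postings are invented for illustration only.

```python
from collections import Counter

# Hypothetical transcript rows: (speaker, role, message). The real archive
# format is not given in the study; this layout is an assumption.
transcript = [
    ("Moderator", "teacher", "Welcome. What behaviors help you join a group?"),
    ("Ana", "student", "I usually ask a question first."),
    ("Ben", "student", "I wait until someone invites me in."),
    ("Moderator", "teacher", "Ana, how does that compare with Ben's approach?"),
    ("Ana", "student", "Ben waits, I initiate. Both aim at inclusion."),
    ("Ben", "student", ""),  # empty send: no communication content
]

def tally_postings(rows):
    """Count teacher postings and per-student participation.

    Participation counts only messages that contain a communication,
    so empty messages are skipped.
    """
    teacher_postings = 0
    participation = Counter()
    for speaker, role, message in rows:
        if not message.strip():
            continue
        if role == "teacher":
            teacher_postings += 1
        else:
            participation[speaker] += 1
    return teacher_postings, participation

teacher_count, student_counts = tally_postings(transcript)
print(teacher_count)                 # 2
print(sum(student_counts.values()))  # 3
```

Attending would need a separate log of server polls, which a message transcript alone does not contain.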

Procedure

The primary data sources for this study were 44 (11 weeks x 4 groups) automatically archived conference transcripts. Each archived transcript contained about 350 postings. There were approximately 15,400 (44 x 350) messages to analyze, comprising a far more comprehensive data set than those used in previous studies in the literature.

The researcher conducted participant observation and compiled field notes during each observation. In order to better understand the context within which these discussions worked and to help triangulate research results (Mills, 2007; Patton, 2002), the following additional types of data were collected: 1) field notes taken by the researcher while observing each conference, both in the main room and in the breakout rooms; 2) the 11 archived transcripts of the public main room, which were examined to better understand the context of the discussions in the breakout rooms; and 3) other class materials, including the course syllabus, course readings, and classroom activity agendas. These data were used to help define the context of each conference.

Statistical Analyses

A mixed method approach using a combination of qualitative and quantitative methodologies was used to analyze data. The quantitative methods involved a process of converting communication content into discrete units and calculating the frequency of occurrence of each unit (cf. Rourke, et al., 2001). It also extended the descriptive results of content analysis to inferential hypothesis testing (Borg & Gall, 1989; Rourke et al., 2001) with the intent to verify the relationship between teacher moderating levels and student engagement.

Transcript analyses involved extensive and intensive coding of both latent and overt variables (cf. Rourke, et al., 2001). Inter-rater reliability was addressed by having a second rater code the same transcripts and sections of transcripts, and through discussion with the researcher. This approach produced a reliability rating of 0.72, which meets the cut-off point of 0.70, an acceptable reliability for interpretable and replicable research proposed by Riffe, Lacy, and Fico (1988, as cited in Rourke & Anderson, 2002).
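The study reports a single reliability figure without naming the coefficient behind it. Purely as an illustration, the sketch below computes two common agreement measures for two raters, percent agreement and Cohen's kappa. The category codes are made up, and nothing here implies that either measure is the one that produced the reported 0.72.

```python
from collections import Counter

def percent_agreement(rater_a, rater_b):
    """Share of units that both raters coded identically."""
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    # Chance agreement: product of the two raters' marginal proportions,
    # summed over all categories.
    expected = sum(
        (freq_a[c] / n) * (freq_b[c] / n)
        for c in set(rater_a) | set(rater_b)
    )
    return (observed - expected) / (1 - expected)

# Hypothetical codes for ten message units (H = higher order, L = lower order).
rater_1 = ["H", "H", "L", "L", "H", "L", "H", "L", "L", "H"]
rater_2 = ["H", "L", "L", "L", "H", "L", "H", "H", "L", "H"]

print(percent_agreement(rater_1, rater_2))       # 0.8
print(round(cohens_kappa(rater_1, rater_2), 2))  # 0.6
```

Kappa is lower than raw agreement here because half of the matches would be expected by chance with two equally frequent categories.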

Results and Discussion

The purpose of this study was to investigate factors that were related to student intellectual engagement in a community of inquiry maintained by synchronous computer conferencing. These factors were investigated by examining the relationships among variables using a series of regression analyses, including both linear and non-linear regression.

Student Social-emotional Engagement Variables and Student Intellectual Engagement Variables

To test the relationship between social-emotional engagement and higher order thinking, a simple linear regression analysis was conducted with higher order thinking as the dependent variable. The results suggest that there is insufficient evidence to conclude that social-emotional engagement can exert statistically significant effects on higher order thinking (t = .87, p = .39). To verify this result, further regression models were fitted, and the results show no significant relationship of any tested form (linear, quadratic, or cubic). Regression analyses were also used to investigate the relationship between social-emotional engagement and interactivity, the other variable of student intellectual engagement. The results suggest that there is not enough evidence to conclude that social-emotional engagement can exert statistically significant effects on interactivity (t = .34, p = .73).
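For readers less familiar with the test used here, the following sketch shows how the t statistic for the slope of a simple linear regression is obtained. It uses plain Python and a small made-up data set; the variable names and numbers are illustrative assumptions and are not the study's data.

```python
import math

def simple_regression(x, y):
    """Ordinary least squares for y = b0 + b1 * x.

    Returns slope, intercept, R squared, and the t statistic for the
    slope (tested against zero with n - 2 degrees of freedom).
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    syy = sum((yi - mean_y) ** 2 for yi in y)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Sum of squared residuals around the fitted line.
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    r_squared = 1 - sse / syy
    std_err = math.sqrt(sse / (n - 2) / sxx)
    return slope, intercept, r_squared, slope / std_err

# Made-up scores for six transcripts (illustration only).
social_emotional = [12, 15, 9, 20, 14, 17]
higher_order = [30, 28, 33, 31, 27, 35]

slope, intercept, r2, t = simple_regression(social_emotional, higher_order)
print(round(slope, 3), round(r2, 3), round(t, 2))
```

A t value close to zero, as in the results reported above, means the fitted slope is small relative to its standard error.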

Researchers who investigate online learning frequently argue that social-emotional engagement is an important element that contributes to student intellectual engagement (Garrison & Anderson, 2003; Gunawardena, 1995; Gunawardena & Zittle, 1997; Rourke, et al., 1999). The finding that social-emotional engagement is not significantly related to student intellectual engagement in this study contradicts the popular assumption in online learning literature that stresses the importance of student social-emotional engagement.

Student Behavioral Engagement Variables and Student Intellectual Engagement Variables

To test the relationship between attending and higher order thinking (one of the two variables of student behavioral engagement and student intellectual engagement, respectively; please refer to Figure 1), a simple linear regression analysis was conducted with higher order thinking as the dependent variable. The results suggest that there is insufficient evidence to conclude that attending can exert statistically significant effects on higher order thinking (t = 1.88, p = .067). Attending accounts for only 7.8% of the variance in higher order thinking.

A quadratic curvilinear regression model was then employed to fit the data to further investigate the relationship between attending and higher order thinking. Surprisingly, adding the quadratic term caused a substantial increase of R² from .078 to .15 (F(1, 41) = 3.62, *p < .036). It can be concluded that attending has a quadratic relationship with higher order thinking. This is an interesting discovery and its implications will be discussed in the following sections.
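The fit statistics in this paragraph can be cross-checked with a short calculation. The sketch below assumes that the reported F is the overall test of the quadratic model (two predictors, attending and attending squared) fitted to the 44 transcripts, which would give 2 and 41 degrees of freedom. That reading is an assumption and differs from the degrees of freedom printed in the text. Under it, an R² of .15 reproduces both the reported F of 3.62 and a p value of about .036.

```python
def overall_f(r_squared, n_obs, n_predictors):
    """Overall F statistic of a regression model, computed from R squared."""
    df_model = n_predictors
    df_resid = n_obs - n_predictors - 1
    f_stat = (r_squared / df_model) / ((1 - r_squared) / df_resid)
    return f_stat, df_model, df_resid

def p_value_two_numerator_df(f_stat, df_resid):
    """Upper-tail p value of an F statistic with exactly 2 numerator df.

    For 2 numerator degrees of freedom the F survival function has the
    closed form (1 + 2F / df_resid) ** (-df_resid / 2).
    """
    return (1 + 2 * f_stat / df_resid) ** (-df_resid / 2)

# Assumed inputs: R squared from the text, one observation per transcript.
f_stat, df1, df2 = overall_f(r_squared=0.15, n_obs=44, n_predictors=2)
p = p_value_two_numerator_df(f_stat, df2)
print(df1, df2, round(f_stat, 2), round(p, 3))  # 2 41 3.62 0.036
```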

Regression analyses were applied to investigate the relationship between attending and interactivity, the other variable of student intellectual engagement. The results suggest that there is not enough evidence to conclude that attending can exert statistically significant effects on interactivity (t = 1.70, p = .97).

Regression analyses showed that the relationship between participation and higher order thinking was linear (R² = .367, t = 4.93, *p ≤ .05) and that the relationship between participation and interactivity was also linear (R² = .472, t = 6.13, *p ≤ .05).
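In a one-predictor regression, the slope's t statistic and R² are tied together by t = sqrt(R²(n - 2) / (1 - R²)). The sketch below applies this identity, assuming that each of the 44 transcripts is one observation (an assumption based on the size of the data set), and reproduces the reported t values from the reported R² values.

```python
import math

def t_from_r_squared(r_squared, n_obs):
    """t statistic of the slope in a one-predictor regression, from R squared."""
    return math.sqrt(r_squared * (n_obs - 2) / (1 - r_squared))

N_TRANSCRIPTS = 44  # assumed unit of analysis: one observation per transcript

print(round(t_from_r_squared(0.367, N_TRANSCRIPTS), 2))  # 4.93 (higher order thinking)
print(round(t_from_r_squared(0.472, N_TRANSCRIPTS), 2))  # 6.13 (interactivity)
```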

These linear and quadratic relationships provide a strong reason to postulate that there may be an optimal level of attending and participation in relation to intellectual engagement. This is a very interesting postulation and will be elaborated on below.
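The idea of an optimal level follows from the form of a quadratic model. As a sketch, let x stand for attending, y for higher order thinking, and b0, b1, b2 for the fitted coefficients (symbols introduced here for illustration; the fitted values are not reported in this paper):

```latex
\hat{y} = b_0 + b_1 x + b_2 x^2,
\qquad
\frac{d\hat{y}}{dx} = b_1 + 2 b_2 x = 0
\;\Longrightarrow\;
x^{*} = -\frac{b_1}{2 b_2}
```

When b2 is negative the curve is an inverted U, and x* is the attending level at which predicted higher order thinking peaks.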

First, there is a notable relationship between participation and intellectual engagement. Regression analyses show that the higher the participation, the higher the higher order thinking and the interactivity, that is, the higher the level of intellectual engagement. Second, the quadratic relationship between attending and intellectual engagement is of interest. Nonlinear regression results showed that if attending was too low, which meant that students did not listen or poll the server (or scroll the bar) (Lobel, et al., 2002a) or failed to pay attention, then students’ intellectual engagement would also be low. If attending was too high, which meant that students only listened without talking, then there would be low participation. As a result, there would be low intellectual engagement because participation has a significant linear relationship with higher order thinking and interactivity, the two variables of student intellectual engagement. Across the 44 discussion transcripts, the minimum attending of a group (of eight people) in a normalized time period of 90 minutes was 385 times, and the maximum was 2,743 times. This maximum attending meant that each individual listened or polled the server once every 16 seconds. With that high frequency of attending, one would not have much time to participate or talk in the group discussion. The nonlinear regression results indicate that the optimal level of attending (in relation to higher order thinking) for a group in a time period of 90 minutes is 1,750 times. Stated another way, the optimal level of attending in a synchronous online learning session entails that an individual listens or polls the server (or scrolls the bar) every 25 seconds, or two to three times every minute. If students listen more than that, they are not contributing to the conversation and, consequently, have lower intellectual engagement levels.
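The per-person polling intervals quoted above follow from simple arithmetic on the group totals. The sketch below reproduces them, taking the group size (eight) and the normalized period (90 minutes) from the text; the function name is illustrative.

```python
def seconds_between_polls(group_polls, group_size=8, minutes=90):
    """Average number of seconds between server polls for one group member."""
    polls_per_person = group_polls / group_size
    return minutes * 60 / polls_per_person

print(round(seconds_between_polls(2743)))          # 16 (maximum observed attending)
print(round(seconds_between_polls(1750)))          # 25 (estimated optimal attending)
print(round(60 / seconds_between_polls(1750), 1))  # 2.4 polls per minute
```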

It is necessary to point out that the purpose of doing the above quantitative analyses is more of an exploration of a research direction than of providing specific statistics. The purpose is to measure some variables and to quantify relationships between/among these variables. Within the limitations of this study, some of the R squared values are not big enough to draw reliable conclusions about relationships between variables.

Teacher Moderating Level Variables and Student Intellectual Engagement Variables

As explained in the above sections, the statistical results show that two of the student engagement variables -- behavioral engagement and intellectual engagement -- are related. There is not enough evidence to suggest that student social-emotional engagement is related to student intellectual engagement. Therefore, to answer the question of how teacher moderating levels are related to student intellectual engagement, it is legitimate to investigate how teacher moderating levels are related to the other two student engagement variables, social-emotional engagement and behavioral engagement.

Regression analyses were applied to investigate the relationship between teacher moderating levels (number of teacher postings and rating of teacher postings) and student social-emotional engagement. The results suggest that there is not enough evidence to conclude that the number of teacher postings (t = .25, p = .81) or the rating of teacher postings (t = .01, p = .99) can exert statistically significant effects on student social-emotional engagement.

Regression analyses were also applied to investigate the relationship between teacher moderating levels and student behavioral engagement. The investigation showed how the quantity (number) and quality (rating) of teacher postings respectively and collectively influenced student attending and participation. The results revealed that the number of teacher postings exerted a significant effect on both attending (t = 2.96, *p ≤ .05) and participation (t = 4.72, *p ≤ .05). The results also revealed that there was not enough evidence to conclude that the rating of teacher moderating levels can exert a significant effect on attending (t = .33, p = .72) or participation (t = .65, p = .54). In other words, in terms of student behavioral engagement, the quantity of teacher postings mattered, but surprisingly, the quality of teacher postings did not. While investigating the quantity of teacher postings in a similar context, Lobel, et al. (2002b) found that the instructor did not need to interact with the students much more than one post per minute to facilitate a discussion that exhibited high rates of individual participation.

It is worthwhile to note that there is an interesting relationship between the number of teacher postings and student intellectual engagement. Regression analyses showed that the number of teacher postings had a linear relationship with both higher order thinking (R² = .330, t = 4.55, *p ≤ .05) and interactivity (R² = .340, t = 4.65, *p ≤ .05). A higher number of teacher postings might have produced a stronger sense of teaching presence (Rourke, et al., 2001), and, as a result, student intellectual engagement might have increased.

How did the rating of teacher moderating level relate to student intellectual engagement? Regression analyses showed that the rating of teacher moderating levels had a linear relationship with both higher order thinking (R² = .176, t = 2.99, *p ≤ .05) and interactivity (R² = .141, t = 2.63, *p ≤ .05).

Within the limitations of the study, this finding confirms prevailing perspectives in the literature on the importance of teacher moderation, although few previous studies have reported supporting evidence through hypothesis testing as the present study did. In fact, most analyses available in the synchronous (as well as asynchronous) conferencing literature that attempt to relate rated teacher moderating levels to student intellectual engagement employed theoretical postulations rather than hypothesis testing. This study showed that highly rated teacher moderating levels (or better quality of moderating postings) resulted in better direction of student discussion and higher intellectual engagement.

To summarize, this section investigated how student intellectual engagement variables were related to student social-emotional engagement, student behavioral engagement variables, and teacher moderating level variables. To better answer these questions, the relationships between teacher moderating levels and the other two student engagement variables (social-emotional engagement and behavioral engagement) were also investigated. Within the limitations of the study, statistical results showed that neither the number of teacher postings nor the quality of teacher moderating levels influenced student social-emotional engagement, a component of online learning that had previously been theorized as very important to intellectual achievement. Statistical results also showed that the number of teacher postings had a significant effect on student behavioral engagement, while the quality of teacher moderating levels did not. Student participation had a significant effect on student intellectual engagement, but neither student attending nor student social-emotional engagement did. Finally, analyses showed that both the number of teacher postings and the quality of teacher moderating levels had a significant effect on student intellectual engagement.

Conclusions, Limitations and Suggestions

The study of the relationship between teacher moderating and student engagement is situated in a specific context. This context becomes the frame within which to understand the specifics and generalities of the conclusions. The interpretation of the results is limited to this context.

Within the limitations of its context, the study revealed that the quantity (number) of teacher postings, quality (rating) of teacher moderating levels, and student participation influenced student intellectual engagement. The more actively teacher moderators posted in a synchronous online learning conference, combined with higher quality of postings, the more active the student participation, and, consequently, the more elevated the levels of higher order thinking and interactivity.

Therefore, teachers’ goals should not merely be to have social-emotionally engaged students, but rather also to have students attend to each other’s thoughts and ideas and actively participate as a group. Rather than simply trying to create a safe or comfortable environment, teachers who try to get students listening and responding to each other will be rewarded with higher intellectual engagement. In contrast to what Cazden (2001) found in the teacher-student I-R-E (Initiation-Response-Evaluation) classroom discourse model, these data showed that intellectual engagement was brought about by effective teacher moderating, not simple initiation-response discussion. Thus, to ensure that students have higher intellectual engagement, teachers need to facilitate a dialogue that engages the whole group.

This study provides only one look at online synchronous moderation. Its purpose is to provide a starting point for future empirical studies. Understanding the nuances of synchronous online conferencing requires that research consider every aspect of online collaborative learning at the same time. In view of these facts, some suggestions and recommendations are relevant.

The online conferences examined in this study were structured conferences moderated by instructors. The course had its own unique subject matter, tasks, and structure. Differences in any of these aspects might generate different needs for moderation. According to Zhang and Ge (2006), different tasks may generate different needs for moderation and the effects of the same approach on other types of tasks may vary. Investigating the relative effects of the moderating approaches on online collaboration in other content areas or other disciplines could add to the understanding of the field of teacher moderating within synchronous conferencing.

This study is a research project based on quasi-experimental data. The assignment of group membership and moderators used only some randomization. A truly random setup would involve controlled or pre-selected moderating conditions that students were assigned to. Future studies might attempt to control teacher moderating levels to examine the effects of moderating on student engagement. Future studies might also observe students as they progress through a second or third course with this tool, i.e., conducting a longitudinal study. Data such as surveys, interviews, focus groups, and course products would help to build a better understanding of the issues and problems of synchronous online learning.

This study used only a single type of technology, a synchronous conferencing tool, with its own features, options, and drawbacks. The enormous variety of different tools available may provide different learning environments with vastly different affordances and constraints.

The study was based on one level of technology application. It occurred totally online without any face-to-face meetings. Future studies may investigate discussions in varied online settings; for instance, synchronous, asynchronous, and blended environments. Studies of blended learning may add to the understanding of synchronous learning and online teaching and learning in general.

The researcher made significant efforts to adapt and develop important constructs such as social-emotional engagement, higher order thinking, and interactivity. The researcher also extended descriptive analysis to inferential hypothesis testing and elucidated rationale, methods, and formulae for such testing. These methods and formulae might be deemed pioneering in the synchronous conferencing research arena, while also serving as a springboard for further research in this area.

This study linked both the processes and the educational objectives of computer conferencing to student behavioral engagement, social-emotional engagement, and intellectual engagement. As such, it fills a significant gap in the synchronous conferencing literature. Eventually, research in this area can extend to online training programs and curricula. The results of the study may help researchers and practitioners develop better protocols for moderating online discussions. Such knowledge is essential if online learning (particularly synchronous conferencing) is to achieve its full potential.

Acknowledgements

Judith Ouellette, Robert Rhodes, Punya Mishra, Curtis Bonk, Mia Lobel, Michael Neubauer, Susan Florio-Ruane, Blaine Morrow, Chris Wheel, Sophia Tan, Wei He, and Michael Guo contributed to the completion of this project.

|

References

Anderson, T., Rourke, L., Garrison, D. R., & Archer, W. (2001). Assessing teaching presence in a computer conferencing context. Journal of Asynchronous Learning Networks, 5 (2).

Ashton, S., Roberts, T., & Teles, L. (1999). Investigating the role of the instructor in collaborative online environments. Poster session presented at the CSCL '99 Conference, Stanford University, CA.

Berge, Z. (1995). Facilitating computer conferencing: Recommendations from the field. Educational Technology, 35, 22-30.

Bloom, B. S. (Ed.) (1956). Taxonomy of educational objectives: The classification of educational goals. Handbook I: Cognitive domain. New York; Toronto: Longmans, Green.

Bonk, C. J., Kirkley, J. R., Hara, N., & Dennen, N. (2001). Finding the instructor in post-secondary online learning: Pedagogical, social, managerial, and technological locations. In J. Stephenson (Ed.). Teaching and learning online: Pedagogies for new technologies (pp. 76-97). London: Kogan Page.

Bonk, C. J., Wisher, R. A., & Lee, J. (2003). Moderating learner-centered e-learning: Problems and solutions, benefits and implications. In T. S. Roberts (Ed.). Online collaborative learning: Theory and practice (pp. 54-85). Idea Group Publishing.

Borg, W. & Gall, M. (1989). The methods and tools of observational research. In W. Borg & M. Gall (Eds.), Educational research: An introduction (5th ed., pp. 473-530). London: Longman.

Brannon, R., & Essex, C. (2001). Synchronous and asynchronous communication tools in distance education. Tech Trends, 45 (1), 36-42

Cantor, J. A. (2001). Delivering instruction to adult learners (Rev. ed.). Toronto, Ontario, Canada: Wall & Emerson.

Carroll, J. (1963). A model of school learning. Teachers College Record, 64, 723-733.

Cazden, C. B. (2001). Classroom discourse: The language of teaching and learning. Portsmouth, NH: Heinemann.

Christenson, L. & Menzel, K. (1998). The linear relationship between student reports of teacher immediacy behaviors and perceptions of state motivation, and of cognitive, affective, and behavioral learning. Communication education, 47, 82-90.

Clark, H., & Schaefer, E. F. (1989). Contributing to discourse. Cognitive Science, 13, 259-294.

Dennen, V. P. (2001). The design and facilitation of asynchronous discussion activities in Web-based courses. Unpublished doctoral dissertation, Indiana University, Bloomington, IN.

Duemer, L., Fontenot, D., Gumfory, K., Kallus, M., Larsen, J., Schafer, S., et al. (2002). The use of synchronous discussion groups to enhance community formation and professional identity development. The Journal of Interactive Online Learning, 1 (2).

Feenberg, A. (1989). A user's guide to the pragmatics of computer mediated communication. Semiotica, 75 (3/4), 257-278.

Firestone, W. A. (1993). Alternative arguments for generalizing from data as applied to qualitative research. Educational Researcher, 22(4), 16-23.

Foreman, (2003, July/August), Distance learning and synchronous interaction, The technology source.

Goodyear, P., Salmon, G., & Spector, J. M. (2001). Competences for online teaching: A special report. Educational Technology Research and Development, 49, 65-72.

Garrison, D. R., & Anderson, T. (2003). E-learning in the 21st century: A framework for research and practice. London: RoutledgeFalmer.

Garrison, D. R., Anderson, T., & Archer, W. (2000). Critical inquiry in a text-based environment: Computer conferencing in higher education. The Internet and Higher Education, 2 (2-3), 1-19.

Garrison, D.R., Anderson, T., & Archer, W. (2001) Critical thinking, cognitive presence, and computer conferencing in distance education. American Journal of Distance Education, 15 (1), 7-23.

Grant, M. & Cheon, J. (Winter 2007). The Value of Using Synchronous Conferencing for Instruction and Students. Journal of Interactive Online Learning, 2009, 8 (2).

Gunawardena, C. N. (1995). Social presence theory and implications for interaction and collaborative learning in computer conferences. International Journal of Educational Telecommunications, 1 (2/3), 147-166.

Gunawardena, C. N., Lowe, C. A. & Anderson, T. (1997). Analysis of a global online debate and the development of an interaction analysis model for examining social construction of knowledge in computer conferencing. Journal of Educational Computing Research 17 (4), 397-431.

Gunawardena, C. N., & Zittle, F. (1997). Social presence as a predictor of satisfaction within a computer mediated conferencing environment. American Journal of Distance Education, 11 (3), 8-25.

Hara, N., Bonk, C. J., & Angeli, C. (2002). Content analysis of online discussion in an applied educational psychology course. Instructional Science, 28, 115-152.

Heuer, B. P., and King, K. (2004). Leading the Band: the Role of the Instructor in Online Learning for Educators. Journal of Interactive Learning Online 3 (1).

Henri, F. (1991). Computer conferencing and content analysis. In A. Kaye (Ed.), Collaborative learning through computer conferencing. Vol. 90, pp. 117-136. Berlin: Springer-Verlag.

Herring, S. (1999) Interactional Coherence in CMC, Journal of Computer-Mediated Communication 4 (4).

Herring, S. C. (2003). Computer-Mediated Discourse Analysis: An Approach to Researching Online Behavior. In S.A. Barab, R. Kling, & J.H. Gray, (Eds.). Designing for Virtual Communities in the Service of Learning. New York: Cambridge University Press.

Kaye, A. (1992). Learning together apart. In Kaye AR (Ed.): Collaborative Learning Through Computer Conferencing. NATO ASI Series. Vol 90. Berlin: Springer-Verlag, pp 1-24.

Learning Circuits, in conjunction with, E-Learning News, conducted the survey in January and February 2006. Results are based on 145 responses.

Lee, S., Lee, J., Magjuka, R., Liu, X., & Bonk, C. J. (2009 September). The challenge of online case-based learning: An examination of an MBA program. Journal of Educational Technology and Society, 12 (3), 178-190.

Liu, X., Bonk, C. J., Magjuka, R. J., Lee, S., & Su, B. (2005). Exploring four dimensions of online instructor roles: A program level case study. Journal of Asynchronous Learning Networks, 9 (4), 29-48.

Lobel, M., Neubauer, M. & Swedburg, R. (2002a). The eClassroom used as a Teacher's Training Laboratory to Measure the Impact of Group Facilitation on Attending, Participation, Interaction, and Involvement. International Review of Research in Open and Distance Learning.

Lobel, M., Neubauer, M. & Swedburg, R. (2002b). Elements of Group Interaction in a Real-Time Synchronous Online Learning-By-Doing Classroom Without F2F Participation, Journal of the United States Distance Learning Association, 16 (2).

Mills, G. E. (2007). Action Research: A Guide for the Teacher Researcher, 3rd Edition. Upper Saddle, NJ: Pearson, Merrill, Prentice-Hall.

Murphy, E. (2005). Issues in the Technology, 36 (3), 525-536.

National Survey of Student Engagement. (2003). The College Student Report.

Orvis, K. L., Wisher, R. A., Bonk, C. J., & Olson, T. (2002). Communication patterns during synchronous Web-based military training in problem solving. Computers in Human Behavior, 18 (6), 783-795.

Park, Y. J. & Bonk, C. J. (2007a). Synchronous learning experiences: Distance and residential learners perspectives in a blended graduate course. The Journal of Interactive Online Learning. 6 (3).

Park, Y. J. & Bonk, C. J. (2007b). Is online life a Breeze? Promoting a synchronous peer critique in a blended graduate course. Journal of Online Learning and Teaching, 3 (3).

Pellegrino, J. W. and Goldman, S. R. (2002) Be Careful What You Wish For - You May Get It: Educational Research in the Spotlight. Educational Researcher. 31 (8), 15-17.

Rafaeli, S. and Sudweeks, F. (1997). Networked Interactivity, Special Issue, Journal of Computer Mediated Communication, 2 (4).

Riffe, D., Lacy, S., & Fico, F. (1998). Analyzing media messages: Using quantitative content analysis in research. Mahwah, NJ: Lawrence Erlbaum.

Romiszowski, A., & Mason, R. (2004). Computer-mediated communication. In D. H. Jonassen (Ed.), Handbook of research for educational communications and technology (2nd ed., pp. 397-431). New York: Simon & Schuster Macmillan.

Rogers, C. (1970). Encounter Groups. London: Allen Lane, the Penguin Press.

Rogers, P. C., Graham, C. R., Rasmussen, R., Campbell, J. O., & Ure, D. M. (2003). Blending face to face and distance learners in a synchronous class: instructor and learner experiences. The Quarterly Review of Distance Education, 4 (3), 245-251.

Rourke, L., & Anderson, T. (2002). Exploring social communication in computer conferencing. Journal of Interactive Learning Research, 13 (3), 259-275.

Rourke, L., & Anderson, T. (2004). Validity in quantitative content analysis. Educational Technology Research and Development, 52(1), 5-18.

Rourke, L., Anderson, T., Garrison, D. R., & Archer, W. (1999). Assessing social presence in asynchronous, text-based computer conferencing. Journal of Distance Education, 14 (3), 51-70.

Rourke, L., Anderson, T., Garrison, D. R., & Archer, W. (2001). Methodological issues in the content analysis of computer conference transcripts. Journal of Artificial Intelligence in Education, 12.

Salmon, G. (2000). E-moderating: The key to teaching and learning online. London: Kogan Page.

Sanders, J. & Wiseman. R (1990). The effect of verbal and nonverbal teacher immediacy on perceived cognitive, emotional, and behavioral learning in the multicultural classroom. Communication education, 39, 341-353.

Sarlin, D., Deisler, C., & Swan, K. (2003). Responsible selection: synchronous or asynchronous communication tools and patterns of engagement in online courses. Chicago, IL: Paper presented at the Annual Conference of the American Educational Research Association

Scardamalia, M., & Bereiter, C. (1996). Computer support for knowledge-building communities. In T. Koschmann (Ed.), CSCL: Theory and practice of an emerging paradigm. Mahwah, NJ: Lawrence Erlbaum.

Schwab, J. J. (1975). On learning community: Education and the state. The Center Magazine, 8 (3), 30-44.

Sher, A. (Summer 2009). Assessing the relationship of student instructor and student-student interaction to student learning and satisfaction in web-based online learning environment. Journal of Interactive Online Learning, 2009, 8 (2).

Shi, S., Bonk, C. J., Tan, S. & Mishra, P. (May, 2008). Getting in sync with synchronous: The dynamics of synchronous facilitation in online discussions. International Journal of Instructional Technology and Distance Learning, 5 (5), 3-27.

Shi, S., Mishra, P., Bonk, C.J., Tan, S., & Zhao Y. (2006). Thread theory: A framework applied to content analysis of synchronous computer mediated communication data. International Journal of Instructional Technology & Distance learning. 3 (3).

Shi, S., & Morrow, B. V. (2006). Econferencing for instruction: what works? Educause Quarterly. 29 (4).

Shneiderman, B. (1992) Education by engagement and construction: a strategic education initiative for a multimedia renewal of American education, In Ed Barrett (Ed), The social creation of knowledge: Multimedia and information technologies in the university. MIT Press, Cambridge, MA.

Shneiderman, B. (1998) Relate-Create-Donate: a teaching/learning philosophy for the cyber-generation. Computers & Education, 31, 25-39.

Tallent-Runnels, M. K., Thomas, J. A., Lan, W. Y., Cooper, S., Ahern, T. C., Shaw, S. M., et al. (Spring 2006). Teaching courses online: A review of the research. Review of Educational Research, 76 (1), 93-135.

Wang, S. (2008). The Effects of a synchronous communication tool (yahoo messenger) on online learners’ sense of community and their multimedia authoring skills. Journal of Interactive Online Learning, 7 (1), 59-72.

Wang, Y., & Chen, N. (2007). Online synchronous language learning: SLMS over the Internet. Innovate, 3 (3).

Xin, M. (2002). Validity centered design for the domain of engaged collaborative discourse in computer conferencing. Unpublished doctoral dissertation, Brigham Young University.

Zhang, K., & Bonk, C. J. (2008, spring). Addressing diverse learner preferences and intelligences with emerging technologies: Matching models to online opportunities. Canadian Journal of Learning and Technology (CJLT), 34 (2).

Zhang, K., & Ge, X. (2006). Dynamic contexts of online collaborative learning. In A. D. de Figueiredo & A. P. Afonso (Eds.), Managing learning in virtual settings: the role of the context (pp. 97-115). Idea Group, Inc.

|

Appendix A. Rubric for Measuring Teacher Moderating Levels

| Moderating Level | Functions |
|---|---|
| Level 1 | Open discussion; set conference norms; and observe conference norms |
| Level 2 | Respond to prompts; acknowledge other contributions with simple confirmation; solicit contribution without providing context and related materials; refer to materials without explanation; and/or make easy meta comments; in addition to performing Level 1 functions |
| Level 3 | Recognize other contributions with elaboration; solicit feedback while providing context and related materials; refer to materials with explanation; raise new relevant topics; and/or perform significant meta commenting; in addition to performing Level 2 functions |
| Level 4 | Weave in light forms; assess; and/or delegate; in addition to performing Level 3 functions |
| Level 5 | Weave in strong forms; in addition to performing Level 4 functions |

(Adapted from Xin, 2002)

Appendix B. Rubric for Measuring Social-emotional Engagement

| Category | Indicators | Definition |
|---|---|---|
| Warmth | Expression of emotions | Conventional or unconventional expressions of emotion, including repetitious punctuation, conspicuous capitalization, and emoticons |
| | Use of humor | Teasing, cajoling, irony, understatements, sarcasm |
| | Showing personal care | Expressing care for an individual's feelings and aspects of life outside of class |
| | Expression of compliment, appreciation, encouragement, and agreement | Complimenting others or the contents of others' messages; showing appreciation, encouragement, and agreement |
| | Self-disclosure | Presenting details of life outside of class, or expressing vulnerability |
| Cohesion | Vocatives | Addressing or referring to participants by name |
| | Addressing or referring to the group using inclusive pronouns | Addressing the group as "we", "us", "our", and "group" |
| | Phatics, salutations | Communication that serves a purely social function; greetings, closures |
| Interactive (SE-Interactive) | Continuing a thread | Continuing with or replying to one or more previous messages rather than starting a new thread |

(Adapted from Rourke et al., 1999 and Garrison et al., 2000)

Appendix C. Rubric for Measuring Higher Order Thinking

| Phase | Descriptor | Indicators | Socio-cognitive process |
|---|---|---|---|
| Phase I: Problem Initiation and Brainstorming | Initiation | Sense of puzzlement | Asking questions; messages that take discussion in a new direction |
| | | Recognizing the problem | Presenting background information that culminates in a question |
| Phase II: Problem Investigation and Meaning Co-construction | Negotiation | Divergence within the online community | Unsubstantiated contradiction of previous ideas |
| | | Information exchange | Personal narratives, descriptions, or facts (not used as evidence to support a conclusion) |
| | | Suggestions for consideration | Author explicitly characterizes message as exploration, e.g., "Does that seem about right?" "Am I way off the mark?" |
| | | Brainstorming | Adds to established points but does not systematically defend, justify, or develop the addition |
| | | Leaps to conclusion | Offers unsupported opinions |
| | | Divergence within a single message | Many different ideas or themes presented in one message |
| Phase III: Problem Solving and Idea Integration | Integration | Convergence among group members | Reference to previous message followed by sustained agreement, e.g., "I agree because…" |
| | | Convergence within a single message | Justified, developed, defensible, yet tentative hypotheses |
| | | Connecting ideas, synthesis | Integrating information from various sources: textbook, articles, personal experience |
| | | Creating solutions | Explicit characterization of message as a solution by participant |

(Adapted from Xin, 2002 and Garrison et al., 2001)

Appendix D. Rubric for Measuring Interactivity

| Category | Descriptors |
|---|---|
| Initiation with a question | A question that is unrelated to a prior post |
| Declarative | An initiation or a new idea; a new line of thought |
| Reactive | A response by one poster to a declarative message of another poster |
| Interactive | Any additional follow-up to an interactive message |

(Adapted from Rafaeli & Sudweeks, 1997 and Sarlin et al., 2003).
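The interactivity categories above could be applied to a threaded transcript roughly as follows. This is a hedged sketch, not the coding procedure used in the study: it assumes each post records which earlier post it replies to, treats a reply to any thread-opening post (question or declarative) as reactive, and treats a reply to a reactive or interactive post as interactive. The `Post` record, its fields, and `classify_posts` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Post:
    post_id: int
    reply_to: Optional[int] = None  # None: the post starts a new thread
    is_question: bool = False       # only consulted for thread-opening posts


INITIATIONS = ("initiation-question", "declarative")


def classify_posts(posts: List[Post]) -> Dict[int, str]:
    """Label posts (given in chronological order) with an interactivity category."""
    labels: Dict[int, str] = {}
    for post in posts:
        if post.reply_to is None:
            # A new line of thought: either a question or a declarative.
            labels[post.post_id] = (
                "initiation-question" if post.is_question else "declarative"
            )
        elif labels[post.reply_to] in INITIATIONS:
            # Assumption: a direct response to a thread opener is reactive.
            labels[post.post_id] = "reactive"
        else:
            # Assumption: any further follow-up in the thread is interactive.
            labels[post.post_id] = "interactive"
    return labels


transcript = [
    Post(1, is_question=True),
    Post(2, reply_to=1),
    Post(3, reply_to=2),
    Post(4),
    Post(5, reply_to=3),
]
labels = classify_posts(transcript)
```

In practice, human coders judge which earlier message a post responds to; the sketch only shows how the category follows once that link is known.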

|