Student Exam Participation and Performances in a Web-Enhanced Traditional and Hybrid Allied Health Biology Course


Abass S. Abdullahi

Assistant Professor

Biology and Med. Lab. Tech Dept

Bronx Community College of City University of New York

Bronx, NY 10453 USA

and

Chemistry Department

City College of City University of New York

New York, NY 10031 USA

Abass.Abdullahi@bcc.cuny.edu

Abstract

This study compares student exam participation, performance and withdrawal rates in web-enhanced traditional and hybrid Anatomy and Physiology II classes. Although hybrid students had the convenience of attempting online tests over a period of a week, their exam participation did not differ from that of traditional students, who took the exams during a regularly scheduled class. About half of the hybrid students did not attempt the four hourly online exams a second time, and over three quarters of them did not use their third attempt. Students in the hybrid sections generally waited until the last day, or until reminders were posted a day or two earlier, to make their first attempt. Overall, there were no significant differences in performance between traditional and hybrid students. Hybrid students showed slight, largely non-significant improvements in the four hourly exams, most likely because a few students attempted the exams more than once. In contrast, web-enhanced traditional students performed slightly better in the comprehensive final exam, which was given on campus to both groups. The grade distributions of the two groups were similar, especially for D and C students, with hybrid students having more withdrawals and a lower failure rate.

Keywords: Distance Learning, Student Participation, Performances, Traditional, Hybrid, Biology, Comprehensive final exams.


Introduction

As a result of the increased demand for online courses and online degree programs across the country and the world, institutions of higher learning are increasing their capacity to offer these courses. Online learning offers advantages such as the convenience of attending class at hours of one’s choice, and it therefore reaches wider audiences than traditional learning (Dutton et al. 2002). The demand for online courses may also have increased due to the recent economic downturn (Allen & Seaman, 2010). Consequently, universities are interested in increasing their enrollment by offering courses to some of these non-traditional students. Recent surveys of 2,500 colleges and universities in the United States show that about 4.6 million students were enrolled in at least one online course in Fall 2008 (Allen & Seaman, 2010). This represented a 17% increase from the 3.9 million students taking online classes in Fall 2007 (Allen & Seaman, 2008). It is estimated that online enrollment accounted for as much as 25% of overall enrollment in 2008 (Allen & Seaman, 2010). This is higher than the 20% reported in the 2005-2007 surveys (Allen & Seaman, 2007, 2008) and the 11% in 2002 (Allen & Seaman, 2003), and these numbers are expected to keep growing.

In line with ongoing trends in higher education and with the college’s mission, Bronx Community College of the City University of New York has also increased its online course offerings. A number of faculty have recently been trained by the college’s Instructional Technology department, and the demand for these training workshops is growing. About 65% of recent survey respondents across the United States indicated that they use faculty training initiatives to prepare their instructors for distance learning (Allen & Seaman, 2010). About 59% of institutions use informal mentoring, and only a minority of colleges (19%) lack faculty training or mentoring programs. Faculty training workshops may range from preparing instructors for web-enhanced traditional courses to preparing them for fully online courses or for a combination of traditional and online instruction (hybrid). The Sloan annual surveys (Allen & Seaman, 2003, 2007, 2008, 2010) defined online courses as those with at least 80% of instruction delivered online. Face-to-face classes, including traditional and web-enhanced traditional courses, were defined as those with up to 29% online instruction. Courses with 30-79% online instruction were defined as blended or hybrid courses. In our case, distance learning includes both blended (hybrid) courses and fully online asynchronous courses, as defined in the Sloan annual surveys.

Despite the demand for online learning, only about a third of faculty (31% in 2009 compared to 28% in 2002) have a favorable opinion of it, with about half of faculty neutral on its value and legitimacy (Allen & Seaman, 2010). About 64% of surveyed faculty feel that it takes more time to develop and teach an online course, and those who have taught an online class (about one third) were more likely to give a favorable opinion (Seaman, 2009). As institutions increase their capacity for distance learning, there is a need to engage more faculty and to learn more about the characteristics of online students relative to traditional students. The reasons for the low faculty acceptance of online learning are unclear; they may be related to the different profiles of students in distance learning courses, the extra effort of developing online courses, and perhaps the need to change one’s teaching style. Studies show that, compared to traditional students, online students have lower retention rates (Frankola, 2001), are more independent learners (Diaz & Cartnal, 1999), are confident and self-motivated (Ali et al. 2004), perform equally well or slightly better (Allen et al. 2004; Somenarian et al. 2010) and generally have better computer skills (Kenny, 2002).

Distance learning (both hybrid and fully online) requires more independent, self-paced study skills, with less peer and teacher interaction than traditional teaching (Diaz & Cartnal, 1999; Meyer, 2003), and students need to be prepared for this change in style if they are to succeed. Students who enroll in distance learning classes just for convenience, without considering the self-study skills needed to complete the class, or who believe that online classes are easier, generally contribute to the higher drop rates associated with distance learning (Frankola, 2001; Summers et al. 2005). It also helps if online students have time management strategies and learning styles that enable them to plan their study habits (Koohang & Durante, 1998). College administrators also need to ensure that students know they are enrolling in a distance learning class and that they understand the expectations, challenges and opportunities this format presents.

Because distance learning requires students to adopt more self-study habits, instructors also need to adapt to this environment. They need to devise teaching styles that suit these students’ learning styles in an online environment that gives instructors less control over students. While one can easily adjust one’s teaching style in a traditional class based on immediate feedback and interaction with students as the class progresses, the lack of visual cues in an online environment is a major challenge (Johnson, 2008). Online instructors need to be as clear as possible about instructions, organization and course delivery methods, and they need to communicate with students constantly so that students do not feel isolated (Heale et al. 2010). One cannot expect the exact approach that works in traditional classes to work for hybrid online teaching.

There is a need to compare traditional and hybrid student exam participation to find out whether hybrid students take advantage of the flexibility of distance learning. For example, being able to take an exam online at any time while it is posted is an advantage, and in some cases there is the extra incentive of multiple attempts. The more student characteristics that can be identified, the better we can understand what motivates students to complete the assigned activities. This helps instructors and administrators plan future online testing strategies and course or curriculum design, with the goal of improving student experiences and performance. This study aims to determine whether student characteristics such as overall exam participation, exam performance, withdrawal rates and grade distribution are similar between traditional and hybrid students, so that appropriate teaching styles can be designed. Specifically, the study was designed to answer the following research questions:

- How does the performance of traditional and hybrid students compare when given exams in a traditional versus online format?

- How does the performance of traditional and hybrid students compare, if the two groups have a similar traditional comprehensive final exam format?

- Does the option of multiple online exam attempts increase student performance in a hybrid class?

- Does the incentive of trying to improve their performance encourage students to participate in multiple online exam attempts?

- Does the flexibility of online testing increase student participation in the exams relative to traditional students?

- At what time of the week do hybrid students actually make their first, second or third attempts?

- How do the overall grade distributions and withdrawal rates compare between hybrid and traditional students?

Method

Participants

Students were asked a number of introductory questions at the beginning of the semester to identify their majors, gender and reasons for taking the class. The questions were open-ended, and students could give as many answers as they wished.

Traditional and Hybrid Lecture Formats

Four independent Anatomy and Physiology II sections, taught by the same professor with similar course content but different delivery formats, were used for this study: two face-to-face web-enhanced traditional sections (Spring and Fall 2009, N=45) and two online distance learning hybrid sections (Spring and Fall 2010, N=44). The hybrid sections were clearly labeled with a distance learning “D” sign, and brief information on the format was available to students prior to registration. Although most students told the instructor that they knew this, a few students were either unaware of it or enrolled in these sections after the choice of other traditional sections became limited. To ensure that similar types of students (such as those who prefer a specific schedule) enrolled in each of the four sections, the instructor offered the class on the same days and times of the week: Wednesdays and Thursdays, 9:00-11:50 am, for the lecture and laboratory sessions respectively. The traditional classes were taught face to face, with the professor teaching during the regularly scheduled lecture and laboratory hours, and were supplemented with Blackboard PDF notes (web-enhanced). The two hybrid sections met regularly for the laboratory sessions but met only twice for lecture: at the beginning of the semester to go over orientation issues and at the end for the comprehensive final exam.

In addition to Blackboard PDF notes similar to those provided to the traditional students, the hybrid students’ Blackboard sessions were more interactive, with animations, movie clips, discussion board sessions, lecture quizzes and lecture exams. The hybrid students’ PDF notes were also organized in weekly modules, whereas the web-enhanced traditional students’ notes were organized by chapter. The hybrid notes also contained embedded questions that could be answered by studying the notes, and some of these questions were used in the five online lecture quizzes, which carried 5% of the overall grade. This was done to keep students engaged with weekly activities, to hold their attention and to motivate them, and their online participation was used for attendance records.

Exam Formats

Four one-hour lecture exams were given to the web-enhanced traditional students face to face, proctored by the professor on campus. The hybrid students were offered four similar timed one-hour lecture exams online using the Blackboard 8.0 test and assignments function. In the hybrid lecture exams the questions were randomized, and students were allowed three attempts to minimize complaints about technical issues. Another difference was that the hybrid students’ online exams were available for a week (Wednesday to Wednesday), and in the week before each lecture exam they were given a lecture quiz on the same topics, with fewer questions and points, to motivate them to study for the exam. An announcement was posted when a quiz or exam became available, and a reminder was posted a day or two before the deadline. The comprehensive final lecture exam had the same format for both web-enhanced traditional and hybrid students, as it was scheduled by the registrar’s office and held on campus during finals week. The comprehensive final covered material from the whole semester and carried an extra incentive: it was counted twice if a student scored higher on the final than on any of the four hourly exams. In that case, the lowest hourly exam mark was dropped and replaced by the final exam mark in the overall grade calculation.
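The replacement rule for the comprehensive final can be made concrete with a short sketch. It assumes scores are percentages and only selects which exam marks enter the grade; the weighting of these marks (and of the 5% quiz component) is not stated, so the sketch stops short of computing a final grade. The function name `scores_for_grade` is hypothetical, and ties for the lowest hourly exam are resolved by dropping the first one.

```python
def scores_for_grade(hourly, final):
    """Apply the final-exam replacement rule (hypothetical helper).

    hourly: the four hourly exam percentages; final: the comprehensive final.
    If the final beats the lowest hourly exam, that exam is dropped and the
    final is counted in its place, so the final appears twice in the result.
    Returns (scores entering the grade, whether the student benefited).
    """
    scores = list(hourly)
    # Index of the lowest hourly exam; min() keeps the first one on ties.
    lowest = min(range(len(scores)), key=lambda i: scores[i])
    benefited = final > scores[lowest]
    if benefited:
        scores[lowest] = final
    return scores + [final], benefited


print(scores_for_grade([70, 60, 80, 75], 72))  # ([70, 72, 80, 75, 72], True)
print(scores_for_grade([70, 60, 80, 75], 55))  # ([70, 60, 80, 75, 55], False)
```

The `benefited` flag corresponds to the students counted in the Discussion as having gained from this policy.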

Statistical Analysis

SPSS (PASW) version 20 and Microsoft Excel 2010 were used for statistical analysis. Descriptive statistics (mean and standard error) were compared between the web-enhanced traditional and hybrid students’ exam data. An unpaired t-test was used to determine whether exam performance differed significantly between the traditional and hybrid sections. A paired t-test was used to determine the statistical significance of changes across multiple online exam attempts in the hybrid class. Significance was set at the 95% confidence level (p < 0.05).
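As an illustration of the two t-tests (not the SPSS procedure itself), the sketch below computes Student’s unpaired t statistic with pooled variance and the paired t statistic on per-student differences, together with their degrees of freedom, using only Python’s standard library. The pooled-variance form is an assumption, chosen because it yields the integer degrees of freedom reported in the Results. Turning t into a p-value needs the t distribution, which the standard library lacks; if SciPy is available, `scipy.stats.ttest_ind` (equal variances by default) and `scipy.stats.ttest_rel` return both the statistic and the p-value. The function names and toy scores are hypothetical.

```python
import math
import statistics


def unpaired_t(a, b):
    """Student's two-sample t statistic with pooled variance; returns (t, df)."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pooled variance: the two sample variances weighted by their own df.
    pooled_var = ((n1 - 1) * statistics.variance(a)
                  + (n2 - 1) * statistics.variance(b)) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (statistics.mean(a) - statistics.mean(b)) / se, df


def paired_t(first, second):
    """Paired t statistic on per-student differences (second - first); returns (t, df)."""
    if len(first) != len(second):
        raise ValueError("paired samples must have the same length")
    diffs = [y - x for x, y in zip(first, second)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / se, n - 1


# Toy scores, not study data.
print(unpaired_t([1, 2, 3], [4, 5, 6]))
print(paired_t([60, 70, 80], [65, 72, 89]))
```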

A 2x2 contingency table analysis with Fisher’s exact test was used to analyze student exam participation, overall grade distribution and other categorical data. Statistical significance was again set at the 95% confidence level (two-tailed p < 0.05).
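Fisher’s exact test for a 2x2 table can be computed directly from the hypergeometric distribution. The sketch below uses one common definition of the two-tailed p-value (the total probability of all tables with the same margins that are no more likely than the observed one); it illustrates the test rather than reproducing the SPSS implementation, and the function name is hypothetical. The usage example applies it to withdrawal and failure counts reconstructed from the percentages reported in the Results (about 1 withdrawal and 7 failures among the 45 traditional students, 5 and 1 among the 44 hybrid students); these counts are an assumption. If SciPy is available, `scipy.stats.fisher_exact` should give the same two-sided value.

```python
from fractions import Fraction
from math import comb


def fisher_exact_two_tailed(a, b, c, d):
    """Two-tailed Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Every table with the same row and column totals is enumerated; the
    p-value sums the probabilities of those no more likely than the observed.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x.
        return Fraction(comb(row1, x) * comb(row2, col1 - x), total)

    observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Exact fractions avoid floating-point ties when comparing probabilities.
    return float(sum(p for p in map(prob, range(lo, hi + 1)) if p <= observed))


# Rows: traditional, hybrid; columns: withdrew, failed (assumed counts).
p = fisher_exact_two_tailed(1, 7, 5, 1)
print(round(p, 3))  # 0.026
```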

Results

Student Survey

The overall response to the Anatomy and Physiology II student survey was high, at 78% in the web-enhanced traditional classes and 86% in the hybrid sections (Table 1). The survey showed that most students were taking the course to fulfill degree or major requirements. All four sections were dominated by females by about a 4:1 ratio (80% in the hybrid classes and 78% in the traditional sections). About half of the allied health students taking this class were nursing students in both the traditional and hybrid sections (Table 1). A substantial number of students were also Nutrition and Dietetics majors, with a slightly higher proportion in the hybrid sections (25%) than in the traditional sections (17%). Other majors represented included Therapeutic recreation, Radiology/Nuclear medicine, Occupational therapy and Community health.

1. Hybrid students had slightly, but mostly not significantly, better performance in the four hourly exams

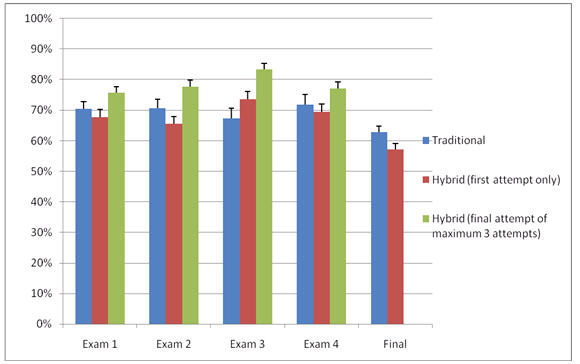

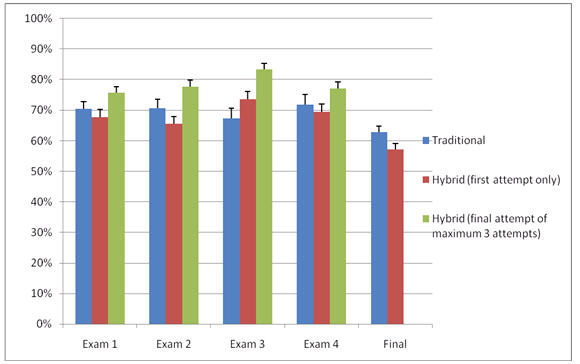

Student performance in exams 1, 2, 3 and 4, which were delivered to the hybrid students online, was comparable to the traditional students’ scores (research question 1). An unpaired t-test showed no significant difference between the traditional students’ scores and the hybrid students’ first attempts, which better mimic the single chance given to traditional students (for example, exam 1: t(76) = 0.78; p = 0.44). Students in the hybrid sections scored 68±3% (mean ± SEM) on their first attempt at exam 1, compared with 70±2% for traditional students (Figure 1). Similar trends held for exams 2 (66±2% versus 71±3%) and 4 (70±2% versus 72±3%). The only exam that differed even slightly was exam 3, where hybrid students scored 74±3% on their first online attempt compared with 67±3% for the web-enhanced traditional students, but the difference was not statistically significant (t(75) = 1.51; p = 0.14).

Figure 1: Comparison of student performance in web-enhanced traditional and hybrid classes, showing means and standard errors of the mean. Data for students who dropped the course or missed the tests were excluded.

Since the hybrid students had up to three attempts, their final attempt was used for grade calculation. This means that when pooling the data for grade calculation, scores from different attempts were used for different students, depending on whether they made one, two or three attempts. In contrast, the traditional students had only one chance at each of the four hourly exams and therefore just one score per student. Despite this discrepancy, there were no significant differences between traditional and hybrid student performance in exam 1 (t(76) = 1.72; p = 0.09), exam 2 (t(78) = 1.90; p = 0.06) and exam 4 (t(75) = 1.44; p = 0.16).
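The bookkeeping described here can be sketched as three views of the same data: the first attempt for comparison with traditional students, the last attempt for grading, and the students with at least two attempts for the paired attempt 1 versus 2 comparison. The data layout (a dictionary mapping a hypothetical student ID to that student’s scores in attempt order) and the function name are assumptions for illustration.

```python
def attempt_views(attempts):
    """Split per-student online attempt scores into three analysis views.

    attempts maps a (hypothetical) student ID to that student's scores in
    attempt order, up to three; students with no attempts are skipped.
    """
    taken = [scores for scores in attempts.values() if scores]
    first = [scores[0] for scores in taken]    # compared with traditional students
    graded = [scores[-1] for scores in taken]  # last attempt counts toward the grade
    pairs_1_2 = [(s[0], s[1]) for s in taken if len(s) >= 2]  # attempt 1 vs 2
    return first, graded, pairs_1_2


# Hypothetical scores for four students, not study data.
first, graded, pairs = attempt_views(
    {"s1": [60, 70, 78], "s2": [72], "s3": [65, 71], "s4": []}
)
print(first)   # [60, 72, 65]
print(graded)  # [78, 72, 71]
print(pairs)   # [(60, 70), (65, 71)]
```

Because the last attempt is graded rather than the best one, a lower later attempt would lower the recorded score under this reading.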

Nevertheless, when their final attempt (of a maximum of three) was compared with the traditional students’ scores, the hybrid students performed better in exams 1, 2 and 4, although not significantly (Figure 1). Students in the hybrid sections averaged 76±2% (mean ± SEM) in exam 1, 6 percentage points higher than the 70±2% of the traditional students. Their scores in exams 2 (78±2% versus 71±3%) and 4 (77±2% versus 72±3%) were also 5-7 percentage points higher than the traditional students’ scores (Figure 1). In online exam 3, the hybrid students performed significantly better than the traditional students (t(75) = 4.21; p < 0.01), averaging 83±2%, 16 percentage points higher than the traditional students’ 67±3% (Figure 1).

Table 1: Web-enhanced traditional and hybrid students’ survey, showing their majors, gender, reasons for taking the class and overall response rates.

| | Web-enhanced traditional classes (N=45), % of responses | Hybrid classes (N=44), % of responses |
|---|---|---|
| Overall response | 78% | 86% |
| Gender | Male 22%, Female 78% | Male 20%, Female 80% |
| Reasons for taking the class | | |
| Fulfill degree requirements | 52% | 82% |
| No response or ignored question | 48% | 18% |
| Majors | | |
| Nursing | 43% | 48% |
| Nutrition and Dietetics | 17% | 25% |
| Therapeutic recreation | 4% | 2% |
| Radiology/Nuclear medicine | 4% | 5% |
| Occupational therapy | 4% | 0% |
| Community health | 0% | 5% |
| Undecided | 5% | 1% |
| No response | 23% | 14% |

2. Traditional students had slightly, but not significantly, better performance on the comprehensive final

There was also no significant difference between the hybrid and web-enhanced traditional students on the single-attempt, proctored comprehensive final (research question 2; t(77) = 1.99; p = 0.05). Both groups had their lowest average scores on the comprehensive final (Figure 1). However, the pattern of hourly exams 1-4, where hybrid students performed better though mostly not significantly, was reversed: the hybrid students’ average score of 57±2% on the comprehensive final was 6 percentage points lower than the web-enhanced traditional students’ 63±2% (Figure 1).

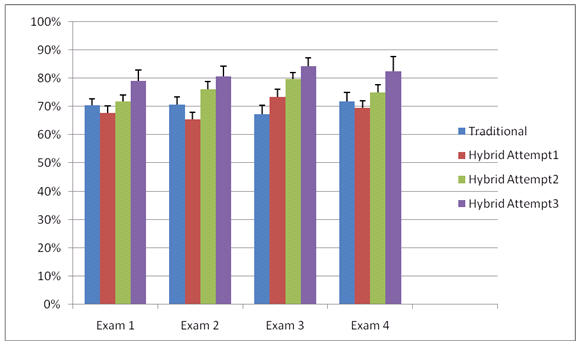

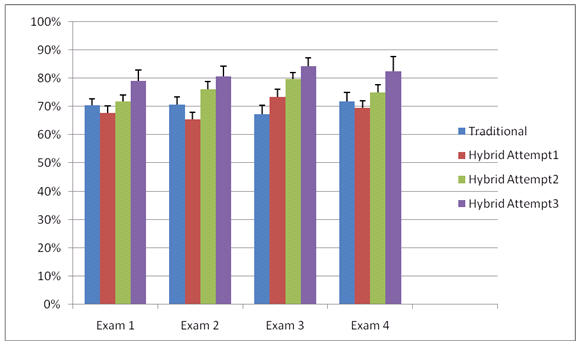

3. Multiple online exam attempts increase hybrid student performance

A paired t-test comparing online exam attempts 1 and 2 showed significantly higher second-attempt performance (research question 3) for exams 2 (t(22) = 2.49; p = 0.02) and 3 (t(22) = 2.68; p = 0.01). The hybrid students who took these exams a second time improved by 6-10 percentage points in exams 2 (66±2% in attempt 1 versus 76±3%) and 3 (74±3% versus 80±2%; Figure 2). Although not significant, there were also improvements of 4-5 percentage points in exams 1 (t(21) = 1.26; p = 0.22) and 4 (t(18) = 1.26; p = 0.22).

There were further improvements for the small number of students who made a third attempt, but these were not statistically significant compared with the second attempt for any of the four exams (for example, exam 1: t(5) = 1.58; p = 0.17). For instance, students in the hybrid sections scored 79±4% on their third attempt at exam 1, 7 percentage points higher than the 72±2% on attempt 2. Exams 2, 3 and 4 showed a similar pattern, with third-attempt scores 5, 4 and 7 percentage points higher, respectively, than second-attempt scores (Figure 2).

Figure 2: Student exam performance showing three attempts for the hybrid classes and one attempt for the traditional classes. Error bars represent standard errors of the mean. Data for students who dropped the course or missed the tests were excluded.

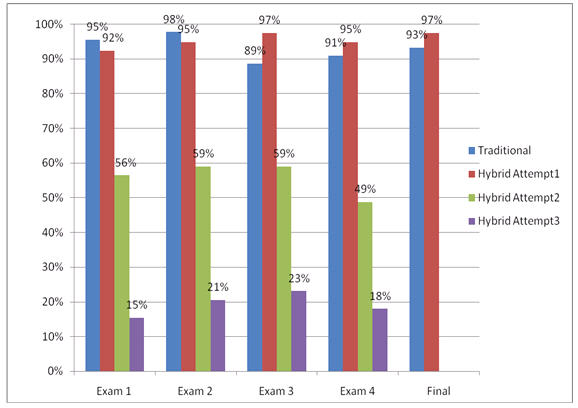

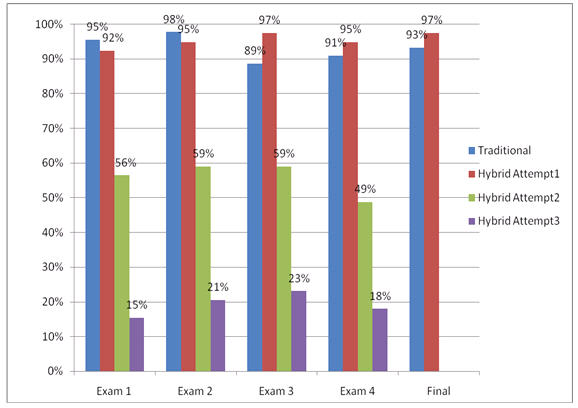

4. Many hybrid students did not make multiple online exam attempts

Since the option of multiple attempts resulted in slightly better performance for students in the hybrid sections (Figure 2), it was important to find out how many students made more than one attempt (research question 4). While Figure 2 shows gradual improvements in exam scores from attempt 1 to 2 to 3, the participation data in Figure 3 show a gradual decrease in the number of students attempting the exams from attempt 1 through 3.

The percentage of hybrid students (n=39) taking exam 1 decreased from 92% in attempt 1 to 56% in attempt 2, and to only 15% in attempt 3. A 2x2 contingency table analysis with Fisher’s exact test showed that these decreases were statistically significant (two-tailed p < 0.01) for both attempt 1 versus 2 and attempt 2 versus 3. Exams 2, 3 and 4 showed similar statistically significant trends (two-tailed p < 0.01), with only 49-59% of students making a second attempt and just 18-23% making a third attempt (Figure 3). It is worth noting that exam 3, which had the highest participation in attempts 2 (59% of students) and 3 (23% of students), also had the best exam averages for the hybrid students in Figures 1 and 2.
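These participation percentages are proportions of the 39 hybrid students who did not drop the course. A minimal sketch, assuming the same hypothetical layout of per-student scores in attempt order, counts how many students made at least k attempts. The counts in the usage example (36, 22 and 6 of 39 students) are reconstructed from the reported exam 1 percentages and are an assumption, not raw study data.

```python
def participation_by_attempt(attempts, n_students, max_attempts=3):
    """Percent of n_students who made at least k attempts, for k = 1..max_attempts."""
    return [
        100 * sum(1 for scores in attempts.values() if len(scores) >= k) / n_students
        for k in range(1, max_attempts + 1)
    ]


# Hypothetical exam 1 data: 6 students with three attempts, 16 with two,
# 14 with one and 3 with none (39 students in total).
attempts = {}
for i in range(39):
    if i < 6:
        n = 3
    elif i < 22:
        n = 2
    elif i < 36:
        n = 1
    else:
        n = 0
    attempts[f"student{i}"] = [70] * n  # placeholder scores; only counts matter

print([round(p, 1) for p in participation_by_attempt(attempts, 39)])
# [92.3, 56.4, 15.4]
```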

Figure 3: Web-enhanced traditional and hybrid student participation in the four hourly exams, delivered online to the hybrid classes and on campus to the traditional classes, and in the final, which was delivered in the same format (on campus) to both groups. Note that n=44 for the traditional classes and n=39 for the hybrid sections. Data for students who dropped the course were excluded.

5. Traditional and hybrid student exam participation was similar when dropped students were excluded

Given the flexibility of online tests, it was necessary to determine whether a higher proportion of hybrid students would take each exam at least once, compared with web-enhanced traditional students (research question 5). Although traditional students had only one opportunity to take each exam (during regular class hours), about 95-98% of them took the first two exams, compared with 92-95% of hybrid students attempting these two exams at least once during the week they were available (Figure 3). A 2x2 contingency table analysis with Fisher’s exact test showed no significant difference (two-tailed p = 0.66) between traditional and hybrid participation in exam 1.

Traditional student participation dropped slightly to 89-91% in exams 3 and 4 before rising to 93% in the final, but it was not significantly different (two-tailed p = 0.21) from hybrid participation (95-97% for these exams). However, the participation data of students who dropped the course were excluded, and the hybrid classes had more of these students, who generally had lower exam participation.

6. Hybrid students took the exams close to the deadline

To better understand why a large share of hybrid students did not make attempts 2 and 3, an effort was made to find out when during the week students completed their exams (research question 6). Over half of the students generally waited until the deadline, or until a day or two before it when reminders were posted, before making even their first attempt at the four hourly online exams (Table 2). For instance, about 62% of students made their first attempt at exam 1 during this period: 26% on the day the exam was taken down (the deadline) and another 36% a day or two earlier, after reminders were posted. Similar trends were observed for exams 2 and 3, where 72-79% of students made attempt 1 on the deadline day or after reminders were posted. About 71% of students made their first attempt at exam 4 during this period, with the largest group (38%) waiting until the deadline.

The number of hybrid students completing attempt 2 was generally about half of the number completing attempt 1, and most of them made their second attempt close to the deadline (Table 2). About 31% of students made their second attempt at exam 1 on the deadline day (out of a total of 57% making attempt 2), and about 18% a day or two earlier after reminders were posted. For exam 3 the figures were similar: 21% on the deadline day and 33% after reminders were posted. Only 15-28% of students made attempt 2 at exams 2 and 4 on the deadline day, but the overall proportion of students making a second attempt at exam 4 (48%) was also low. The proportion of students making their second attempt more than 2 days before the deadline was about 8% for the first two exams and 5% for exam 3 (Table 2).

The proportion of students making a third attempt was very low, at about 16-23% (Table 2), and most of them also made it on the deadline day or within two days of it. For exams 2 and 3, 16% and 20% of students, respectively, made their third attempt on the deadline day or just after reminders were posted. The pattern was even more pronounced for exam 1, where most of those making a third attempt (13% of a total of 16%) did so on the day the exam was taken down (Table 2).

Table 2: Hybrid student exam participation trends, showing the timing of attempts 1-3 grouped as more than 2 days before the deadline, a day or two before, or on the deadline day (n=39). Values are percentages of participants; #1-#3 denote the attempt number. Data for students who dropped the course were excluded.

| Timing of attempt | Exam 1 #1 | Exam 1 #2 | Exam 1 #3 | Exam 2 #1 | Exam 2 #2 | Exam 2 #3 | Exam 3 #1 | Exam 3 #2 | Exam 3 #3 | Exam 4 #1 | Exam 4 #2 | Exam 4 #3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Deadline day | 26 | 31 | 13 | 28 | 28 | 8 | 26 | 21 | 5 | 38 | 15 | 5 |
| A day or 2 before | 36 | 18 | 3 | 51 | 23 | 8 | 46 | 33 | 15 | 33 | 33 | 13 |
| More than 2 days before | 31 | 8 | 0 | 15 | 8 | 5 | 26 | 5 | 3 | 23 | 0 | 0 |
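The three timing groups in Table 2 amount to a simple rule on the number of calendar days between an attempt and the deadline. The sketch below assumes that “deadline day” means the calendar day the exam was taken down and that “a day or 2 before” means one or two calendar days earlier; the function name and the use of calendar dates rather than clock times are assumptions.

```python
from datetime import date


def timing_bin(attempt_day: date, deadline: date) -> str:
    """Assign an attempt to one of the Table 2 timing groups by calendar days."""
    days_early = (deadline - attempt_day).days
    if days_early < 0:
        raise ValueError("attempt recorded after the deadline")
    if days_early == 0:
        return "Deadline day"
    if days_early <= 2:
        return "A day or 2 before"
    return "More than 2 days before"


# Hypothetical week: exam posted Wednesday 2010-03-03, deadline Wednesday 2010-03-10.
deadline = date(2010, 3, 10)
for day in (date(2010, 3, 10), date(2010, 3, 8), date(2010, 3, 4)):
    print(day, timing_bin(day, deadline))
```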

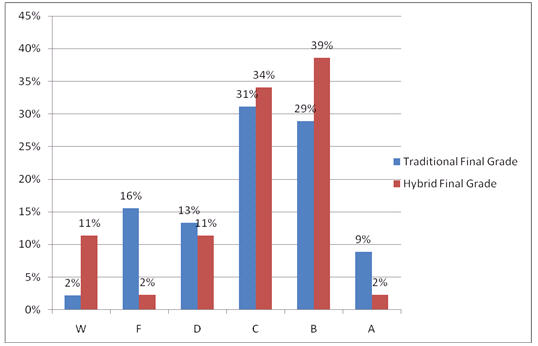

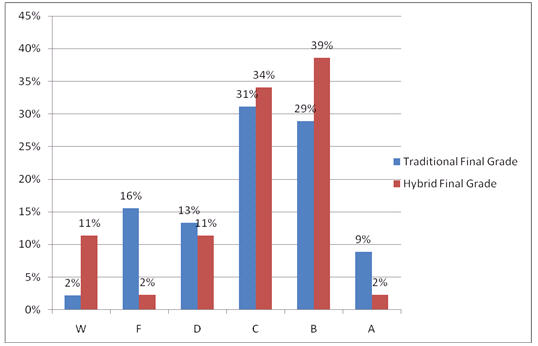

7. Hybrid and traditional grades were similar, with higher hybrid withdrawal rates

The final grade distribution of hybrid students was comparable to that of web-enhanced traditional students, especially in the middle of the distribution (research question 7). About a third of the students (14 in the traditional group and 15 in the hybrid group) earned a grade of “C” (Figure 4). Another 29-39% of the students in both groups earned a “B”, about half of them a B-. Six students in the traditional sections (13%) and 5 in the hybrid classes (11%) earned a “D”. The main differences were at either end of the grade distribution, with more traditional students earning an “A” or an “F” (Figure 4). Four traditional students (9%) earned an “A” compared with 1 hybrid student, whereas 16% of the web-enhanced traditional class failed the course compared with 2% of the hybrid class. However, fewer traditional students withdrew from the course (2%) than hybrid students (11%). A 2x2 contingency table analysis of withdrawals versus failures in the traditional and hybrid groups was statistically significant (two-tailed p = 0.03, Fisher’s exact test). There was no significant difference when other grades were compared between the traditional and hybrid students (for example, A and B grades gave a two-tailed p = 0.18).

Figure 4: Web-enhanced traditional (n=45) and hybrid (n=44) final grade distributions in an Anatomy and Physiology II class.

Discussion

As the demand for distance learning continues to rise, institutions of higher learning need to better understand the characteristics and performance of online students compared with traditional students. A key difference between traditional classes and distance learning courses is the convenience of attending class and completing exams at flexible times, as long as students have a reliable internet connection (Dutton et al. 2002). However, students have to be motivated enough to actually perform the required tasks (Block et al. 2008). Some studies have shown that online students are more independent than traditional students, who may need more structure (Diaz & Cartnal, 1999; Gee, 1990). Hybrid courses have the advantage of meeting face to face for a significant portion of the class while using online tools to extend class time (Young, 2002), so one can take the best of what works in traditional classes and fully online courses. This study was designed so that hybrid students were constantly engaged with weekly online activities, including discussion boards, posted lecture notes, animations and tests. They were also given the option of taking each exam up to three times, with their last attempt used for grade calculation.

There were no significant overall differences between web-enhanced traditional and hybrid student performance, in agreement with previous studies (Block et al. 2008; Dellana et al. 2000; Ashkeboussi, 2001; Arbaugh & Stelzer, 2003). The study showed a trend toward higher, though mostly non-significant, hybrid student performance when traditional student scores were compared with the last of up to three attempts (research question 1). Hybrid performance was significantly higher in one case (exam 3), but only when the pooled final-attempt data were used. Recent meta-analyses showed that distance learning students had small improvements (Allen et al. 2004) or generally outperformed traditional face-to-face students (Shachar & Neumann, 2010). It is likely that the different delivery mode appeals to online learners. However, in this study the small increases seem to be mainly due to the motivated students who made second and third attempts (research question 3). This is supported by the observation that the hybrid students’ first-attempt scores were very similar to the traditional students’ average scores, and even for exam 3 were not significantly different. Had more students attempted the online exams multiple times, the increases would most likely have been larger.

However, a large proportion of hybrid students who attempted an exam once tended not to make further attempts, even with the incentive to improve their grades (research question 4). While hybrid students who made multiple attempts showed gradual, statistically insignificant improvements in performance, the number of students making second and third attempts decreased for all four online exams. This was notable given the convenience of the hybrid exam format, which allowed students to attempt each exam multiple times at any time during the week. Exam participation among hybrid students was similar to that of traditional students, who had only one chance to take each exam during a regularly scheduled lecture session (research question 5). In fact, since students in the web-enhanced traditional classes were more likely to complete the course than those in the hybrid sections, their exam participation would have been higher than that of the hybrid students had data from dropped students been included.

To find out why relatively few hybrid students took advantage of the option of multiple exam attempts, their attempt patterns during the week were examined. Hybrid students generally waited until the last minute, or until reminders were posted, before making even their first attempt (research question 6). This implies that these non-traditional students had time constraints or time management issues (Aragon & Johnson, 2009; Grimes & Antworth, 1996). It may also suggest that they need more reminders earlier in the week. If most students made their first attempt so close to the deadline, it is not surprising that they likely ran out of time for second and third attempts, which explains the declining number of participants in these later attempts.

The comprehensive lecture final exam generally had the lowest scores in both groups. This appears to be a common trend and is thought to be due to the overwhelming amount of information students have to master for a comprehensive exam. Although statistically insignificant, there was a trend toward web-enhanced traditional students performing better than hybrid students on the final (research question 2), as they were more accustomed to the on-campus exam format throughout the semester. Under the grading policy, if a student scored higher on the comprehensive final than on any of the four hourly exams, the lowest hourly exam was dropped and the final was counted twice in the overall grade calculation. This policy favored traditional students, who performed better on the final: about 20 traditional students (44%) benefited from it, compared to only 7 (16%) in the hybrid sections. This may have allowed the web-enhanced traditional students to compensate for their slightly lower performance on exams 1-4 relative to hybrid students.
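The final-exam replacement rule can be sketched as a small function to show how a stronger final offsets a weaker hourly exam. This is a minimal illustration under stated assumptions, not the grading procedure actually used in the study: it assumes the four hourly exams and the final carry equal weight, that only the single lowest hourly exam is replaced, and the function names `benefits_from_final` and `exam_average` are hypothetical.

```python
from statistics import mean


def benefits_from_final(hourly: list[float], final: float) -> bool:
    """True if the final exam score beats the lowest hourly exam score."""
    return final > min(hourly)


def exam_average(hourly: list[float], final: float) -> float:
    """Average exam score under an assumed version of the replacement policy.

    If the final exceeds the lowest of the four hourly exams, that exam is
    dropped and the final fills its slot, so the final counts twice.
    Equal weighting of all five exam slots is an assumption, not the study's rule.
    """
    if len(hourly) != 4:
        raise ValueError("expected four hourly exam scores")
    scores = list(hourly)
    if benefits_from_final(scores, final):
        scores.remove(min(scores))  # drop one copy of the lowest hourly exam
        scores.append(final)        # the final replaces the dropped exam
    scores.append(final)            # the final always counts once on its own
    return mean(scores)


if __name__ == "__main__":
    # Hypothetical scores, for illustration only.
    print(exam_average([70, 65, 80, 75], 72))  # final replaces the 65
    print(exam_average([70, 65, 80, 75], 60))  # no replacement
```

With these hypothetical scores, a final of 72 raises the average from 72.4 (no replacement) to 73.8, while a final of 60 leaves the hourly exams untouched and gives 70. Under this sketch, students with stronger finals gain the most, which is consistent with the policy favoring the group that performed better on the final.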

The end result was final grade distributions that were very similar between the two groups, especially for D and C students (research question 7). However, hybrid students had higher withdrawal rates than traditional students. This finding suggests that less motivated students may have felt more isolated in the online environment (Block et al. 2008; Stewart et al. 2010). Although dropped students were excluded from the exam participation data, it was clear that students who eventually withdrew were less likely to attempt the online exams. Further support for this view comes from the exam performance data, which show a lower standard deviation for hybrid students (who had more dropped students) than for traditional students (data not shown). It seems that, in the online environment, underperforming students were more likely to skip the exams than to take them and score poorly (which would have increased the standard deviation), compared with the traditional classes. In addition to multiple attempts, this may also have contributed to the slightly improved performance observed for hybrid students relative to traditional students.

It is not surprising, then, that students who were not taking their exams were less likely to meet course objectives and eventually withdrew. Students who are usually at high risk for non-completion of degrees in the traditional system, such as those with dependents to support, full-time employment, physical disabilities or long commutes, as well as adult learners, are more likely to prefer distance learning (Pontes et al. 2010; Thompson, 1988). This may explain the higher attrition rates reported for online students (Frankola, 2001; Patterson & McFadden, 2009), which were also found in this study. The grade distribution data show that while underperforming students may have contributed to the higher withdrawal rates among hybrid students, similar students in the traditional sections may have contributed to higher failure rates. In other words, rather than drop, struggling traditional students were more likely to keep taking the exams, thus increasing the standard deviation, and settle for an “F” at the end. It is not clear whether this is due to the different course delivery formats or to traditional students valuing the company of their instructor and classmates, even when things were not going well.

In addition to the relatively small sample size, another limitation of the study is that it was conducted at only one institution and may only be representative of that student population. Further studies with larger samples involving various instructors and institutions may help better answer the research questions. Another recommendation is to interact with online students more often to encourage exam participation and minimize withdrawals. In this study, weekly Blackboard PDF modules embedded with a few questions, interactive discussion boards and animations, lecture quizzes, announcements when exams were posted, reminders before exams were taken down, a very detailed syllabus with specific exam dates, and practice online exams on the first day of orientation were all used to better engage the online students. Without these measures, participation would likely have been even lower and withdrawal rates higher. Online exams should also use multiple question styles, including matching, ordering, true/false and multiple choice, with questions randomized and timed, as was done in this study. Regularly modifying the exams may be even more important in an online environment.

Conclusions

In summary, despite the convenience of online testing over an extended period (one week in this case), exam participation among hybrid students did not differ from that of traditional students, who had only one hour of scheduled class time. Traditional students were also more likely to complete the course and had higher failure rates than hybrid students, who instead had higher withdrawal rates.

The study has revealed that, despite faculty concerns about the security of online hybrid exams, there were no statistically significant differences in student performance (except in the pooled attempts data for exam 3) or overall grade distribution between regularly proctored traditional exams and online hybrid exams. However, hybrid students performed slightly, though not significantly, better than web-enhanced traditional students on the four hourly online exams, where they had multiple attempts.

Interestingly, there was an inverse relationship between performance and participation across exam attempts. While hybrid student performance improved gradually on second and third attempts, participation declined gradually. This is most likely because hybrid students tended to attempt their exams at the eleventh hour, especially after reminders were posted, leaving little time for multiple attempts. There is therefore a need to engage online hybrid students constantly and perhaps give them more reminders before the deadline.

There was also no significant difference when the two groups took the same comprehensive final exam in the same format, although traditional students performed slightly, but not significantly, better than hybrid students. Despite the relatively small sample size, overall the study shows that traditional and distance (hybrid, in this case) learning are very comparable in terms of exam performance and participation, but less so in terms of withdrawal and failure rates.

Acknowledgements

Special thanks to the Bronx Community College of City University of New York’s Instructional Technology staff. The former Director, Howard Wach, and Instructional Technology Coordinator, Albert Robinson, were very helpful during the distance learning workshops. Interim director Steve Powers and Laura Broughton offered tips in course design and gave useful guidance even after the workshops.

References

Ali, N.S., Hodson-Carlton, K., & Ryan, M. (2004). Students’ perceptions of online learning: Implications for teaching. Nurse Educator, 29, 111-115.

Allen, I.E., & Seaman, J. (2003). Sizing the Opportunity: the Quality and Extent of Online Education in the United States, 2002-2003. Babson Survey Research Group. Retrieved from http://www.aln.org/resources/sizing_opportunity.pdf

Allen, I.E., & Seaman, J. (2007). Online nation: Five years of growth in online learning. Needham, MA: Babson Survey Research Group & The Sloan Consortium. Retrieved from http://www.sloanconsortium.org/sites/default/files/online_nation.pdf

Allen, I. E., & Seaman, J. (2008). Staying the course: Online education in the United States, 2008. Babson Survey Research Group. Retrieved from http://www.sloanconsortium.org/sites/default/files/staying_the_course-2.pdf

Allen, I. E., & Seaman, J. (2010). Learning on demand: Online education in the United States, 2009. Babson Survey Research Group. Retrieved from http://www.sloanconsortium.org/publications/survey/pdf/learningondemand.pdf

Allen, M., Mabry, E., Mattrey, M., Bourhis, J., Titsworth, S., & Burrell, N. (2004). Evaluating the effectiveness of distance learning: A comparison using meta-analysis. Journal of Communication, 54, 402-420.

Aragon, S., & Johnson, E. (2009). Factors influencing the completion and non-completion of community college online courses. American Journal of Distance Education, 22(3), 146–158.

Arbaugh, J.B., & Stelzer, L. (2003). Learning and teaching via the web: What do we know? In C. Wankel & R. DeFillippi (Eds.), Educating Managers with Tomorrow’s Technologies (pp. 17-51). Greenwich, CT: Information Age Publishing.

Ashkeboussi, R. (2001). A comparative analysis of learning experience in a traditional vs. virtual classroom setting. The MAHE Journal, 24, 5-21.

Block, A., Udermann, B., Felix, M., Reineke, D., & Murray, S.R. (2008). Achievement and Satisfaction in an Online versus a Traditional Health and Wellness Course. Journal of Online Learning and Teaching, 4(1), 57-66. Retrieved from https://jolt.merlot.org/vol4no1/block0308.htm

Dellana, S.A., Collins, W.H., & West, D. (2000). On-line education in a management science course – effectiveness and performance factors. Journal of Education for Business, 76, 43-47.

Diaz, D.P., & Cartnal, R.B. (1999). Students’ learning styles in two classes: Online distance learning and equivalent on-campus. College Teaching, 47, 130-135.

Dutton, J., Dutton, M. & Perry, J. (2002). How do online students differ from lecture students? Journal of Asynchronous Learning Networks, 6(1), 1-20. Retrieved from http://sloanconsortium.org/system/files/v6n1_dutton.pdf

Frankola, K. (2001). Why online learners drop out. Workforce, 80 (10), 53-59. Retrieved from http://articles.findarticles.com/p/articles/mi_m0FXS/is_10_80/ai_79352432/print

Gee, D.G. (1990). The impact of students’ preferred learning style variables in distance education course: A case study. Portales: Eastern New Mexico University. (ERIC Document Reproduction Service No. ED 358 836)

Grimes, S. K., & Antworth, T. (1996). Community college withdrawal decisions: Student characteristics and subsequent reenrollment patterns. Community College Journal of Research and Practice, 20, 345–361.

Heale, R., Gorham, R., & Fournier, J. (2010). An Evaluation of Nurse Practitioner Student Experiences with Online Education. The Journal of Distance Education, 24(3). Retrieved from http://www.jofde.ca/index.php/jde/article/view/680/1136

Johnson, A. (2008). A nursing faculty’s transition to teaching online. Nursing Education Perspectives, 29 (1), 17-21.

Kenny, A. (2002). Online learning: enhancing nurse education? Journal of Advanced Nursing, 38 (2), 127-35.

Koohang, A., & Durante, A. (1998). Adapting the traditional face-to-face instructional approaches to on-line teaching and learning. Refereed Proceedings of International Association for Computer Information Systems.

Meyer, K. (2003). The Web’s impact on student learning. T.H.E. Journal, 30(5), 14-24.

Patterson, B., & McFadden, C. (2009). Attrition in Online and Campus Degree Programs. Online Journal of Distance Learning Administration, 12(2). Retrieved from http://www.westga.edu/~distance/ojdla/summer122/patterson112.html

Pontes, M.C.F., Hasit, C., Pontes, N.M.H., Lewis, P.A., & Siefring, K.T. (2010). Variables Related to Undergraduate Students Preference for Distance Education Classes. Online Journal of Distance Learning Administration, 13(2). Retrieved from http://www.westga.edu/~distance/ojdla/summer132/pontes_pontes132.html

Seaman, J. (2009). Online learning as a strategic asset volume II: The paradox of faculty voices: Views and experiences with Online Learning. Babson Survey Research Group. Retrieved from http://www.aplu.org/NetCommunity/Document.Doc?id=1879

Shachar, M., & Neumann, Y. (2010). Twenty Years of Research on the Academic Performance Differences Between Traditional and Distance Learning: Summative Meta-Analysis and Trend Examination. Journal of Online Learning and Teaching, 6(2), 318-334. Retrieved from https://jolt.merlot.org/vol6no2/shachar_0610.pdf

Stewart, C., Bachman, C., & Johnson, R. (2010). Students’ Characteristics and Motivation Orientations for Online and Traditional Degree Programs. Journal of Online Learning and Teaching, 6(2), 367-379. Retrieved from https://jolt.merlot.org/vol6no2/stewart_0610.pdf

Somenarain, L., Akkaraju, S., & Gharbaran, R. (2010). Student Perceptions and Learning Outcomes in Asynchronous and Synchronous Online Learning Environments in a Biology Course. Journal of Online Learning and Teaching, 6(2), 353-356. Retrieved from https://jolt.merlot.org/vol6no2/somenarain_0610.pdf

Summers, J. J., Waigandt, A., & Whittaker, T. A. (2005). A comparison of student achievement and satisfaction in an online versus a traditional face-to-face statistics class. Innovative Higher Education, 29(3), 233-250.

Thompson, G. (1988). Distance learners in higher education. In Chere Campbell Gibson (Ed.), Higher Education: Institutional Responses for Quality Outcomes (pp. 9-24). Madison, WI: Atwood Publishing.

Young, G. (2002). Hybrid teaching seeks to end the divide between traditional and online instruction. Chronicle of Higher Education, A33-A34.