Development, Implementation, and Evaluation of a Grading Rubric for Online Discussions

Ann M. Solan

Assistant Teaching Professor

Drexel University

Goodwin College of Professional Studies

Philadelphia, PA 19104 USA

solan@drexel.edu

Nikolaos Linardopoulos

Lecturer

Rutgers University

School of Communication and Information

New Brunswick, NJ 08901 USA

nick.linardopoulos@rutgers.edu

Abstract

This paper discusses the development, implementation, and evaluation of a grading rubric for online discussions. Despite the growing popularity of grading rubrics and the parallel growth of online learning, there is a lack of research on the topic of grading online discussions. Grading discussions (sometimes called class participation) in an online learning environment can be particularly challenging. In this paper, the authors share their experience of creating and implementing a comprehensive grading rubric for online discussions that evaluates the following criteria: quantity, quality, timeliness, and writing proficiency. Student perceptions regarding the use of the discussion rubric are also analyzed and areas of future research are suggested.

Keywords: e-learning, assessment, student perceptions, participation guidelines, faculty expectations

Introduction

This paper describes the experience of creating, implementing, and evaluating a comprehensive grading rubric for online discussions that evaluates the following criteria: quantity, quality, timeliness, and communication proficiency.

What is a Grading Rubric?

A grading rubric is a scoring tool, usually in the form of a matrix or table, which delineates the specific expectations or criteria that will be used to assess a student’s performance. Importantly, a grading rubric breaks down an assignment into its component parts and provides a thorough yet concise description of what constitutes acceptable and unacceptable levels of performance for each part (Stevens & Levi, 2005). Ideally, a grading rubric is distributed to students before the associated assignment is due. This way, students have time to think about how they will be evaluated. The advance availability of a grading rubric is particularly important for online courses since there is typically less opportunity for the students and the instructors to interact one-to-one.

Advantages of Grading Rubrics

There are a number of administrative and pedagogical advantages to using grading rubrics. From the student’s perspective, a rubric reduces the element of surprise (Doyle, 2008), and from the instructor’s perspective, it mitigates the “I didn’t know that is what was expected” response from students. Bauer and Anderson (2000) state, “To ensure meaningful and fair assessment, professors should make students thoroughly familiar with how their work will be judged” (p. 66). Knowlton (2009) elaborates on the need for rubrics by describing what happens if instructors do not use them. According to Knowlton, “If professors fail to communicate standards for participation, students will revert to their own past experiences to help them define acceptable levels of performance” (p. 314). These prior perception-shaping experiences may not be what instructors have in mind. Therefore, not to provide standards is to leave performance up to chance.

Furthermore, according to TeacherVision (2011) many educators believe that providing students with such criteria improves students’ end products and therefore increases learning. Having a grading rubric also helps teachers to focus how they spend class time. A welcome administrative advantage for instructors is that using grading rubrics usually reduces the amount of time spent on grading (TeacherVision, 2011). Finally, another appealing quality of grading rubrics is their applicability across disciplines. Although they may take various shapes, sizes, and formats, rubrics can be developed for practically any course and subject matter (TeacherVision, 2011) offered in practically any delivery mode.

Need for the Study

Indeed, there are a number of books and web sites (e.g., Stevens & Levi, 2005; TeacherVision, 2011) that provide the rationale for grading rubrics and provide examples of rubrics for such graded assignments as essays, presentations, class participation, and projects, to name a few. In looking for models from which to create a grading rubric for online discussions, the authors, one of whom has taught online since 2004 and the other since 2008, found few publications that addressed the topic. A helpful general publication on rubrics (Stevens & Levi, 2005) offers at least a dozen rubrics for assessments as diverse as portfolios, lab experiments, presentations, speeches, research projects, book reviews, as well as in-class participation. However, most components of the rubric for in-class participation, the topic closest to the purpose of this paper, though useful for face-to-face classes, were not really appropriate for online discussions. Examples include active listening, assessing own performance, demonstrating energy, not watching the clock, and packing up things before class is over. The rubric components that did seem most relevant to online discussions include speaking to the point being discussed, being proactive rather than waiting for directions, responding when called upon, and responding without being called upon. Most of the components on the preceding list can be shaped to fit the online discussion environment. Yet, the list is incomplete as a tool to apply to the online discussion world as it does not address the expected length of contributions (although the components do touch on the notion that students should “respond fully,” a descriptor in the quantity component of the proposed rubric), the quality of contributions (using higher order thinking skills), the deadline by which contributions should be submitted, or the writing proficiency of the contributions.

The closest models to the authors’ project are Bauer and Anderson’s (2000) three-part rubric for evaluating students’ written performance in the online classroom and Knowlton’s (2009) two-part rubric for evaluating college students’ efforts in asynchronous discussion. Bauer and Anderson’s and Knowlton’s work mirrors the authors’ in a few components, but there are also key differences, which will be delineated later in this paper.

Despite the growing popularity of grading rubrics and the parallel growth of online learning, there is a surprising lack of publications on the topic of grading online discussions. Bauer and Anderson (2000) accurately forecasted that “The class taught entirely online will become commonplace in higher education. Thus, effective evaluation in the online classroom will be a primary issue” (p. 70). Knowlton (2009) agrees and focuses on discussions, “Professors should establish criteria for evaluating students efforts in online discussions and communicate these criteria to students” (p. 313). Accordingly, this study seeks to provide a model tool for the effective evaluation of online discussion.

Most existing grading rubrics (such as for essays, case study analyses, research projects, and so forth) can be modified for use in an online course. However, grading discussions (sometimes called class participation) in an online learning environment can be tricky (Morgan, 2006). When are discussions due? Do we want students scrambling to submit discussion posts on the last night of the period? It seems hard to imagine that such a scenario would contribute to the “deep and durable” learning advocated by Hacker and Neiderhauser (2000). What about punctuation, spelling, grammar, and other writing issues? Unlike face-to-face classes, in an online learning environment most discussions take place via a text-based discussion forum. Therefore, written communication is unique to online discussion, and this complicates the assessment process. Although it can be argued that online discussion is more analogous to a conversation than to formal writing (Knowlton, 2009), it also can be argued that in an online learning environment, students have more time to gather their thoughts and to produce a well-written and coherent post, and that should be the expectation.

The length of the student’s contribution (quantitative aspect) is also a critical variable. Do instructors expect 50 words or 500 words or something in between? Although quantity is not everything, it is an important component (Bauer & Anderson, 2000). Some students submit the bare minimum to meet the published requirement. Others exceed the minimum and then some. Still others fall short in either the minimum length or frequency of posts (for example, submitting an original post but not replying to a classmate). There also is the topic of quality. The students might have met the minimum word count and they might have submitted by the published deadline, but their posts might have demonstrated little critical thinking or appreciation for the issue under consideration. Furthermore, reply posts might simply agree with the classmate (“You make a great point. I agree with you completely.”) or might simply rehash in summary form what the original post said without making a unique contribution. How should these scenarios be addressed when grading online discussions? The lack of clear guidelines can make students and faculty alike feel frustrated.

Theoretical Foundations

Timeliness

The development of the rubric criteria was the result of wrestling with issues delineated in the need for the study section as the authors were grading online discussions each term. For example, with regard to posts being submitted on the last day of the period, the authors tried to think of a parallel scenario in a face-to-face class. In a live classroom most instructors would not find it acceptable if a student walked into a three-hour class with only fifteen minutes remaining. Sure the student can contribute, but the dialogue is usually over or is wrapping up. Why would there be a different standard in an online course? Those questions led to the inclusion of a timeliness component.

There are various models for how to address the participation timeliness factor. These models range from merely descriptive to highly prescriptive. For example, one online university offers a vague exhortation for students to participate in virtual discussions because doing so helps students feel connected to the university and because the discussions might even be mandatory (KS Education Online, 2011). At the opposite end of the spectrum, one large for-profit university emphasizes that online discussions comprise an important part of the learning experience and requires students in all online courses to contribute to discussions on four of seven days each week (Higbee & Ferguson, 2006). For the authors of this article, neither extreme was satisfactory. The authors strived to balance honoring the asynchronous nature of the program as it is advertised with the desire to encourage early discussion. To this end, requiring an initial post by day five of seven was chosen as a suitable compromise. Requiring an initial post by day five of the week leaves days six and seven for discussion, providing an opportunity for much richer dialogue than would take place with most students scrambling to submit original and reply posts in the same evening. Furthermore, the authors see no need to require participation on four of seven days in an asynchronous environment. Moreover, the authors have observed high quality student contributions over a time span of fewer than four of the seven days.

Bauer and Anderson’s (2000) rubric awards full credit for posts that are prompt and timely. However, neither term is defined, leaving it up to the student to assign meaning to the terms based on prior experiences. Timeliness is assessed by Knowlton’s (2009) rubric in an all or nothing manner, focusing more on the post not being on time, which earns zero points. In contrast, the proposed rubric rewards students who submit their original posts early in the academic week (full credit is earned for the “Timeliness” component if an original post is submitted by day five and a response post is submitted by day six). In the past, the authors have been discouraged by the all or nothing approach to grading timeliness, which usually yields a flurry of activity on the last day of the period and a lower level of contribution as discussed earlier. The authors believe that the graduated approach to grading timeliness is one of the most important contributions of the proposed rubric as it encourages early original posts and allows two days for in-depth discussion.
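The full-credit condition described above can be expressed as a simple check. The following is a minimal sketch, not the authors' grading procedure: the function name, the use of integer day numbers (1 through 7), and the use of None for a missing post are all assumptions made for illustration. Only the two deadlines (original post by day five, response post by day six) come from the text; the partial-credit levels of the graduated scale are not restated here and are therefore not modeled.

```python
from typing import Optional

# Deadlines taken from the text: day five for the original post,
# day six for the response post, within a seven-day academic week.
ORIGINAL_POST_DEADLINE = 5
RESPONSE_POST_DEADLINE = 6


def earns_full_timeliness_credit(
    original_day: Optional[int], response_day: Optional[int]
) -> bool:
    """Return True when both posts meet the full-credit deadlines.

    Days are hypothetical integers 1..7; None means the post was never
    submitted (an assumption for this sketch).
    """
    if original_day is None or response_day is None:
        return False
    return (
        original_day <= ORIGINAL_POST_DEADLINE
        and response_day <= RESPONSE_POST_DEADLINE
    )
```

Under this sketch, a student who posts on day four and replies on day six earns full Timeliness credit, whereas a student who submits both posts on day seven does not.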

The authors recognize that the mere act of setting timeliness boundaries does not guarantee that dialogue necessarily will be richer than if no boundaries are set, but the authors do believe such boundaries create an important platform for success. It is then up to the instructor to take steps necessary to foster deeper dialogue in the discussion period. Hacker and Neiderhauser (2000) offer five principles to promote deep and durable learning in the online classroom. Two of the five principles relate to this point and might be employed by facilitators of online discussion. One principle is that students should be “active participants in learning” (p. 54), which is rooted in the constructivist teaching paradigm. As Hacker and Neiderhauser make their case for constructivist approaches to knowledge creation, they discuss various ways students can be encouraged to think deeply about their learning, reflect on feedback from the instructor and from peers, refine their thinking, and clarify their ideas for the instructor and peers. The second principle offered by Hacker and Neiderhauser is “collaborative problem solving” (p. 57). Similar to active learning, collaborative problem solving is highly interactive as Hacker and Neiderhauser advocate for construction of knowledge between individuals that extends beyond a mere taking of turns to students thoughtfully changing roles between active listeners to active speakers resulting in making meanings accumulate collaboratively and incrementally. Again, it is unlikely that these highly interactive and highly reflective processes, which often result in enduring learning, can be accomplished in a single sitting at 11:00 p.m. on the last night of the period.

Quantity

Bauer and Anderson (2000) offer three reasons why quantity is important. The first reason relates to the fact that online discussions are typically text-based, thus students are thinking and discovering what is known about a topic on paper. According to Bauer and Anderson, “Such discovery writing often requires a good dose of writing” (p. 68). Secondly, when students produce significant amounts of writing, it provides more opportunities to interact with the professor and thus develop a relationship of trust with the professor. According to Berge (1997, as cited in Bauer & Anderson, 2000) “a sense of trust between professor and student is a central element in the learner-centered approach. Assessing adequate and timely participation can determine if students are earning this trust” (p. 68). Thirdly, when professors evaluate students for quantity of contributions, students who typically are very quiet in face-to-face classes often come alive in online classes. Berge states, “Online postings neutralize those who are quick on their feet and give the reticent an equal chance to help discussions flourish” (1997, as cited in Bauer & Anderson, p. 68). Knowlton’s (2009) rubric assesses the degree to which students respond fully to the topics presented in the instructions, addresses the number of posts (but not word count), and assesses whether or not students reply to a classmate. However, Knowlton actually has separate rubrics for initial contributions and response contributions. While such an approach works for Knowlton’s single continuum rubric, the authors’ proposed rubric is too robust to duplicate for original and reply posts.

Quality

Bauer and Anderson (2000) have a separate rubric that assesses content. To earn full credit, a post should demonstrate understanding of key concepts, critique the work of others, provide evidence to support opinions, and offer new interpretations of discussion material. Although Bauer and Anderson’s rubric does not specifically mention critical thinking by name, they mention in the article’s narrative, and rightly so, that a post that demonstrates critical thinking provides evidence that the student grasps the concepts. Knowlton’s (2009) rubric assesses critical thinking by name, as does the proposed rubric. Notably, Knowlton observed, and the authors of this paper agree, that students have a hard time operationalizing what critical thinking means and how it is applied. Therefore, Knowlton suggests that instructors “may need to provide a resource that would help students in their applications” (p. 317). In this project, the authors addressed this valid concern in a twofold manner. First, the proposed rubric provides guidance about what critical thinking means by stating, “Student’s original post demonstrates substantial evidence of critical thinking about the topic through, for example, application or creativity.” Second, immediately following the rubric there is narrative that includes a reference to a web site about critical thinking.

Writing Proficiency

Writing proficiency is called “Expression” by Bauer and Anderson (2000), who devote an entire separate rubric to this topic, as does the proposed rubric. Bauer and Anderson’s rubric comprises four grades and is written in great detail. For example, to earn full credit, the following criteria must be present: “Student uses complex, grammatically correct sentences on a regular basis; expresses ideas clearly, concisely, cogently, in logical fashion; uses words that demonstrate a high level of vocabulary; has rare misspellings” (p. 68). Although Bauer and Anderson should be commended for their thorough and thoughtful descriptor, the expectations seem too prescriptive and too lofty. After all, Bauer and Anderson themselves concede that “The online class poses a particular difficulty in evaluating expression. Common verbal transgressions that go overlooked in normal class discussions become permanent artifacts in the online classroom” (p. 67). They go on to suggest reserving the rubric with lofty standards for “formal” posting and allowing more relaxed standards to prevail in more informal forums (such as those associated with small group work). However, some universities do not utilize informal discussions. Furthermore, it seems that students have enough pressure to write well given the permanent nature of the online discussion and given the fact that their writing is being evaluated. Is it really necessary to evaluate vocabulary, for example? Furthermore, how is it determined which words merit a high level of vocabulary, thus earning a grade of “A”? This seems highly subjective and unnecessarily complicated. Writing proficiency is addressed in Knowlton’s (2009) rubric, but again it is virtually an all-or-nothing assessment, as Knowlton awards only one point out of a possible 10 for posts in which the writing skill impedes the reader’s ability to understand the post. There is no other reference to writing skill.

It seems that Knowlton shares the authors’ concerns that Bauer and Anderson’s rubric is too prescriptive. However, writing proficiency (later named “communication proficiency” to accommodate audio as well as written posts) deserves more than a cursory mention. Therefore, the proposed rubric provides students with four ranges of possible grades, each describing levels of proficiency with regard to spelling, grammar, syntax, citing, and so forth.

Summary

There is a dearth of literature about grading online discussions. Bauer and Anderson (2000) have three separate rubrics for assessing online discussions: one each for content, expression, and participation. Although these rubrics, individually and collectively, make a contribution to evaluating online discussions, they also fall short. For example, timeliness is encouraged but is not defined and, interestingly, quantity is defended in the narrative but is never mentioned in their rubrics. Ambitiously, Knowlton’s (2009) rubric tries to plot a number of distinct and important criteria along a single continuum, essentially collapsing Bauer and Anderson’s three rubrics into one and then creating a separate rubric just for response posts. The authors of this paper believe this approach is too impractical and too simplistic. Although earlier versions of the proposed rubric attempted a similar approach, the authors kept naturally parsing out major components and plotting each along its own continuum. Eventually, four main criteria were isolated, they were weighted equally, and they were plotted along four distinct continuums. The authors believe the four continuum approach is another important contribution of the proposed rubric. Appendix A includes the original version of the rubric and Appendix B the revised version of the rubric.
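The equal weighting of the four criteria can be illustrated with a short scoring sketch. This is not the rubric itself: the function name, the representation of each criterion as a fraction of credit earned (0.0 to 1.0), and the default 10-point total are assumptions made for illustration (a 10-point discussion grade is mentioned only in student comments). The actual grade ranges for each criterion appear in the appendices and are not reproduced here.

```python
from typing import Dict

# The four criteria named in the paper; the fourth uses its later name.
CRITERIA = ("quantity", "quality", "timeliness", "communication_proficiency")


def discussion_grade(
    levels: Dict[str, float], total_points: float = 10.0
) -> Dict[str, float]:
    """Return a per-criterion breakdown plus the overall grade.

    `levels` maps each criterion to a hypothetical fraction of credit
    earned, from 0.0 (none) to 1.0 (full credit).
    """
    missing = [name for name in CRITERIA if name not in levels]
    if missing:
        raise ValueError(f"missing criteria: {missing}")

    # Equal weighting: every criterion is worth the same share of the total.
    points_per_criterion = total_points / len(CRITERIA)

    breakdown: Dict[str, float] = {}
    for name in CRITERIA:
        fraction = levels[name]
        if not 0.0 <= fraction <= 1.0:
            raise ValueError(f"{name} must be between 0.0 and 1.0")
        breakdown[name] = points_per_criterion * fraction

    breakdown["total"] = sum(breakdown[name] for name in CRITERIA)
    return breakdown
```

Returning the per-criterion points alongside the total mirrors the idea of four distinct continuums: a weak score on one criterion lowers the grade without obscuring performance on the other three.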

Methodology

In order to evaluate the students’ perceptions regarding the utility of the rubric, a survey was administered during the summer and fall quarters in 2010. The survey was administered in 12 sections, which included six undergraduate, four graduate, and two hybrid courses (see Appendix C for the complete list of courses). The survey instrument included Likert-scale and true/false questions, as well as open-ended questions, in order to collect quantitative and qualitative feedback on the rubric. The Cronbach alpha coefficient of the survey instrument was .72 (see Appendix D for a copy of the survey).
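For readers unfamiliar with the reliability coefficient reported above, the following is a minimal sketch of the standard Cronbach's alpha formula, alpha = k/(k-1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The paper does not describe how its coefficient was computed, so the function name, the respondents-by-items input layout, and the choice of sample variance are assumptions made for illustration.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items table of numeric scores.

    Each inner sequence holds one respondent's answers to every item
    (a hypothetical layout chosen for this sketch).
    """
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("need at least two items")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every respondent must answer every item")

    # Sample variance of each item (column) across respondents.
    item_variances = [
        variance([row[i] for row in responses]) for i in range(n_items)
    ]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)
```

As a sanity check, items that move in perfect lockstep across respondents yield an alpha of 1, the upper bound of the coefficient.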

All 154 students in the 12 sections referenced above were invited to complete the survey. The survey was available during the last week of the summer and fall 2010 quarters and was administered online. An additional qualitative question regarding the use of the rubric by the students was added in the fall version of the survey. Participation was voluntary, but students received a bonus towards their final grade for participating. The responses to the survey questions were anonymous in the sense that survey responses could not be linked to individual students.

Results

Quantitative Findings

Overall, 125 of the 154 invited students across all 12 sections participated in the survey, a response rate of 81.17%. The aggregate results for each survey question are as follows:

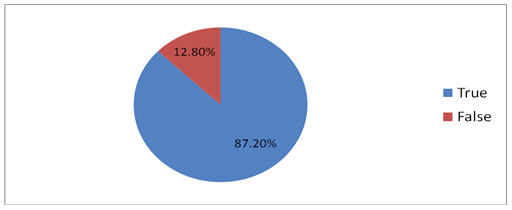

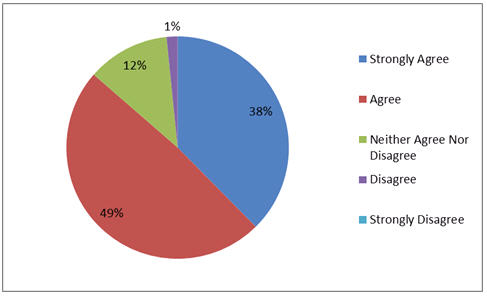

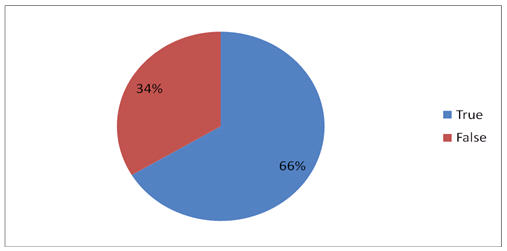

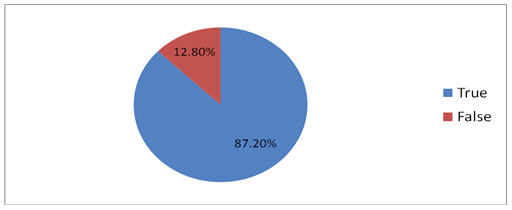

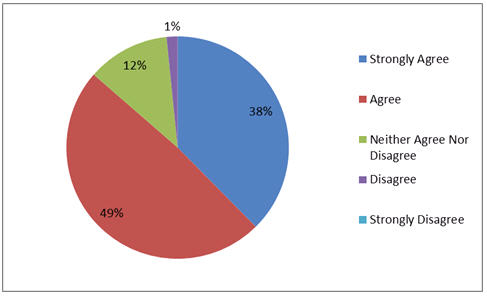

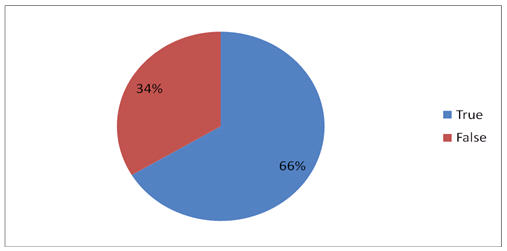

The first question asked students whether they reviewed the discussion grading rubric at the beginning of each course. The vast majority of students indicated that they did read the rubric when the course started. The second question assessed students’ perception regarding the clarity of the rubric. More than 85% of the students stated that the grading rubric was clear and easy to follow as illustrated below. The third question attempted to determine whether students consulted the discussion grading rubric when contributing to the weekly discussions. This question evaluated a different area of the rubric’s application compared to the one in question 1 (review and consultation of the rubric during the course versus the beginning of the course). While about two thirds of the students stated that they used and consulted the rubric for their weekly discussions, there was a high number of students who indicated that they did not use the rubric as a guide to the weekly discussion postings.

Figure 1. I thoroughly read/reviewed the discussion grading rubric at the beginning of the course.

Figure 2. The discussion grading rubric was clear and easy to follow.

Figure 3. I used/consulted the discussion grading rubric when contributing to the weekly discussions.

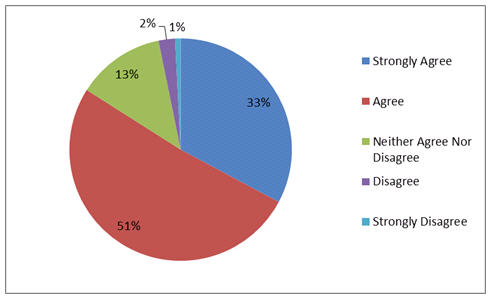

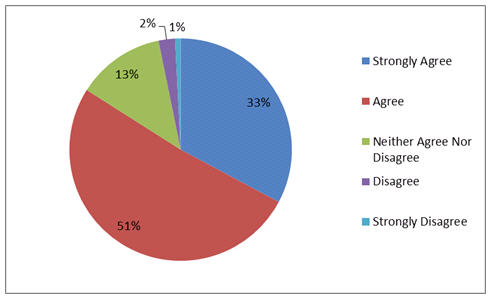

The last quantitative question asked students whether the assigned discussion grades corresponded to the evaluation criteria of the rubric. Almost 83% of the students agreed that the discussion grades did indeed align with the discussion rubric.

A comparison of the quantitative results between the different delivery modes (fully online, hybrid) and levels (undergraduate, graduate) did not reveal any striking differences with the aggregate results. Accordingly, a case can be made that the students who were exposed to the rubric and participated in this survey share similar views regarding its use regardless of the delivery mode or their degree level.

Figure 4. Assigned grades for the discussions followed the discussion grading rubric.

Qualitative Findings

The qualitative responses provided by the students to the open-ended questions of the survey confirm the quantitative findings described above. Of particular interest is the appreciation and perception of usefulness of the rubric, as illustrated by the student commentary:

“I really appreciated having what was expected clearly documented at the beginning of the course. It let me know what was expected and helped me to prepare for each week. Other classes have seemed extremely arbitrary in their discussion grading and it can be very frustrating. I really enjoyed the way it was setup in this class. Thank you.”

“I think the rubric is clear and is necessary because in other online classes I've taken, there has been no real understanding of how discussion posts were graded. This is a good roadmap for how to get a 10/10 on a discussion post and it's easy to follow.”

“The rubric made it clear what the professor expected. I appreciated this.”

At the same time, students were encouraged to and did offer constructive criticism on the rubric, especially as it pertains to the need for simplicity and accessibility. Furthermore, a suggestion was made for the need to provide a sample discussion thread to accompany the rubric in order for the students to derive the maximum benefit as illustrated by the comments below:

“For us visual learners perhaps the rubric could be represented as a pie chart. That might be easier to refer to.”

“The rubric was a lot to read and there was (sic) many things that you had to include in the discussion to get a good grade and it might have been a little too much.”

“The discussion grading rubric was very helpful and guided me to getting good grades. I think that the professor should go over the discussion rubric at the first class before our first discussion board is due because it will help out a lot of the students. I didn't use the rubric for the first discussion post because I didn't know there was one until he told us the following class. After I found out and started using it, I was receiving better grades then (sic) the first post.”

“After the first couple of discussions, I got a better idea of what was expected. The only suggestion I can think of is to post an example in the beginning of the term of what a 10 point discussion post would be and highlight the critical areas of it.”

Lastly, the relatively high number of students who indicated that they did not consult the rubric during the weekly discussion postings prompted the authors to add an open-ended question to the fall version of the survey instrument. The goal of the additional question was to ask students why they did not consult the rubric while contributing to the weekly discussions. Two sample responses are provided below:

“In all reality I looked at the rubric when I first started the class then did not review it again until now. Therefore, I cannot say that I truly used the rubric in the way in which it was intended. So it might be good to remind students next quarter a couple of times to work according to the assigned rubric.”

“My intentions were to actively participate in the class discussions and I felt that my contributions were honest and on point. I didn't feel it necessary to keep referring to the rubric.”

Discussion

A number of discussion points emerge as a result of developing, implementing, and evaluating the proposed online discussion rubric.

First, the results clearly indicate that the students appreciated that the rubric by which the discussion component would be evaluated was made available at the beginning of the course. For the majority of the students, the discussion rubric clearly communicated what the instructors expected, an attribute that students seem to value considerably. This is particularly true in an online environment where the majority of the interactions typically take place through the discussion component.

At the same time, the study underscores the importance of emphasizing and reviewing the rubric throughout the duration of the course. As indicated above, some students did not consult the discussion rubric while contributing to the weekly online discussions, which may seem to run counter to the purpose of providing a discussion rubric in the first place. In light of this surprising revelation, the authors attempted to pinpoint possible reasons why some students did not consult the rubric. Some students indicated that they were not aware that the rubric existed, whereas others felt that it was simply not necessary to refer back to it. Either way, student responses highlighted the importance of emphasizing the existence and importance of the rubric at multiple points during the quarter in order to ensure that it is used appropriately.

Furthermore, in order for the discussion rubric to fulfill its intended purpose, it is essential that the link between the discussion grades assigned and the discussion rubric is evident to the students. In other words, students must be able to understand clearly that their discussion grade corresponds to the discussion rubric. One of the options through which the link between the discussion rubric and the discussion grade can be made clear to the students is by providing a detailed breakdown on each of the rubric categories with the overall discussion grade. One of the authors has implemented this option. Overall, the students have responded very favorably when they were provided with a detailed breakdown of their discussion grade; at the same time the process is a bit more time-consuming for the instructor. For the most part, the students who participated in the survey indicated that the link between the discussion grades and the discussion rubric was evident.

Another important point is the need for continuous assessment of evaluation categories. The rubric has been a dynamic document since its inception. With each term, and the unique experiences therein, a few shortcomings of the rubric became apparent. For example, early versions of the rubric did not account for audio posts in the Quantity or Writing Proficiency categories. The most recent version, which is provided in Appendix B, does consider audio posts. In fact, in the most recent version of the rubric the Writing Proficiency category was renamed “Communication Proficiency” to accommodate both text-based and audio-based discussions. The other two categories have undergone refinement as well. For example, the Quality category of the newest version of the rubric does a better job of parsing what quality looks like at the different grade levels and does not penalize students as harshly at the moderate level as previous versions did. Finally, the Timeliness category has undergone considerable refinement to accommodate the large number of possible scenarios. This is a good segue to the next discussion point.

Finally, there is a need to account for multiple scenarios of discussion contributions in all categories. Despite the authors’ attempts to provide a comprehensive grading rubric that covers as many scenarios as possible, the authors have found students whose submissions fall between grades in every category. This is not surprising because it is impossible to create a rubric of manageable size that accounts for every possible scenario. The authors deal with this in two ways. First, the authors added a comment in the narrative that accompanies the rubric that states:

“Please note that the instructor reserves the right to bypass the breakdown outlined above in cases of exceptional performance in one or more categories or in cases of extenuating circumstances (prior notification of and approval from the instructor is required).”

This comment provides the instructor with the flexibility to use judgment in scenarios outside of the rubric. This leads to the second point. In cases that fall outside of the published rubric, the authors use judgment and are careful to be consistent (as much as possible given that no two scenarios are alike) from student to student and from discussion to discussion. The Timeliness category seems to be the one where most of the varied scenarios emerge. For example, the most recent version of the rubric accounts for the scenario of a student who submits an original post by Tuesday, but who does not submit a response post. However, the rubric does not account for the scenario of a student who submits a reply post by Tuesday, but who does not submit an original post. In this and similar cases that fall outside of the published rubric, the instructor has to use judgment to determine a fair and consistent score.
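The Timeliness scenarios discussed above can be sketched in Python as follows. This is only an illustration under stated assumptions: the function name, the encoding of days as strings, and the use of `None` to signal that instructor judgment is required are hypothetical and not part of the published rubric. The late-submission row of Appendix B (40%) is left out for brevity, so the sketch covers only posts made within the unit.

```python
# Deadlines named in the Timeliness category, in chronological order.
DAY_ORDER = {"Sunday": 0, "Monday": 1, "Tuesday": 2}


def _day_index(day):
    """Convert a day name to its position, or None if no post was made."""
    if day is None:
        return None
    if day not in DAY_ORDER:
        raise ValueError(f"unsupported day: {day!r}")
    return DAY_ORDER[day]


def timeliness_percent(original_day, response_day):
    """Return the Timeliness percentage, or None if the rubric is silent.

    Each argument is "Sunday", "Monday", "Tuesday", or None when that
    kind of post was not submitted during the unit.
    """
    orig = _day_index(original_day)
    resp = _day_index(response_day)

    if orig is None:
        # For example, a reply by Tuesday with no original post: the
        # rubric has no matching row, so the instructor must decide.
        return None
    if resp is None:
        return 50   # original post by Tuesday, no response post
    if orig == 0 and resp <= 1:
        return 100  # original by Sunday, response by Monday
    if orig == 0:
        return 90   # original by Sunday, response by Tuesday
    if orig == 1:
        return 75   # original by Monday, response by Tuesday
    return 60       # original by Tuesday, response by Tuesday
```

Returning `None` instead of guessing a score keeps the uncovered cases visible, so they can be handled consistently by the instructor rather than silently by the tool.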

Suggestions for Future Research

The research undertaken for this study focused on students’ perceptions of the usefulness of this grading tool. Yet, still more can be gleaned regarding the student view. For example, the responses to the question about why students did not consult the rubric during the term, while not as comprehensive as one could expect, provided possible avenues for further exploration on this topic. Furthermore, future research could examine faculty perceptions of the rubric’s value. Questions to be answered could include whether or not the rubric saves grading time as it claims to and whether or not students’ discussions are of higher quality than those produced without following the proposed rubric. Most faculty want deep and durable learning, such as is advocated by Hacker and Neiderhauser (2000), to take place in their students. A qualitative study could explore this important issue to see if it is happening in courses that use the proposed rubric.

Conclusion

This paper has presented the experience of developing, implementing, and evaluating a grading rubric for online discussions that considers quantity, quality, timeliness, and communication proficiency. The authors, both of whom are experienced online instructors, believed there was a clear need for a tool to assist both teachers and learners in navigating the unique waters of discussions in an online learning environment. No tool existed that dealt with the complexities of text-based and even audio-based discussions that take place in largely asynchronous, electronic formats. The authors believe this rubric, which has undergone several iterations, makes a valuable contribution to the field of online learning and teaching.

References

Bauer, J. F., & Anderson, R. S. (2000). Evaluating students’ written performance in the online classroom. In R. E. Weiss, D. S. Knowlton, & B. W. Speck (Eds.), Principles of effective teaching in the online classroom (pp. 65-72). San Francisco, CA: Jossey-Bass.

Doyle, T. (2008). Helping students learn in a learner-centered environment. Sterling, VA: Stylus Publishing, LLC.

Hacker, D. J., & Neiderhauser, D. S. (2000). Promoting deep and durable learning in the online classroom. In R. E. Weiss, D. S. Knowlton, & B. W. Speck (Eds.), Principles of effective teaching in the online classroom (pp. 53-64). San Francisco, CA: Jossey-Bass.

Higbee, J., & Ferguson, K. (2006). Tending the fire: Facilitating difficult discussions in the online classroom. Paper presented at the 22nd Annual Conference on Distance Teaching and Learning. Retrieved from the conference web site: http://www.uwex.edu/disted/conference/Resource_library/proceedings/06_4379.pdf

Knowlton, D. S. (2009). Evaluating college students’ efforts in asynchronous discussion. In A. Orellana, T. L. Hudgins, & M. Simonson (Eds.), The perfect online course (pp. 311-326). Charlotte, NC: Information Age Publishing.

KS Education Online. (2011). Six rules for online courses. Retrieved May 22, 2011, from http://www.kseducationonline.com/six-rules-for-online-courses.php

Morgan, K. (2006). Ground rules in online discussions: Help or hindrance? Journal of Teaching in Marriage and Family, 6, 285-305. Retrieved from the Family Science Association web site: http://familyscienceassociation.org/Journal%20articles/Morgan.pdf

Stevens, D. D., & Levi, A. J. (2005). Introduction to rubrics: An assessment tool to save grading time, convey effective feedback, and promote student learning. Sterling, VA: Stylus Publishing, LLC.

TeacherVision. (2011). The advantages of rubrics. Retrieved March 10, 2011, from http://www.teachervision.fen.com/teaching-methods-and-management/rubrics/4522.html

Appendix A: Original Rubric (Summer Quarter 2010)

Online Discussion Grading Rubric

Quantity (25%):

| 100% | 75% | 25% | 0% |
| --- | --- | --- | --- |
| Student has submitted one substantive original post responding fully to the question or topic. Student has submitted at least one substantive reply to a classmate’s post. Total word count for the unit is at least 250 words (or 6 minutes for audio board). | Student has submitted one substantive original post responding fully to the question or topic. Total word count for the unit is at least 200 words (or 5 minutes for audio board). Student does not submit a reply to a classmate’s post. | Student has submitted one substantive reply to a classmate’s post. Total word count for the unit is at least 50 words (or 1 minute for audio board). Student does not submit an original post. | No discussion board posts are submitted. |

Quality (25%):

| 100% | 75% | 50% | 0% |
| --- | --- | --- | --- |
| Student’s original post demonstrates that he or she has researched the topic, demonstrates critical thinking about the topic, and demonstrates application or creativity. Student’s reply post(s) demonstrate knowledge of the topic and take the discussion in a new direction. | Student’s original post demonstrates that he or she has researched the topic, but does not demonstrate critical thinking about the topic, or does not demonstrate application or creativity. Student’s reply post(s) demonstrate knowledge of the topic and take the discussion in a new direction. | Student’s original post demonstrates that he or she has researched the topic, but does not demonstrate critical thinking about the topic, and does not demonstrate application or creativity. Student’s reply post(s) demonstrate knowledge of the topic and take the discussion in a new direction. | Student’s original posts do not demonstrate research, critical thinking, application, or creativity (for example, just stating opinion). Student’s reply post(s) merely repeat what the classmate said. |

Timeliness (25%):

| 100% | 90% | 85% | 75% | 50% | 0% |
| --- | --- | --- | --- | --- | --- |
| Student has submitted one original post by Sunday and has submitted one response post by Monday. | Student has submitted one original post by Sunday and has submitted one response post by Tuesday. | Student has submitted one original post by Monday and has submitted one response post by Tuesday. | Student has submitted one original post by Tuesday and has submitted one response post by Tuesday. | Student submits posts after the unit ends and within one week of the original unit’s closing date. | Student submits posts two or more units after the original unit’s closing date. |

Writing Proficiency (25%):

| 100% | 90% | 50% | 0% |
| --- | --- | --- | --- |
| Student has submitted posts with one or fewer spelling, grammar, syntax, punctuation, citation, or other writing errors. | Student has submitted posts with one to five spelling, grammar, syntax, punctuation, citation, or other writing errors. | Student has submitted posts with six to nine spelling, grammar, syntax, punctuation, citation, or other writing errors. | Student has submitted posts with 10 or more spelling, grammar, syntax, punctuation, citation, or other writing errors. |

Appendix B: Revised Rubric (Spring Quarter 2011)

Online Discussion Grading Rubric

Quantity (25%):

| 100% (25 points) | 75% (19 points) | 25% (6 points) | 0% (0 points) |
| --- | --- | --- | --- |
| Student has submitted one substantive original post responding fully to the question or topic. Student has submitted at least one substantive reply to a classmate’s post. Total word count for the unit is at least 250 words (at least 5 minutes for audio posts). | Student has submitted one substantive original post responding fully to the question or topic. Total word count for the unit is at least 200 words (at least 4 minutes for audio posts). Student does not submit a reply to a classmate’s post. | Student has submitted one substantive reply to a classmate’s post. Total word count for the unit is at least 50 words (at least 1 minute for audio posts). Student does not submit an original post. | No discussion posts are submitted. |

Quality (25%):

| 100% (25 points) | 80% (20 points) | 60% (15 points) | 0% (0 points) |
| --- | --- | --- | --- |
| Student’s original post demonstrates substantial evidence of critical thinking about the topic through, for example, application or creativity. Student’s reply post(s) take the discussion in a new direction. | Student’s original post demonstrates moderate evidence of critical thinking about the topic through, for example, application or creativity. Student’s reply post(s) take the discussion in a new direction. | Student’s original post demonstrates little evidence of critical thinking about the topic through, for example, application or creativity. Student’s reply post(s) take the discussion in a new direction. | Student’s original post demonstrates no evidence of critical thinking (for example, just stating opinion without justification). Student’s reply post(s) merely agree with the classmate or merely repeat what the classmate said. |

Timeliness (25%):

| 100% (25 pts) | 90% (22.5 pts) | 75% (19 pts) | 60% (15 pts) | 50% (12.5 pts) | 40% (10 pts) |
| --- | --- | --- | --- | --- | --- |
| Student has submitted one original post by Sunday and has submitted one response post by Monday. | Student has submitted one original post by Sunday and has submitted one response post by Tuesday. | Student has submitted one original post by Monday and has submitted one response post by Tuesday. | Student has submitted one original post by Tuesday and has submitted one response post by Tuesday. | Student submits an original post by Tuesday, but does not submit a response post. | Student submits posts after the unit ends and within one week of the original unit’s closing date. |

Communication Proficiency (25%):

| 100% (25 points) | 90% (22.5 points) | 50% (12.5 points) | 0% (0 points) |
| --- | --- | --- | --- |
| Written posts: Student has submitted posts with no spelling, grammar, syntax, punctuation, citation, or other writing errors. Audio posts: Student has submitted posts with no grammar errors. The posts are enunciated professionally. | Written posts: Student has submitted posts with one to five spelling, grammar, syntax, punctuation, citation, or other writing errors. Audio posts: Student has submitted posts with one to five grammar or enunciation errors. | Written posts: Student has submitted posts with six to nine spelling, grammar, syntax, punctuation, citation, or other writing errors. Audio posts: Student has submitted posts with six to nine grammar or enunciation errors. | Written posts: Student has submitted posts with 10 or more spelling, grammar, syntax, punctuation, citation, or other writing errors. Audio posts: Student has submitted posts with 10 or more grammar or enunciation errors. |

Appendix C: List of Courses in which the Rubric was Used

| Fully Online | Hybrid |
| --- | --- |
| Techniques of Speaking* | Business Communication |
| Interpersonal Communication* | Sports and Mass Media |
| Strategies for Lifelong Learning | |
| Customer Service Theory and Practice | |
| Creative Leadership for Professionals | |
| Communication for Professionals* (graduate level) | |
| Communicating in Virtual Teams (graduate level) | |

*Two sections

Appendix D: Survey Instrument

Question 1: I thoroughly read/reviewed the discussion grading rubric at the beginning of the course.

Question 2: The discussion grading rubric was clear and easy to follow.

- Strongly Agree

- Agree

- Neither Agree nor Disagree

- Disagree

- Strongly Disagree

Question 3: I used/consulted the discussion grading rubric when contributing to the weekly discussions.

Question 4: If you did not refer to the grading rubric throughout the term, please indicate your reason(s)/rationale in the space provided below.

Question 5: Assigned grades for the discussions followed the discussion grading rubric.

- Strongly Agree

- Agree

- Neither Agree nor Disagree

- Disagree

- Strongly Disagree

Question 6: Please provide any additional comments regarding the discussion grading rubric. We are particularly interested in hearing whether the rubric was useful to you in helping you with the submission of your discussion postings. We are also interested in finding out ways in which you believe the rubric can be strengthened/clarified or improved.