Student Assessment in Online Learning: Challenges and Effective Practices


Lorna R. Kearns

Senior Instructional Designer

Center for Instructional Development and Distance Education

University of Pittsburgh

Pittsburgh, PA 15213 USA

lrkearns@pitt.edu

Abstract

Assessment of student learning is a fundamental aspect of instruction. Special challenges and affordances exist in assessing student learning in online environments. This two-phase study investigated the types of assessment methods being used in online courses and the ways in which the online environment facilitates or constrains particular methods. In Phase One, syllabi from 24 online courses were reviewed in order to discover the types of method being used to assess student learning and contribute to the overall course grade. Five categories emerged: (1) written assignments; (2) online discussion; (3) fieldwork; (4) quizzes and exams; and (5) presentations. Phase Two consisted of a focus group and interviews with eight online instructors to discuss challenges and effective practices in online assessment. Challenges arose due to the impact of physical distance between the instructor and the students, adaptations resulting from the necessity of using technology for communicating with students, workload and time management issues, and the ongoing need to collect a variety of assessment data and provide feedback. Phase-Two interviewees offered strategies and suggestions to counteract the challenges they identified. The paper concludes with recommendations synthesizing the results of this study with those found in the literature.

Keywords: online learning, online teaching, online assessment, assessment methods, assessment challenges

Introduction

With nearly 30% of U.S. college and university students now taking at least one online course, online learning enrollments in this country continue to grow at a much faster rate than overall enrollments in higher education (Allen & Seaman, 2010). More widely, the International Council for Open and Distance Education predicts that open and distance learning delivery formats will become the most significant driver of transnational higher education (Walsh, 2009). As this new mode of instruction becomes more prevalent, it is important to study the design and teaching of online courses. Identifying challenges instructors face and highlighting effective practices they have developed in this environment will enable us to propose strategies that can be empirically tested.

An area of focus that deserves special attention is the assessment of student learning. This encompasses how instructors assess student progress both formatively and summatively, how they distribute graded activities across an entire course, the issues involved in providing effective feedback, and the strategies with which they experiment to address these challenges. The purpose of this paper is to report on a study of such challenges and practices among a group of instructors teaching online graduate courses at one university in the northeastern United States.

Literature Review

Assessment Challenges in Online Learning

Snyder (1971) coined the term "the hidden curriculum" to describe how students infer what is important in a course based on the ways in which their learning is assessed. While Joughin (2010) has pointed out that the generalizability of this 40-year-old study may be limited, other researchers (e.g., Bloxham & Boyd, 2007; Gibbs & Simpson, 2004-2005) continue to find value and generativity in the notion. In online learning, where there is no face-to-face (F2F) interaction, instructors are particularly challenged to convey their intentions accurately and provide appropriate feedback to help students achieve the targeted learning objectives. Hannafin, Oliver, Hill, Glazer, and Sharma (2003) note that "the distant nature of Web-based approaches renders difficult many observational and participatory assessments" (p. 256). Oncu and Cakir (2011) observe similarly that informal assessment may be especially difficult for online instructors because of the absence of F2F contact. Beebe, Vonderwell, and Boboc (2010) reiterate that proposition in their study of the assessment concerns of seven instructors who moved their F2F courses into an online environment. They identified five areas of concern among the instructors: (1) time management; (2) student responsibility and initiative; (3) structure of the online medium; (4) complexity of content; and (5) informal assessment.

Other issues mentioned in the literature on assessment in online learning include the importance of authentic assessment activities (e.g., Kim, Smith, & Maeng, 2008; Robles & Braathen, 2002), the use of assessments that promote academic self-regulation (Booth et al., 2003; Kim et al., 2008; Robles & Braathen, 2002), concerns about academic integrity (Kennedy, Nowak, Raghuraman, Thomas, & Davis, 2000; Simonson, Smaldino, Albright, & Zvacek, 2006), and the challenges involved in assessing online discussion and collaboration (Meyer, 2006; Naismith, Lee, & Pilkington, 2011; Vonderwell, Liang, & Alderman, 2007).

Assessment Methods in Online Learning

Very few studies have reported on the types and distribution of assessments that are used by instructors to contribute to students' overall grades in an online course. Among those that exist, Swan (2001) examined 73 online courses and identified methods that include discussion, papers, other written assignments, projects, quizzes and tests, and groupwork. In her study, almost three quarters of the courses used online discussion as a graded activity. About half of the courses used written assignments and tests or quizzes. Arend (2007) reported similar findings in a study that examined 60 courses. She identified methods that included online discussion, exams, written assignments, experimental assignments, problem assignments, quizzes, journals, projects, and presentations. Like Swan, she found that a large percentage of the courses were using online discussion as a graded activity. Quizzes and tests were used in 83% of the courses and written assignments in 63%.

Gaytan and McEwen (2007) asked online instructors to identify assessment methods they found to be particularly effective in the online environment. These included projects, portfolios, self-assessments, peer evaluations, peer evaluations with feedback, timed tests and quizzes, and asynchronous discussion. Based on the data they collected, they recommended administering a wide variety of regularly paced assignments and providing timely, meaningful feedback. They highlighted the value of examining the written record of student discussion postings and e-mails in order to keep abreast of evolving student understanding.

A better appreciation of the assessment challenges and effective practices of online instructors may help illuminate next steps in the development of a framework for studying and practicing online teaching. Therefore, this study sought to answer the following research questions:

- What methods of assessment are being used in this population of online courses?

- How does the online environment facilitate or constrain particular assessment methods?

Method

The study was carried out in two phases starting in the Spring of 2011 at a large research university in the northeastern United States. Participants included instructors teaching online graduate courses in education, nursing, gerontology, and library science. All courses were offered on Blackboard, the university's course management system (CMS). The objective of Phase One was to review syllabi in order to discover the types of assessment being used to contribute to the overall grade students received in a course. Phase Two consisted of a focus group and interviews with online instructors about the challenges they faced and the practices they deemed effective in the area of online assessment.

In Phase One, an e-mail was sent to instructors who had taught at least one for-credit online course between Fall 2009 and Summer 2011, asking for their permission to review syllabi from those courses in order to determine the types of activity that were being used to assess student learning. Of the 30 courses for which permission was sought, approval was granted for 24 courses. In cases where more than one section of a course had been taught over the two-year period, the syllabus for the most recent section was reviewed and assessments that contributed to the overall course grade were recorded.

Phase Two of the study consisted of an online focus-group session and several telephone interviews with instructors of online courses. Three individuals participated in a single focus-group session; one-on-one phone interviews, each lasting 30 minutes, were conducted with five others. The following questions guided the focus group and interviews:

- What challenges do you face in creating and deploying assessments for your online courses?

- What assessment practices have you used online that have been particularly effective?

- How has your online teaching impacted your assessment practices in your F2F classes?

Results – Phase One

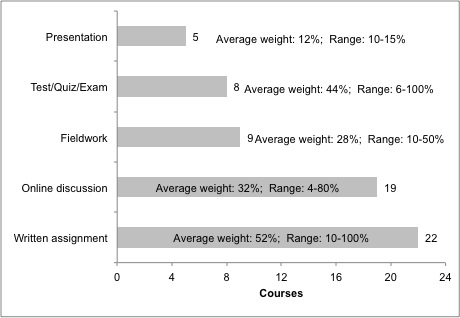

Data analysis of the syllabus-review phase began with the construction of a table in which graded assessments were recorded for each of the 24 courses in the sample. Assessments were then coded into larger categories, most of which are found in the literature. Figure 1 shows the frequency with which each category was used among the 24 courses, the average weight the type of assessment carried in the determination of the overall course grade, and the lowest and highest weight it was given among the courses. The five categories identified in this phase are:

- Written assignment: This large category encompasses assignments like research papers, case-study responses, and short essays;

- Online discussion: Assessments in this category include any asynchronous discussion activity undertaken on a discussion board, blog, or wiki;

- Fieldwork: This is a special type of written assignment requiring students to collect field data and write up some kind of report;

- Test/quiz/exam: In this group are traditional assessments composed of multiple-choice or short-answer questions;

- Presentation: This category includes student presentations; given the absence of F2F communication, the presentation delivery format had to be adapted for the online environment.

Figure 1. Assessment categories, along with their frequency of use, average weight, and range of contribution to overall course grade
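The tabulation described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the procedure actually used in the study: the course records and weights below are invented placeholders, and the names `courses` and `summarize` are hypothetical. The sketch assumes that average weight and range are computed only over the courses that use a given category.

```python
from statistics import mean

# Hypothetical syllabus data (NOT the study's data): each course maps an
# assessment category to its percentage weight in the overall course grade.
courses = [
    {"written assignment": 60, "online discussion": 40},
    {"written assignment": 50, "online discussion": 20, "fieldwork": 30},
    {"test/quiz/exam": 100},
    {"written assignment": 40, "online discussion": 30,
     "test/quiz/exam": 20, "presentation": 10},
]


def summarize(courses):
    """Return {category: (frequency, average weight, lowest, highest)}.

    Averages and ranges cover only the courses that use the category.
    """
    weights_by_category = {}
    for course in courses:
        for category, weight in course.items():
            weights_by_category.setdefault(category, []).append(weight)
    return {
        category: (len(weights), mean(weights), min(weights), max(weights))
        for category, weights in weights_by_category.items()
    }


summary = summarize(courses)
for category, (freq, avg, low, high) in sorted(summary.items()):
    print(f"{category}: used in {freq} of {len(courses)} courses, "
          f"average {avg:.0f}%, range {low}%-{high}%")
```

Keeping one record per course, rather than one running total per category, also makes it straightforward to ask follow-up questions such as how many courses combine two particular methods.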

The most frequently used assessment was the written assignment, with 22 of the 24 courses using it for at least one of their assessments. These assignments were much more open ended than those in the test/quiz/exam category and often gave students some choice of topic or even format. However, they were generally more formal than online discussion. Two common subcategories were case-study responses and research papers. As with online discussion, students were sometimes assigned into small groups and sometimes required to provide peer feedback on written work. Written assignments contributed an average of 52% to the overall course grade, with a range of 10% to 100%. In most cases, written assignments were submitted electronically through the CMS, though in a few instances, students were asked to submit the assignments to the instructor via e-mail.

Online discussion was used in 19 of the 24 courses as an assessment method. Most online discussion was conducted on the CMS's discussion board, although some courses used the wiki and blog tools for this purpose. Generally, the discussion was initiated by a set of questions posed by the instructor. In some discussions, students were asked to respond to questions about a reading they had to complete or a video they were required to watch. In a number of cases, students were placed into small groups for their discussions. Some courses called for students to take turns moderating the discussion, and some employed online discussion as a means for students to provide peer feedback to one another on assignments. In courses in which online discussion was assessed, the average contribution this method made to the overall course grade was 32%, with a wide range from a low of 4% to a high of 80%.

Fieldwork is a special type of written assignment in which students undertake some kind of data collection and/or undergo some kind of experience in the field that they then report on in written form. In a nursing course on health promotion, for example, students were asked to conduct a health evaluation on a patient or a relative. This same course required students to perform a health-risk assessment of members of a community, either at their own work site or another organization. In a teacher education course, fieldwork might entail the design and facilitation of a learning activity in a K-12 setting. For example, in a course on English language teaching, students carried out a formative assessment of a secondary student's written English. In one of the gerontology courses, students were tasked with visiting a dementia unit at a care facility and writing a report about the experience. Although these types of assignment result in written reports, the field-activity aspect they involve was considered to sufficiently differentiate them from other writing assignments so as to warrant a separate category. Nine of the 24 courses used fieldwork for assessment. On average, it contributed 28% to the overall course grade, with a range of 10% to 50%.

The test/quiz/exam category encompasses traditional tests and quizzes. Eight of the 24 courses used assessments of this type. In some cases, students were required to take the test at a proctored test site. Proctored tests were administered on paper or online; unproctored tests were taken online within the CMS. The average weight contributed by this category to the overall course grade was 44%, widely ranging from a low of 6% to a high of 100%. For courses in which the contributing percentage was low, this usually indicated a low-stakes quiz. Two of the 24 courses relied almost exclusively on high-stakes, proctored exams. These were courses in science-oriented subjects. According to Biglan's (1973) disciplinary classification model, instructors of such subjects much prefer objective, traditional assessments over open-ended assessments like written assignments (Neumann, Parry, & Becher, 2002).

Student presentations constitute the final category. In most cases where this type of assessment was used, the presentation assignment summarized what the student had submitted in a final project. Microsoft PowerPoint was often used by the students to create the presentation, sometimes as a set of slides and sometimes as a poster. Wikis, discussion boards, and synchronous webinar sessions were used as communication media. In all cases, students had to be prepared to take questions from their classmates about the material they presented. This category was used in five of the 24 classes and carried an average weight of 12% of the overall course grade, with a very small range of 10% to 15%.

Among the five categories, written assignments and online discussion appeared most frequently, with 22 of the 24 courses using the former and 19 using the latter. In 18 of the 24 courses, both of these methods were present. Presentation was the least used, at five out of 24 courses. The majority of courses used either two or three different types of assessment. Three courses used only one method – either written assignment or test/quiz/exam – while only one course used all five methods. The purpose of this phase of the study was twofold: (1) to identify the types of assessment being used by instructors in this population and gain an understanding of the contribution each type made to final course grades; and (2) to provide a starting point for discussion with and among the instructors in Phase Two of the study.

Results – Phase Two

Notes made during the interviews and focus-group session were analyzed for themes into which both challenges and effective practices might be grouped.

Challenges

Challenges and concerns appeared to cut across all five of the assessment categories mentioned above. Three broad themes emerged from the discussion of challenges:

- The impact of physical distance between instructor and student;

- Adaptations resulting from the necessity of using technology for communicating with students;

- Workload and time management.

In addition to these three themes, there emerged what might be called two meta-themes: (1) assessment data; and (2) feedback, both of which seem to have a role in all the concerns and challenges discussed below. The two meta-themes may be thought of as imperatives for interaction between the instructor and students. In order for students to benefit from assessment, they must make available to the instructor evidence of their learning (assessment data), and the instructor must, in turn, provide the students with feedback. The three abovementioned challenge themes may be thought of as conditions constraining the imperatives.

In an online course, students and instructors do not meet on a regular basis; in fact, in most cases, they do not see one another at all. As one instructor put it, the "incidental opportunities" for communication that exist in a F2F class setting do not occur in an online class. Because of this, several instructors talked about the special attention they felt they owed their online students. Some expressed concern about being able to accurately assess their students' progress throughout the semester. Others commented on the difficulty in teaching complex, multi-step problem-solving methods to distance students. One instructor said, "When I have them on campus, I can walk them through step by step by step to a specific end point. But I couldn't tell where they were struggling when it was online." In general, the participants in this phase of the study felt more care must be taken in online courses to offer specific kinds of instruction and feedback. As one education instructor lamented, "It's more difficult online to guide students through the lesson-design process." Speaking about the affective needs of students, another instructor observed, "Students don't want to feel that they're out there by themselves."

Some instructors worried about parity with F2F courses (i.e., ensuring that students in their online classes were being assessed similarly to students in their F2F classes). This included, for instance, a desire to make sure that both sets of students were taking objective exams under the same conditions and that students in both programs were being asked to submit equivalent written work and other indicators of achievement of objectives. In one course, an ungraded discussion board was available for students to post questions about the content. Because the instructor believed that "students who participate do better," she expressed a desire to motivate students to participate by attaching a grade to the discussion board. However, she was reluctant to do this because the F2F students were not being given the opportunity to be graded on their participation.

A direct consequence of the physical separation of students and instructor is the need for all communication to be mediated by some kind of technology. In large part, communication among participants in the online classes reviewed for this study was accomplished via asynchronous technologies like e-mail, discussion boards, blogs, and wikis. Of the 24 classes reviewed in Phase One, 19 used online discussion as a graded activity. One problem arising from the asynchronous nature of online discussion is the impact of late posting. For a discussion that runs from Monday to Sunday, for example, students in the discussion group may miss the opportunity to fully engage if some wait until Saturday to begin. On the other hand, even in classes where discussion is sometimes less than robust, students may face the challenge of having to keep up with voluminous postings across multiple groups and discussion forums. As one of the participants pointed out, "Sometimes it's hundreds of entries."

The worry of intermittent technology failure, either on the university side or the student side, was also alluded to by some participants. They felt downtime on either end was likely to create anxiety for the student.

A recurring theme among instructors who participated in this phase of the study was the amount of time and effort involved in providing effective feedback to online students. One source of demand was online discussion. Several instructors reported being overwhelmed with the amount of reading this required. As one instructor remarked, the discussion board became "cumbersome when done every week." Another demand on an instructor's time that was raised was having to enter comments on student papers using Microsoft Word rather than being able to handwrite in the margins. For one instructor, this was "time consuming" and "more tedious" than annotating the hard-copy assignment. One instructor mentioned needing a greater number of smaller assessments to oblige students to complete activities that might otherwise be completed during F2F class time. In her words, "If there is not a grade associated with an assignment, it is completely eliminated." Finally, several instructors commented on having to answer the same questions more than once in the absence of a concurrent gathering of students.

Effective Practices

Most of the effective practices discussed by the participants seem to have emerged in response to the challenges described above. Many address more than one challenge as well as the meta-themes of assessment data and feedback.

Not surprisingly, many of the challenges identified by participants arose from the fact that students and instructor did not have real-time contact in the same physical location. When assignments in these classes involve complex, multi-step skills, an effective practice is to deconstruct the assignment into smaller, interim deliverables, thus affording the instructor multiple points at which to assess the students' developing mastery and supply appropriate feedback. Formulating such feedback, of course, takes time. To optimize instructor time and provide scaffolding support, a suggestion was made to develop online mini-tutorials that students could use to reinforce particular aspects of a learning module.

Rubrics were mentioned more than once as being an effective way to highlight the important features of a large assignment, communicate target performance to students, and simplify grading for the instructor. A rubric is a scoring guide that lists criteria against which assignment submissions will be evaluated (Suskie, 2009). Several instructors used rubrics to guide online discussion to specify how long discussion posts should be, how often students should post, and the level of critique and analysis they were looking for in each post. Many examples exist in the literature describing effective use of rubrics for assessing online discussion (e.g., Baker, 2011; Gilbert & Dabbagh, 2005). Most instructors agreed that, regardless of the tool used for discussion (e.g., blog or discussion board), the evolving discourse served as a useful record of student understanding of the material.
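A discussion rubric of this kind can be written down as a simple checklist of criteria. The sketch below is illustrative only: the criteria, thresholds, point values, and names (`Criterion`, `RUBRIC`, `score`) are hypothetical and are not taken from any rubric used by the instructors in this study. It assumes the instructor has already tallied each student's posts for the week, including a judgment of which posts show critique or analysis.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Criterion:
    description: str
    points: int
    is_met: Callable[[Dict[str, int]], bool]


# Hypothetical criteria and thresholds, chosen only for illustration.
RUBRIC: List[Criterion] = [
    Criterion("Posts at least 3 times during the week", 4,
              lambda week: week["posts"] >= 3),
    Criterion("Posts average at least 150 words", 3,
              lambda week: week["avg_words"] >= 150),
    Criterion("At least 2 posts critique or analyze course content", 3,
              lambda week: week["analytical_posts"] >= 2),
]


def score(week: Dict[str, int]) -> Tuple[int, List[str]]:
    """Return (points earned, descriptions of criteria not yet met)."""
    earned = 0
    unmet = []
    for criterion in RUBRIC:
        if criterion.is_met(week):
            earned += criterion.points
        else:
            unmet.append(criterion.description)
    return earned, unmet


# One student's tallies for a week, as entered by the instructor.
example_week = {"posts": 4, "avg_words": 120, "analytical_posts": 2}
earned, unmet = score(example_week)
total = sum(criterion.points for criterion in RUBRIC)
print(f"Score: {earned}/{total}")
for description in unmet:
    print("Not yet met:", description)
```

Returning the unmet criteria alongside the score reflects the dual purpose described above: the same checklist both simplifies grading and tells the student what target performance looks like.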

A special set of concerns arises when course material is highly technical in nature. In these courses, instructors want students to have access to the resources necessary to support their learning and ensure their correct understanding of the material. They also want assessments in such courses to be carried out under conditions that promote academic integrity. Several of the instructors used ungraded, self-check quizzes and enrichment exercises with automatic feedback to support their students' learning in technical domains. For formal assessment of such material, the typical approach adopted was a proctored exam.

An interesting finding related to the constraining aspect of technology is that while technology presents challenges, it also yields affordances. For example, the self-check quizzes mentioned above give instructors a way to informally assess students' understanding as well as supply feedback to help them correct misconceptions. One popular method among the participants was to have students take an ungraded online quiz based on a reading assignment. At the end of the quiz, their scores, along with the correct answers to the questions they got wrong, are displayed to them. While the quiz does not contribute to their final grade, the score is entered into the gradebook within the CMS for both the student and instructor to see. Using ungraded quizzes in this manner is considered an effective way to formatively assess online students (e.g., Kerka & Wonacott, 2000).

Another way in which instructors respond to the challenges inherent in asynchronous computer-mediated communication is by leveraging synchronous technologies as and when appropriate. Synchronous technologies enable interaction between parties without a time lag. They include telephone, text chat, and web conferencing, all of which have a number of benefits and strengths. The problem with this form of communication, however, is that online students tend to have vastly different schedules and commitments; some even live in disparate time zones. Thus, they may not be in a position to participate in simultaneous communication at a given time. Some of the instructors in the present study ran optional chat sessions whose contents were recorded for later viewing by those unable to attend. In scheduling synchronous meetings, it is best to vary the times in order to allow as many students as possible to participate. Some of the instructors scheduled individual phone conversations with students having particular difficulties with the material. Despite the disadvantages, examples of the successful implementation of synchronous communication in primarily asynchronous courses can be found in the literature (e.g., Boulos, Taylor, & Breton, 2005).

In courses where online discussion was used deliberately to promote critical and divergent thinking about the course material, some instructors lamented that discussion participation was not what they had hoped it would be. An effective strategy for encouraging deep engagement with the material in discussion is the use of discussion prompts that ask students to relate course concepts to personal experiences from their own professional settings. This strategy aligns with recommendations from the literature for posing questions that foster rich online discussion (e.g., Muilenburg & Berge, 2000).

Several instructors talked about the use of technology tools to enable feedback on targeted portions of student work. In tests and exams deployed within the CMS, for example, it is possible to use the gradebook to write in feedback to individual test items as well as to include an overall comment on the entire test. For written work, Microsoft Word is a favorite tool for commenting on specific parts or sections of student papers. None of the instructors reported using an electronic marking application like eMarking Assistant or ReMarksPDF. Online marking tools like these enable instructors to build reusable comment banks, create and apply automated rubrics, and record audio feedback on assignments (Transforming Assessment, 2011).

Self-assessments can be employed to deliver useful, personalized feedback while not adversely affecting the instructor's workload. Peer assessment can accomplish this as well. One approach to peer assessment is to have students present portions of a field assignment for critique by one or two peers who have been equipped with a rubric to guide their review. In addition to reducing instructor workload, peer assessment provides multiple learning benefits for students (Yang & Tsai, 2010).

As stated earlier, many instructors referred to the challenge of keeping up with online discussion postings. One solution aimed at reducing the reading load on the instructor is to divide the class into two discussion groups and assign student moderators for each discussion. At the end of the discussion, the moderators post a summary for the rest of the class to read. Instead of grading all of the discussion postings in a given week, only the moderators for that week are graded. The instructor who used this approach noted, "I've gotten a lot of insight into the depth of people's thinking just by the way they summarize content."

More than one instructor recommended looking for opportunities to provide feedback to the entire class, as opposed to individual students, in the interest of efficiency. In grading written work, for example, instructors who see multiple instances of the same error need not comment on it in every student paper. Instead, they can send a class e-mail or post an announcement on the course website summarizing the patterns they have observed. Another suggested strategy was to use a "Q&A" (question-and-answer) discussion board to which students can post questions about course material. Since students often use e-mail for questions that may be of interest to the entire class, it is useful to include a statement in the syllabus differentiating for students how they should use the discussion board and how they should use e-mail. It is also valuable to include information about how often the instructor will read and respond to the discussion board questions.

Impact on F2F Teaching

In concluding the interviews and focus-group session, instructors were asked whether they thought teaching online had had any impact on the way they approached assessment in their F2F teaching. One instructor articulated a view that teaching online had forced her to be more creative in designing assessments for the new environment. Experimenting in this way set the stage for her to innovate within her F2F classes. For example, after using small-group discussion online, she began to implement it in her F2F classroom as well. Related to this is the experimentation with new technologies that online teaching necessitates. One participant commented that teaching online requires instructors to experiment more with new technologies and new teaching methods. Another had developed some instructional scaffolds to help online students that she then began sharing with her F2F students. In one course, the instructor assigned students into small online discussion groups to collaboratively review the assigned readings. As an experiment, she began using a discussion board for her F2F class to help students prepare for the in-class discussion. She found that the preparatory online discussion did indeed enable students to engage in deeper discussion in the classroom. Finally, one instructor who used reflective activities in her online course incorporated more of these into her F2F teaching because of the value she perceived them as adding to students' learning.

Recommendations

Based on the findings and insight gained from the interviews and focus group, the following recommendations are proposed for online educators:

- For complex written assignments that require synthesis of material from the entire semester, divide the assignment into phases and have students submit interim deliverables for feedback. For example, a systems analysis paper could be submitted in three phases: (1) context description; (2) problem analysis; and (3) recommendation for change. Several instructors in the study used this approach to scaffold students across a complex task. Feedback is important but it is labor intensive to prepare. To offset some of the load, develop rubrics ahead of time that students can use as guidelines for their work. Make available examples of previous student work. Give students opportunities to critique one another's work.

- Use rubrics to guide student activity on the discussion board as well as in written assignments. A rubric can be as simple as a checklist that specifies target performance criteria for an assignment. Developing the rubric ahead of time can help you clarify your own thinking about the objectives of the assignment. When students use it as they craft their assignment, it can help them understand your expectations and fine-tune their performance accordingly. Once you have developed the rubric, it can simplify your grading process. A typical set of criteria for an online discussion rubric might include specifications for how frequently students should post, how many initial and follow-up posts students are required to make, and the manner in which students are expected to relate postings to course content. Regarding student perceptions of the value of rubrics, one instructor said simply, "Students really like rubrics."

- For courses that teach dense, technical material, self-check quizzes can be an effective way to prompt students to complete the required reading and to help both students and instructors gauge students' understanding of the material. Most CMS platforms incorporate a mechanism for deploying such quizzes; instructors can experiment to see what features are available. Many platforms offer multiple options for generating automated feedback, either immediately after a student completes the quiz or at a later time or date.

- Make use of synchronous technologies, where appropriate. Many of the challenges instructors face when teaching online are the result of the distant, asynchronous nature of most online learning. Web conferencing and telephone conferencing can help "close the gap" that asynchronous communication introduces. This said, it is probably not realistic to expect that all students in an online class will be able to attend a virtual conference session at the same time each week, given that one of the major reasons students enroll in online courses is the convenience and flexibility of the asynchronous format. Moreover, students may be spread across multiple time zones. If weekly synchronous sessions are to be held, try scheduling them at different times. For example, a session could be held at noon one week and 5:00 p.m. the next. Record the session for the benefit of students who cannot attend. Student presentations may be done via web conferencing at the end of the semester. Several sessions can be organized, each at a different time, and students can be permitted to choose a time that works for them. Leveraging synchronous technologies like this may give rise to some of those "incidental opportunities" for communication that instructors say they miss.

- Explore the use of peer-assessment strategies to foster community development and give students chances to learn through analyzing and critiquing the work of others. Rubrics are a must for this kind of activity. Their explicit specification of target performance criteria and deconstruction of a large task into smaller subtasks provide helpful scaffolding to novice evaluators. Peer assessment works well for writing assignments that have interim deliverables. For project assignments in graduate courses that require students to perform some kind of authentic analysis within their own professional setting, students appreciate the opportunity to learn more about their peers' work settings. For this and other reasons, one instructor stressed, "Graduate students need to interact with one another."

- Look for appropriate opportunities to address the entire class so as to reduce the time spent giving the same feedback to multiple students. After a big assignment, post an announcement summarizing some of the trends in the submissions, along with recommendations for next steps. Maintain a "Q&A" discussion board to which students can post questions for everyone to see. Monitor the board regularly, but also urge students to assist one another when appropriate.
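To illustrate the recommendation on discussion rubrics above, the following is a minimal sketch of how a checklist-style rubric might be expressed in Python. It is not drawn from any instructor's practice in this study: the class names, the field names, and the threshold values (one initial post, two follow-up posts, activity on two separate days) are all hypothetical, and the judgment of whether a post relates to course content is assumed to be supplied by the instructor.

```python
from dataclasses import dataclass


@dataclass
class Post:
    day: int                     # day of the week the post was made (1-7)
    is_initial: bool             # True for an initial post, False for a follow-up
    relates_to_content: bool     # instructor's judgment, entered by hand


@dataclass
class DiscussionRubric:
    # Hypothetical thresholds; an instructor would set these per course.
    min_initial_posts: int = 1
    min_followup_posts: int = 2
    min_days_active: int = 2


def check_week(posts: list[Post], rubric: DiscussionRubric) -> dict[str, bool]:
    """Return a checklist showing which rubric criteria a student met this week."""
    initial = sum(1 for p in posts if p.is_initial)
    followup = sum(1 for p in posts if not p.is_initial)
    days_active = len({p.day for p in posts})
    return {
        "initial posts": initial >= rubric.min_initial_posts,
        "follow-up posts": followup >= rubric.min_followup_posts,
        "posting frequency": days_active >= rubric.min_days_active,
        # Met only if the student posted and every post was judged on topic.
        "relates to course content": bool(posts)
        and all(p.relates_to_content for p in posts),
    }


# Example: one initial post and two follow-ups across two days,
# one of which the instructor judged as unrelated to course content.
week = [Post(1, True, True), Post(3, False, True), Post(3, False, False)]
checklist = check_week(week, DiscussionRubric())
for criterion, met in checklist.items():
    print(f"{'[x]' if met else '[ ]'} {criterion}")
```

Sharing the same checklist with students ahead of time, as the recommendation suggests, lets them see the criteria against which their posts will be reviewed.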

Conclusion

This study was conducted in two phases. In Phase One, syllabi from 24 online courses were reviewed, with the goal of illuminating types of assessment used in the courses and gaining a sense for the level of contribution each type made to the overall course grade. In Phase Two, eight of the course instructors participated in either a focus group or a one-on-one interview to discuss assessment challenges they faced in moving their courses online and effective practices they developed to address those challenges.

Data gathered in Phase One resulted in the identification of five categories of assessment: (1) written assignments; (2) online discussion; (3) fieldwork; (4) tests/quizzes/exams; and (5) presentations. The richer qualitative data collected in Phase Two were thematically analyzed in order to identify categories of challenge. To summarize, online instructors face assessment challenges of two types. Firstly, in order to advance student learning, they must: (1) collect data to serve as evidence to inform assessment judgments; and (2) provide feedback to students based on those judgments. These are imperatives that point to activities instructors are required to perform, and they ran like threads through all of the instructor interviews and focus-group discussions. Secondly, in enacting these imperatives, online instructors are challenged by constraints resulting from: (1) the physical distance between themselves and their students; (2) the need for them to depend on the capabilities of technological tools for communication purposes; and (3) the demands of their workload.

Because this study was carried out with a small sample of courses and instructors within a single institution, it is not appropriate to attempt to generalize its findings to all online courses. Nevertheless, as an exploratory study, it does shine some light on the areas of concern that instructors have as they move into online teaching, together with some of the strategies they have found to be helpful in dealing with challenges they encounter. Moreover, the method itself proved to be an effective way to ignite a meaningful conversation with the instructors about their concerns. Having the Phase-One data as a starting point was useful in establishing a context for the Phase-Two discussions.

This research suggests several directions for future work. It is clear that instructors have concerns about student assessment in the online environment. They worry about monitoring their students' progress and understanding, and about providing actionable feedback, under the constraints of being geographically separated from their students, needing to use technology to communicate with their students, and managing their time effectively. Each of these areas would benefit from further exploration. In addition, because online discussion is so frequently relied upon as part of an assessment strategy in online learning, more work on how to use it to address some of the challenges identified in this paper would likely be beneficial. Finally, an examination of instructors' thinking and decision making about assessment, including the process by which they evaluate the effectiveness of their assessments, would also be worthwhile.

References

Allen, I. E., & Seaman, J. (2010). Class differences: Online education in the United States, 2010. Babson Park, MA: Babson Survey Research Group. Retrieved from http://www.sloanconsortium.org/sites/default/files/class_differences.pdf

Arend, B. (2007). Course assessment practices and student learning strategies in online courses. Journal of Asynchronous Learning Networks, 11(4), 3-13. Retrieved from http://www.sloanconsortium.org/sites/default/files/v11n4_arend_0.pdf

Baker, D. L. (2011). Designing and orchestrating online discussions. MERLOT Journal of Online Learning and Teaching, 7(3), 401-411. Retrieved from https://jolt.merlot.org/vol7no3/baker_0911.htm

Beebe, R., Vonderwell, S., & Boboc, M. (2010). Emerging patterns in transferring assessment practices from F2F to online environments. Electronic Journal of e-Learning, 8(1), 1-12. Retrieved from http://www.ejel.org/issue/download.html?idArticle=157

Biglan, A. (1973). The characteristics of subject matter in different academic areas. Journal of Applied Psychology, 57(3), 195-203. doi:10.1037/h0034701

Bloxham, S., & Boyd, P. (2007). Developing effective assessment in higher education: A practical guide. Maidenhead, UK: Open University Press.

Booth, R., Clayton, B., Hartcher, R., Hungar, S., Hyde, P., & Wilson, P. (2003). The development of quality online assessment in vocational education and training: Vol. 1. Adelaide, Australia: Australian National Training Authority. Retrieved from http://www.ncver.edu.au/research/proj/nr1F02_1.pdf

Boulos, M. N. K., Taylor, A. D., & Breton, A. (2005). A synchronous communication experiment within an online distance learning program: A case study. Telemedicine and e-Health, 11(5), 583-593. doi:10.1089/tmj.2005.11.583

Gaytan, J., & McEwen, B. C. (2007). Effective online instructional and assessment strategies. The American Journal of Distance Education, 21(3), 117-132. doi:10.1080/08923640701341653

Gibbs, G., & Simpson, C. (2004-2005). Conditions under which assessment supports students' learning. Learning and Teaching in Higher Education, 1, 3-31. Retrieved from http://www2.glos.ac.uk/offload/tli/lets/lathe/issue1/articles/simpson.pdf

Gilbert, P. K., & Dabbagh, N. (2005). How to structure online discussions for meaningful discourse: A case study. British Journal of Educational Technology, 36(1), 5-18. doi:10.1111/j.1467-8535.2005.00434.x

Hannafin, M., Oliver, K., Hill, J. R., Glazer, E., & Sharma, P. (2003). Cognitive and learning factors in web-based distance learning environments. In M. G. Moore & W. G. Anderson (Eds.), Handbook of distance education (pp. 245-260). Mahwah, NJ: Erlbaum.

Joughin, G. (2010). The hidden curriculum revisited: A critical review of research into the influence of summative assessment on learning. Assessment and Evaluation in Higher Education, 35(3), 335-345. doi:10.1080/02602930903221493

Kennedy, K., Nowak, S., Raghuraman, R., Thomas, J., & Davis, S. F. (2000). Academic dishonesty and distance learning: Student and faculty views. College Student Journal, 34(2), 309-314.

Kerka, S., & Wonacott, M. E. (2000). Assessing learners online: Practitioner file. Columbus, OH: ERIC Clearinghouse on Adult, Career, and Vocational Education. Retrieved from ERIC database. (ED448285)

Kim, N., Smith, M. J., & Maeng, K. (2008). Assessment in online distance education: A comparison of three online programs at a university. Online Journal of Distance Learning Administration, 11(1). Retrieved from http://www.westga.edu/~distance/ojdla/spring111/kim111.html

Meyer, K. A. (2006). The method (and madness) of evaluating online discussions. Journal of Asynchronous Learning Networks, 10(4), 83-97. Retrieved from http://www.sloanconsortium.org/sites/default/files/v10n4_meyer1_0.pdf

Muilenburg, L., & Berge, Z. L. (2000). A framework for designing questions for online learning. DEOSNEWS, 10(2). Retrieved from http://www.iddl.vt.edu/fdi/old/2000/frame.html

Naismith, L., Lee, B.-H., & Pilkington, R. M. (2011). Collaborative learning with a wiki: Differences in perceived usefulness in two contexts of use. Journal of Computer Assisted Learning, 27(3), 228-242. doi:10.1111/j.1365-2729.2010.00393.x

Neumann, R., Parry, S., & Becher, T. (2002). Teaching and learning in their disciplinary contexts: A conceptual analysis. Studies in Higher Education, 27(4), 405-417. doi:10.1080/0307507022000011525

Oncu, S., & Cakir, H. (2011). Research in online learning environments: Priorities and methodologies. Computers & Education, 57(1), 1098-1108. doi:10.1016/j.compedu.2010.12.009

Robles, M., & Braathen, S. (2002). Online assessment techniques. Delta Pi Epsilon Journal, 44(1), 39-49.

Simonson, M., Smaldino, S. E., Albright, M., & Zvacek, S. (2006). Teaching and learning at a distance: Foundations of distance education (3rd ed.). Upper Saddle River, NJ: Merrill/Prentice Hall.

Snyder, B. R. (1971). The hidden curriculum. New York, NY: Alfred A. Knopf.

Suskie, L. (2009). Assessing student learning: A common sense guide (2nd ed.). San Francisco, CA: Jossey-Bass.

Swan, K. (2001). Virtual interaction: Design factors affecting student satisfaction and perceived learning in asynchronous online courses. Distance Education, 22(2), 306-331. doi:10.1080/0158791010220208

Transforming Assessment. (2011). Marking and grading software. Retrieved from http://www.transformingassessment.net/moodle/course/view.php?id=38

Vonderwell, S., Liang, X., & Alderman, K. (2007). Asynchronous discussion and assessment in online learning. Journal of Research on Technology in Education, 39(3), 309-328. Retrieved from ERIC database. (EJ768879)

Walsh, P. (2009). Global trends in higher education, adult and distance learning. Oslo, Norway: International Council for Open and Distance Education. Retrieved from http://www.icde.org/filestore/Resources/Reports/FINALICDEENVIRNOMENTALSCAN05.02.pdf

Yang, Y. F., & Tsai, C.-C. (2010). Conceptions of and approaches to learning through online peer assessment. Learning and Instruction, 20(1), 72-83. doi:10.1016/j.learninstruc.2009.01.003