Introduction

Computer-mediated learning has become ubiquitous in education, and has emerged as an effective, efficient method of disseminating information, with outcomes comparable to those of classroom learning (Swan, 2003; Davis, Chryssafidou, Zamora, Davies, Khan, & Coomarasamy, 2007; Cook, Levinson, Garside, Dupras, Erwin, & Montori, 2008). Asynchronous online learning has been readily adopted by health care professions in part due to the convenience of 24/7 access, and studies have found it to be a viable teaching solution for medical students (Schilling, Wiecha, Polineni, & Khalil, 2006; Kulier et al., 2008), physicians (Davis et al., 2007), and nurses (Hart et al., 2008). Cook (2005) has stated that additional research comparing computer-based learning to classroom learning is futile due to confounding variables. Therefore, he strongly advocates for future research into the most effective way to deliver online content by comparing various computer-mediated methods. Furthermore, he advises researchers to carefully compare similar instructional design elements. For example, researchers might compare instructional methods (case-based versus didactic) or presentation delivery (screen layout versus multimedia), but should not confound the study by mixing too many elements.

Other researchers in online instruction have indicated that a key element in measuring the effectiveness of online instruction is student satisfaction. In other words, if students like the content delivery method, they will be more likely to engage and learn. Therefore, educators need to consider student preference in course design (Chiu, Sun, Sun, & Ju, 2007; Palmer & Holt, 2009). Following this line of inquiry, Cuthrell and Lyon (2007) compared student preference for various online strategies, including interactive slide presentations, group discussion, audio files, and text-based readings. The researchers found their graduate students liked a variety of delivery methods, but preferred those that allowed them to work independently rather than in groups.

Working alone online without group interaction is not always a desirable or feasible option, but the computer/student interaction does constitute one element in a triad of themes identified by Swan (2003), with the other two themes being student/instructor and student/student interactions. When considering computer/student interaction, elements such as clarity of design, student control, and immediacy of feedback have been identified as focus areas when designing a course (Swan, 2003).

Research findings such as these are vital for faculty who teach online, yet also pose a challenge as more options become available for web-based delivery of content. Which software programs and techniques are worth investing in, both from a dollar and time perspective? Is newer better? Does more functionality make a difference, or is it just bells and whistles? These are some of the questions asked by the faculty in a graduate college of health sciences.

In order to gain insight into these questions, a case study was devised using an existing online tutorial for graduate health care students. This tutorial was designed using a basic text model, and had been offered online for two years to a variety of students, with each student working in a self-paced and independent manner. The tutorial had been shown to be effective in delivering educational content by pre/post test measures (York & Stumbo, 2007). The authors wanted to compare two methods of online delivery, a new interactive multimedia format versus a more traditional text-based PDF format, to see if students preferred one over the other. Following the type of research advocated by Cook (2005), many of the variables would be controlled (content, time frame, independent student tutorial completion), with only the format being changed between the two groups. This would allow a focused question to be answered: Which format did the students prefer?

The selected content area was the teaching of evidence-based practice principles to doctor of physical therapy (DPT) students. Evidence-based practice (EBP) is defined as the conscientious use of current best evidence combined with clinical expertise and individual patient preferences (Strauss, Richardson, Glasziou, & Haynes, 2005). EBP is considered an essential aspect of health care education today (Guyatt & Rennie, 2002). It is also considered an essential element of life-long learning and professional development for health care practitioners (Guyatt & Rennie, 2002; Shaneyfelt et al., 2006). Therefore, the authors proposed that an asynchronous, online tutorial would allow physical therapy students both on campus and on clinical internships to access and review EBP materials related to actual patients on their caseload. Students could return to the tutorial as often as needed throughout their education when new patient care challenges arose. The authors further posited that finding a delivery method that students preferred might improve tutorial usage rates (Chiu et al., 2007). Furthermore, if successful, the tutorial could also be adapted to allow schools to provide clinical instructors in remote locations with up-to-date information in EBP.

For the current study, the authors utilized a text EBP tutorial that had previously been developed for an online EBP pilot study with DPT students (York & Stumbo, 2007). Results of the pilot study indicated the tutorial was successful in effectively teaching EBP principles with student outcomes being demonstrated by a pre/post test using the validated Fresno test of competence in evidence-based medicine (Ramos, Schafer, & Tracz, 2003). The tutorial content was then adapted into two different delivery methods: (1) enhanced, interactive modules with learner-controlled navigation using Articulate® software, and (2) PDF-text formatted with Adobe® software using color, column layouts, and graphics.

An interesting insight into online learning was revealed by the results of a Beta test of the updated tutorial the authors conducted with first year students in a master of physician assistant (PA) program at the same school. While the results indicated students could successfully learn the materials from either format, most (78%) would have preferred a live lecture to the online format (York, Nordengren, & Stumbo, 2008). This may be attributed to the fact that the PA students received the majority of their educational content in lecture format, and this was their first completely online learning experience. The authors were curious to see if this was also the case with DPT students who had more extensive online experience.

Therefore, the aims of this study were: (1) to identify which format students preferred for online delivery of EBP materials, and (2) to demonstrate that successful learning outcomes would be achieved by either group.

The authors hypothesized that: (1) Students would report preference of the interactive modules by 30% or more. This was based on the fact that the students in this study were experienced both with computer-mediated learning and active learning strategies (e.g., hands-on labs, discussion groups, projects) and would prefer this to more passive learning (e.g., reading a text file). This method would also give students more control and provide feedback. (2) Students would demonstrate successful EBP content acquisition by scoring an average of 40% higher on post-test than on pre-test no matter which module format was used. This second hypothesis was based on the fact that an early version of the online tutorial was successful in promoting learning while using a very basic text model without graphics. Furthermore, measuring pre/post test improvement for both groups provided a check to be sure no student or group was shortchanged by the assigned module format. If a significant difference in test scores between groups was detected, then remedial work would have been provided.

Methods

Subjects

A first year class of DPT students at a Midwestern osteopathic medical school was given the opportunity to enroll in the study, and all eligible students chose to participate (N=50). There was no compensation or coercion, and students were not required to participate. IRB approval and informed consent were obtained prior to the study. Subjects were randomly assigned to two groups using a random number table, with assignment determined by even/odd numbers.
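The even/odd assignment described above can be sketched in a few lines of Python. This is an illustration only, not the authors' procedure: the function name `assign_groups`, the seed, and the use of a pseudo-random generator in place of a printed random number table are all assumptions. Note that a plain even/odd rule does not by itself guarantee equal group sizes; the source does not say how the 25/25 split was reached.

```python
import random


def assign_groups(student_ids, seed=None):
    """Assign each student to Group A (even draw) or Group B (odd draw).

    Hypothetical helper: a seeded pseudo-random generator stands in for
    reading successive entries from a random number table.
    """
    rng = random.Random(seed)
    groups = {"A": [], "B": []}
    for student_id in student_ids:
        draw = rng.randint(0, 99)  # stand-in for one two-digit table entry
        groups["A" if draw % 2 == 0 else "B"].append(student_id)
    return groups


# 50 placeholder student IDs; the seed value is arbitrary.
groups = assign_groups(range(1, 51), seed=2008)
print(len(groups["A"]), len(groups["B"]))
```

If balanced groups are required, a common alternative is to shuffle the list of IDs and split it in half, which is a different technique from the even/odd rule sketched here.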

There was no significant difference in gender between the two groups (p=.92). A chi-square goodness-of-fit test revealed there was no significant difference between the gender of this subject sample and the gender breakdown of members of the American Physical Therapy Association (p=.92). The mean age of Group A was 23.7 years (17 females and 8 males) and the mean age of Group B was 23 (16 females and 9 males). There was no significant difference between the age of the two groups (p=.77). Additional demographic information is presented in Table 1.

Table 1. Subject Demographics

| | Group A | Group B | Total | p value |
| --- | --- | --- | --- | --- |
| Females | 17 | 16 | 33 | |
| Males | 8 | 9 | 17 | |
| Total | 25 | 25 | 50 | p = .92 |
| Median Age | 23.7 | 23.6 | 23.66 | |
| SD | 1.88 | 1.12 | 1.53 | p = .77 |


Tutorial

Educational content was created using respected and peer-reviewed EBP sources (Guyatt & Rennie, 2002; Strauss et al., 2005). The learning flow was designed by content experts (AY, TS), and tutorial design was created by an educational technologist (FN). As noted above, the course content had been shown to be effective in a basic text format (York & Stumbo, 2007). The same content was then used to create two types of presentations.

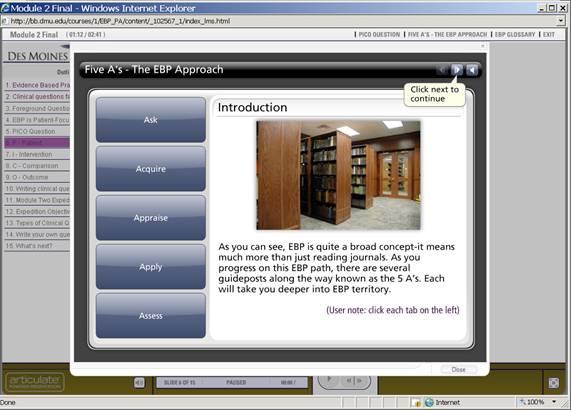

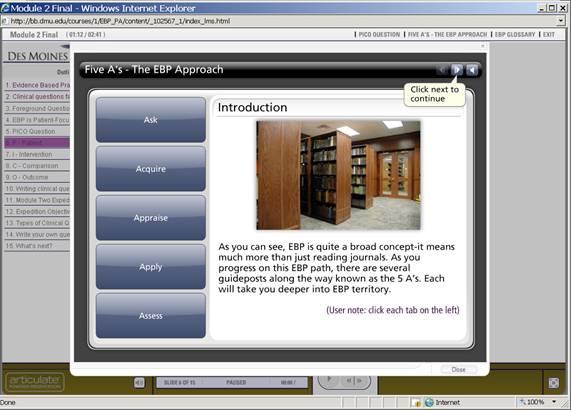

The first format consisted of interactive slides created using Articulate® software. Material was embedded in multiple layers in the slides. This format required the student to gain access to essential learning content by clicking and interacting with the graphics and links. The intent in this design was to maintain learner engagement through the use of multi-layered navigation to additional learning resources. As opposed to traditional online slide presentations, this delivery balanced both single screen and multi-layer screen presentation of content. This format also contained music and auditory feedback during interaction with content. Students could not move on to the next screen until the previous one had been completed. This content could not be printed out. An example is shown in Figure 1.

Figure 1. Screen shot of interactive slide from Module 1.

Interactive modules required active participation and navigation to explore the content, in this case by clicking each of the blue buttons on the left of the slide. Each click would take the student to a new screen. Students had to interact with content on all screens before they could progress. These screens could not be printed.

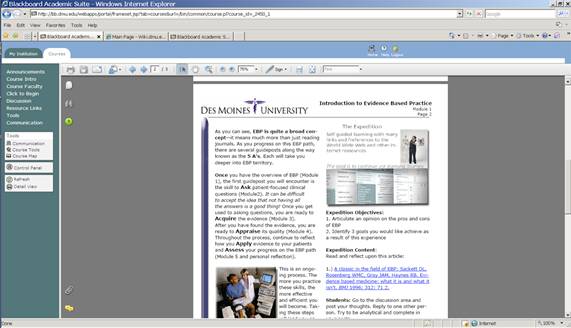

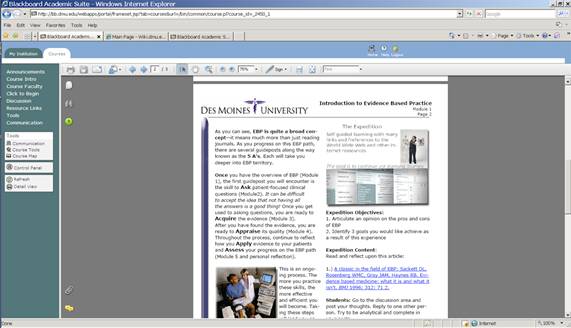

The second format consisted of taking the same text and photos used for the interactive modules, and placing them in an attractive PDF text format with color and graphics. The key differences were that the text documents could not be viewed on one screen without scrolling, there was no feedback or music, there was no interaction or completion required prior to the next slide, and these documents could be printed out. See Figure 2 for an example of a PDF text screen shot.

Figure 2. Screen shot of PDF text slide from Module 1.

Text documents required the students to scroll down to see the entire page. There were hyperlinks, but no other interactive options. These pages could be printed out.

The tutorial was presented using Blackboard® (version 7.1) course management software. The five modules were designed to teach the five basic steps of EBP in a systematic way. The modules and learning objectives are presented in Table 2.

A pre-course survey and a pre-test covering EBP content preceded the tutorial. The first module introduced the major components and basic steps of EBP. The second module taught students how to understand background and foreground questions, and how to create well-formed clinical questions based on clinical scenarios. The third module presented strategies for searching for evidence by locating appropriate databases and conducting library searches efficiently. The fourth module presented how to quickly screen and appraise a journal article, including a preliminary assessment of the validity and importance of the findings. The fifth module discussed patient applications, and allowed for student reflection on the EBP process. This was followed by the cumulative post-test and post-course survey. Table 3 shows the format order in which each group was presented the modules.

Also included in both formats of the tutorial were two videos from a medical librarian on database searching, Internet resource hyperlinks, and practice quizzes for Modules 1-4. Students were able to take the quizzes as many times as necessary to achieve a satisfactory score of 80%. Each quiz could be saved and returned to at a later time. The same supplemental elements were available to students in both groups, and they could access them at any time.

The tutorial was self-paced, was to be completed independently, and could be accessed at any time on or off campus within a designated four week time frame. Each module took approximately one to three hours to complete. Students could repeat any module, or completely skip a module if they so desired.

Data collection

To answer the primary study question, i.e., student preference of delivery format, the method of data collection used was a qualitative pre/post survey design. The surveys were administered through Blackboard®. The entry survey collected demographic information; the exit survey asked time spent on modules and preference for media delivery formats. Upon completion of the surveys, the Blackboard® software calculated response rates and collated answer results. The survey results were not linked with student names, although the researchers were able to see how many completed the surveys.

To address the secondary aim of the study, i.e., confirmation of content mastery, the method of data collection used was comparison of scores on a pre/post test. These test questions were based on a modified Fresno test of competence in evidence-based medicine, which has been shown to be a valid and reliable instrument (Ramos, Schafer, & Tracz, 2003). The authors were able to see student test scores, but they were de-identified and aggregated for data analysis.

Table 2. EBM Tutorial Learning Objectives

| Module | Content | Objectives |
| --- | --- | --- |
| 1 | EBM Overview | 1. Define Evidence Based Practice (EBP); 2. List the 3 major components of EBP; 3. State why learning about EBP is important in today's health care arena; 4. Understand the basic steps involved in pursuing EBP. |
| 2 | Asking clinical questions | Module 2, Part 1: Formulating a Clinical Question: 1. Define the difference between background and foreground questions; 2. Identify the components of a well-built clinical question; 3. Create a well-formed clinical question based on a clinical scenario. Module 2, Part 2: Types of Clinical Questions: Differentiate and construct clinical questions about: a. Intervention; b. Diagnosis; c. Prognosis; d. Etiology/harm. |
| 3 | Searching for Evidence | Module 3, Part 1: Types of Evidence: 1. Complete the levels of the evidence pyramid; 2. Define and identify types of studies shown on the pyramid; 3. State which type of research is best to answer each type of clinical question. Module 3, Part 2: Searching for the Evidence: 1. Create a search strategy for researching clinical questions; 2. Identify databases that would be most appropriate for different types of questions; 3. Conduct a search for a clinical scenario. |
| 4 | Appraising the Evidence | Module 4, Part 1: Screening: 1. Read the abstract and ask several basic screening questions; 2. Answer the question: Is this article worth my time? Module 4, Part 2: Appraising a Therapy Article: 1. Determine the validity of the study; 2. Estimate the importance of the findings. Module 4, Part 3: Appraising a Diagnosis Article: 1. Determine the validity of the study; 2. Estimate the importance of the findings. |
| 5 | Applying to patient; assessing outcome | 1. Determine if the findings are applicable to your patient(s). |


Table 3. Order of Module Format Delivery

| Module | Group A | Group B |
| --- | --- | --- |
| 1 | Interactive | Text |
| 2 | Text | Interactive |
| 3 | Interactive | Text |
| 4 | Text | Interactive |
| 5 | Choice | Choice |


Results and Discussion

The primary question of the study, which delivery format was preferred, was addressed through the survey, which had a response rate of 100%. The survey results reveal that students preferred to have both formats available (Group A - 48%; Group B - 44%) rather than interactive alone (Group A - 24%; Group B - 32%) or text alone (Group A - 16%; Group B - 4%). This was substantiated by the students' actual choice of format for Module 5. The majority of students (Group A - 77%; Group B - 80%) accessed both formats, while only a small number (Group A - 8%; Group B - 4%) accessed interactive alone, and none accessed text alone. It should be noted that three students in each group (12%) accessed neither format. Results are summarized in Table 4. Comparing the two groups using a two-sample t-test, there was no significant difference (p>.05) for any category. Therefore, our first hypothesis, that students would report a preference for the enhanced interactive modules by 30% or more, was not upheld; in fact, students preferred having both formats available and actually used them both.

Table 4. Preference of Delivery Format

| Delivery Method | Group A Reported* | Group A Actual** | Group B Reported* | Group B Actual** |
| --- | --- | --- | --- | --- |
| Interactive only | 24% (6) | 8% (2) | 32% (8) | 4% (1) |
| Text only | 16% (4) | 0 | 4% (2) | 0 |
| Both Interactive & Text | 48% (12) | 77% (20) | 44% (11) | 80% (21) |
| Face to face lecture only | 4% (1) | n/a | 4% (1) | n/a |
| Blended Lecture/Web | 8% (2) | n/a | 16% (4) | n/a |


*Reported results obtained from post-tutorial survey asking student delivery preference.

**Actual results based on the delivery choice(s) the students made for Module 5. Three students (12%) in each section did not complete either interactive or text.

Note: Face to face lecture and blended format questions were asked in the survey to determine interest, but were not available options for Module 5 choice.

This is consistent with other research findings indicating students prefer a variety of formats (Cuthrell & Lyon, 2007), both interactive and passive, even though they prefer to work alone. An interesting point here is that the majority of students indicated they liked the online format, and few reported wishing they had a live lecture (4%) or blended format (8-16%). This is different from a similar study conducted with PA students, where 78% would have preferred a live lecture instead of online modules (York, Nordengren, & Stumbo, 2008). This may be related to the fact that the PA students were relatively inexperienced with online learning compared to the DPT students, and received most of their educational content through a passive lecture format. These findings suggest that assessing students' readiness to learn online would be an important prerequisite to presenting them with an asynchronous online learning experience. In other words, more study is needed regarding when to provide online learning (Cook et al., 2008).

Our secondary hypothesis that students would demonstrate successful EBP content acquisition by improving pre/post test scores by 40% was upheld as shown in Table 5. Both groups demonstrated significant improvement between pre/post test scores (p<.001) and there was no significant difference between groups. It is interesting to note all students passed the final examination even though they had been presented with approximately 50% of the material in one format or the other, and not both. This would imply that even if a student was presented with a text module (not the preferred format) they were able to obtain educational advantage from the experience.
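As a quick arithmetic check of the 40% threshold, the sketch below computes the relative gain from the mean scores reported in Table 5. It assumes that "mean increase" means the post-test gain expressed as a percentage of the pre-test mean, which is consistent with the 50.3% and 47.5% figures in the table; the function and variable names are illustrative only.

```python
def relative_increase(pre_mean, post_mean):
    """Return the post-test gain as a percentage of the pre-test mean."""
    return (post_mean - pre_mean) / pre_mean * 100


# Mean scores (out of 150) as reported in Table 5: (pre-test, post-test).
table5_means = {"Group A": (95.0, 142.8), "Group B": (96.0, 141.6)}
THRESHOLD = 40.0  # hypothesized minimum improvement, in percent

for group, (pre, post) in table5_means.items():
    gain = relative_increase(pre, post)
    print(f"{group}: {gain:.1f}% increase, meets threshold: {gain >= THRESHOLD}")
```

Under this reading, both groups exceed the hypothesized 40% improvement.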

Table 5: Pre/Post Test Scores

| | Group A | Group B | t-test comparison between groups |
| --- | --- | --- | --- |
| Pre-Test Mean Scores | 95/150 (63.3%) | 96/150 (64%) | p = .867 |
| Standard Deviation | 16.93 | 24.33 | |
| Post-Test Mean Scores | 142.8/150 (95%) | 141.6/150 (94.4%) | p = .686 |
| Standard Deviation | 8.73 | 11.89 | |
| Mean Increase in Scores | 50.3% | 47.5% | |
| t-test of change scores | p < .001 | p < .001 | |

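The between-group comparisons in Table 5 can be approximated from the summary statistics alone. The sketch below assumes an independent two-sample t-test with pooled variance and 25 students per group; the source does not state which t-test variant or software was used, so this is one plausible reconstruction rather than the authors' analysis. The p-value is obtained by numerically integrating the t density with Simpson's rule to keep the example free of third-party libraries.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value: 1 minus the area under the density on [-|t|, |t|]."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1 - 2 * (total * h / 3)


def pooled_t_test(mean1, sd1, n1, mean2, sd2, n2):
    """Pooled-variance two-sample t-test from summary statistics.

    Returns (t statistic, degrees of freedom, two-sided p-value).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    t = (mean1 - mean2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df, two_sided_p(t, df)


# Means and standard deviations from Table 5; n = 25 per group is assumed.
pre = pooled_t_test(95.0, 16.93, 25, 96.0, 24.33, 25)
post = pooled_t_test(142.8, 8.73, 25, 141.6, 11.89, 25)
print(f"pre-test:  t = {pre[0]:.3f}, df = {pre[1]}, p = {pre[2]:.3f}")
print(f"post-test: t = {post[0]:.3f}, df = {post[1]}, p = {post[2]:.3f}")
```

Under these assumptions the computed p-values come out close to the reported .867 and .686, which supports the reading that the groups did not differ at either time point.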

An important additional finding was that while students reported they wanted the PDF text format, and accessed this text format online, 68% reported never printing them out, and only 10% always printed them. This would suggest that the selection of the text format was usually not based on the desire to print the documents. Therefore, using a landscape format (rather than the typical portrait layout) for the text modules would make better use of the screen “real estate” and make them easier to read online.

Limitations

This case study was limited to a small sample from a predominantly young and female DPT class at a Midwestern school, so generalizing to other health professionals, geographic areas, or age/gender distributions would be difficult. Also, while self-report and qualitative surveys may provide insight, they are not definitive and cannot show causation. Finally, there was no follow-up test or survey administered after a period of time had elapsed to check for knowledge retention or application.

Based on the findings that the majority of students preferred having both formats, additional studies could proceed from this point. For example, both groups could be offered content in both formats, then additional online design elements such as podcasts or video streaming could be added as independent variables. Preference and learning outcomes between groups could be compared and contrasted.

Conclusion

Students prefer having a choice of delivery options, and they may use all, some, or none of them. Furthermore, even when students preferred a particular type of instruction, they were able to demonstrate content mastery when using a less preferred format. This seems to support previous studies that indicate students prefer a variety of formats. It is important for educators to understand that, just as in the classroom, online learning preferences vary. Allowing choice and flexibility may increase student satisfaction and ultimately learning outcomes.

Acknowledgements

Funding was provided through an Iowa Osteopathic Educational Resources grant. Des Moines University Institutional Review Board approval was obtained. We would like to acknowledge the significant contributions of Rebecca Hines, MLS, and Ashley Jarek, research assistant. We would like to extend our appreciation to Traci Bush, P.T., O.T./R., D.Sc., and Juanita Robel, M.S., P.T., at Des Moines University for allowing us to work with the DPT students.