MERLOT Journal of Online Learning and Teaching, Vol. 6, No. 2, June 2010

Systematic Improvement of Web-based Learning: A Structured Approach Using a Course Improvement Framework

Liz Romero

Fischler School of Education and Human Services

Nova Southeastern University

North Miami Beach, FL 33162 USA

lisbia@nova.edu

Noela A. Haughton

Judith Herb College of Education

University of Toledo, Toledo, OH 43606 USA

Noela.Haughton@utoledo.edu


Abstract

The Course Improvement Matrix was designed to provide a structured approach for online instructors – critical but sometimes marginalized stakeholders – to become more involved in the continuous improvement of online courses. This paper describes the development of this tool and its application at an online university. An online instructor successfully spearheaded the improvement of an undergraduate course by using the tool to identify instructional issues based on student feedback, examine the course content, and propose theoretically sound prescriptions for solving these instructional design issues. The authors propose that the use of the Course Improvement Matrix and similar tools can help online institutions to leverage the knowledge and potential contribution of part-time instructors to support the design team’s effort to maintain online course quality.

Keywords: Online instructors, part-time instructors, course quality, online learning, higher education


Background

Distance education (e-Learning) opportunities in higher education have exploded over the last two decades. While it is clear that unprecedented growth has taken place, the exact level is unknown (Sprague, Maddux, Ferdig & Albion, 2007). According to the US Department of Education, in 2000-01, 56% of all degree-granting institutions offered distance courses to approximately 3 million students. Allen and Seaman (2007) reported that higher education institutions taught nearly 3.2 million students online in the fall 2005 semester, which represented an approximately 35% growth over the previous year. These authors also reported that approximately two-thirds of higher education institutions had some type of online course or program offering, indicating that growth is occurring across institution size and type. This growth is not limited to the United States. Debeb (2001), as cited in Sprague et al. (2007), estimated that in 2001, 90 million learners were enrolled in 986 institutions in 107 countries, and that by 2025, the number of distance education learners could reach 120 million.

The expansion of e-learning has given rise to virtual universities as realistic and viable competitive options to the traditional brick-and-mortar institutions. American online institutions such as Walden University (www.walden.edu), Capella University (www.capella.edu), and Argosy University (www.argosy.edu) are regionally accredited institutions that offer degree programs ranging from the baccalaureate to doctorate level, each enrolling thousands of students worldwide. Similar institutions exist in other countries, such as the Open University in the United Kingdom (http://www.open.ac.uk/) and Athabasca University (www.athabasca.ca) in Canada, both of which offer comprehensive programs of study at the undergraduate and graduate levels. It is likely that this increasingly worldwide competition for students will continue to influence the growth of e-Learning. It is also reasonable to expect that distance education will continue to expand in reach and evolve in method (m-learning) as a viable higher education option as technology becomes faster, cheaper, and better.

“Institutions of higher education have increasingly embraced online education, and the number of students enrolled in distance programs is rapidly rising in colleges and universities throughout the United States” (Kim & Bonk, 2006). This increase in enrollment and e-Learning opportunities will continue to impact the teaching and learning environment. Higher education and other distance education providers, as part of their strategic plans, continue to invest in and evolve with new technologies. This includes the development of new programs and re-development of existing courses and programs to leverage emergent software (podcasting, wikis, blogs, virtual worlds such as Second Life, etc.) and hardware (smart phones, personal digital assistants, cell phones, etc.) technologies into their online environments (Jeong & Hmelo-Silver, 2010; Nakamura, Murphy, Juma, Rebello, & Zollman, 2009). An accompanying impact is the growing reliance on part-time faculty, including part-time online instructors, to support the instructional mission of both online and traditional institutions (Allen & Dorn, 2008; Gerrain, 2004; Patrick & Yick, 2005; Spector, 2005).

The increasing number of e-Learning programs has sparked concerns about the quality of the instruction (Yang & Cornelious, 2005). In order to attract new students and retain existing ones, institutions need to ensure comparable quality between online and face-to-face offerings (Allen & Seaman, 2007). Therefore, the periodic revision, update, and maintenance of online courses are becoming increasingly important. As part of content delivery teams, instructional design (ID) professionals are challenged to evolve with the field and to assure sound professional design practice in leading the e-Learning enterprise (Moller, Forshay & Huett, 2008).

The maintenance of face-to-face courses is a continuous process typically within full-time faculty workload. Accordingly, there is freedom to develop and update in accordance with the demands of the instructional situation and context. This includes the ability to respond to course quality issues as they arise, especially in the face-to-face context. The direct and instantaneous verbal and non-verbal exchanges between teacher and students that are present in face-to-face learning situations (Avgerinou & Andersson, 2007) facilitate the continuous improvement process. This type of informal feedback is supported by the post-course formal feedback typically gathered through course evaluation questionnaires. This course evaluation process is the major process used by higher education institutions to seek feedback from stakeholders (Kember & Leung, 2008). This source of feedback data informs faculty about possible course modification decisions for the next teaching period. However, this model largely does not hold for part-time instructors, who are mostly at-will, contingent employees with minimum control and involvement beyond teaching duties (Reeves & Reeves, 2008). This reality is, to some extent, exacerbated by the structure of the instructional support in the online context.

The revision process at online institutions may have additional complexity because of the structure of the development team. In many cases, the development team exercises considerable control and is typically composed of instructional designers, subject matter experts, and media producers (Hodges, 2006; Koller, Frankenfield & Sarley, 2000; Parrish, 2009). They design and develop the instructional components such as content presentations, activities, and the nature of the interaction between the content and stakeholders, including the instructors (Koszalka & Ganesan, 2004). Consequently, formal feedback data from students, usually gathered by means of questionnaires (Young & Norgard, 2006), are passed directly to the development team, who subsequently propose and implement changes to the instruction (Oliver, 2000). The process largely bypasses the instructor, whose part-time status (Ruth, Sammons & Poulin, 2007) minimizes control over instruction (Ascough, 2002) and thus leads to exclusion from the revision and modification decisions and, to some degree, the feedback loop. Therefore, it is fair to say that the role of the part-time online instructor is largely to facilitate (Reeves & Reeves, 2008) instructional content and learning activities that they did not develop (Smith & Mitry, 2008). Despite this facilitator role and minimal involvement within the course improvement process, the online instructor is still the primary contact for students in online courses. This reality creates involvement and expertise with the online context by default.

Some institutions overlook the fact that, beyond being facilitators, online instructors are the ones who interact and communicate with students (Huckstadt & Hayes, 2005). Through normal instructor-student interaction, instructors, regardless of employment status, are also recipients of other sources of course quality feedback – formal and informal – from students. The importance of online instructors in online course quality (Avgerinou & Andersson, 2007) and in supporting the online learning process (Young & Norgard, 2006) has been recognized. They are also the ones who deliver the course to the end-users, making them the common link between the course, the students, and the development team, and thus ultimately, the institution. Online instructors have the privilege of receiving feedback from the learning process (Chen & Chuang, 2008), and therefore have the potential to play a critical role in online course quality. It can therefore be said that the part-time instructor controls a critical and perhaps missing element of the course quality process: the online perspective. Thus, the challenge for any university offering online options supported by part-time instructors is to proactively recognize and constructively leverage this critical perspective such that the facilitator role extends to wider participation in course improvement. In other words, as posited by Huckstadt and Hayes (2005), the maintenance of online courses would become a part of the instructors’ workload.

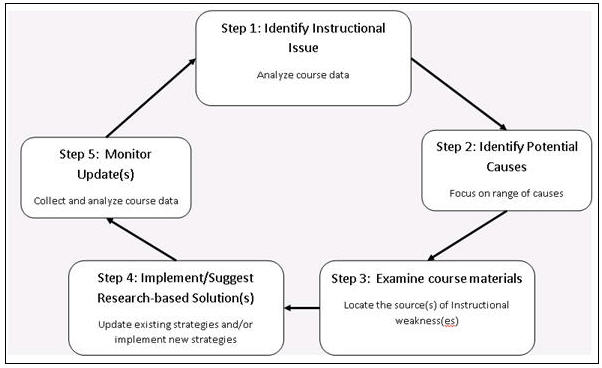

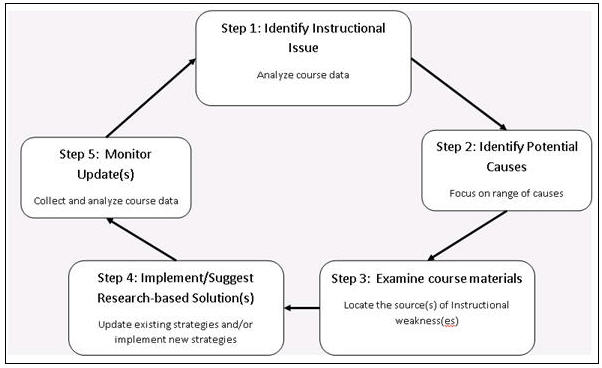

This paper describes the development and application of a tool – the Course Improvement Matrix (CIM) – that facilitates the involvement of online instructors in the course improvement feedback loop process. The CIM is based on the Online Course Improvement Model (Figure 1), a feedback loop process that supports instructor involvement in systematically: 1) identifying course-related issues using student data; 2) identifying potential causes of the identified issue(s); 3) examining the instructional situation (materials, activities, sequencing, etc.) to locate the source of weakness(es); 4) proposing research-based solutions that will address the identified source(s); and 5) collecting and analyzing student feedback and other data to monitor the effectiveness of course updates to ensure that weaknesses have been addressed.

Figure 1. Online Course Improvement Model.

Course Improvement Matrix

The Course Improvement Matrix, as applied in Table 1, was developed to provide a structured method for part-time instructors to systematically investigate the cause of quality issues they encounter while teaching. This tool supports their ability to identify areas of weakness within the course and to provide the instructional design and development team with prescriptions for improvements. The tool was designed in the form of a matrix to allow instructors the freedom and flexibility to input the critical issues that emerge while teaching a course, rather than using preconceived categories developed by those who are not in direct communication with the users of the instructional materials.

The CIM comprises four sections that align with the Online Course Improvement Model feedback loop process. Issue relates to an identified course quality issue, typically a problem or weakness in the instructional materials. Issues are identified based on student feedback, such as specific communications, and other indicators, such as performance on course assessments and questionnaire results. Cause relates to one or more reasons for the perceived weaknesses. Instructors use student feedback as a basis for focused examination of course content to identify areas of weakness. Suggestion for improvement enables the identification of prescriptions for transforming identified weaknesses into potential strengths. These prescriptions may be improvements to existing instructional strategies within the course or the implementation of additional strategies whose effectiveness is supported by research.

Table 1. Application of Course Improvement Matrix

| Issue | Cause | Suggestion for Improvement | Justification |
| --- | --- | --- | --- |
| Students describe content instead of applying it. | The assignment was very general and did not provide a context for application. | Provide a scenario and a context for application. Components: goal; case/story; role; resources; assessment. | Goal-based learning: students will achieve the goal by applying target skills and using relevant content knowledge. This type of learning emphasizes the “how to” rather than “know that” (Schank, Berman, & Macpherson, 1999). |
| Students are unclear about assignments; their responses lack focus. | Requirements and grading criteria are unclear. | Provide grading rubrics that clarify requirements and grading criteria. | Measuring complex outcomes: students need to be certain about what is being measured. When students are uncertain, they need to rely on their ability to interpret assignments (Nitko, 1996). |
| When students were required to teach a lesson plan that moved students from concrete to abstract thinking, they used examples from the book and from course materials. | The assignment was theoretical and did not provide an authentic problem. | Provide a real-life problem that students need to solve. Components: goal; objectives; materials; introduce; present content; closure; assess if students moved from the concrete to the abstract. | Problem-based learning: instruction should consist of a real-life problem to facilitate application of content. The goal of the learners is to interpret and solve the problem. The problem should drive the learning. Students learn content when they apply it (Jonassen & Rohrer-Murphy, 1999). |

Justification refers to research-based evidence that instructors must provide to support their proposed prescriptions. Development teams are more likely to adopt and implement prescriptions that are sound in terms of theoretical justification. Once the suggested prescriptions have been implemented, the instructor, along with the design team, should focus on the final step of the feedback process – Monitoring. This final step in the process would be most effective if the course is subsequently taught by the same instructor involved with the course updates. However, it is certainly possible for the design team, using existing documentation from the CIM, to involve a new instructor with the monitoring of the updated strategies.
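For readers who wish to keep the matrix in electronic form, the four sections described above can be sketched as a simple record. The following is only an illustrative sketch, not part of the original tool: the names `CIMEntry`, `is_complete`, and `ready_for_design_team` are hypothetical, and the rule that a row is shared only once all four sections are filled in is an assumption drawn from the requirement that instructors justify their prescriptions. The first row paraphrases Table 1; the second is deliberately left as an unfinished draft for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CIMEntry:
    """One row of a Course Improvement Matrix (hypothetical representation)."""
    issue: str
    cause: str
    suggestion: str
    justification: str
    # Optional components of the suggested activity, as listed in Table 1.
    components: List[str] = field(default_factory=list)

    def is_complete(self) -> bool:
        # Assumed rule: all four sections must contain text before sharing.
        sections = (self.issue, self.cause, self.suggestion, self.justification)
        return all(section.strip() for section in sections)


def ready_for_design_team(entries: List[CIMEntry]) -> List[CIMEntry]:
    """Return only the rows whose four sections are all filled in."""
    return [entry for entry in entries if entry.is_complete()]


matrix = [
    CIMEntry(
        issue="Students describe content instead of applying it.",
        cause="The assignment was very general and lacked a context for application.",
        suggestion="Provide a scenario and a context for application.",
        justification="Goal-based learning (Schank, Berman, & Macpherson, 1999).",
        components=["goal", "case/story", "role", "resources", "assessment"],
    ),
    CIMEntry(
        issue="Students are unclear about assignments; their responses lack focus.",
        cause="Requirements and grading criteria are unclear.",
        suggestion="Provide grading rubrics that clarify requirements and grading criteria.",
        justification="",  # hypothetical draft state: justification not yet written
    ),
]

for entry in ready_for_design_team(matrix):
    print(entry.issue)
```

Keeping the justification as a required field mirrors the point made above that development teams are more likely to adopt prescriptions with a sound theoretical basis; an institution could of course relax or extend this check.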

Methods and Procedures

Teaching Context

The CIM was used in the spring and winter 2008 terms to evaluate two undergraduate courses in the area of human development at a major online college. These were advanced four-credit courses that typically enroll students completing prerequisite requirements in education. There were approximately 20 students in each course, representing diverse areas of study including elementary education, religious education, alcohol and drug rehabilitation counseling, and the culinary arts. In terms of degree level, all students were pursuing undergraduate degrees. A few quality issues emerged during the teaching of these courses, one of which will be further described.

Quality Issue

The first issue related to the trend of students asking for assignment clarifications just prior to the assignment’s due date. Students were required to demonstrate their understanding of basic instructional strategies in a novel situation within their respective professional contexts. For example, elementary education majors were required to apply the content in the area of elementary education. However, many of them requested clarification just prior to the due date. These requests, in turn, indicated that too many students were unsure about and/or not confident in their abilities to apply the knowledge within their respective contexts. The CIM tool was used to further examine this issue. This specific quality issue was chosen because it related to a foundational assignment for a foundational course. Therefore, failure to demonstrate an understanding of basic instructional concepts was likely to affect performance in advanced coursework.

Application of the Course Improvement Matrix

Further investigation confirmed that the last-minute requests for clarification were directly related to an inability to complete the assignment. It was also discovered that this inability was a direct result of a lack of understanding of the assignment, its purpose, and the criteria for success. Submitted assignments were also analyzed, and the results provided additional evidence of the problem. A majority of the students described the concepts instead of applying them; many presented verbatim extracts from the textbooks. Examination of the instructional content indicated that, while the content was sound, the assignment was too general and lacked a context for application. The absence of an anchor or scaffold resulted in a failure of understanding, which subsequently affected the students’ ability to apply the content in a novel situation.

The instructor applied the CIM and proposed two strategies to improve the course: 1) an authentic scenario for applying the relevant instructional strategies, and 2) a goal within the scenario. A goal-based scenario provides students with a real-world context that includes an instructional goal. Both the authentic nature of the scenario and the inclusion of an instructional goal would support the ability to understand and apply instructional strategies in a discipline-specific context. When students need to reach a goal, they will emphasize the “how to” rather than “know that” (Schank, Berman, & Macpherson, 1999). The use of real-world problems provides a bridge between the instruction and student experiences, which in turn facilitates application of content. Since the goal of learners will be to interpret and solve the problem, this problem-solving process will drive the learning and result in students learning the content as they apply it (Jonassen & Rohrer-Murphy, 1999).

A second issue – inadequate information about the grading of written assignments – arose based on student comments and complaints. The instructor saw another opportunity to apply the CIM to improve this aspect of the course, which was directly related to the first issue. The development and distribution of a grading rubric for each assignment was proposed. Grading rubrics serve a critical purpose in assessment activities because they support course improvement on a number of levels (Mueller, 2004). From the student’s perspective, rubrics provide clear guidelines and expectations. From the instructor’s perspective, they support the ability to diagnose strengths and weaknesses in each student’s performance, thus facilitating targeted feedback and differentiated instruction. Rubrics also support students’ ability to self-monitor their understanding of the material (Tobias, 1982). Finally, the use of rubrics enables multiple stakeholders – instructors, the design team, etc. – to use student outcomes data to monitor course quality over the long term.

Table 1 shows the application of the CIM to address both quality issues, including the prescriptions and research-based justifications. The online instructor shared the prescribed improvements with the design team at the end of the courses. The proposed course updates were positively received by the design team, who subsequently invited the instructor to collaborate with them to update the courses. Specifically, the instructor outlined the learning activities and proposed the content and graphic elements needed. After the plan was accepted by the area coordinator, the instructor provided the supporting content and activities, and a media producer added the new elements, along with corresponding changes to the graphic elements. Upon final approval by the course coordinator and the instructor, the updates were finalized and implemented by the media producer, who updated both courses. This whole process took about two months to complete. Both the development team and the instructor will evaluate the effectiveness of the changes in the winter 2010 term, when updated versions of both courses will be taught. Therefore, the CIM tool, which is based on the Online Course Improvement Model feedback loop process, successfully facilitated the collaboration between a part-time instructor and a virtual college’s design team. This collaboration utilized the knowledge and skills of both stakeholders to improve the quality of two online courses.

Discussion, Limitations, and Implications

There are some assumptions and limitations that must be mentioned. The premise of this paper assumes that the instructor in question has the instructional design knowledge and ability, as well as the interest, to participate in this course improvement process. This may not always be the case, and therefore the process would have to be modified to match the reality of the specific situation. The nature of the entire process is reactive, and it is thus activated after a problem has occurred. Additionally, student performance and feedback data may be misleading and, as such, should be considered with other sources of evidence where possible. In the situation described above, some of the students may not have understood the content because of a lack of required preparation. A related concern is the potential for overlooking other critical factors that may add even more value to the course in question. These include course materials and components such as consistent objectives, quality instructional materials, and appropriate, consistent, and fair assessment activities.

Despite the assumptions and limitations of this process, value can be gleaned by the respective stakeholders. Therefore, the process does have a potentially positive role to play in the instructional quality of online courses. Many virtual and other universities that have invested heavily in e-Learning maintain an infrastructure that is dedicated to creating and implementing courses. This infrastructure may include subject matter experts, instructional design professionals, and web developers. This team also helps to support faculty who are in the process of moving existing face-to-face courses into e-Learning formats. Many would agree, however, that the same level of attention and resource allocation is not always a reality for existing online courses (Rahm-Barnett & Donaldson, 2008; Zhanghua, 2005). The very opportunity provided by e-learning creates a challenge, which continues to loom large – maintaining thousands of e-learning courses and products. The paper proposed a process that would help universities mitigate this challenge by presenting a framework that supports their ability to leverage a critical but under-utilized resource – the knowledge and skills of part-time instructors – to improve course quality. The Online Course Improvement Model and the CIM tool facilitate a structured approach to engage with instructors to identify course quality issues based on student outcomes data and other sources of evidence, and to gradually improve these courses using research-based instructional prescriptions.

One major implication of using the Online Course Improvement Model along with implementing the CIM tool is the increased and expanded workload for part-time online instructors. Regardless of status, these instructors are the primary contact for students in online courses. Accordingly, they bring critical knowledge and perspectives to course quality issues. However, depending on workload, these activities can also adversely impact the online instructor’s contractual responsibilities and perhaps effectiveness. Therefore, in addition to cost issues related to the increased responsibilities, institutions need to thoroughly understand the costs and benefits of changes related to this strategy.

With the phenomenal growth of e-Learning opportunities comes the issue of course quality. Related to the quality issue are the need for ongoing course maintenance and the heavy reliance on part-time instructors. In many online institutions, these instructors have limited involvement with the course improvement process. Use of the CIM and similar tools provides the institution with a structured approach to increase this involvement by giving part-time instructors the opportunity to participate in course and online learning improvement. Many would agree that this evolved role will help universities leverage the contribution of this critical group of stakeholders as part of the design team while making gains in online course quality.


References

Allen, I.E., & Seaman, J. (2007). Changing the landscape: More institutions pursue online offerings. On The Horizon, 15, 130 – 138.

Allen, C., & Dorn, R. (2008). Graduate degrees in geographic education: Exploring an online model. California Geographer, 48, 87-103.

Ascough, R.S. (2002). Designing for online distance education: Putting pedagogy before technology. Teaching Theology and Religion, 5, 1, 17-29.

Avgerinou, M., & Andersson, C. (2007). E-moderating personas. Quarterly Review of Distance Education, 8, 4, 353-364.

Chen, K. C., & Chuang, K.W. (2008). Building an e-learning system model with implications for research and instructional use. Proceedings of the World Academy of Science, Engineering and Technology, USA, 30, 479-481.

Gerrain, D. (2004). Online Courses Offer Key to Adjunct Training. Community College Week, 16(16), 8.

Hodges, C. B. (2006). Lessons learned from a first instructional design experience. Int'l J of Instructional Media, 33, 4, 397-403.

Huckstadt, A., & Hayes, K. (2005). Evaluation of interactive online courses for advanced practice nurses. Journal of the American Academy of Nurse Practitioners, 17, 3, 85-89.

Jeong, H., & Hmelo-Silver, C. (2010). Productive use of learning resources in an online problem-based learning environment. Computers in Human Behavior, 26(1), 84-99.

Jonassen, D. H. & Rohrer-Murphy, L. (1999). Activity theory as a framework for designing constructivist learning environment. Educational Technology, Research and Development, 47, 1, 61-79.

Kember, D., & Leung, D.Y.P. (2008). Establishing the validity and reliability of course evaluation questionnaires. Assessment & Evaluation in Higher Education, 33, 4, 341–353.

Kim, K-J., & Bonk, C. (2006). The future of online teaching and learning in higher education: The survey says… Educause Quarterly, 29, 22 – 30.

Koller, C., Frankenfield, J., & Sarley, C. (2000). Twelve tips for developing educational multimedia in a community-based teaching hospital. Medical Teacher, 22(1), 7-10.

Koszalka, T.A., & Ganesan, R. (2004). Designing online courses: A taxonomy to guide strategic use of features available in course management systems (CMS) in distance education. Distance Education, 25, 2, 243-256.

Moller, L., Forshay, W., & Huett, J. (2008). The evolution of distance education: Implications for instructional design on the potential of the web. TechTrends, 52, 70 – 75.

Mueller, J. (2004). Authentic Assessment Toolbox, Rubrics. Retrieved on August 20, 2008 from http://jonathan.mueller.faculty.noctrl.edu/toolbox/index.htm

Nakamura, C., Murphy, S., Juma, N., Rebello, N., & Zollman, D. (2009). Online Data Collection and Analysis in Introductory Physics. AIP Conference Proceedings, 1179(1), 217-220.

Nitko, A. J. (1996). Educational assessment of students (2nd ed.). Englewood Cliffs, NJ: Prentice-Hall, Inc.

Oliver, M. (2000). Evaluating online teaching and learning. Information Services & Use, 20(2/3), 83.

Parrish, P. (2009). Aesthetic principles for instructional design. Educational Technology Research & Development, 57(4), 511-528.

Patrick, P., & Yick, A. (2005). Standardizing the interview process and developing a faculty interview rubric: An effective method to recruit and retain online instructors. Internet & Higher Education, 8(3), 199-212.

Rahm-Barnett, S., & Donaldson, D. (2008). 5-4-3-2-1 Countdown to Course Management. Online Classroom, 2-8.

Raymond, E. (2000). Cognitive Characteristics. Learners with Mild Disabilities (pp. 169-201). Needham Heights, MA: Allyn & Bacon, A Pearson Education Company.

Reeves, P. M., & Reeves, T.C. (2008). Design considerations for online learning in health and social work education. Learning in Health and Social Care, 7(1), 46–58.

Ruth, S., Sammons, M., & Poulin, L. (2007). E-Learning at a crossroads – What price quality? Educause Quarterly, 30, 32 – 39.

Schank, R., Berman, T., & Macpherson, K. (1999). Learning by doing. In C.M. Reigeluth (Ed.), Instructional-design theories and models: A new paradigm of instructional theory.

Smith, D., & Mitry, D. J. (2008, January/February). Investigation of higher education: The real costs and quality of online programs. Journal of Education for Business, 147-152.

Spector, M. (2005). Time demands in online instruction. Distance Education, 26(1), 5-7.

Sprague, D., Maddux, C., Ferdig, R., & Albion, P. (2007). Online education: Issues and research questions. Journal of Technology and Teacher Education, 15, 157 – 166.

Tobias, S. (1982). When do instructional methods make a difference? Educational Researcher, 11(4), 4-9.

Yang, Y., & Cornelious, L. F. (2005). Preparing instructors for quality online instruction. Online Journal of Distance Learning Administration, 8. Retrieved on August 18, 2008 from http://www.westga.edu/~distance/ojdla/spring81/yang81.htm

Young, A., & Norgard, C. (2006). Assessing the quality of online courses from the students' perspective. Internet and Higher Education, 9, 107–115.

Zhanghua, M. (2005). Education for Cataloging and Classification in China. Cataloging & Classification Quarterly, 41(2), 71-83.
