MERLOT Journal of Online Learning and Teaching | Vol. 6, No. 4, December 2010

The Indicators of Instructor Presence that are Important to Students in Online Courses

Los Indicadores de la Presencia del Instructor Que son Importantes para los Alumnos de Cursos Online

Abstract

While many researchers have sought to identify the components of teaching presence and examine its role in student learning, there are far fewer studies that have investigated which components students value the most in terms of their perceived contribution to a successful or satisfying learning experience. The research presented in this paper addressed this issue by examining which indicators of instructor presence were most important to students in online courses and how those indicators were interrelated. The indicators that were most important to students dealt with making course requirements clear and being responsive to students’ needs. Students also valued the timeliness of information and instructor feedback. While students generally placed high value on communication and instructor’s responsiveness, they did not place as much importance on synchronous or face-to-face communication. Nor did they consider being able to see or hear the instructor as very important. Among the implications of the findings is the need to pay particular attention to the communicative aspects of instructor presence.

Keywords: Teaching Presence, Online Learning, Distance Learning, Student Perceptions, Course Facilitation, Course Communication, Course Setup, Student Feedback

Resumen

Mientras que muchos investigadores han tratado de identificar los componentes de la presencia docente, y examinar su papel en el aprendizaje de los alumnos, no hay muchos estudios que hayan investigado qué componentes son más valorados por los alumnos, en términos de su contribución a la percepción de una experiencia exitosa o satisfactoria de aprendizaje. La investigación presentada en este trabajo abordó este asunto examinando qué indicadores de presencia de instructores eran más importantes para los alumnos en cursos online y cómo estos indicadores están relacionados entre sí. Los indicadores que eran más importantes para los alumnos, se encargaron de aclarar los requisitos del curso y de responder a las necesidades de los alumnos. Los alumnos también valoran una oportuna información y retroalimentación del instructor. Mientras que los alumnos generalmente valoran la comunicación y la capacidad de respuesta del instructor, no le dan tanta importancia a lo sincrónico o a la comunicación cara a cara. Tampoco consideraron que la posibilidad de ver o escuchar al instructor era muy importante. Entre las consecuencias de las conclusiones, está la necesidad de prestar especial atención a los aspectos comunicativos de la presencia del instructor.

Palabras claves: La presencia docente, aprendizaje online, aprendizaje a distancia, percepciones de los estudiantes, facilitador, comunicación, instalación del curso, retroalimentación de los alumnos

Introduction

Online instruction is no longer a new phenomenon in higher education institutions. Research indicates that many higher education institutions view online learning as an integral and necessary mode of delivery (Berg, 2002; Durrington, Berryhill, & Swafford, 2006; Natriello, 2005; Tabatabaei, Schrottner, & Reichgelt, 2006) with conveniences including increased access, fast delivery, potentially improved pedagogy, and decreased costs for both students and institutions. With higher education institutions heavily adopting and investing in this delivery modality, several potential challenges arise for instructors trying to establish conditions to enhance the students’ learning experiences (Brinkerhoff & Koroghlanian, 2007; Durrington, Berryhill, & Swafford, 2006; Natriello, 2005). One of the issues that instructors wrestle with is the optimal level of engagement in their online courses. After developing course materials and making them available to students, some instructors may adopt a minimalist approach. For example, they may not participate in students’ discussions, may only respond to student inquiries rather than ever initiating contact, and may never review course materials to make formative revisions. At the other end of the spectrum, instructors may be highly engaged in the course both before and during course delivery by developing learning materials and activities that promote high levels of cognitive engagement, providing students with in-depth feedback for growth and development, exchanging ideas in student discussions, and continually challenging students to deepen their thinking. These strategies are just a few of the ways that instructors make their presence known in online courses. Other indicators of an instructor’s presence during the setup and development of an online course include the way the course is designed and the way the course is organized (Garrison, Anderson, & Archer, 2000). 
Indicators of the instructor’s presence during the delivery of an online course include his or her communication with students both within and outside of content discussions, the sharing of information related to the students’ professional interests and goals, and efforts to establish and maintain a sense of community among students (Garrison et al., 2000; Palloff & Pratt, 2003).

Many of these strategies are implicit in researchers’ conceptualizations of teaching presence in online courses and have also been shown to relate to students’ success or satisfaction in online courses (Garrison & Cleveland-Innes, 2005; Hong, Lai, & Holton, 2003; Shea & Bidjerano, 2009; Shea, Li, & Pickett, 2006; Swan & Shih, 2005). While many researchers have sought to identify the components of teaching presence and examine its role in student learning, there are far fewer studies that have aimed to determine which components students value the most in terms of their perceived contribution to a successful or satisfying learning experience (Brinkerhoff & Koroghlanian, 2007). The research presented in this paper addresses this issue by examining which indicators of instructor presence are most important to students in online courses and how those indicators are interrelated. By identifying the factors that contribute to students’ perceptions of their success in online courses, this research extends the body of literature on teaching presence and its role in strengthening students’ learning.

Literature Survey

The labels “instructor presence” and “teaching presence” have been used almost synonymously in the literature. Kassinger (2004) has defined instructor presence as the instructor’s interaction and communication style and the frequency of the instructor’s input into the class discussions and communications. Similarly, Palloff and Pratt (2003) pointed out that an instructor’s presence entails “posting regularly to the discussion board, responding in a timely manner to e-mail and assignments, and generally modeling good online communication and interactions” (p. 118). In the model of teaching presence proposed by Anderson, Rourke, Garrison, and Archer (2001), teaching presence has been defined as “the design, facilitation, and direction of cognitive and social processes for the realization of personally meaningful and educationally worthwhile learning outcomes” (p. 5). Within this model, Anderson et al. emphasize three components of teaching presence: instructional design, facilitation of discourse, and direct instruction. The instructional design component includes organizing the course, setting curriculum, establishing time parameters, and laying out netiquette criteria. Facilitating discourse focuses on identifying areas of agreement and disagreement within students’ discussions, encouraging and reinforcing student contributions, setting the climate for learning, and prompting discussion. Direct instruction focuses on presenting course content and discussion prompts, summarizing discussions, examining and reinforcing students’ understandings of main concepts, diagnosing students’ misperceptions, providing information for students, and responding to students’ concerns. Research has shown that facilitation of discourse and instructor visibility are of key importance for establishing teaching presence (Mandernach, Gonzales, & Garrett, 2006). Similarly, there is a consensus that it is the instructor’s responsibility to create a space for social interaction, engage in discourse with the students, provide information and course content, and provide students with feedback in a timely manner (Lowenthal, in press; Palloff & Pratt, 2003).

Research indicates that teacher presence has an impact on students’ success in online learning (Bliss & Lawrence, 2009; Garrison & Cleveland-Innes, 2005; Garrison, Cleveland-Innes, & Fung, 2010; Pawan, Paulus, Yalcin, & Chang, 2003; Varnhagen, Wilson, Krupa, Kasprzak, & Hunting, 2005; Wu & Hiltz, 2004). LaPointe and Gunawardena (2004) reported that students’ perceived teaching presence had a direct impact on their self-reported learning outcomes. Wise, Chang, Duffy, and del Valle (2004), however, found conflicting results. Upon manipulating the level of social presence that instructors projected to the students in their online courses, Wise et al. found that the instructor’s social presence affected the students’ interactions and perceptions of the instructor but had no impact on the students’ perceived learning or actual performance. On the other hand, Wise et al. found positive relationships between instructor presence and student satisfaction in online courses. Based on these results, the authors concluded that a high level of instructor social presence did not result in students actually learning more, perceiving that they learned more, or finding the learning experience more useful. This finding highlights an equivocal relationship between the instructor’s social presence and student learning or perceived learning in the literature and underscores the need to better understand its role in teaching presence.

Pawan et al. (2003) indicated that teaching presence was reflected through the depth and quality of students’ interactions and discussions. Students’ engagement in discussions was primarily characterized by one-way monologues when the students lacked guidance and teaching presence. Related to the level of students’ engagement, teaching presence has also been shown to impact students’ sense of cognitive presence. As defined by Swan, Richardson, Ice, Garrison, Cleveland-Innes, and Arbaugh (2008), cognitive presence refers to the degree to which learners construct and confirm meaning through discourse and reflection. The elements of cognitive presence comprise a triggering event, exploration, integration, and resolution. A triggering event is something that causes the students to be interested or motivated to engage in the course. Once this triggering occurs, it may catalyze exploration, integration, and resolution. Exploration refers to the students using a variety of sources and discussions to explore the content of the course. Integration is the students’ ability to combine new information with existing knowledge in order to make sense of and understand the concepts being taught. At the final stage, resolution is the ability to apply the knowledge gained. Cognitive presence is highly related to teaching presence in that the way that an instructor designs and delivers a course can have a tremendous impact on the students’ cognitive presence in the course. For example, Shea and Bidjerano (2009) found that teaching presence is the primary catalyst for formation of both cognitive and social presence.

Other research that has examined student presence in online courses also has implications for understanding and investigating the role of teaching presence on student social presence. Both cognitive presence and social presence have been cited as having an impact on student success (Hong, Lai, & Holton, 2003; Garrison & Cleveland-Innes, 2005; Shea, Li, & Pickett, 2006; Swan & Shih, 2005). Baker (2004), though, found that instructor presence was a more reliable predictor of learning than social presence. As defined by Swan et al. (2008), social presence represents the students’ feeling of connectedness, both socially and emotionally, with others in the online environment. It encompasses three elements: affective expression, open communication, and group cohesion. Affective expression is the students’ expression of emotions, use of humor, and self-disclosure. Open communication represents the students’ willingness to strike up conversations and respond to one another in an honest yet respectful manner. Group cohesion refers to the students agreeing with one another, using inclusive terms such as “we” when referring to the group, and using salutations, vocatives, and phatics when referring to classmates (Lowenthal, in press).

While these aspects of social presence are at the student level, the instructor plays a key role in modeling the desired level of engagement and interaction among students (Palloff & Pratt, 2003; Pawan et al., 2003). In their study on the role of teaching presence in developing a learning community, Shea, Li, Swan, and Pickett (2005) found that instructor assistance with student discussions and conversations, as well as the quality of the course design, were important for establishing a clear teaching presence in the online environment and that this presence was positively related to students’ perceptions of support and inclusiveness. Shea et al. (2006) also reported positive relationships between instructor presence and students’ sense of classroom community. Although research has shown that social and cognitive interactions are vitally important to a successful online learning community, they are not sufficient to ensure optimal student learning in online courses (Garrison, Anderson, & Archer, 2000).

Conclusions and Purpose

What these findings confirm is that instructor presence is one of the keys to the effectiveness of online learning and that instructors need to be actively engaged in online courses. A question that has not been explored extensively is what aspects of this engagement are most important for students’ success from their perspectives. A deeper understanding of the value that students place on indicators of teaching presence was the aim of the research presented in this paper. The purpose of the study was to answer the following research questions:

- How important are various indicators of instructor presence for students enrolled in online courses?
- What indicators of instructor presence do students consider to be most important for their success in online courses?

Methods

The study utilized a cross-sectional survey design to answer the research questions. Data collection was conducted via a questionnaire administered online to graduate and undergraduate students enrolled in several online courses offered by the education departments at either of two large universities in the Midwest.

Instrument

The questionnaire consisted of three sets of items: 64 close-ended items to measure the importance of various indicators of instructor presence in online courses, 5 open-ended items for students to report which indicators were most important, and a mixture of open- and close-ended items targeting students’ experience with online learning and their preferences for various types of learning contexts. The list of indicators of instructor presence was compiled primarily from instruments used to measure instructor presence in online courses and other literature on the role of instructor presence and community building in online courses. Many of the indicators were drawn from the teaching presence and social presence scales of the Community of Inquiry (CoI) instrument by Garrison et al. (2000). Other indicators were developed from the cognitive presence scale based on actions that an instructor might take to establish or maintain these conditions. Additional items were added based on instructor experience and online learning literature. The intent was to present a comprehensive list of typical actions that an instructor would take in setting up, delivering, and monitoring online courses. For each indicator, students were asked to rate its importance on a scale of 1 (not important at all) to 10 (very important). For the open-ended items, students were asked to “write the 5 most important instructor behaviors for your success in an online class.”

Analysis

The close-ended items were analyzed primarily using descriptive statistics. Of particular interest were (a) the items that had the 10 highest mean ratings, which represented the indicators that students considered to be most important; (b) the items that had the 10 lowest mean ratings, which represented the indicators that students considered to be least important; and (c) the items that had the highest dispersion, which represented the indicators for which there was least consensus among the students. The authors also examined the correlations between the students’ prior online course experience and the ratings of a subset of indicators. Prior online course experience was treated as an ordinal variable based on the number of prior online courses that students had taken, ranging from 1 (no prior online courses) to 5 (4 or more prior online courses). Spearman’s rho was used for the correlation coefficients. The authors had also planned to examine potential group differences in the importance ratings of a subset of items based on graduate or undergraduate status, but the low number of undergraduates in the sample (n = 9, 13.8%) prevented this analysis.
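The ranking step described above can be illustrated with a minimal sketch. The ratings below are hypothetical and are not the study's data, the item names and the helper functions `summarize` and `top_n` are invented for illustration, and the use of the sample standard deviation as the dispersion measure is an assumption, since the text does not state which formula was used.

```python
from statistics import mean, stdev

# Hypothetical ratings on the 1-10 importance scale (NOT the study's data).
ratings = {
    "MakesRequirementsClear": [10, 10, 9, 10, 10],
    "TimelyFeedback": [10, 9, 10, 8, 10],
    "ChatSessions": [1, 5, 10, 3, 8],
    "PersonalWebsite": [2, 9, 1, 6, 5],
}


def summarize(item_ratings):
    """Return the mean (M) and sample standard deviation (SD) of each item."""
    return {
        item: {"M": mean(values), "SD": stdev(values)}
        for item, values in item_ratings.items()
    }


def top_n(summary, key, n, reverse=True):
    """Return the n item names with the largest (or smallest) value of `key`."""
    ordered = sorted(summary, key=lambda item: summary[item][key], reverse=reverse)
    return ordered[:n]


summary = summarize(ratings)
highest_mean = top_n(summary, "M", 2)                 # most important items
lowest_mean = top_n(summary, "M", 2, reverse=False)   # least important items
highest_sd = top_n(summary, "SD", 2)                  # least consensus

for item in highest_mean + lowest_mean:
    print(f"{item}: M = {summary[item]['M']:.2f}, SD = {summary[item]['SD']:.2f}")
```

In the study the same idea would be applied with n = 10 across the 64 close-ended items; the sketch uses n = 2 only to keep the example small.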

For the open-ended items, the authors engaged in several levels of analysis, including classical content analysis (Leech & Onwuegbuzie, 2007) and concept mapping (Kane & Trochim, 2007; Novak & Gowin, 1998). The purpose of the content analysis was to determine what indicators were most important to the students based on the frequency of the response. Although students were not asked to rank these items, a higher frequency was assumed to indicate greater importance. During the first phase of content analysis, the authors deductively coded the open-ended responses by assigning the variable names used for the close-ended items. For example, the student’s response of “choose a good textbook” was coded as ChooseGoodBook. The purpose of this deductive analysis was to determine how the indicators that students deemed most important aligned with the close-ended items. For any responses that did not have an existing match in the codebook, a new open code was created. At the end of the initial coding pass, which had been done independently, the authors reviewed the coded responses together. Although agreement statistics were not calculated, it was noted anecdotally that the majority of the responses had been coded similarly. For items that had been coded differently, a consensus was ultimately reached on the assigned code.

In the second stage of content analysis, the authors reviewed the responses assigned to each code to determine whether the assigned codes were consistent. After grouping the responses that had been assigned the same codes, the next step involved independently reviewing each group and noting the cases where there were cross-coded responses (i.e., where similar responses had been assigned different codes). Also noted were potential occurrences of code creep (i.e., cases where a code had been assigned to a response that was just beyond its original intended definition). After the independent reviews, coding discrepancies were discussed and revised based on a consensus between the authors. From this final coding pass, frequency data was generated for each code as an indicator of its relative importance.
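As a rough illustration of how frequency data can be generated from coded responses, the sketch below tallies a list of codes and expresses each count as a percentage of all responses. The coded responses are hypothetical (only `ChooseGoodBook` appears in the text; the other code names are invented in the same style), and `code_frequencies` is an illustrative helper, not the authors' procedure.

```python
from collections import Counter

# Hypothetical coded responses (NOT the study's data).
coded_responses = [
    "TimelyResponse", "MakeRequirementsClear", "TimelyResponse",
    "ChooseGoodBook", "BeEmpathetic", "TimelyResponse",
    "MakeRequirementsClear", "BeEmpathetic",
]


def code_frequencies(codes):
    """Return (code, count, percent) tuples, most frequent code first."""
    counts = Counter(codes)
    total = len(codes)
    return [
        (code, count, round(100 * count / total, 2))
        for code, count in counts.most_common()
    ]


table = code_frequencies(coded_responses)
for code, count, percent in table:
    print(f"{code}: f = {count}, {percent}%")
```

The same percentage formula, applied to a count of 23 out of the 299 responses reported in the Results, gives 7.69%.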

Due to the hierarchical nature of the open-ended items, a concept map was constructed to show the relationship among the assigned codes. This analysis was extremely useful for representing the level of specificity in some of the responses. For example, for some students simply getting feedback was most important, whereas for other students the timeliness or constructiveness of the feedback was most important. In creating a concept map to show the relationships among the responses, the authors used the students’ responses as the main concepts and drew from their own experiences and literature on online learning in identifying the linking phrases. By superimposing the results of the content analysis onto the map, the authors were able to examine both the relative importance of groups of actions and the level of specificity that was important.

Results

There were 65 respondents who completed the questionnaire. Based on the demographic data collected, the vast majority of the respondents were enrolled in graduate degree programs (n = 53, 81.5%). Undergraduate students comprised 13.8% of the sample (n = 9). In terms of students’ prior enrollment in online courses, slightly more than a quarter of the respondents (n = 18, 27.69%) had no experience with online learning. Another quarter of the students had extensive experience with online learning, having taken four or more online courses prior to the study (n = 16, 24.62%).

Ratings of Indicators of Instructor Presence

Almost all of the close-ended items had a mean rating that was above the midpoint of the scale (5.50). The 10 most important and least important indicators based on the mean ratings are reported in Table 1. The top four items, which had a mean rating at or above 9.75, dealt with making course requirements clear and explicit. For the most important item (“Makes course requirements clear”), the minimum rating of importance was a 9 (M = 9.95, SD = 0.21). The indicator that was least important to students was the instructor having a personal website that they could visit (M = 5.38, SD = 3.25). Among the other items that were least important to students were seeing the instructor via video (M = 5.74, SD = 3.17) and participating in chat sessions (M = 5.60, SD = 3.17).

The items with the highest mean ratings also had the least variability. The 10 items that had the greatest variability are reported in Table 2. Three of these items were related to the timeliness of the instructor’s responses to students’ questions. The item that had the highest variability was “Respond to student questions or concerns within 1 week” (M = 7.56, SD = 3.42). A suspected reason for this high variability was that some respondents may have believed that by indicating that responding within a week was important they were indicating that they were willing to wait a week for a response, when in reality they valued a quicker response.

Follow-up correlation analyses were conducted to determine whether students’ ratings of the items with the highest variability varied systematically depending on their prior online course experience. The results of this analysis are reported in Table 3. For six of the items, there was a negative correlation between the number of prior online courses taken and the importance of the behavior. The three items with the strongest negative correlations were providing an instructor video, r_s(64) = -.45, p < .01, engaging in chat sessions, r_s(64) = -.40, p < .01, and providing an instructor website, r_s(63) = -.57, p < .01.
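For readers unfamiliar with Spearman's rho, the following minimal sketch computes it as the Pearson correlation of tie-averaged ranks, which is the standard definition when ties are present (as they are with a 5-level experience variable and 1-10 ratings). The data are hypothetical, the function names are invented for illustration, and no significance test is included.

```python
from math import sqrt


def average_ranks(values):
    """Assign 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data (NOT the study's): experience level (1-5) and the rating
# given to an instructor-video item by seven respondents.
prior_courses = [1, 1, 2, 3, 4, 5, 5]
video_rating = [10, 8, 9, 6, 5, 2, 3]
rho = spearman_rho(prior_courses, video_rating)
print(f"rho = {rho:.2f}")
```

A negative value, as in this made-up example, corresponds to the pattern reported above: respondents with more prior online courses tend to rate the item as less important.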

Table 1. Ten Most Important Behaviors and Ten Least Important Behaviors Based on Mean Ratings

| Item | N | Min | Max | Mdn | M | SD |
|---|---|---|---|---|---|---|
| Items with Highest Mean Ratings | | | | | | |
| Makes course requirements clear | 63 | 9 | 10 | 10 | 9.95 | 0.21 |
| Clearly communicated important due dates/time frames for learning activities ᵃ | 65 | 8 | 10 | 10 | 9.86 | 0.43 |
| Sets clear expectations for discussion participation | 65 | 8 | 10 | 10 | 9.78 | 0.54 |
| Provides clear instructions on how to participate in course learning activities ᵃ | 65 | 7 | 10 | 10 | 9.75 | 0.59 |
| Provides timely feedback on assignments and projects | 65 | 7 | 10 | 10 | 9.71 | 0.65 |
| Clearly communicates important course topics ᵃ | 65 | 7 | 10 | 10 | 9.68 | 0.69 |
| Creates a course that is easy to navigate | 65 | 6 | 10 | 10 | 9.66 | 0.76 |
| Clearly communicated important course goals ᵃ | 64 | 7 | 10 | 10 | 9.64 | 0.68 |
| Keeps the course calendar updated | 65 | 5 | 10 | 10 | 9.62 | 1.01 |
| Always follows through with promises made to students | 65 | 7 | 10 | 10 | 9.55 | 0.77 |
| Items with Lowest Mean Ratings | | | | | | |
| Uses icebreakers to help students become familiar with one another | 63 | 1 | 10 | 8 | 7.35 | 2.41 |
| Responds to student questions whenever I need a response/24 hours a day | 65 | 1 | 10 | 8 | 6.77 | 3.25 |
| Feedback and comments are always positive | 65 | 1 | 10 | 7 | 6.57 | 2.59 |
| Provides weekly lectures | 64 | 1 | 10 | 7 | 6.45 | 2.71 |
| Participate daily in discussions | 65 | 1 | 10 | 7 | 6.32 | 2.74 |
| Reply to each individual student’s posts in the discussion area | 65 | 1 | 10 | 5 | 5.83 | 2.91 |
| Provide a video that allows me to hear and see the instructor | 65 | 1 | 10 | 6 | 5.74 | 3.17 |
| Engages in “real time” chat sessions | 65 | 1 | 10 | 5 | 5.60 | 3.17 |
| Create chapter quizzes | 64 | 1 | 10 | 5 | 5.53 | 2.93 |
| Has a personal website for me to go to | 64 | 1 | 10 | 5 | 5.38 | 3.25 |

ᵃ Items from CoI (Arbaugh et al., n.d.).

Most Important Indicators of Instructor Presence

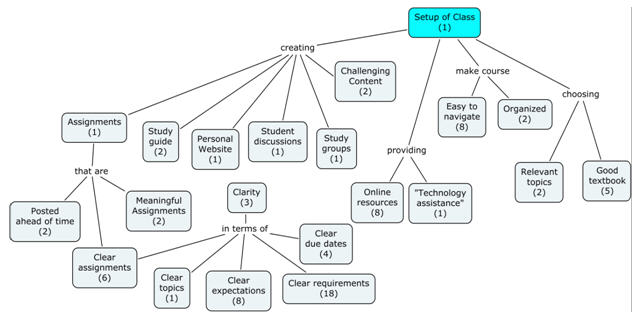

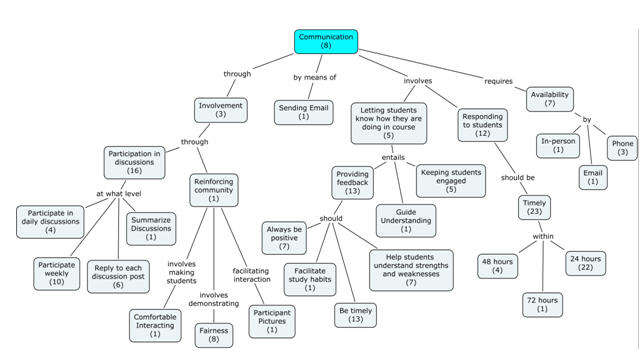

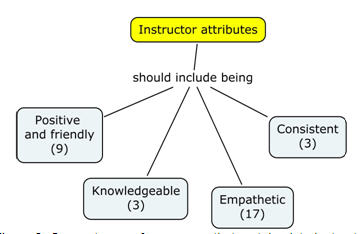

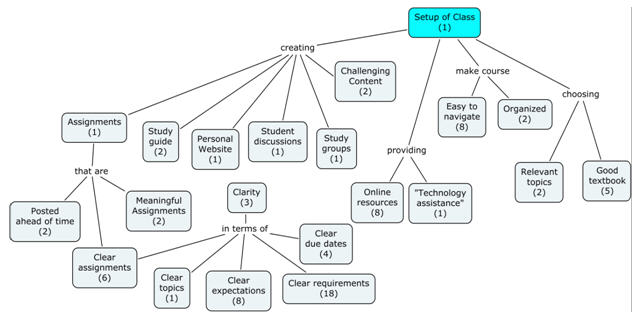

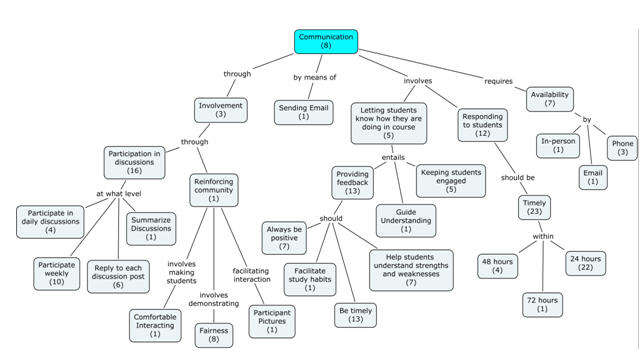

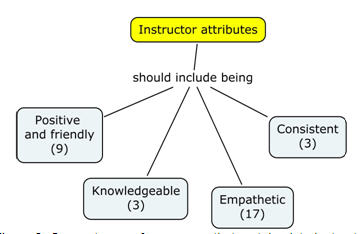

There were a total of 299 responses entered for the five most important indicators of instructor presence. The frequency counts (f) of the top 10 items, which were grouped in terms of similarity, are reported in Table 4. Three main higher-order constructs were represented in the concept map: setup of class, communication, and instructor attributes. The cluster of concepts representing each construct is shown in Figure 1, Figure 2, and Figure 3, respectively. Rather than crosslinking the clusters as commonly done in a traditional concept map (Novak & Gowin, 1998), each cluster is presented separately for emphasis. It is also important to note that each cluster represents a simplified view of the interrelationships among the concepts. The main intent was to show the representativeness of students’ responses rather than being comprehensive in forming propositions.

Table 2. Items with the Highest Standard Deviation

| Item | N | Min | Max | Mdn | M | SD |
|---|---|---|---|---|---|---|
| Respond to student questions or concerns within 1 week | 62 | 1 | 10 | 9 | 7.56 | 3.42 |
| Responds to student questions whenever I need a response/24 hours a day | 65 | 1 | 10 | 8 | 6.77 | 3.25 |
| Has a personal website for me to go to | 64 | 1 | 10 | 5 | 5.38 | 3.25 |
| Provide a video that allows me to hear and see the instructor | 65 | 1 | 10 | 6 | 5.74 | 3.17 |
| Engages in “real time” chat sessions | 65 | 1 | 10 | 5 | 5.60 | 3.17 |
| Respond to student questions or concerns within 72 hours | 62 | 1 | 10 | 9 | 8.08 | 2.94 |
| Create chapter quizzes | 64 | 1 | 10 | 5 | 5.53 | 2.93 |
| Reply to each individual student’s posts in the discussion area | 65 | 1 | 10 | 5 | 5.83 | 2.91 |
| Participate daily in discussions | 65 | 1 | 10 | 7 | 6.32 | 2.74 |
| Provides weekly lectures | 64 | 1 | 10 | 7 | 6.45 | 2.71 |

Table 3. Correlations between the Number of Prior Online Courses Taken and the Ratings of Items with the Highest Variability

| | OC | RW | R247 | PW | IV | EG | R72 | CQ | RI | DD |
|---|---|---|---|---|---|---|---|---|---|---|
| OC | | | | | | | | | | |
| RW | -.00 | | | | | | | | | |
| R247 | .04 | -.03 | | | | | | | | |
| PW | -.33** | -.15 | .29* | | | | | | | |
| IV | -.45** | .18 | .33** | .59** | | | | | | |
| EG | -.40** | .15 | .27* | .56** | .77** | | | | | |
| R72 | .05 | .84** | -.01 | -.17 | .10 | .12 | | | | |
| CQ | -.26* | .12 | .19 | .50** | .49** | .57** | .20 | | | |
| RI | -.31* | .01 | .50** | .60** | .59** | .61** | -.02 | .41** | | |
| DD | -.30* | .03 | .43** | .57** | .68** | .64** | .01 | .31* | .74** | |
| WL | -.05 | -.05 | .33** | .37** | .56** | .46** | -.02 | .29* | .41** | .43** |

Note. OC = No. of prior online courses. RW = “Respond to student questions or concerns within 1 week”. R247 = “Responds to student questions whenever I need a response/24 hours a day”. PW = “Has a personal website for me to go to”. IV = “Provide a video that allows me to hear and see the instructor”. EG = “Engages in ‘real time’ chat sessions”. R72 = “Respond to student questions or concerns within 72 hours”. CQ = “Create chapter quizzes”. RI = “Reply to each individual student’s posts in the discussion area”. DD = “Participate daily in discussions”. WL = “Provides weekly lectures”.

**p < .01. *p < .05.

Table 4. Indicators with the Highest Frequencies

| Indicator | Frequency | Percent ᵃ |
|---|---|---|
| Responding in a timely manner | 23 | 7.69% |
| Responding within 24 hours | 22 | 7.36% |
| Making requirements clear | 18 | 6.02% |
| Being empathetic | 17 | 5.69% |
| Participating in discussions | 16 | 5.35% |
| Providing feedback | 13 | 4.35% |
| Providing timely feedback | 13 | 4.35% |
| Responding to students | 12 | 4.01% |
| Participating in discussions at least weekly | 10 | 3.34% |
| Being positive and friendly | 9 | 3.01% |

ᵃ Percent is based on 299 responses.

The setup of the class cluster (shown in Figure 1) comprised indicators such as creating an easy-to-navigate and organized course, choosing relevant topics and textbooks, creating a place for students to read announcements and engage in study groups and discussions, and creating clear requirements for assignments and the course overall. Within this cluster, making requirements clear was the most frequently reported indicator (f = 18, 6.02%), far exceeding the relatively low frequency counts of the other indicators within the cluster. Overall, making requirements clear was the third most important indicator. The two indicators with the second highest importance (i.e., the second highest frequency) within the cluster were setting clear expectations and providing online resources (f = 8, 2.68%). Overall, clarity was the most salient concept within the cluster although the object of the clarity ranged in importance. For example, only three students indicated that the higher-order concept of “clarity” was important, while other students specified that clarity was specifically important in assignments (f = 6, 2.01%), topics (f = 1, 0.33%), due dates (f = 4, 1.34%), expectations (f = 8, 2.68%), and requirements (f = 18, 6.02%). Most of the other indicators in the setup cluster were considered important by only a few students or just a single student. For instance, only one student reported that it was important for the instructor to have a personal website.

Figure 1. Concept map of responses that pertained to the instructor setting up the course. The numbers in parentheses indicate the frequency of each response.

The communication cluster (shown in Figure 2) consisted of indicators such as responding to students in a timely manner, being available for students, participating in discussions, and letting students know how they were doing in the course. Within this cluster, communicating with students in a timely manner was the most frequently reported indicator (f = 23, 7.69%). For almost the same number of responses, timeliness meant that the instructor would respond within 24 hours (f = 22, 7.36%). The nature of the instructor’s communication through discussions also varied in terms of importance. Sixteen responses (5.35%) indicated that it was most important for the instructor to participate in discussions, and four responses (1.34%) indicated that it was most important for the instructor to participate in daily discussions. Another 10 responses (3.34%) indicated that it was most important for the instructor to participate in weekly discussions. Getting a response to each individual discussion post was important for some students (f = 6, 2.01%) but of lesser importance than many other forms of communication, such as providing feedback. Providing feedback was the fourth most important indicator within the communication cluster (f = 13, 4.35%) and the sixth most important behavior overall (refer to Table 4). For some students the most salient aspect of getting feedback was its timeliness (f = 13, 4.35%). Other students indicated that it was most important that “feedback and comments are always positive” (f = 7, 2.34%). An equal number of responses indicated that improvement was the most important aspect of the feedback and that it was important that the instructor “provides feedback that helps me understand my strengths and weaknesses” (Arbaugh et al., n.d., item 12).

The communication cluster also included a branch that emphasized the instructor’s availability and the desired mode of availability. Only one student reported that it was important for the instructor to be available for in-person communication. There were also three responses (1.00%) that indicated that it was important for the instructor to be available for telephone communication.

Another branch represented in the communication cluster was the notion of reinforcing community. Only one student, though, specifically mentioned that it was important for the instructor to “create a feeling of community.” A few specific indicators that have commonly been associated with establishing a sense of community, such as “makes me feel comfortable interacting with other course participants” (Arbaugh et al., n.d., item 19) and “demonstrates fairness,” were also represented (f = 1, 0.33% and f = 8, 2.68%, respectively). Only one student reported that the instructor “providing pictures of students or making students' pictures available” was important to him or her.

Figure 2. Concept map of responses that pertained to instructor communication.

The numbers in parentheses indicate the frequency of the response.

The instructor attributes cluster (shown in Figure 3) included elements such as being empathetic and positive toward the students. Being empathetic was the most important indicator within the cluster (f = 17, 5.69%) and the fourth most important indicator overall (refer to Table 4). This indicator, which focused on being considerate of students’ personal obligations, included responses such as “Take[ing] our jobs and busy lives in account when setting class expectations” and “Instructor taking the stress [that] a student feel[s] into consideration” when planning assignments and course activities. The second most important indicator within the cluster was the instructor being positive and friendly (f = 9, 3.01%). This indicator was also the 10th most important instructor behavior overall based on the frequency counts.

Figure 3. Concept map of responses that pertained to instructor attributes.

The numbers in parentheses indicate the frequency of the response.

Discussion

Overall, the ratings of the close-ended items were consistent with the results obtained from the open-ended items. The indicators of instructor presence that were most important to the students dealt with making course requirements clear and being responsive to students’ needs. In addition to valuing the clarity of information presented in the course, students also valued the timeliness of information and feedback. These results were largely congruent with literature on online learning that indicates that a high degree of clarity and communication is essential for student satisfaction (Durrington et al., 2006). Students recognized the importance of understanding what was expected of them in all aspects of the course, including assignment requirements and due dates, and they also valued feedback on their performance (Brinkerhoff et al., 2007; Durrington et al., 2006; Kupczynski et al., 2010).

While the students generally placed high value on communication and the instructor’s responsiveness, they did not place as much importance on synchronous or face-to-face communication. Participating in synchronous chat sessions had the third lowest rating of importance across all of the rated items, but it also had one of the highest variabilities, suggesting that it was very important to at least a few of the students. Based on a significant negative correlation between the number of online courses taken and the importance of chat sessions, the value that students place on this form of communication may wane as they acquire more online course experience. Only a few of the students indicated that the instructor being available by telephone was most important to them. These results are largely consistent with the findings of Brinkerhoff and Koroghlanian (2007), who examined the alignment between the instructional features that students most desired and the features that were actually used in their online courses. At least half of the 249 students who participated in their survey rated “a face-to-face meeting at the beginning of the course (51.3%)” and “a scheduled weekly time for synchronous communication (61.0%)” as either not at all important or somewhat important (Brinkerhoff & Koroghlanian, 2007, p. 386).

Being able to see or hear the instructor received surprisingly low ratings relative to some of the other indicators in the study. Being provided with a video of the instructor had the fourth lowest mean rating across the 64 close-ended items. Being provided with a website containing information about the instructor had the lowest mean rating across the items, suggesting that these methods of enabling students to get to know the instructor were of relatively little value to the students. Getting to know other students, though, through the use of an icebreaker activity was of more importance to the students, although it was still one of the 10 indicators with the lowest mean ratings.

Another finding that was somewhat unexpected was the relative importance of the instructor in establishing and maintaining a sense of community among the students. The importance of reinforcing community was explicitly mentioned by only one person in the open-ended items. Previous research has established a connection between students’ perceptions of teaching presence and their sense of learning community (Shea et al., 2006). The results of the present study indicated that the students did not explicitly perceive that it was important for the instructor to establish and maintain a sense of community. The importance of community was indicated indirectly, though, through the students’ ratings of the close-ended items and some of the open-ended items. For example, “creating a feeling of community” had a relatively high mean of 8.37 although it did not make the top 10 indicators in terms of importance. Other items that were rated highly and are indicators of community were “Gives me a sense of belonging in the course” (Arbaugh et al., n.d., item 14), “Create[s] a feeling of trust and acceptance” and “Makes me feel that my point of view was acknowledged by other course participants” (Arbaugh et al., n.d., item 22). Thus, while the students did not rate the creating of a community as highly important, they did rate some of the indicators within the community construct as relatively high in importance.

Another interesting finding related to teaching presence and community was the representativeness of the indicators. Two of the three higher-order constructs identified in concept mapping (communication and setup of class) included as a subset the three components of the teaching presence construct in the CoI model: instructional design, facilitation of discourse, and direct instruction (Garrison et al., 2000). The other higher-order construct identified in concept mapping (instructor attributes) may potentially be missing from literature on teaching presence. It is possible that the instructor attributes that students find important in online courses are not indicative that the teacher is present but are indicative of the teacher’s “presence”. Teacher presence (i.e., personality traits and dispositions) may have little to do with the level at which a teacher is present in the course. Follow-up research would be needed to further explore this potential relationship.

While the results of the present study largely support existing literature about online learning, there are several limitations to the generalization of these results. Data were provided by a convenience sample of participants, and the results were largely descriptive. Demographic data collected from participants indicated that there was a relatively broad range of experience levels with online courses in terms of the number of courses taken, but the vast majority of the students were from one university and may not be representative of the population of students enrolled in online courses. Also, the vast majority of the participants (81.5%) were graduate students. The low number of undergraduate students (n = 9) did not support statistical comparisons of systematic variation in the ratings based on whether students were graduates or undergraduates. Future research should investigate potential differences between graduates and undergraduates in terms of how they value various aspects of teaching presence. Follow-up research with a larger sample size would also be beneficial for examining how well the importance of various aspects of instructor presence, and of other tasks typically completed by instructors during set-up and delivery of online courses, replicates with other populations. Additionally, follow-up research should examine how these tasks fit into existing models of teaching presence.

Acknowledgements

The authors would like to thank Steve Curda, PhD for creating the online survey instrument.

References

Anderson, T., Rourke, L., Garrison, R., & Archer, W. (2001). Assessing teaching presence in a computer conferencing context. Journal of Asynchronous Learning Networks, 5(2).

Arbaugh, B., Cleveland-Innes, M., Diaz, S., Garrison, D. R., Ice, P., Richardson, J., Shea, P., & Swan, K. (n.d.). Community of Inquiry Survey Instrument (Draft v15). Retrieved from http://www.ncolr.org/jiol/issues/PDF/9.1.2.pdf

Baker, J. D. (2004). An investigation of relationships among instructor immediacy and affective and cognitive learning in the online classroom. Internet and Higher Education, 7, 1–13.

Berg, G. A. (2002). Why distance learning? Higher education administrative practices. Westport, CT: Oryx Press.

Bliss, C. A. & Lawrence, B. (2009). From posts to patterns: A metric to characterize discussion board activity in online courses. Journal of Asynchronous Learning Networks, 13, 1–18.

Brinkerhoff, J. & Koroghlanian, C. M. (2007). Online students’ expectations: Enhancing the fit between online students and course design. Journal of Educational Computing Research, 36(4), 383–393.

Durrington, V. A., Berryhill, A., & Swafford, J. (2006). Strategies for enhancing student interactivity in an online environment. College Teaching, 54(1), 190–193.

Garrison, D. R., Anderson, T., & Archer, W. (2000). Critical inquiry in a text-based environment: Computer conferencing in higher education. The Internet and Higher Education, 2(2–3), 87–105.

Garrison, D. R. & Cleveland-Innes, M. (2005). Facilitating cognitive presence in online learning: Interaction is not enough. American Journal of Distance Education, 19(3), 133–148.

Garrison, D. R., Cleveland-Innes, M., & Fung, T. S. (2010). Exploring causal relationships among teaching, cognitive and social presence: Student perceptions of the community of inquiry framework. The Internet and Higher Education, 13, 31–36.

Hong, K. S., Lai, K. W., & Holton, D. (2003). Students’ satisfaction and perceived learning with a web-based course. Educational Technology & Society, 6(1), 116–124.

Kane, M. & Trochim, W. M. K. (2007). Concept mapping for planning and evaluation. Thousand Oaks, CA: Sage Publications.

Kassinger, F. D. (2004). Examination of the relationship between instructor presence and the learning experience in an asynchronous online environment (Doctoral dissertation). Retrieved from http://scholar.lib.vt.edu/theses/available/etd-12232004-230634/unrestricted/Kassingeretd.pdf

LaPointe, D. & Gunawardena, C. (2004). Developing, testing and refining of a model to understand the relationship between peer interaction and learning outcomes in computer-mediated conferencing. Distance Education, 25(1), 83–106.

Leech, N. L. & Onwuegbuzie, A. J. (2007). An array of qualitative data analysis tools: A call for data analysis triangulation. School Psychology Quarterly, 22(4), 557–584.

Lowenthal, P. R. (in press). Social presence. In P. Rogers, G. Berg, J. Boettcher, C. Howard, L. Justice, & K. Schenk (Eds.), Encyclopedia of distance and online learning (2nd ed.). Retrieved from http://www.patricklowenthal.com/publications/socialpresenceEDOLpre-print.pdf

Mandernach, B. J., Gonzales, R. M., & Garrett, A. L. (2006). An examination of online instructor presence via threaded discussion participation. Journal of Online Learning and Teaching, 2(4), 248–260.

Natriello, G. (2005). Modest changes, revolutionary possibilities: Distance learning and the future of education. Teachers College Record, 107(8), 1885–1904.

Novak, J. D. & Gowin, B. D. (1998). Learning how to learn. Cambridge, UK: Cambridge University Press.

Palloff, R. M. & Pratt, K. (2003). The virtual student: A profile and guide to working with online learners. San Francisco: Jossey-Bass.

Pawan, F., Paulus, T., Yalsin, S., & Chang, C. (2003). Online learning: Patterns of engagement and interaction among in-service teachers. Language Learning & Technology, 7(3), 119–140.

Shea, P. & Bidjerano, T. (2009). Community of Inquiry as a theoretical framework to foster “epistemic engagement” and “cognitive presence” in online education. Computers & Education, 52(3), 543–553.

Shea, P., Li, C. S., Swan, K., & Pickett, A. M. (2005). Developing learning community in online asynchronous college courses: The role of teaching presence. Journal of Asynchronous Learning Networks, 9. Retrieved from http://www.sloan-org/publications/jaln/v9n4/pdf/v9n4_shea.pdf

Shea, P., Li, C. S., & Pickett, A. (2006). A study of teaching presence and student sense of learning community in fully online and web-enhanced college courses. The Internet and Higher Education, 9(3), 175–190.

Swan, K. & Shih, L. F. (2005). On the nature and development of social presence in online course discussions. Journal of Asynchronous Learning Networks, 9(3), 115–136.

Swan, K. P., Richardson, J. C., Ice, P., Garrison, D. R., Cleveland-Innes, M., & Arbaugh, J. B. (2008). Validating a measurement tool of presence in online communities of inquiry. e-mentor, 2(24). Retrieved from http://www.ementor.edu.pl/artykul_v2.php?numer=24&id=543

Tabatabaei, M., Schrottner, B., & Reichgelt, H. (2006). Target populations for online education. International Journal on E-learning, 5(3), 401–414.

Varnhagen, S., Wilson, D., Krupa, E., Kasprzak, S., & Hunting, V. (2005). Comparison of student experiences with different online graduate courses in health promotion. Canadian Journal of Learning and Technology, 31, 99–117.

Wise, A., Chang, J., Duffy, T., & del Valle, R. (2004). The effects of teacher social presence on student satisfaction, engagement, and learning. Journal of Educational Computing Research, 31(3), 247–271.

Wu, D., & Hiltz, S. R. (2004). Predicting learning from asynchronous online discussions. Journal of Asynchronous Learning Networks, 8, 139–152.
