Developing a Valid and Reliable Instrument to Evaluate Users’ Perception of Web-Based Learning in an Australian University Context


Si Fan

Faculty of Education

University of Tasmania

Launceston, Tasmania 7248 AU

sfan@utas.edu.au

Quynh Lê

Department of Rural Health

University of Tasmania

Launceston, Tasmania 7250 AU

Quynh.Le@utas.edu.au

Abstract

The Web has permeated many aspects of modern society, and its role in education has attracted a great deal of attention. As the two key players in web-based education, students and teaching staff are end-users whose views and perceptions of the significance of the Web should inform the implementation of web-based education. Valid and reliable instruments are needed to capture these views. This paper describes the development of a research instrument for measuring the views, behaviours and attitudes of students and staff regarding the role of the Web in a university context. The pilot study involved 92 participants from the University of Tasmania. Cronbach’s Alpha coefficients and exploratory factor analysis were used to assess the reliability and construct validity of the instrument. The results indicated that the questionnaire is a reliable and valid tool for researchers and courseware developers to evaluate web-based learning in this context, and potentially in other Australian universities. The discussion also provides some insights into the complex relationship between technology and learning in general, and between user-friendliness and learner-friendliness in particular.

Keywords: web-based education, web-based technologies, web-based resources, e-learning, tertiary education.


Introduction

Due to the rapid growth of information technology and multimedia, computers and networks have become increasingly important in many areas of modern society, including teaching and learning (Pahl, 2008). Schools and universities have adopted web-based technologies to support their students in both traditional coursework and online learning. As web-based education diffuses across countries, educational levels, universities and disciplines, the question for Australian universities is no longer whether to adopt web-based learning, but how to use the Web to better assist their students. Because students and teaching staff are the end-users of web-based technologies, there is a need to examine how they perceive these resources in everyday learning and teaching practice, and effective tools with established validity and reliability are needed to measure these perceptions. This paper discusses the development of a questionnaire to investigate how the Web is adopted and evaluated by its end-users in an educational context.

Background

This study took place at the University of Tasmania (UTAS), where diverse uses of the Web in teaching and learning are valued (University of Tasmania, 2010). The university has adopted a central courseware platform, the My Learning Online (MyLO) system, and a variety of other web-based resources to support teaching and learning. While the wider study aimed to capture a complete picture of web-based education within the university and involved 602 participants (502 students and 100 teaching staff), this paper focuses on one component: a pilot study with 92 participants aimed at developing a valid and reliable questionnaire for use in the main study.

The Web has become an important application in the field of education. Web-based learning has become more feasible and acceptable for exploring innovative frontiers in lifelong education (De Moor, 2007), and it has presented academics with a range of opportunities to support and enhance their curricula (Aggarwal, 2003; Aggarwal & Legon, 2008; Sauter, 2003). According to Anderson (2009), the worldwide corporate web-based education market was valued at $17.2 billion in 2008. Rather than teachers being the only resource in classrooms, web-based technologies are now adopted in both on- and off-campus learning, thereby contributing to the enhancement of virtual universities (Le & Le, 1997). Once implemented, the Web and web-based technologies often become indispensable, since they give staff and learners much easier access to resources and a more convenient way to teach and learn.

As the Web has permeated educational discourse at various levels, a clear understanding of the views and beliefs of students and staff with respect to the Web is essential. Because they are the key players in the learning process, their feedback is vital for improving the effectiveness of web-based education. Previous research has discussed the implementation of web-based technologies from an instrumental perspective, for instance, how the Web is used as a learning resource and which types of web-based technologies are adopted (Esnault, 2008; Mari, Genone, & Mari, 2008). However, to better cater to students’ needs, the university needs to understand the views of both students and staff on the Web as a learning resource, particularly their evaluative judgements.

Developing reliable and valid instruments for educational research is not new (Le, Spencer, & Whelan, 2008). A variety of tools have been designed to evaluate the courseware used in tertiary education institutions or to investigate the instrumentality of web-based technologies (Le & Le, 2007; Squires & McDougall, 1994). However, only a few have been developed to investigate the views of students and staff on the Web as a learning resource, and this study aims to help fill that gap.

The validity and reliability of a research instrument are particularly emphasised in this paper. Reliability refers to how consistently a tool measures a particular phenomenon: a measurement is consistent if it produces similar results when used again in similar situations. Validity refers to whether a measurement tool measures what it claims to measure. Both are crucial if the aims and objectives of the entire study are to be achieved (Creswell, 2009; Neuman, 2004). The purpose of this paper is to present the strategies used in developing a valid and reliable research instrument to support the aims and objectives of the main study.

Methods

With permission from the Heads of Schools of the University of Tasmania, all students and teaching staff at the University were invited through each school’s email list to participate in this study. Participants were recruited using multiple strategies, including flyers, mass lectures, and questionnaire distribution at the reception area of each faculty/school. As all faculties/schools at this university incorporate web-based resources in teaching and learning, they were motivated to participate so that their perspectives could be considered and accommodated. As a result, participants were drawn from the Faculties/Schools of Arts, Business, Education, Health Science, Law, Science, Computing, Engineering and Technology, and the Australian Maritime College. A questionnaire was used to collect quantitative data on views of the Web as a learning resource. The questionnaire items were formulated on the basis of relevant theories and discussions in the e-learning literature, and were constructed, modified, clarified and organised through consultation with academics, developers and researchers in the areas of e-learning and web-based technology. This process was intended to establish the content validity of individual items.

Ethical approval for the study was granted by the University of Tasmania Human Research Ethics Committee prior to commencement of the study.

The Early Stage of the Questionnaire Development

The initial questionnaire items were developed according to the research objectives and the theories reviewed in the relevant literature. The first section of the questionnaire comprised seven questions designed to collect participants’ biographical information. The second section included 40 scaled items on participants’ views of and attitudes towards e-learning, as well as an open-ended section. The 40 scaled items were rated on a 5-point Likert scale (Likert, 1932). Participants were asked to indicate how strongly they agreed or disagreed with each statement (1 = Strongly Agree to 5 = Strongly Disagree) or how frequently they used the Web for different academic purposes (1 = Very Often to 5 = Never), selecting a single option for each question or statement (see Appendix 1).

To check the consistency of responses to the questionnaire, a pair of questions with opposite meanings was included. Question 21 stated that web-based learning enhances interpersonal relationships between staff and students, whereas Question 24 stated that web-based learning lacks interpersonal interaction. A participant who selects “1 = Strongly Agree” for Question 21 would therefore be expected to select “5 = Strongly Disagree”, or close to it, for Question 24.
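Reverse-worded items such as Question 24 are normally reverse-coded before scale scores or reliability statistics are computed, so that a given score means the same thing on every item. The Python sketch below shows one way to do this on the 1 to 5 scale described above and to flag respondents whose answers to the opposite-worded pair do not mirror each other. The function names and the one-point tolerance are illustrative assumptions, not part of the original instrument.

```python
def reverse_code(score: int, scale_max: int = 5) -> int:
    """Reverse a 1..scale_max Likert score (1 <-> 5, 2 <-> 4, 3 stays 3)."""
    if not 1 <= score <= scale_max:
        raise ValueError(f"score {score} outside 1..{scale_max}")
    return scale_max + 1 - score


def is_consistent(q21: int, q24: int, tolerance: int = 1) -> bool:
    """Check that answers to an opposite-worded pair mirror each other.

    After reverse-coding Q24, a consistent respondent should give (roughly)
    the same score to both items. The tolerance is a hypothetical choice.
    """
    return abs(q21 - reverse_code(q24)) <= tolerance


# (Q21, Q24) answers from three hypothetical respondents
pairs = [(1, 5), (2, 4), (1, 1)]
flags = [is_consistent(a, b) for a, b in pairs]
print(flags)  # [True, True, False]
```

The third respondent agrees strongly with both statements, which is the kind of contradictory pattern the paired items are meant to reveal.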

The Sample

After item selection and modification, the questionnaire was tested with a sample of 92 participants (60 students and 32 teaching staff). The questionnaire was also presented to a group of five academics for feedback to enhance its content validity. Data were collected over a two-month period. Participants were also invited to comment on the clarity of the language and the logical organisation of the questionnaire items, and were encouraged to make recommendations for the final version of the instrument.

Statistical Methods

Responses to the scaled items were entered, coded and analysed using the Statistical Package for the Social Sciences (SPSS), version 16.0, to assess reliability and construct validity.

The reliability of the 40 scaled items was assessed using Cronbach’s Alpha. Cronbach’s Alpha coefficient estimates the internal consistency of a set of scaled items from the number of items and their average inter-item correlation (Q. Le et al., 2008). It is considered a fundamental measure of the reliability of research instruments (Pallant, 2007). Together with item-level statistics such as corrected item-total correlations, the analysis shows which questionnaire items are related to each other and which items should be removed or changed. According to Nunnally (1967), Cronbach’s Alpha values above 0.6 are considered acceptable.
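For reference, Cronbach’s Alpha for k items is k/(k - 1) × (1 - sum of item variances / variance of total scores); the standardised form, k·r̄ / (1 + (k - 1)·r̄), makes the link to the average inter-item correlation r̄ explicit. The following is a minimal pure-Python sketch of both formulas, assuming a complete respondents-by-items score matrix with no missing values; it is an illustration, not the SPSS procedure used in the study.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_columns = list(zip(*scores))             # one tuple of scores per item
    sum_item_var = sum(variance(col) for col in item_columns)
    totals = [sum(row) for row in scores]         # each respondent's scale total
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def standardised_alpha(k: int, mean_r: float) -> float:
    """Alpha expressed through k items and their average inter-item correlation."""
    return k * mean_r / (1 + (k - 1) * mean_r)


print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))  # 1.0 (two identical items)
print(round(standardised_alpha(40, 0.2), 3))      # 0.909
```

The second call illustrates why alpha tends to be high for long scales: with 40 items, an average inter-item correlation of only 0.2 already gives an alpha of about 0.91.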

After the reliability analysis, the construct validity of the questionnaire items was examined using exploratory factor analysis, which involved two steps: factor extraction and factor rotation. Before extraction and rotation, the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy was calculated to assess whether the data were suitable for factor analysis.
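The overall KMO statistic compares item correlations with partial correlations: it is the sum of squared correlations over all item pairs i ≠ j, divided by that same sum plus the sum of squared partial correlations, where the partial correlations come from the inverse of the correlation matrix. Values near 1 suggest the correlations are largely explained by shared factors. Below is a sketch using NumPy (an assumption; the study used SPSS), together with the descriptive bands Kaiser (1974) attached to KMO values. The simulated data are hypothetical.

```python
import numpy as np


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy.

    data: respondents x items matrix of numeric scores (needs more
    respondents than items so the correlation matrix is invertible).
    """
    r = np.corrcoef(data, rowvar=False)          # item-by-item correlations
    inv = np.linalg.inv(r)
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale                       # partial correlations
    off_diag = ~np.eye(r.shape[0], dtype=bool)   # select only i != j entries
    r2 = np.sum(r[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + p2))


def kaiser_kmo_label(value: float) -> str:
    """Descriptive bands proposed by Kaiser (1974) for the overall KMO."""
    for cutoff, label in [(0.9, "marvelous"), (0.8, "meritorious"),
                          (0.7, "middling"), (0.6, "mediocre"),
                          (0.5, "miserable")]:
        if value >= cutoff:
            return label
    return "unacceptable"


# Hypothetical data: 200 respondents, 6 items driven by one common factor
rng = np.random.default_rng(0)
common = rng.normal(size=(200, 1))
scores = common + 0.6 * rng.normal(size=(200, 6))
value = kmo(scores)
print(round(value, 3), kaiser_kmo_label(value))
```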

Factor extraction and factor rotation were carried out on the 40 scaled items of the questionnaire, using Principal Component Analysis for extraction and Varimax for rotation. To decide how many factors to retain, Cattell (1966) recommends a scree plot, in which each eigenvalue is plotted against the factor with which it is associated; all factors with eigenvalues in the sharp descent of the plot, before the eigenvalues start to level off, are retained. According to Cattell (1966), this criterion yields accurate results more frequently than the eigenvalue-greater-than-1 criterion. After the factors have been extracted, rotation presents the pattern of loadings in a form that is easier to interpret (Pallant, 2007). Rotation recalculates the degree to which each variable loads onto the extracted factors, with the aim of achieving a simple structure in which each variable loads strongly on only one component and each component is represented by a number of strongly loading variables (Field, 2000; Pallant, 2007).
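The extraction and rotation steps can be sketched with NumPy: principal components are the eigenvectors of the item correlation matrix, the sorted eigenvalues are what a scree plot displays, and varimax then rotates the retained loadings orthogonally towards simple structure. This is a minimal sketch on simulated two-factor data, assuming complete numeric responses; the varimax routine follows the common SVD-based iteration with Kaiser normalisation and is not claimed to reproduce SPSS output digit for digit.

```python
import numpy as np


def pca_extract(data: np.ndarray, n_factors: int):
    """Principal component extraction from the item correlation matrix.

    Returns all eigenvalues in descending order (the values a scree plot
    shows) and the unrotated loadings of the first n_factors components.
    """
    r = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(r)         # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    loadings = eigvecs[:, :n_factors] * np.sqrt(eigvals[:n_factors])
    return eigvals, loadings


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal varimax rotation with Kaiser normalisation."""
    h = np.sqrt(np.sum(loadings ** 2, axis=1, keepdims=True))  # row norms
    a = loadings / h                             # Kaiser normalisation
    p, k = a.shape
    rot = np.eye(k)
    prev = 0.0
    for _ in range(max_iter):
        lam = a @ rot
        target = lam ** 3 - lam @ np.diag(np.sum(lam ** 2, axis=0)) / p
        u, s, vt = np.linalg.svd(a.T @ target)
        rot = u @ vt
        crit = np.sum(s)
        if prev != 0.0 and crit < prev * (1 + tol):
            break                                # criterion stopped improving
        prev = crit
    return (a @ rot) * h                         # undo the normalisation


# Hypothetical data: 300 respondents, 6 items, two latent factors
rng = np.random.default_rng(1)
factors = rng.normal(size=(300, 2))
pattern = np.array([[0.8, 0.0], [0.7, 0.0], [0.75, 0.0],
                    [0.0, 0.8], [0.0, 0.7], [0.0, 0.75]])
data = factors @ pattern.T + 0.5 * rng.normal(size=(300, 6))

eigvals, unrotated = pca_extract(data, n_factors=2)
print(np.round(eigvals, 2))          # look for the "elbow" in these values
print(int(np.sum(eigvals > 1)))      # eigenvalue-greater-than-1 count
rotated = varimax(unrotated)
print(np.round(rotated, 2))
```

Because varimax is an orthogonal rotation, it redistributes variance among the retained factors without changing each item’s communality or the total variance the factors explain.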

Results

A total of 105 people collected the questionnaire and 92 completed and returned it, giving a response rate of 87.6% (n/N = 92/105). As the purpose of the pilot study was to test the reliability and validity of the instrument rather than to examine participants’ views on the questionnaire items, a sample of 92 was considered adequate. Participants’ characteristics are presented in Table 1.
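The response rate is the number of returned questionnaires divided by the number collected by potential participants. A trivial Python check of the arithmetic, with an illustrative function name:

```python
def response_rate(returned: int, distributed: int) -> float:
    """Percentage of distributed questionnaires returned, to one decimal place."""
    if distributed <= 0 or not 0 <= returned <= distributed:
        raise ValueError("need distributed > 0 and 0 <= returned <= distributed")
    return round(100 * returned / distributed, 1)


print(response_rate(92, 105))  # 87.6
```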

Table 1. Participants’ characteristics

| | Students % (n/N) | Teaching staff % (n/N) |
|---|---|---|
| Academic faculties/schools/disciplines | | |
| | 16.3 (15/92) | 8.7 (8/92) |
| Science/Engineering/Technology & AMC | 16.3 (15/92) | 8.7 (8/92) |
| | 16.3 (15/92) | 8.7 (8/92) |
| | 16.3 (15/92) | 8.7 (8/92) |
| Gender | | |
| | 31.5 (29/92) | 17.4 (16/92) |
| | 33.7 (31/92) | 17.4 (16/92) |
| Length of teaching/learning at UTAS | | |
| | 15.2 (14/92) | 3.3 (3/92) |
| | 29.3 (27/92) | 10.9 (10/92) |
| | 20.7 (19/92) | 20.7 (19/92) |

Reliability

The reliability analysis showed a Cronbach’s Alpha coefficient of 0.90, indicating high internal consistency. However, the following items had the lowest corrected item-total correlations (r):

- Q15. Web-based learning should be based on sound educational principles (r = 0.07);

- Q18. Learners can be easily lost in web-based learning (r = -0.04);

- Q19. Using the Web saves a great deal of time on finding learning resources (r = 0.26);

- Q30. Learners should have some basic IT knowledge before embarking on web-based learning (r = 0.17); and

- Q31. Web-based learning can be threatening to learners with poor IT skills (r = 0.13).

The reliability analysis was rerun after removing each of these items in turn until all five had been eliminated from the scale, and Cronbach’s Alpha coefficient improved from 0.90 to 0.914. This supported the exclusion of items Q15, Q18, Q19, Q30 and Q31, which were therefore removed from the final draft of the questionnaire.

Validity

Content validity

To ensure the content validity of the instrument, the question items were discussed with a group of five researchers and experts in the e-learning field. Changes were made to the questionnaire based on the feedback of these experts. For example, Question 7 was changed from “Knowledge of IT” to “Knowledge of Information Technology (IT)” and Question 10 was changed from “The Web provides powerful resources for gaining latest articles and news” to “The Web provides powerful resources for gaining academic knowledge”.

Construct validity

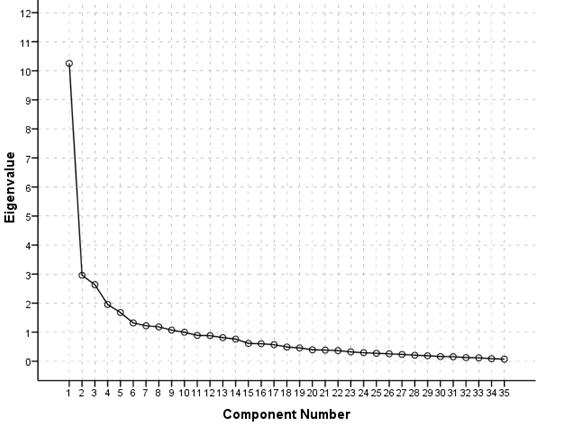

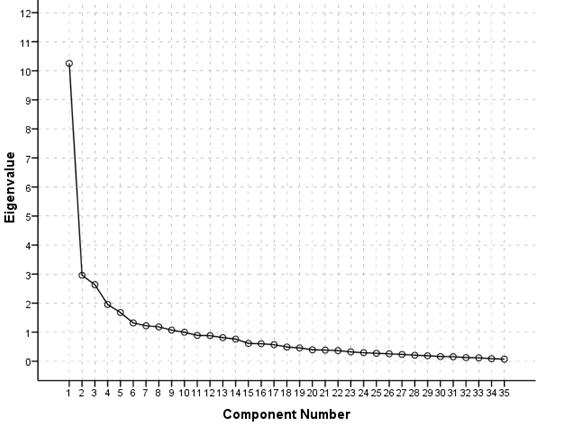

The factor analysis sample comprised 60 students and 32 teaching staff, and the KMO measure of sampling adequacy was 0.767. Kaiser (1970, 1974) recommends that KMO values greater than 0.5 be considered acceptable, so the value of 0.767 indicates satisfactory sampling adequacy for the questionnaire. The scree plot of eigenvalues for the 40 scaled questionnaire items is shown in Figure 1, and Table 2 presents the factor loadings for the questionnaire items after factor extraction and rotation.

Figure 1. Scree plot of eigenvalues for the scaled questionnaire items

The scree plot in Figure 1 shows a sharp descent from the first to the fifth eigenvalue and a levelling off from the sixth onwards. Five factors were therefore retained for rotation. The result of this rotation is shown in Table 2.

The factor extraction and rotation indicate that the five factors explain 55.66% of the total variance in the data. The highest factor loading of each scaled questionnaire item is listed in Table 2. Some items loaded on more than one factor; each of these was assigned to the factor on which it had the highest loading. Question 14 does not appear in Table 2 because none of its loadings reached 0.4, indicating that it was not clearly associated with any of the factors retained after rotation. Question 24 had a negative loading of -0.46, meaning that responses to it ran in the opposite direction to those on the other items of Factor 2: participants who endorsed the social benefits of web-based learning tended to disagree that it lacks interpersonal interaction. This met the researchers’ expectation, as the question was designed to have the opposite meaning to Question 21.
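Two small calculations underlie the paragraph above: the percentage of variance explained (for principal components of a correlation matrix of p items, the retained eigenvalues divided by p), and the assignment of each item to the factor on which it loads most highly, with items below the 0.4 cut-off left unassigned, as happened to Question 14. A pure-Python sketch with hypothetical loadings; the item names QA to QC are made up. Absolute values are compared so that a negative loading such as Question 24’s still counts.

```python
from typing import Mapping, Optional, Sequence


def percent_variance(eigenvalues: Sequence[float], n_factors: int) -> float:
    """Percentage of total variance explained by the n_factors largest eigenvalues.

    With PCA on a correlation matrix, the eigenvalues of all p items sum to p,
    so every eigenvalue must be supplied.
    """
    top = sorted(eigenvalues, reverse=True)[:n_factors]
    return 100 * sum(top) / len(eigenvalues)


def assign_items(loadings: Mapping[str, Sequence[float]],
                 threshold: float = 0.4) -> dict[str, Optional[int]]:
    """Assign each item to the factor (1-based) with its largest |loading|.

    Items whose largest absolute loading is below threshold get None.
    """
    assigned = {}
    for item, row in loadings.items():
        best = max(range(len(row)), key=lambda j: abs(row[j]))
        assigned[item] = best + 1 if abs(row[best]) >= threshold else None
    return assigned


# Hypothetical loadings on two factors for three made-up items
example = {"QA": [0.72, 0.31], "QB": [0.12, -0.46], "QC": [0.25, 0.33]}
print(assign_items(example))                       # {'QA': 1, 'QB': 2, 'QC': None}
print(percent_variance([2.0, 1.0, 0.5, 0.5], 1))   # 50.0
```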

This exploratory factor analysis helped to determine the construct validity of the questionnaire. It also helped to determine whether a single dimension or multiple dimensions underlie the 40 scaled questionnaire items, and whether any items not associated with the identified factors should be eliminated from the measure (Green et al., 2000). After the factor analysis, the scaled items were rearranged and regrouped according to the factor loadings obtained from extraction and rotation. The finalised questionnaire comprised six sections, five of which contained scaled items (see Appendix 2).

Table 2. Factor loadings for the scaled questionnaire items

| Items | Question content | Factor loading |
|---|---|---|
| | Factor 1: Instrumentality of the Web in different academic areas | |
| Q.13 | The Web is helpful in developing students’ problem-solving skills. | 0.40 |
| Q.33 | How often is the Web used to support students’ learning in your course? | 0.47 |
| Q.34 | How often is the Web used as a communication tool in your course? | 0.51 |
| Q.35 | How often is the Web used to find reading materials in your course? | 0.56 |
| Q.36 | How often do you participate in online discussion in your course? | 0.75 |
| Q.37 | How often do you get feedback via the Web in your course? | 0.78 |
| Q.38 | How often do you share learning resources via the Web with other/your students? | 0.77 |
| Q.39 | How often is the Web used as an assessment tool in your course? | 0.76 |
| Q.40 | How often is the Web used as a management tool in your course? | 0.62 |
| | Factor 2: The Web as a social enhancement platform | |
| Q.16 | Web-based learning can replace face-to-face learning. | 0.75 |
| Q.17 | Learning via the Web is more motivating than learning face-to-face. | 0.76 |
| Q.21 | Web-based learning enhances interpersonal relationships between lecturers and students. | 0.77 |
| Q.22 | Online communication among students and lecturers is more effective than face-to-face communication. | 0.68 |
| Q.23 | Web-based learning provides good facilities for interacting with lecturers & other students. | 0.62 |
| Q.24 | Web-based learning lacks interpersonal interaction. | -0.46 |
| Q.45 | The MyLO system can replace face-to-face teaching. | 0.44 |
| | Factor 3: Effectiveness of the MyLO system | |
| Q.12 | The Web can provide useful ways of giving feedback to students. | 0.48 |
| Q.41 | Every course should include MyLO in teaching and learning. | 0.67 |
| Q.42 | The lecturers use the MyLO system effectively in my course. | 0.69 |
| Q.43 | The MyLO system is learner-friendly. | 0.77 |
| Q.44 | Most functions of the MyLO system are useful. | 0.77 |
| Q.46 | The information of my course can be easily found in the MyLO system. | 0.64 |
| Q.47 | Many learning tasks are done via the MyLO system in my course. | 0.44 |
| | Factor 4: The Web and learners | |
| Q.20 | The Web creates an interactive learning environment. | 0.47 |
| Q.25 | The Web can enhance independent learning. | 0.58 |
| Q.26 | The Web can accommodate learners having different learning styles. | 0.73 |
| Q.27 | The Web can accommodate learners from different cultural backgrounds. | 0.73 |
| Q.28 | The Web can encourage learners to take an active part in learning. | 0.52 |
| Q.29 | Web-based learning provides learners with great flexibility. | 0.45 |
| Q.32 | Using the Web can enhance students’ learning outcomes. | 0.63 |
| | Factor 5: The Web as a teaching and learning resource | |
| Q.8 | The Web is a good tool for teaching and learning. | 0.68 |
| Q.9 | The Web can provide good facilities for exploring in learning. | 0.60 |
| Q.10 | The Web provides powerful resources for gaining academic knowledge. | 0.81 |
| Q.11 | The Web can provide useful ways of assessing students’ learning. | 0.57 |

Extraction Method: Principal Component Analysis; Rotation Method: Varimax with Kaiser Normalisation (rotation converged in 6 iterations).

Discussion

The study team gained valuable information and feedback from the participants and from professionals/experts on various aspects of the instrument design, such as wording, questionnaire structure and completion time. Most importantly, this feedback from the pilot study helped to reinforce the content validity of the instrument.

Cronbach’s Alpha coefficient and factor analysis helped to assess and enhance the reliability and validity of the research instrument. The internal consistency of the scaled items was high, and the findings indicated that the questionnaire items were suitable for examining participants’ views of the Web as a learning resource in a university context. Five factors emerged from the analysis, each defined by the shared content of the questionnaire items loading on it.

Factor 1: This factor identifies the instrumentality of the Web as an essential component of the learning process. Nine question items loaded onto this factor. It reflects an important step in extending the use of IT in on-campus education to online resources on the grounds of instrumentality. The endorsement of instrumentality as a theme challenges the traditional dichotomy between on-campus and off-campus learning: online learning should be an important aspect of on-campus education, not just of distance or off-campus teaching and learning.

Like many other Australian universities and education institutions, the University of Tasmania is moving towards web-based hybrid courses, which are more supportive of a constructivist, collaborative, student-centred pedagogy, and away from face-to-face-only courses, which can be objectivist and sometimes teacher-centred (Hiltz & Turoff, 2005). Web-based technologies can facilitate this shift by supporting a wide range of learning activities for various learning purposes (Squires & McDougall, 1994; Wilss, 1997). This factor signifies the role of the Web in achieving a hybrid and more collaborative learning environment.

Factor 2: This factor emphasises the significance of the Web as a social enhancement platform. Seven questions loaded onto this factor, which can assist researchers in examining the role of the Web as a social enhancement tool. The Web as a means of enhancing communication and interaction for educational purposes has been emphasised by a number of researchers (Hsu, Marques, Hamza, & Alhalabi, 1999; Khan, 1998). Students’ social interaction in learning is seen as one of the main characteristics of constructivist theories that can be applied to web-based learning (Leflore, 2000; Piccoli, Ahmad, & Ives, 2001). Regular contact between students and staff, and among students themselves, is beneficial in promoting students’ motivation and involvement (Chickering & Ehrmann, 1996). Online activities such as group emailing, discussion boards and video-conferencing are used to promote social interaction. Emerging web-based social platforms such as Facebook and Twitter can also contribute to online learning, although they can pose challenges in terms of privacy protection and distraction from learning.

Factor 3: This factor focuses on end-users’ views and usage of the My Learning Online (MyLO) system adopted by all faculties at UTAS. Seven items loaded onto this factor. In large education institutions such as universities, web-based learning systems serve to support teaching across all courses, as well as to create independent asynchronous courses that allow students to study towards degrees off-campus (De Moor, 2007). When used appropriately, web-based courseware has great potential to promote interaction between teachers and learners and to maximise learning outcomes (Wills & McNaught, 1996).

As the central courseware platform at UTAS, the MyLO system plays an essential role in delivering course content and in assessment and student management. It is therefore important to understand how it is perceived and evaluated by its end-users. MyLO offers a variety of innovative functions, such as discussion forums and tools for recording lectures (e.g., Lectopia). However, these functions do not necessarily lead to innovative teaching and learning; MyLO, like any web-based platform, should therefore be embedded in a meaningful learning environment.

Factor 4: This factor highlights the role of the Web as a tool for developing students’ learning skills and facilitating their learning practices. Seven questions loaded onto this factor. It shifts the focus of attention to students, who are considered central to web-based learning practice rather than passive recipients of information (Myhill, Le, & Le, 1999; Squires & McDougall, 1994). Learning is a meaning-making process in which students should be encouraged to make sense of, and to challenge, knowledge through a variety of learning experiences. The Web has opened up new windows and frontiers for educators and learners to explore.

Because of its adaptability to various learning styles, paces and content, web-based learning has greater potential than face-to-face learning to satisfy students with varied learning needs and preferences. This adaptability is desirable for Australian universities, given the high level of diversity in their student populations, backgrounds and preferred learning styles. Web-based learning should therefore be designed to make the most of this adaptability, so that the Web can become a more powerful resource for enhancing learners’ personalised learning, collaborative learning and problem-solving skills.

Factor 5: This factor focuses on the significance of the Web as a teaching and learning resource. Four questions loaded onto this factor, indicating the usefulness of the Web as a powerful source for teaching and learning. However, the key challenge for educators is how to match the power of technology with the power of learning. As Biggs (2003) notes, universities and educational institutions are concerned with educational technology that can help educators achieve their goals in managing learning, engaging students and enabling off-campus learning. As educational technologies are the platform through which courses are delivered, their effectiveness influences teaching and learning practices. It is therefore important for the university to know how its students and staff view the educational value of the Web in order to provide a more meaningful web-based learning environment for future learners.

In summary, this paper has addressed two main aspects. Firstly, a research questionnaire was developed and validated to assist researchers in courseware and e-learning evaluation in a university context. This instrument can also be revised for use in similar learning contexts to accommodate discourse variations. Secondly, the study identified five thematic categories (i.e., factors) covering various aspects of a web-based learning environment, providing educational insights that need to be taken into account to enhance the quality of computer-supported learning in general and web-based learning in particular. After the pilot study, this questionnaire was used with 502 students and 100 teaching staff in the main study. Analysis of the data obtained with this tool showed that participants strongly recognised and positively evaluated the adoption of web-based technologies in this particular university context.

Conclusion

The Web has become not only an important resource in teaching and learning but also an inspiring phenomenon in educational discourse. However, the contribution of the Web to teaching and learning is valued differently depending on factors such as teaching and learning styles, level of support, the theoretical perspectives of users and the quality of user-friendliness. Critical consideration should therefore be given to these factors when adopting the Web in university teaching and learning, and the views and perceptions of students and staff on the significance of the Web in their real-world teaching and learning should be taken into account. To elicit their evaluative feedback, a questionnaire was developed that takes these factors into account and, most importantly, whose validity and reliability have been tested in a pilot study. It is hoped that this instrument can open many insightful windows into the complexity of web-based teaching and learning. Most importantly, it can assist evaluators and educators in characterising the attitudes of students and teaching staff towards the implementation of web-based education within Australian university contexts.

Acknowledgement

The authors of this paper would like to thank Dr Thao Lê and Cecilia Chiu for their invaluable comments.

References

Aggarwal, A. K. (2003). A guide to eCourse management: The stakeholders' perspective. In A. K. Aggarwal (Ed.), Web-based education: Learning from experience (pp. 1-23). Hershey: IRM Press.

Aggarwal, A. K., & Legon, R. (2008). Web-based education diffusion: A case study. In L. Esnault (Ed.), Web-based education and pedagogical technologies: Solutions for learning applications (pp. 303-328). Hershey: IGI Global.

Anderson, C. (2009). Worldwide and U.S. corporate eLearning 2009–2013 forecast: cost savings and effectiveness drive slow market. Retrieved 6th June, 2010, from http://www.idc.com/getdoc.jsp?containerId=219499

Biggs, J. (2003). Teaching for quality learning at university: What the student does (2nd ed.). Maidenhead: Open University Press.

Cattell, R. B. (1966). The scree test for the number of factors. Multivariate Behavioral Research, 1(2), 245-276.

Chickering, A. W., & Ehrmann, S. C. (1996). Implementing the seven principles: Technology as Lever. Retrieved 6th June, 2010, from http://www.tltgroup.org/programs/seven.html

Creswell, J. W. (2009). Research design: Qualitative, quantitative, and mixed methods approaches (3rd ed.). Los Angeles: SAGE Publications.

De Moor, A. (2007). A practical method for courseware evaluation. Paper presented at the 2nd International Conference on the Pragmatic Web Oct 22-23, 2007.

Esnault, L. (2008). Preface. In L. Esnault (Ed.), Web-based education and pedagogical technologies: Solutions for learning applications (pp. viii-xxiii). Hershey: IGI Global.

Field, A. (2000). Discovering statistics using SPSS for Windows: Advanced techniques for the beginner. London: SAGE Publications.

Green, S. B., Salkind, N. J., & Akey, T. M. (2000). Using SPSS for Windows: Analysing and understanding data (2nd ed.). Upper Saddle River, New Jersey: Prentice Hall.

Hiltz, S. R., & Turoff, M. (2005). Education goes digital: The evolution of online learning and the revolution in higher education. Communications of the ACM, 48(10), 59-64.

Hsu, S., Marques, O., Hamza, M. K., & Alhalabi, B. (1999). How to design a virtual classroom: 10 easy steps to follow. T.H.E. Journal, 27(2), 96-104.

Kaiser, H. F. (1960). The application of electronic computers to factor analysis. Educational and Psychological Measurement, 20(1), 141-151.

Kaiser, H. F. (1970). A second generation Little Jiffy. Psychometrika, 35(4), 401-415.

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika, 39(1), 31-36.

Khan, B. H. (1998). Web-based instruction (WBI): An introduction. Educational Media International, 35(2), 63-71.

Le, Q., & Le, T. (2007). Evaluation of educational software: Theory into practice. In J. Sigafoos & V. Green (Eds.), Technology and teaching (pp. 1-10). New York: Nova Science Publishers.

Le, Q., Spencer, J., & Whelan, J. (2008). Development of a tool to evaluate health science students' experiences of an interprofessional education (IPE) programme. Annals of the Academy of Medicine, Singapore, 37(12).

Le, T., & Le, Q. (1997). Web-based evaluation of courseware. Paper presented at ASCILITE, Perth.

Leflore, D. (2000). Theory supporting design guidelines for web-based instruction. In B. Abbey (Ed.), Instructional and cognitive impacts of Web-based education. Hershey, PA: Idea Group Publishing.

Likert, R. (1932). A technique for the measurement of attitudes. New York: Archives of Psychology.

Mari, C., Genone, S., & Mari, L. (2008). E-learning and new teaching scenarios: The mediation of technology between methodologies and teaching objectives. In L. Esnault (Ed.), Web-based education and pedagogical technologies: Solutions for learning applications (pp. 17-36). Hershey: IGI Global.

Myhill, M., Le, T., & Le, Q. (1999). Development of internet TESOL courseware. Paper presented at the Fourth International Conference on Language and Development. Retrieved from www.languages.ait.ac.th/hanoi_proceedings/marion.htm

Neuman, W. L. (2004). Basics of social research: Qualitative and quantitative approaches. Boston: Pearson Education.

Nunnally, J. C. (1967). Psychometric theory. New York: McGraw-Hill.

Pahl, C. (2008). A hybrid method for the analysis of learner behavior in active learning environment. In L. Esnault (Ed.), Web-based education and pedagogical technologies: Solutions for learning applications (pp. 89-101). Hershey: IGI Global.

Pallant, J. (2007). SPSS survival manual: A step by step guide to data analysis using SPSS for Windows (Version 15) (3rd ed.). Crows Nest, NSW: Allen & Unwin.

Piccoli, G., Ahmad, R., & Ives, B. (2001). Web-based virtual learning environments: A research framework and a preliminary assessment of effectiveness in basic IT skills training. MIS Quarterly, 25(4), 401-426.

Sauter, V. L. (2003). Web design studio: A preliminary experiment in facilitating faculty use of the web. In A. Aggarwal (Ed.), Web-based education: Learning from experience. Hershey: IRM Press.

Squires, D., & McDougall, A. (1994). Choosing and using educational software: A teachers' guide. London: The Falmer Press.

University of Tasmania. (2010). About using MyLO for teaching & learning online. Retrieved 6th June, 2010, from http://tlo.calt.utas.edu.au/about/mylo.aspx

Wills, S., & McNaught, C. (1996). Evaluation of computer-based learning in higher education. Journal of Computing in Higher Education, 7(2), 106-128.

Wilss, L. (1997). Evaluation of computer assisted learning across four faculties at Queensland University of Technology: Students learning process and outcomes. Paper presented at ASCILITE.

Appendix 1: Initially designed questionnaire instrument

Questionnaire items

Part A: Information about the participants’ background.

- You are a: (a) Student (please go to Q3); (b) Staff

- What is your teaching position at the University of Tasmania? (Please go to Q4)

- What is your degree at the University of Tasmania?

- Your gender

- Which academic faculty/institution are you studying/teaching in?

- Length of studying/teaching at the University of Tasmania.

- Level of knowledge of Information Technology (IT).

Part B: Scale items enquiring into participants’ views on the significance of the Web and web-based learning environments.

- The Web is a good tool for teaching and learning.

- The Web can provide good facilities for exploring in learning.

- The Web provides powerful resources for gaining academic knowledge.

- The Web can provide useful ways of assessing students’ learning.

- The Web can provide useful ways of giving feedback to students.

- The Web is helpful in developing students’ problem-solving skills.

- The Web provides an opportunity for collaborative learning.

- Web-based learning should be based on sound educational principles.

- Web-based learning can replace face-to-face learning.

- Learning via the Web is more motivating than learning face-to-face.

- Learners can be easily lost in web-based learning.

- Using the Web saves a great deal of time on finding learning resources.

- The Web creates an interactive learning.

- Web-based learning enhances interpersonal relationships between lecturers and students.

- Online communication among students and lecturers is more effective than face-to-face communication.

- Web-based learning can provide good facilities for interacting with lecturers and other students.

- Web-based learning lacks interpersonal interaction.

- The Web can enhance independent learning.

- The Web can accommodate learners having different learning styles.

- The Web can accommodate learners from different cultural backgrounds.

- The Web can encourage learners to take an active part in learning.

- Web-based learning provides learners with great flexibility.

- Learners should have some basic IT knowledge before embarking on web-based learning.

- Web-based learning can be threatening to learners with poor IT skills.

- Using the Web can enhance students’ learning outcomes.

- How often is the Web used to support students’ learning in your course?

- How often is the Web used as a communication tool in your course?

- How often is the Web used to find reading materials in your course?

- How often do you participate in online discussion in your course?

- How often do you get feedback via the Web in your course?

- How often do you share learning resources via the Web with other/your students?

- How often is the Web used as an assessment tool in your course?

- How often is the Web used as a management tool in your course?

- Every course should include MyLO in teaching and learning.

- Lecturers use the MyLO system effectively in my course.

- The MyLO system is learner-friendly.

- Most functionalities of the MyLO system are useful.

- The MyLO system can replace face-to-face teaching.

- The information in my course can be easily found on the MyLO system.

- Many learning tasks are done via the MyLO system in my course.

Open-ended section: Any comments/remarks you would like to make regarding the web-based learning environment or the MyLO system?

Appendix 2: Final version of the questionnaire instrument

Research topic: The Web as a Learning Resource for Students in an Australian University Context

Part A: Information about the participants’ background. Please tick (✓) the most appropriate response.

1. You are:
- Student (please go to Q3)
- Staff

2. What is your teaching position at the University of Tasmania? (Please go to Q4)
- Academic teaching staff
- General support staff
- IT support staff
- Research-related position
- Other(s) (please specify) _______________

3. Which degree are you undertaking at the University of Tasmania?
- Undergraduate
- Postgraduate
- Graduate research
- Other(s) (please specify) _______________

4. Gender:
- Male
- Female

5. Academic Faculty:
- Education
- Arts
- Science/Computing/Engineering
- AMC (Australian Maritime College)
- Health Science/Pharmacy/Nursing
- Commerce/Business
- Law

6. Length of studying at the University of Tasmania (up to now):
- Less than one year
- Over one year to three years
- Over three years

7. Knowledge of Information Technology (IT):
- Very poor
- Poor
- Fine
- Good
- Excellent

Part B: Instrumentality of the Web in different academic areas. Please circle your most appropriate response.

Directions: To answer Part B, please indicate your most appropriate response by using the following criteria:

1 = Very Often, 2 = Often, 3 = Sometimes, 4 = Rarely, 5 = Never

| No. | Instrumentality of the Web in different academic areas | Weighted scores |
|---|---|---|
| 8 | How often is the Web used to support students’ learning in your course? | 1 2 3 4 5 |
| 9 | How often is the Web used as a communication tool in your course? | 1 2 3 4 5 |
| 10 | How often is the Web used to find reading materials in your course? | 1 2 3 4 5 |
| 11 | How often do you participate in online discussion in your course? | 1 2 3 4 5 |
| 12 | How often do you get feedback via the Web in your course? | 1 2 3 4 5 |
| 13 | How often do you share learning resources via the Web with other/your students? | 1 2 3 4 5 |
| 14 | How often is the Web used as an assessment tool in your course? | 1 2 3 4 5 |
| 15 | How often is the Web used as a management tool in your course? | 1 2 3 4 5 |

Part C: The Web as a social enhancement. Please circle your most appropriate response.

Directions: To answer Part C to Part F, please indicate your most appropriate response by using the following criteria:

1 = Strongly Agree, 2 = Agree, 3 = Not Sure/Not Applicable, 4 = Disagree, 5 = Strongly Disagree

| No. | The Web as a social enhancement | Weighted scores |
|---|---|---|
| 16 | Web-based learning can replace face-to-face learning. | 1 2 3 4 5 |
| 17 | Learning via the Web is more motivating than learning face-to-face. | 1 2 3 4 5 |
| 18 | Web-based learning enhances interpersonal relationships between lecturers and students. | 1 2 3 4 5 |
| 19 | Online communication among students and lecturers is more effective than face-to-face communication. | 1 2 3 4 5 |
| 20 | Web-based learning can provide good facilities for interacting with lecturers and other students. | 1 2 3 4 5 |
| 21 | Web-based learning lacks interpersonal interaction. | 1 2 3 4 5 |

Part D: The Web and learners. Please circle your most appropriate response.

| No. | The Web and learners | Weighted scores |
|---|---|---|
| 22 | The Web can provide useful ways of giving feedback to students. | 1 2 3 4 5 |
| 23 | The Web creates an interactive learning. | 1 2 3 4 5 |
| 24 | The Web can enhance independent learning. | 1 2 3 4 5 |
| 25 | The Web can accommodate learners having different learning styles. | 1 2 3 4 5 |
| 26 | The Web can accommodate learners from different cultural backgrounds. | 1 2 3 4 5 |
| 27 | The Web can encourage learners to take an active part in learning. | 1 2 3 4 5 |
| 28 | Web-based learning provides learners with great flexibility. | 1 2 3 4 5 |
| 29 | Using the Web can enhance students’ learning outcomes. | 1 2 3 4 5 |
| 30 | The Web is helpful in developing students’ problem-solving skills. | 1 2 3 4 5 |
| 31 | The Web provides an opportunity for collaborative learning. | 1 2 3 4 5 |

Part E: The Web as a teaching and learning resource. Please circle your most appropriate response.

| No. | The Web as a teaching and learning resource | Weighted scores |
|---|---|---|
| 32 | The Web is a good tool for teaching and learning. | 1 2 3 4 5 |
| 33 | The Web can provide good facilities for exploring in learning. | 1 2 3 4 5 |
| 34 | The Web provides powerful resources for gaining academic knowledge. | 1 2 3 4 5 |
| 35 | The Web can provide useful ways of assessing students’ learning. | 1 2 3 4 5 |

Part F: Effectiveness of the MyLO system in different academic areas. Please circle your most appropriate response.

(Please complete this section only if MyLO is used in your course.)

| No. | Effectiveness of the MyLO system in different academic areas | Weighted scores |
|---|---|---|
| 36 | Every course should include MyLO in teaching and learning. | 1 2 3 4 5 |
| 37 | Lecturers use the MyLO system effectively in my course. | 1 2 3 4 5 |
| 38 | The MyLO system is learner-friendly. | 1 2 3 4 5 |
| 39 | Most functionalities of the MyLO system are useful. | 1 2 3 4 5 |
| 40 | The information in my course can be easily found on the MyLO system. | 1 2 3 4 5 |
| 41 | Many learning tasks are done via the MyLO system in my course. | 1 2 3 4 5 |
| 42 | The MyLO system can replace face-to-face teaching. | 1 2 3 4 5 |
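The appendix lists the items but not how responses are combined. As a hedged illustration only (not the scoring procedure reported in this study), the Python sketch below assumes each of Parts B to F is treated as a separate subscale. It scores a respondent by the mean of the answered items in each part and computes Cronbach's alpha for one part with the standard formula. The names `PARTS`, `part_means` and `cronbach_alpha` are hypothetical. Treating "Not Sure/Not Applicable" (3) as a numeric midpoint is also an assumption.

```python
from statistics import mean, variance

# ASSUMPTION: each part of the final instrument (Appendix 2) is scored as one subscale.
PARTS = {
    "B": range(8, 16),   # Instrumentality of the Web (items 8-15)
    "C": range(16, 22),  # The Web as a social enhancement (items 16-21)
    "D": range(22, 32),  # The Web and learners (items 22-31)
    "E": range(32, 36),  # The Web as a teaching and learning resource (items 32-35)
    "F": range(36, 43),  # Effectiveness of the MyLO system (items 36-42)
}


def part_means(response):
    """Return the mean 1-5 score per part for one respondent.

    `response` maps item number -> circled score. Unanswered items
    (e.g. Part F when MyLO is not used) are absent or None and are skipped.
    Because 1 = Very Often / Strongly Agree, a LOWER mean indicates more
    frequent use (Part B) or stronger agreement (Parts C-F).
    """
    means = {}
    for part, items in PARTS.items():
        scores = [response[i] for i in items if response.get(i) is not None]
        means[part] = mean(scores) if scores else None
    return means


def cronbach_alpha(rows):
    """Cronbach's alpha for complete responses to one part.

    `rows` holds one list of item scores per respondent (same item order).
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    item_vars = [variance(col) for col in zip(*rows)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical respondent who skipped Part F (MyLO not used in the course).
    print(part_means({8: 1, 9: 3, 16: 2, 17: 4}))
    # Hypothetical three respondents answering two perfectly consistent items.
    print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))  # 1.0
```

Means rather than sums keep parts of different lengths comparable. Negatively worded items such as item 21 ("Web-based learning lacks interpersonal interaction.") would normally be reverse-coded before alpha is computed, and this sketch does not do that.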