Student Moderators in Asynchronous Online Discussion:

A Question of Questions


Daniel Zingaro

Doctoral Student

Ontario Institute for Studies in Education

University of Toronto

Toronto, ON M5S 1V6 CANADA

daniel.zingaro@utoronto.ca

Abstract

Much current research exalts the benefits of having students facilitate weekly discussions in asynchronous online courses. This study seeks to add to what is known about student moderation through an analysis of the types of questions students use to spur each discussion. Prior experimental work has demonstrated that the types of questions posed by instructors influence the cognitive levels of responses, but little is known about the extent to which student moderators use these various question forms. Question types and the cognitive levels of responses in an online graduate course were analyzed, and it was found that students relied on a small number of question forms. In particular, students rarely asked questions directly related to weekly course readings, and did not ask any questions that made connections to previously studied course material. Questions that constrained student choice led to lower levels of responses compared to other question types.

Keywords: asynchronous online learning, peer facilitation, peer moderation, collaborative learning, graduate students, distance education, quantitative

Introduction

An increasingly popular form of distance education course is that involving the use of asynchronous computer-mediated communication (CMC) (Johnson & Aragon, 2003). Asynchronous forums typically use thread structures to link together related notes, allowing students to follow multiple simultaneously occurring discussions (Hewitt, 2005). Many authors highlight the benefits of threaded asynchronous CMC compared to synchronous CMC and face-to-face courses, including time-independent access, opportunities for heightened levels of peer interaction, avoidance of undesirable classroom behavior, and support for multiple learning styles (Morse, 2003). Asynchronous courses can support and embody core tenets of constructivist education, including participatory learning, teacher-as-collaborator, and the production of meaningful artifacts (Cavana, 2009; Gold, 2001). Others espouse the apparent equity of such courses, as such discussions tend to admit multiple perspectives and yield more even levels of contribution (Light, Colbourn, & Light, 1997). Yet, while these benefits are compelling in themselves, they are somewhat incidental to the ultimate goal of any educational initiative: purposeful, meaningful learning.

Unfortunately, as further described in the first subsection of the Literature Review that follows, such learning in online courses is anything but automatic. Various supports and pedagogical techniques are in use by instructors to support learning, including allowing students to facilitate weekly discussions. This involves giving students opportunities to shape discussions while the course instructor assumes a supportive, participatory role (Griffith, 2009; Seo, 2007). As described in the next section, peer facilitators (also referred to as peer moderators) afford many social, affective, motivational, and cognitive benefits compared to course instructors. One of the most important roles for peer moderators, however, is to take responsibility for proposing several starter questions with which to initiate a discussion. Yet, in spite of the importance of questioning for meaningful learning, the literature has not examined the breadth of questions asked by peer moderators, and their impact on participation and deep conceptual thought. This paper begins such an examination through an analysis of student contributions to an online course.

Literature Review

Learning in Online Environments

One of the most widely used frameworks for understanding online learning is the Community of Inquiry framework (Garrison, 1999; Rourke & Kanuka, 2009). At the core of this program of research is the claim that asynchronous learning environments can foster deep and meaningful learning in the presence of adequate cognitive, social, and teaching presence. Social presence refers to the feeling that others are "actually there" in the environment, whereas teaching presence reflects the instructional, facilitative, and organizational roles of the instructor. Cognitive presence is defined as "the extent to which the participants in any particular configuration of a community of inquiry are able to construct meaning through sustained communication," and is measured by four forms of discourse: triggering events, exploration, integration, and resolution (Rourke & Kanuka, 2009, p. 21). The last two of these categories represent deep, high-level learning, and so the question is: can and does this type of learning occur in asynchronous courses?

Rourke and Kanuka (2009) synthesize several references suggesting that the vast majority of student posts fall in the lowest levels of cognition, and that only 1-18% of posts can be considered "resolution" posts. Slightly more promising results were offered by Meyer (2004), who found 52% of notes in two doctoral education courses to be at the integrate–resolve levels, and 20% of posts to be at the resolution level. Schrire (2006) noted that integration and resolution may be increased in synergistic threads compared to instructor-centered threads, the latter of which are dominated by instructor posts and questions. She found, for example, that 24% of notes in instructor-centered threads contained evidence of integration and resolution, compared to 53% in synergistic threads. Others have used Bloom's taxonomy to determine the extent to which higher level cognition occurs, often finding quite sobering results. The approach involves treating the top two levels of Bloom's (1956) taxonomy – synthesis and evaluation – as reflecting higher order thinking, and the other four levels as low- or medium-level thinking. One study found that only 15% of student posts were at the highest two Bloom's taxonomy levels (Ertmer, Sadaf, & Ertmer, 2011); others found that student posts are highly concentrated in the analyze–apply midrange (Bradley, Thom, Hayes, & Hay, 2008; Christopher, Thomas, & Tallent-Runnels, 2004).

While the focus in this paper is on the relationship between question type and the cognitive level of discussion, the research cites many other factors that affect discussion quality. Online discussions can take many forms (Hammond, 2005), and the learning activities chosen by the instructor can impact the level of critical thinking engaged in by students (Richardson & Ice, 2010). Garrison and Arbaugh (2007) suggest that the difficulty in moving through the inquiry process can be traced to aspects of teaching presence. The types of questions asked by the instructor are known to influence subsequent interaction, and some types (e.g., those that have practical applications) foster increased cycling through the entire inquiry process. Both the direct-instruction component (Garrison & Arbaugh, 2007) and discourse-facilitation component (Gorsky & Blau, 2009) of teaching presence are critical for moving students to resolution. Teaching presence is also critical for perceived learning, student satisfaction, and online community (Gorsky & Blau, 2009).

Student Moderators

Interaction and participation are two key constructs thought to enhance learning in asynchronous courses. Hammond (2005) describes this assumption as arising from teachers' alignment with social constructivist and collaborative learning principles. Engagement in online learning requires student–student and student–teacher interaction, and so teachers should strive to form supportive online learning communities (Rovai, 2007). Yet, such communities are difficult to build in the face of certain recurring challenges to online learning: an overwhelming quantity of messages to read, increased likelihood of misunderstandings, and reduced student motivation. Both Rovai as well as Collins and Berge (1996) note that the key to overcoming such challenges lies in skillful instructor facilitation. This facilitation/moderation role is multifaceted, containing such aspects as being supportive, weaving and focusing topics, and offering coaching and leadership (Tagg, 1994). Instructors should seek to develop social presence, avoid becoming the center of discussions, and emphasize student–student interactions, all while encouraging students and indicating that their posts are being read. When instructors become central, the otherwise communal forum can degenerate into a question-and-answer session (Mazzolini & Maddison, 2003).

To increase student–student interaction, researchers have begun investigating the viability of having teachers assign or students self-select moderator roles. As moderators, students can play four interdependent roles (Wang, 2008): intellectual/pedagogical, social, managerial, and technical. Novice peer moderators tend to ascribe most importance to the social (e.g., encouraging participation, inviting responses) and intellectual (e.g., summarizing) roles. Indeed, this pattern also appears to be reflected in teacher-supplied guidelines for peer moderators, of which Griffith (2009) offers a representative example. In his study, the moderator was required to (p. 38):

- provide guidance on the reading and research for the week, identifying the particular focus to be pursued;

- pose questions to guide the on-line discussion;

- initiate and stimulate discussion for the topic under consideration; and

- guide discussion by (a) logging on daily to integrate and advance the discussion, (b) ensuring that all questions were discussed and (c) encouraging each participant to arrive at some closure.

In some of the earliest work on the subject, Tagg (1994) argued that student moderation sets up a context within which students and teachers can complement one another's strengths. In his study of students in graduate psychology courses, each week one student signed up for the role of topic leader (submitting the initial contribution) and another signed up to be topic reviewer (submitting a final, synthesis posting). It was found that such student involvement promoted increased cohesion and structure in the discussions. Furthermore, interaction and participation were increased by virtue of having students publicly commit to their moderator roles. Hara, Bonk, and Angeli (2000) corroborate this finding: forums headed by students acting as "starters" yield fewer isolated and scattered discussions. Tagg and others (e.g., Rourke & Anderson, 2002) invoke an empowerment argument to explain that instructor-posted opener/starter notes tend not to be as effective as starters posted by students: students may hesitate to respond to the instructor, whom they perceive as being more knowledgeable and competent. At the same time, however, the instructor remains critical for providing recognition and requesting clarifications and elaborations. In another early study (Murphy et al., 1996), increases in participation similar to those reported by Tagg were obtained when the instructor selected the weekly moderators, rather than having students self-select.

To foster this increased participation, it is not sufficient for peer moderators to post frequently themselves (Chan, Hew, & Cheung, 2009). What is it about moderators, then, that does tend to enhance participation? Hew and Cheung (2008) sought to answer this question through a study of thread structure in blended courses. They argued that deep threads (operationalized as having a depth of at least six) implied that significant participation had occurred, and therefore proceeded to examine such threads for salient moderator tactics. Seven facilitator strategies were found: disclosing personal opinions, questioning, showing appreciation, establishing ground rules, suggesting new directions, inviting to participate, and summarizing. Of these, questioning occurred most frequently, in a combination of asking for clarification and asking for students' opinions. And, while questioning tends to promote thread growth, summarizing tends to cut threads short (Chan et al., 2009).
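The thread-depth criterion described above can be made concrete with a short sketch. It is illustrative only: it assumes that each note records the identifier of the note it replies to, and that depth counts levels of notes with the thread starter at level 1. Neither assumption is taken from Hew and Cheung (2008), and the note identifiers are hypothetical.

```python
from collections import defaultdict

# Threshold reported above for Hew and Cheung (2008); how depth is counted
# (starter note = level 1) is an assumption of this sketch.
DEEP_THREAD_MIN_DEPTH = 6


def thread_depths(parent_of):
    """Return {starter_id: depth} for every thread.

    parent_of maps each note id to the id of the note it replies to,
    or to None when the note starts a thread.
    """
    children = defaultdict(list)
    starters = []
    for note, parent in parent_of.items():
        if parent is None:
            starters.append(note)
        else:
            children[parent].append(note)

    depths = {}
    for starter in starters:
        deepest = 0
        stack = [(starter, 1)]  # iterative walk, so long threads are safe
        while stack:
            note, level = stack.pop()
            deepest = max(deepest, level)
            stack.extend((child, level + 1) for child in children[note])
        depths[starter] = deepest
    return depths


def deep_threads(parent_of, min_depth=DEEP_THREAD_MIN_DEPTH):
    """Return the starters of threads that reach at least min_depth levels."""
    depths = thread_depths(parent_of)
    return sorted(s for s, d in depths.items() if d >= min_depth)


# Hypothetical forum: thread "a" is a chain of six notes; thread "b" has
# a starter and two direct replies.
notes = {
    "a": None, "a1": "a", "a2": "a1", "a3": "a2", "a4": "a3", "a5": "a4",
    "b": None, "b1": "b", "b2": "b",
}
print(thread_depths(notes))
print(deep_threads(notes))
```

Under these assumptions only thread "a" would be retained for closer inspection of moderator tactics.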

Compared to face-to-face courses, asynchronous courses permit students to assume a more central teaching-presence role (Heckman & Annabi, 2005), especially when those students are assigned to moderate weekly discussions (Rourke & Anderson, 2002). Rourke and Anderson argue that addressing the constituents of teaching presence can be daunting for a single teacher, and that student moderation may help distribute and balance such responsibilities. These authors describe an asynchronous graduate course in which the instructor modeled moderation for the first five weeks, and then teams of four students moderated each of the remaining weekly discussions. Content analysis of the course transcripts showed that peer moderators exceeded the instructor on percentage of instructional design and facilitation posts, and that direct instruction was comparable between peer moderators and the instructor. Questionnaire data and interview analysis confirmed that the student moderators performed comparably to the instructor on all three teaching roles.

It is important here to add that the instructor's role remains critical in a course where peer moderators are used. Rourke and Anderson (2002) note that the instructor can work to fill teaching-related gaps left by more pedagogically novice student moderators. Furthermore, instructor-controlled elements of online structure can impact the effectiveness of peer-moderator activities. Gilbert and Dabbagh (2005) report a multiple case study of four increasingly structured iterations of a blended graduate course, wherein pairs of students moderated weekly discussions. In the first offering of the course, peer moderators were simply told to post at least two starter questions, facilitate the discussion, and synthesize the discussion in a follow-up face-to-face meeting. In the second offering, moderators were provided with a document outlining their specific roles and a list of criteria on which they would be evaluated by the instructor. These resources increased the percentage of posts that were written by the moderators, including posts that responded to other students, clarified material, and asked follow-up questions. This increased moderator involvement led to greater interaction among students and heightened "meaningful discourse" as measured through inference, analysis, synthesis, and evaluation. Finally, it is important for instructors to model and motivate the role of peer moderator prior to having students assume facilitation roles (Baran & Correia, 2009). Baran and Correia describe a case study in which the instructor modeled online facilitation for three weeks, stressed the importance of facilitation skills to the soon-to-be teachers in the course, provided guidelines, and encouraged the exploration of meaningful and novel facilitation styles. Student facilitators readily responded to this motivating, safe venue for leading their groups and encouraging meaningful interaction. 
As an example, one student chose to lead through a highly structured style that kept others focused on current learning goals in close connection to the week's readings and their own teaching practice. Another facilitator asked students to recount personal teaching-related goals and wishes so as to create an atmosphere of trust and personal disclosure. In each case, all students were active and enthusiastic participants, taking ownership of the discussion and others' learning. It is unlikely that such varying facilitation styles, motives, and learner engagement would follow from an instructor not attuned to the nuances of giving students power and "letting go" (Gunawardena, 1992).

Online Questioning

Decades of research in face-to-face settings has focused on two broad classes of questions: lower cognitive and higher cognitive, mapping, respectively, to the knowledge and comprehension and the application, analysis, synthesis, and evaluation levels of Bloom's taxonomy (Gall, 1970; Redfield & Rousseau, 1981). Lower cognitive questions ask students to recall or recognize information that has previously been presented, whereas high-cognitive questions demand evidence-based, information-informed reasoning processes. A meta-analysis of 14 school-based studies found that average students treated with high-cognitive questions reached the 77th percentile of achievement, compared to the expected 50th percentile (Redfield & Rousseau, 1981). A follow-up research synthesis tempers this finding, but concludes that high-cognitive questions do have a small effect overall (Samson, Strykowski, Weinstein, & Walberg, 1987). Gall (1984) explains that while it is a common belief that the cognitive level of the question has a strong influence on the cognitive level of the responses, this is not always borne out by the literature.

Relatively few studies, however, have examined the effects of questions in the online setting, and it is dangerous to assume that what is true in face-to-face settings will be true online. For example, students often have limited opportunities to ask questions in face-to-face settings, whereas Blanchette (2001) found that 77% of questions in an online graduate course were written by students. Blanchette further classified questions and their responses using three different frames of analysis: linguistic, pedagogic, and cognitive. While these frames each contributed to the depth of the present study, the main interest here is in the cognitive frame. The cognitive classification was based on a coding system developed by Gallagher and Aschner (1963), who grouped questions as routine, cognitive-memory, convergent, divergent, or evaluative. Blanchette found that evaluative questions were used most frequently by the students and teacher, that divergent questions were used very infrequently, and, overall, that most questions written by the students and teacher were at the high cognitive levels. In general, the cognitive level of responses to an instructor-posed question matched the cognitive level of that question.

Using a typology of question types from Andrews (1980), several studies have found that the type of question influences the quantity and quality of subsequent interaction. Bradley et al. (2008) classified questions into the following categories of Andrews':

- Direct link: ask students to interpret an aspect of a course reading;

- Course link: ask students to integrate specific course knowledge with the topic of a reading;

- Brainstorm: ask students to generate any and all relevant ideas for or solutions to an issue;

- Limited focal: present an issue and several alternatives and ask students to justify a position;

- Open focal: ask for student opinion on an issue without providing a list of alternatives;

- Application: present a scenario and ask students to respond using information from a reading.

Bradley et al. balanced the use of these prompt types over three sections of an online undergraduate course on child development. Limited-focal and direct-link questions generated the most words, whereas limited-focal and open-focal questions generated the most complete answers. Application and course-link questions were least effective in terms of word count and answer completion. Using a coding scheme based on Bloom's taxonomy (Gilbert & Dabbagh, 2005), course-link and brainstorm questions were found to be best for higher order thinking, though thinking overall was concentrated at a comprehension level.

A similar study was conducted by Ertmer et al. (2011), who purposively sampled 10 graduate courses for instructor prompts that covered the various Bloom's taxonomy levels and each of Andrews' question types. They found that questions at high Bloom's taxonomy levels facilitated responses at those high levels, though still with one-third of responses at the comprehension level. In terms of Andrews' types, lower divergent questions (those that ask students to generate conclusions and generalizations from data) were most promising in yielding responses at the medium and high levels of Bloom's taxonomy. In stark contrast to the results of Bradley et al. (2008), brainstorm questions led to a majority of responses in the low Bloom's taxonomy levels while yielding the highest number of posts per student and response threads.

Such findings – that the level of question influences the level of response – are mirrored by those studies using Garrison, Cleveland-Innes, and Koole's (2006) cognitive-presence indicators (McLoughlin & Mynard, 2009). Meyer (2004) found in an analysis of 17 doctoral-level online discussions that almost half (40%) of the resolution posts were concentrated in five discussions whose leading questions explicitly asked students to solve a problem.

Investigations into the questions written by student moderators are rarer than the abovementioned studies focusing on teacher moderators. One study (Christopher et al., 2004) examined the relationship between questions and responses posted by 10 students in a blended graduate gifted-education course. Each student posted one prompt in one of the 10 discussion weeks. The results indicated that most of the responses were at the levels of application and analysis, though four students consistently synthesized and evaluated. Further, in contrast to the teacher-moderated studies cited earlier, there was no pattern linking the Bloom's taxonomy level of the prompt and the average cognitive level of its responses. Yet, in a content-analytic study using a well-traveled analysis tool (Hara et al., 2000), it was found that the questioning activities of "starters" held considerable sway in shaping incident discussion. For example, when the majority of the starters' questions were "inference questions," the most frequently used cognitive skill during the discussion was inference. To be sure, results from face-to-face studies of student-generated questions suggest that the inconsistency between these two studies is no coincidence: some such studies indicate that student-generated questions are positively correlated with achievement, while others do not (Waugh, 1996).

Here, it is suggested that categorizing questions along Andrews' categories may bring some clarity to these disparate results. After all, Andrews' categories have been found to be extremely powerful predictors in several instructor-focused studies (Bradley et al., 2008; Ertmer et al., 2011). In addition, should such a categorization prove fruitful in understanding student questioning patterns, it would be able to serve as a ready-made typology for teaching and encouraging students to preferentially use certain types of questions (Choi, Land, & Turgeon, 2005).

Method

Context

The course studied here is a fully online graduate education course that took place in Fall 2011 at a large Canadian research university. The course used an online learning environment that supported both asynchronous and synchronous communication. The former was implemented through weekly discussion forums and is the interest of this paper; the latter took place in private chat spaces and is not analyzed here. The course concerned various topics related to the educational use of asynchronous and synchronous CMC, including its history, the role of the teacher, student factors, and Web 2.0 technologies. There were 11 modules, each corresponding to one week, in which students discussed instructor-assigned readings. The class was sufficiently small (13 students) that the instructor decided to have all students discuss in a single large group rather than splitting them into smaller weekly discussion groups. Each week, one or two students acted as moderators. The moderators carried out roles in accordance with those specified by the literature (Griffith, 2009): they collaborated in advance to develop guiding questions for the week, facilitated discussion throughout the week, and finally offered a summary of the week's issues. The instructor provided moderators with literature and best-practice strategies for focusing, maintaining, and extending discussions. Each student acted as moderator once during the course, and such moderation accounted for 20% of their course grade. Information relating to students' prior moderation experience was not collected. Students' contributions to the weekly discussions (not including the moderator roles just described) were worth 30% of their grade.

For the first three weeks of the course, the instructor served as moderator. She posted starter questions, encouraged students to express their viewpoints, and otherwise modeled the moderator role for the students. The remaining eight weeks were moderated by students, with the instructor participating alongside the other students in the forum.

Instruments

The present study made use of descriptive quantitative content analysis, the goals of which are to be systematic and objective. By systematic it is meant that the coding categories are determined a priori; by objective it is meant that classification is subject to inter-rater reliability checks and that raters sufficiently agree on categorizations (Rourke & Anderson, 2002). Rourke, Anderson, Garrison, and Archer (2001) note that there is a lack of replication studies in the online learning literature, which poses a threat to the establishment of widely applicable and validated instruments: while many instruments are being created, they are rarely being used outside the institution in which they are developed. Moreover, since such instruments often infer cognitive processes from the text resulting from such processes, validity in these schemes is anything but assured. Rourke and Anderson (2004) suggest an unfortunate catch-22 in the use of quantitative data analysis in online learning research: creating new coding schemes ought to be a meticulous process of theoretical and empirical validation, whereas many existing instruments have been developed in the absence of such rigor. Nevertheless, confidence in existing schemes can be enhanced through their use in new studies and contexts that attest to the robustness and generalizability of their categories and indicators. For this reason, two instruments that had been used in previous related studies were adopted: to classify questions, the Andrews-based typology of Bradley et al. (2008) was used as a starting point; to measure higher order thinking in discussions, the Bloom-based coding scheme of Bradley et al., which was adapted from Gilbert and Dabbagh (2005), was employed.

These coding schemes have been followed in the present study with the choice of the message posting as the unit of analysis. Rourke et al. (2001) describe the tradeoff between unitizing syntactically (e.g., at the message or paragraph level) and semantically (e.g., thematic unit) in terms of coder agreement and construct representativeness. For example, units such as sentences and paragraphs may not be easily separable in online learning transcripts when participants use ellipses or extra blank lines, and tend to yield an unruly number of units. In contrast, messages are objectively identifiable, are far fewer in number than smaller syntactic units, and structurally correspond to the intentions of their authors.

Reliability

There are two broad classes of coding in quantitative content analysis: manifest coding and latent coding (Lombard, Snyder-Duch, & Bracken, 2002). The former involves coding features of communication that are readily observable, such as word counts or use of pre-specified emoticons; the latter requires subjective interpretations to infer those processes responsible for manifest content. Rourke and Anderson (2002) explain that inferring quantitative aspects of online postings, as has been done here, is an example of the latent level. Such coding in particular requires that inter-rater agreement be both established for purposes of intersubjective judgments and carefully described for purposes of communicating with other researchers.

Among the many available measures of inter-rater agreement, percent agreement, Cohen's kappa, and Krippendorff's alpha are frequently used (Lombard et al., 2002). The literature holds that percent agreement, reported on its own, is inappropriate because it does not take into account agreements that occur by chance. The interpretation of Cohen's kappa depends on the number of coding categories and the distribution of the data, so it can be difficult to make comparisons across studies (Rourke et al., 2001). In contrast, Krippendorff's alpha is highly recommended (Lombard et al., 2002) as it is a conservative, chance-corrected measure that supports all levels of data and any number of coders. Krippendorff's alpha is therefore reported for the coding of responses to moderator questions.
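To illustrate how the three measures differ, the following sketch computes each for two coders. It is a minimal illustration rather than the procedure used in this study: it assumes exactly two coders, nominal categories, and no missing codes, and the ratings shown are invented for the example.

```python
from collections import Counter


def percent_agreement(codes_a, codes_b):
    """Share of units on which the two coders assigned the same code."""
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Chance-corrected agreement using each coder's own marginals.

    Undefined (division by zero) if expected agreement equals 1.
    """
    n = len(codes_a)
    observed = percent_agreement(codes_a, codes_b)
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return (observed - expected) / (1 - expected)


def krippendorff_alpha_nominal(codes_a, codes_b):
    """Krippendorff's alpha for two coders, nominal data, no missing codes.

    alpha = 1 - D_o / D_e, computed from the pooled code frequencies.
    Undefined (division by zero) if only one code is ever used.
    """
    n_values = 2 * len(codes_a)                   # every unit has two codes
    pooled = Counter(codes_a) + Counter(codes_b)  # marginals across coders
    # Each disagreeing unit contributes two off-diagonal coincidences.
    disagreeing = 2 * sum(a != b for a, b in zip(codes_a, codes_b))
    expected = n_values ** 2 - sum(v * v for v in pooled.values())
    return 1 - (n_values - 1) * disagreeing / expected


# Hypothetical codes for ten units; not data from this study.
coder_1 = ["BS", "BS", "OF", "DL", "LF", "SG", "BS", "OF", "SG", "DL"]
coder_2 = ["BS", "OF", "OF", "DL", "LF", "SG", "BS", "OF", "SG", "BS"]

print(round(percent_agreement(coder_1, coder_2), 3))
print(round(cohens_kappa(coder_1, coder_2), 3))
print(round(krippendorff_alpha_nominal(coder_1, coder_2), 3))
```

For these invented ratings, raw agreement is 0.80, while the two chance-corrected measures are lower (approximately 0.74 and 0.75), which is the reason percent agreement is not reported on its own.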

Garrison et al. (2006) describe a negotiated approach to coding that involves researchers coding transcripts and then discussing those codes to arrive at maximal consensus. In contrast to traditional multiple-coder analysis, negotiated coding involves all coders coding and agreeing on the same analysis units. While certainly more time consuming, negotiated coding may be particularly effective in initial, exploratory research (Garrison et al., 2006). A decision was made to adopt this approach for coding the moderator questions in the present study. Such questions were few compared to the number of response notes, and therefore negotiating a code on all questions was thought to be manageable in terms of time and resources. In addition, and to the extent feasible, it was considered important in coding these questions to "get them right," since the categorization of a question would determine the category into which all of its responses would be placed.

The present author and a research colleague engaged in this process of negotiated coding. They began by creating a codebook containing descriptions and examples of the codes. They then trained themselves on the coding instrument using questions from the prior (Winter 2011 semester) offering of the course. Next, they independently coded each question, and finally met to negotiate coding on all questions. The results of this coding, along with meta-information about the coding process itself, are described further in the next section.

Results

Moderator Questions

As noted above, the author and his colleague began with the typology of questions used by Bradley et al. (2008) (see the "Online Questioning" subsection in the Literature Review for their six categories). However, they very quickly noticed that many starter notes in fact contained several often-unrelated questions that could not be categorized into one of these six types. For example, in a week on web accessibility, the student moderators posed the following starter question:

[After hyperlinking to and briefly summarizing an article asserting that web accessibility is a right] "How does such an assertion hold up to your educational experiences using technology in the classroom? Is it possible that such technologies are sometimes treated more like a privilege than a right?"

The first of these two questions asks students to relate directly to a reading-based statement, and would therefore be classified as direct link (DL). The second appears to be a limited-focal (LF) question, asking students to decide whether web accessibility in practice is a privilege or a right. To account for these multi-faceted questions, the shotgun (SG) category from Ertmer et al. (2011) was added. The seven codes are presented in Table 1.

Table 1. Types of questions

| Code | Shorthand | Description |
| --- | --- | --- |
| Direct link | DL | Refers to a specific aspect of an article (e.g., a quotation) and asks students for interpretation or analysis. The direct link to the article can be found in the question itself or be a requirement in the response. |
| Course link | CL | Asks students to integrate specific course knowledge with the topic of the article. |
| Brainstorm | BS | Asks students to generate any and all relevant ideas or solutions to an issue. |
| Limited focal | LF | Presents an issue with several (e.g., two to four) alternatives and asks students to take a position and justify it. |
| Open focal | OF | Asks for student opinion on an issue without providing a list of alternatives. |
| Application | AP | Presents a scenario and asks students to respond using information from a reading. |
| Shotgun | SG | Presents multiple questions that may contain two or more content areas. |

The negotiated coding process yielded useful information about the coding scheme. For example, the coders repeatedly had difficulty agreeing on whether a question was open focal (OF) or brainstorm (BS). In fact, the only question on which they could not ultimately agree was of the following form (only the question stem has been included so as to comply with a student's consent requests): "do you see any advantages to [new technology]?" The present author classified this as a BS question, arguing that, in meaning if not in syntax, the question was asking for a "braindump" of perceived advantages. The second coder, on the other hand, argued that this was an OF question that was asking for an opinion: are there any advantages of this new technology? Ultimately, they could not come to an agreement, and arbitrarily classified the question as BS.

Table 2 indicates the number of instructor (first three weeks) and student (remaining eight weeks) questions. Most weeks contained three or four questions; the final week, however, was a strong outlier containing 13 questions (nine BS, one DL, one LF, two SG). Of the 40 total student questions, the vast majority (29) were BS or SG. BS questions often asked students to produce descriptions of personal experiences relevant to the topic at hand, or generate many examples of a phenomenon. For example, one of the questions in Week 4 (on the topic of social networking) asked, "What examples do you know of instructors using SNS [social networking sites] in their teaching?" In Week 6, student moderators asked, "After watching these two videos [external hyperlinks to two new video resources], describe the radical changes you would make to teaching in CMCs. Why would you make these changes?"

Table 2. Question types produced by the instructor and students

| Written by | BS | DL | LF | OF | SG |
| --- | --- | --- | --- | --- | --- |
| Instructor | 3 | 1 | 0 | 0 | 5 |
| Student | 17 | 3 | 3 | 5 | 12 |

SG questions often contained components spanning question types and topic areas. For example, again in Week 4, student moderators asked:

"Do you have SNSs that you are in favour of (If you don't please pick one from boyd and Elison (2008) [a course reading for the week])? What would be potential benefits for using it as teaching tool? What would be your issues/concerns using it as teaching tool?"

The first subquestion here is LF, asking students to choose among the available SNSs; the latter two questions are highly related BS questions.

Students did not ask any application (AP) or course-link (CL) questions. Further examples of student questions falling into each of the other five categories are provided in Appendix A.

The instructor asked a total of nine questions, which were also categorized using the negotiated coding process. As with the students' questions, the majority of instructor questions (eight of the nine) were BS or SG, and none were classified as AP or CL.

Cognitive Level of Responses

Given that the interest of the present study is primarily in the responses to student (rather than instructor) questions, the first two weeks of discussion were used as the reliability sample. The coders began by training themselves on the instrument using the first question from Week 1, then independently coded responses for subsequent questions. It took three rounds of coding responses to single questions to reach a Krippendorff's alpha of .7. (The ordinal alpha was used so as to incorporate the distance between the coders' ratings, in addition to exact agreement.) After this, the present author coded 80% of the notes produced in the eight weeks of student moderation, and the secondary coder coded the remaining 20%. Both coders had been students in prior offerings of the course, and had previous research experience with Bloom's taxonomy.
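To make the ordinal reliability statistic concrete, the following is a minimal sketch of Krippendorff's alpha with the ordinal distance metric for the two-coder, no-missing-data case. The software used in the study is not stated, so the function name `ordinal_alpha`, the numeric level labels, and the sample ratings are all hypothetical and serve only as an illustration.

```python
from collections import Counter
from itertools import product


def ordinal_alpha(coder_a, coder_b, categories):
    """Krippendorff's alpha (ordinal metric) for two coders, no missing data.

    `categories` lists every possible value from lowest to highest rank.
    Assumes at least two distinct values occur; otherwise the expected
    disagreement is zero and alpha is undefined.
    """
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must rate the same units")

    # Coincidence matrix: each unit contributes the ordered pairs (a, b) and (b, a).
    coincidences = Counter()
    for a, b in zip(coder_a, coder_b):
        coincidences[(a, b)] += 1
        coincidences[(b, a)] += 1

    # Marginal totals n_c and the overall number of pairable values n.
    marginals = {c: sum(coincidences[(c, k)] for k in categories) for c in categories}
    n = sum(marginals.values())
    rank = {c: i for i, c in enumerate(categories)}

    def delta_sq(c, k):
        # Ordinal distance: marginals summed from c to k, minus half of the
        # two end-point marginals, squared. Zero when c == k.
        lo, hi = sorted((rank[c], rank[k]))
        between = sum(marginals[categories[g]] for g in range(lo, hi + 1))
        return (between - (marginals[c] + marginals[k]) / 2) ** 2

    pairs = list(product(categories, repeat=2))
    observed = sum(coincidences[(c, k)] * delta_sq(c, k) for c, k in pairs) / n
    expected = sum(marginals[c] * marginals[k] * delta_sq(c, k) for c, k in pairs) / (n * (n - 1))
    return 1 - observed / expected


# Hypothetical ratings on a four-level ordinal scale (not data from the study).
levels = [1, 2, 3, 4]
first_coder = [1, 2, 3, 4]
second_coder = [1, 2, 4, 3]
print(round(ordinal_alpha(first_coder, second_coder, levels), 3))
```

Because the distance between two levels grows with the number of ratings lying between them, a disagreement between adjacent levels is penalized less than one between distant levels, which is the property the ordinal alpha was chosen for.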

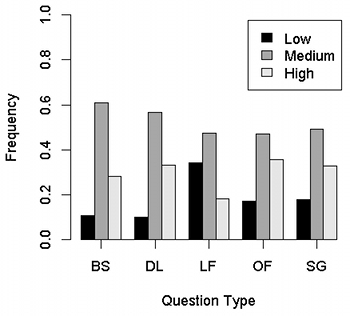

Table 3 contains the instrument used for coding the responses, based on that of Bradley et al. (2008). For each question type, the percentage of each participant's notes that fell within the low (no score, reading citation, or content clarification), medium (prior knowledge, real-world example, or abstract example), and high (making inferences) levels of Bloom's taxonomy were calculated. Then, for each question type, the average percentage of low notes was obtained as the average of the students' low percentages for that question type. This was repeated for the medium and high percentages. In this way, each student's contributions, no matter how many, were equally weighted in the calculation of the per-question-type percentages. Figure 1 presents the results of this analysis.
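The equal-weighting calculation described above can be sketched as follows. This is an illustrative sketch only: the function name `mean_level_percentages`, the tuple layout of the input, and the sample notes are assumptions, not the study's actual analysis code or data.

```python
from collections import defaultdict

# Low/medium/high bands as described in the text for the coding instrument.
LEVEL_OF_CODE = {
    "no score": "low",
    "reading citation": "low",
    "content clarification": "low",
    "prior knowledge": "medium",
    "real-world example": "medium",
    "abstract example": "medium",
    "making inferences": "high",
}
LEVELS = ("low", "medium", "high")


def mean_level_percentages(notes):
    """Average each student's low/medium/high percentages per question type.

    `notes` is an iterable of (student, question_type, code) tuples.
    Returns {question_type: {level: mean percentage across students}}.
    """
    # counts[question_type][student][level] = number of notes
    counts = defaultdict(lambda: defaultdict(lambda: dict.fromkeys(LEVELS, 0)))
    for student, qtype, code in notes:
        counts[qtype][student][LEVEL_OF_CODE[code]] += 1

    result = {}
    for qtype, per_student in counts.items():
        # Convert each student's counts to percentages first ...
        student_pcts = []
        for level_counts in per_student.values():
            total = sum(level_counts.values())
            student_pcts.append({lv: 100 * level_counts[lv] / total for lv in LEVELS})
        # ... then take the unweighted mean across students.
        result[qtype] = {
            lv: sum(p[lv] for p in student_pcts) / len(student_pcts) for lv in LEVELS
        }
    return result


# Hypothetical notes: student A wrote four notes on a BS question, student B one.
sample_notes = [
    ("A", "BS", "reading citation"),
    ("A", "BS", "reading citation"),
    ("A", "BS", "reading citation"),
    ("A", "BS", "making inferences"),
    ("B", "BS", "making inferences"),
]
bs_result = mean_level_percentages(sample_notes)["BS"]
print(bs_result)  # {'low': 37.5, 'medium': 0.0, 'high': 62.5}
```

In this hypothetical case, pooling all five notes would give 40% high-level notes, whereas averaging the two students' percentages (25% and 100%) gives 62.5%. The prolific student does not dominate the per-question-type figure.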

Table 3. Cognitive response categories

| Code | Bloom's Level | Description |
| --- | --- | --- |
| No score | N/A | Student attempted submission, but it cannot be coded as a result of being too off topic or incorrect. |
| Reading citation | 1 | Student only cited the reading using mostly direct quotations when justifying his/her answer. |
| Content clarification | 2 | Student stated personal interpretation of article content, such as paraphrasing ideas in his/her own words. |
| Prior knowledge | 3 | Student used prior knowledge or referred to new outside resources when justifying his/her answer. |
| Real-world example | 3 | Student applied a personal experience or scenario to justify his/her answer. |
| Abstract example | 3 | Student applied an analogy, metaphor, or philosophical interpretation to justify his/her answer. |
| Making inferences | 4, 5, 6 | Student's answer reflected analysis, synthesis, or evaluation, and he/she made broader connections to society or culture and created new ideas in justifying his/her answer. |

Figure 1. Frequencies of responses by question type

LF questions yielded substantially lower inference percentages than other question types. The three LF questions asked students to state whether they preferred synchronous or asynchronous communication, choose the most important factor from among five influences on their participation in a community of practice, and argue for the maturity or immaturity of a new technology. In each case, the restricted range appears to have directed discourse toward stating choices, rather than comparing alternatives, making value judgments, or relating impacts of choices to other knowledge. The responses to the first LF question illustrate this. The initial response made comparisons between the use of asynchronous and synchronous tools, arguing that the "best" medium might differ depending on whether one is a student or a teacher. This inferential response was followed by several notes expressing approval for this nuanced approach. Others brainstormed advantages and disadvantages of one of the media, or provided personal examples of why they favored one mode over the other. Of the 10 responses to the question, contributed by a total of eight participants, only two notes were coded as involving making inferences. In fact, across all three LF questions, the two students who wrote these notes were the only students to reach any inference.

SG questions yielded inference percentages as high as 44% and as low as 0%, though the latter appears to be an outlier. The 0% SG question discussed open educational resources (OER), and asked many questions: "Have you re-used/re-mixed OER? What were the advantages and challenges? Do you have an OER you found useful? How can OER help public good be met?" The 0% inference resulted from students focusing only on sharing their favorite OERs; one cannot help but wonder to what extent students would have reached the inference level had they chosen to tackle other subquestions. Inference in BS questions peaked at 40%, and, overall, BS questions gave rise to a similar mean inference level as SG (Wilcoxon signed-rank test, W = 0.58, p = .63).

Discussion

When looking at the types of questions produced by student moderators, a heavy reliance is found on two types of questions: SG and BS. Three comments are made relating to this finding.

First, it can be hypothesized that this paucity of variety in question types may be partly responsible for the preponderance of lower and medium-order thinking in the present course. Bradley et al. (2008) found that CL and DL questions yielded the highest levels of thinking, yet these questions were near absent in the course that was analyzed. And, while BS questions were lauded in the Bradley et al. study and on par with other question types in the present study, they were found ineffective in Ertmer et al. (2011). Similarly, both the findings of Ertmer et al. and those of the present study point to the fact that SG questions promote thinking at the midrange of Bloom's taxonomy. In summary, there is no solid evidence that the types of questions asked by the students in the present course led to higher order thinking. Of course, there is also no direct evidence that other types of questions would have led to higher levels of thinking, but this is hypothesized to be the case based on the literature cited here.

Second, there is reason to believe that CL questions in particular might play a role in the ability of the course to promote knowledge advancement. Scardamalia (2000) argues that students can engage in knowledge building in the presence of four technology-supported processes. One such process is the continual improvement of ideas: acknowledging that knowledge is not fixed, but that it can and should be continually refined. Community of inquiry researchers argue similarly: that integration of ideas is a desired cognitive goal, and that this requires students to synthesize what has been said in order to move ideas outside of their initial contexts (Garrison, 1999). It is suggested that if students in an online course are to do this, they must repeatedly reconsider knowledge built in earlier weeks, and that one way to promote this behavior is through CL questions that require old ideas to remain pliable as students work to connect and integrate the body of course knowledge.

Third, it is unclear to what extent students' online question preferences in the present course were reflective of instructor modeling. The instructor, by virtue of modeling the first three weeks, likely played a role in the types of questions that were subsequently asked by students, particularly for those students who were new to online learning. To the extent that students lack experience in asking questions, it is suggested that the instructor's questions can be powerful forces in shaping question-asking patterns. If the instructor uses no CL questions, as was the case in the present study, students might not consider CL as an available option, might believe that CL questions are not valued, or might not understand the possible educational potential of CL questions. While it is believed the instructor should consciously model several question types, it may not be necessary to model all such types. Instead, students could be supplied with a list of types of questions that they might consider using, and be encouraged to experiment with a variety of those types in their weekly moderation activities. In fact, there is a particular difficulty here in modeling CL questions, since by definition they require the existence of prior course material. If the instructor models during the first few weeks, it will be challenging to make meaningful links to earlier material. Perhaps the instructor would do well to moderate in Week 1, and then again in a slightly later week (e.g., Week 5), in order to demonstrate a wider variety of question types.

Limitations of the Study

There are three main areas in which limitations of the present study exist. First, it has been hypothesized throughout this paper that the type of question is a determinant of the type of interaction. However, other aspects of student moderation that might interact with question type have not been considered. In particular, holding question type constant, it seems reasonable to assume that individual moderator styles will work to influence the type and level of responses. In studies where questions are all created by the instructor who moderates each week, this is a non-issue. Unfortunately, with different students acting as moderator each week, it is difficult to decouple the question itself from the student asking the question.

Second, no distinction has been made between direct responses to a question and indirect responses (i.e., responses to responses). The number of direct responses was small, leading to consideration of the entire response set as the data to be analyzed for each question. Yet, other researchers (e.g., Bradley et al., 2008) have found differences between initial responses to questions and subsequent replies to those responses.

Third, the limited number of question types, and the small number of questions of most types other than BS and SG, preclude strong judgments about which questions are most useful. In future work, attempts will be made to help students broaden their use of question types, so that a more complete analysis of all question types of interest can be carried out.

Conclusion

Compared to other studies that vary the types of instructor-posed questions or look across courses for variability in questions, it was found in the present study that student moderators in the online course used a limited variety of question types. In particular, there was no evidence of questions being asked that pertained to content covered earlier in the course, and little evidence of questions relating directly to the current readings. Students asked mostly brainstorm questions or questions that tapped multiple areas of interest using different question forms. To benefit from other types of questions known to spur higher level discussion, future research should seek to encourage the use of question variety among student moderators. The author has hypothesized that teacher modeling and/or making available question-related resources would help students understand the value of varying question form. In general, the analysis of responses reported in this paper accords with other research that finds large portions of online discourse to be focused on the lower levels of Bloom's taxonomy. Whether students can successfully use direct-link and course-link questions and whether such questions yield higher level responses when they do so are important open questions.

References

Andrews, J. D. W. (1980). The verbal structure of teacher questions: Its impact on class discussion. POD Quarterly, 2(3-4), 129-163. Retrieved from http://digitalcommons.unl.edu/cgi/viewcontent.cgi?article=1031&context=podqtrly

Baran, E., & Correia, A.-P. (2009). Student-led facilitation strategies in online discussions. Distance Education, 30(3), 339-361. doi:10.1080/01587910903236510

Blanchette, J. (2001). Questions in the online learning environment. The Journal of Distance Education, 16(2), 37-57. Retrieved from http://www.jofde.ca/index.php/jde/article/view/175/121

Bloom, B. S. (Ed.). (1956). Taxonomy of educational objectives: The classification of educational goals. Handbook 1: Cognitive domain. New York, NY: David McKay.

Bradley, M. E., Thom, L. R., Hayes, J., & Hay, C. (2008). Ask and you will receive: How question type influences quantity and quality of online discussions. British Journal of Educational Technology, 39(5), 888-900. doi:10.1111/j.1467-8535.2007.00804.x

Cavana, M. L. P. (2009). Closing the circle: From Dewey to Web 2.0. In C. R. Payne (Ed.), Information technology and constructivism in higher education: Progressive learning frameworks (pp. 1-13). Hershey, PA: Information Science Reference. doi:10.4018/978-1-60566-654-9.ch001

Chan, J. C. C., Hew, K. F., & Cheung, W. S. (2009). Asynchronous online discussion thread development: Examining growth patterns and peer-facilitation techniques. Journal of Computer Assisted Learning, 25(5), 438-452. doi:10.1111/j.1365-2729.2009.00321.x

Choi, I., Land, S. M., & Turgeon, A. J. (2005). Scaffolding peer-questioning strategies to facilitate metacognition during online small group discussion. Instructional Science, 33(5-6), 483-511. doi:10.1007/s11251-005-1277-4

Christopher, M. M., Thomas, J. A., & Tallent-Runnels, M. K. (2004). Raising the bar: Encouraging high level thinking in online discussion forums. Roeper Review, 26(3), 166-171. doi:10.1080/02783190409554262

Collins, M., & Berge, Z. L. (1996, June). Facilitating interaction in computer mediated online courses. Paper presented at the Florida State University/Association for Educational Communications and Technology Distance Education Conference, Tallahassee, FL. Retrieved from http://repository.maestra.net/valutazione/MaterialeSarti/articoli/Facilitating%20Interaction.htm

Ertmer, P. A., Sadaf, A., & Ertmer, D. J. (2011). Student–content interactions in online courses: The role of question prompts in facilitating higher-level engagement with course content. Journal of Computing in Higher Education, 23(2-3), 157-186. doi:10.1007/s12528-011-9047-6

Gall, M. D. (1970). The use of questions in teaching. Review of Educational Research, 40(5), 707-721. doi:10.3102/00346543040005707

Gall, M. D. (1984). Synthesis of research on teachers' questioning. Educational Leadership, 42(3), 40-47. Retrieved from http://www.ascd.org/ASCD/pdf/journals/ed_lead/el_198411_gall.pdf

Gallagher, J. J., & Aschner, M. J. (1963). A preliminary report on analyses of classroom interaction. Merrill-Palmer Quarterly of Behavior and Development, 9(3), 183-194. Retrieved from JSTOR database. (23082786)

Garrison, D. R. (1999). Critical inquiry in a text-based environment: Computer conferencing in higher education. The Internet and Higher Education, 2(2-3), 87-105. doi:10.1016/S1096-7516(00)00016-6

Garrison, D. R., & Arbaugh, J. B. (2007). Researching the community of inquiry framework: Review, issues, and future directions. The Internet and Higher Education, 10(3), 157-172. doi:10.1016/j.iheduc.2007.04.001

Garrison, D. R., Cleveland-Innes, M., & Koole, M. (2006). Revisiting methodological issues in transcript analysis: Negotiated coding and reliability. The Internet and Higher Education, 9(1), 1-8. doi:10.1016/j.iheduc.2005.11.001

Gilbert, P. K., & Dabbagh, N. (2005). How to structure online discussions for meaningful discourse: A case study. British Journal of Educational Technology, 36(1), 5-18. doi:10.1111/j.1467-8535.2005.00434.x

Gold, S. (2001). A constructivist approach to online training for online teachers. Journal of Asynchronous Learning Networks, 5(1), 35-57. Retrieved from http://www.sloanconsortium.org/sites/default/files/v5n1_gold_1.pdf

Gorsky, P., & Blau, I. (2009). Online teaching effectiveness: A tale of two instructors. The International Review of Research in Open and Distance Learning, 10(3). Retrieved from http://www.irrodl.org/index.php/irrodl/article/view/712/1270

Griffith, S. A. (2009). Assessing student participation in an online graduate course. International Journal of Instructional Technology and Distance Learning, 6(4), 35-44. Retrieved from http://www.itdl.org/Journal/Apr_09/article04.htm

Gunawardena, C. N. (1992). Changing faculty roles for audiographics and online teaching. American Journal of Distance Education, 6(3), 58-71. doi:10.1080/08923649209526800

Hammond, M. (2005). A review of recent papers on online discussion in teaching and learning in higher education. Journal of Asynchronous Learning Networks, 9(3), 9-23. Retrieved from http://www.sloanconsortium.org/sites/default/files/v9n3_hammond_1.pdf

Hara, N., Bonk, C. J., & Angeli, C. (2000). Content analysis of online discussion in an applied educational psychology course. Instructional Science, 28(2), 115-152. doi:10.1023/A:1003764722829

Heckman, R., & Annabi, H. (2005). A content analytic comparison of learning processes in online and face-to-face case study discussions. Journal of Computer-Mediated Communication, 10(2). Retrieved from http://jcmc.indiana.edu/vol10/issue2/heckman.html

Hew, K. F., & Cheung, W. S. (2008). Attracting student participation in asynchronous online discussions: A case study of peer facilitation. Computers & Education, 51(3), 1111-1124. doi:10.1016/j.compedu.2007.11.002

Hewitt, J. (2005). Toward an understanding of how threads die in asynchronous computer conferences. The Journal of the Learning Sciences, 14(4), 567-589. doi:10.1207/s15327809jls1404_4

Johnson, S. D., & Aragon, S. R. (2003). An instructional strategy framework for online learning environments. New Directions for Adult and Continuing Education, 2003(100), 31-43. doi:10.1002/ace.117

Light, P., Colbourn, C., & Light, V. (1997). Computer mediated tutorial support for conventional university courses. Journal of Computer Assisted Learning, 13(4), 228-235. doi:10.1046/j.1365-2729.1997.00025.x

Lombard, M., Snyder-Duch, J., & Bracken, C. C. (2002). Content analysis in mass communication: Assessment and reporting of intercoder reliability. Human Communication Research, 28(4), 587-604. doi:10.1111/j.1468-2958.2002.tb00826.x

Mazzolini, M., & Maddison, S. (2003). Sage, guide or ghost? The effect of instructor intervention on student participation in online discussion forums. Computers & Education, 40(3), 237-253. doi:10.1016/S0360-1315(02)00129-X

McLoughlin, D., & Mynard, J. (2009). An analysis of higher order thinking in online discussions. Innovations in Education and Teaching International, 46(2), 147-160. doi:10.1080/14703290902843778

Meyer, K. A. (2004). Evaluating online discussions: Four different frames of analysis. Journal of Asynchronous Learning Networks, 8(2), 101-114. Retrieved from http://www.sloanconsortium.org/sites/default/files/v8n2_meyer_1.pdf

Morse, K. (2003). Does one size fit all? Exploring asynchronous learning in a multicultural environment. Journal of Asynchronous Learning Networks, 7(1), 37-55. Retrieved from http://www.sloanconsortium.org/sites/default/files/v7n1_morse_1.pdf

Murphy, K. L., Cifuentes, L., Yakimovicz, A. D., Segur, R., Mahoney, S. E., & Kodali, S. (1996). Students assume the mantle of moderating computer conferences: A case study. American Journal of Distance Education, 10(3), 20-35. doi:10.1080/08923649609526938

Redfield, D. L., & Rousseau, E. W. (1981). A meta-analysis of experimental research on teacher questioning behavior. Review of Educational Research, 51(2), 237-245. doi:10.3102/00346543051002237

Richardson, J. C., & Ice, P. (2010). Investigating students' level of critical thinking across instructional strategies in online discussions. The Internet and Higher Education, 13(1-2), 52-59. doi:10.1016/j.iheduc.2009.10.009

Rourke, L., & Anderson, T. (2002). Using peer teams to lead online discussions. Journal of Interactive Media In Education, 2002(1). Retrieved from http://www-jime.open.ac.uk/article/2002-1/79

Rourke, L., & Anderson, T. (2004). Validity in quantitative content analysis. Educational Technology Research & Development, 52(1), 5-18. doi:10.1007/BF02504769

Rourke, L., Anderson, T., Garrison, D. R., & Archer, W. (2001). Methodological issues in analysis of asynchronous, text-based computer conferencing transcripts. International Journal of Artificial Intelligence in Education, 12(1), 8-22. Retrieved from http://www.iaied.org/pub/951/file/951_paper.pdf

Rourke, L., & Kanuka, H. (2009). Learning in communities of inquiry: A review of the literature. The Journal of Distance Education, 23(1), 19-48. Retrieved from http://www.jofde.ca/index.php/jde/article/view/474/875

Rovai, A. P. (2007). Facilitating online discussions effectively. The Internet and Higher Education, 10(1), 77-88. doi:10.1016/j.iheduc.2006.10.001

Samson, G. E., Strykowski, B., Weinstein, T., & Walberg, H. J. (1987). The effects of teacher questioning levels on student achievement: A quantitative synthesis. The Journal of Educational Research, 80(5), 290-295. Retrieved from JSTOR database. (40539640)

Scardamalia, M. (2000). Social and technological innovations for a knowledge society. In S. Young, J. Greer, H. Maurer, & Y. S. Chee (Eds.), Proceedings of the International Conference on Computers in Education / International Conference on Computer-Assisted Instruction 2000 (Vol. 1, pp. 22-27). Taipei, Taiwan: National Tsing Hua University.

Schrire, S. (2006). Knowledge building in asynchronous discussion groups: Going beyond quantitative analysis. Computers & Education, 46(1), 49-70. doi:10.1016/j.compedu.2005.04.006

Seo, K. K. (2007). Utilizing peer moderating in online discussions: Addressing the controversy between teacher moderation and nonmoderation. American Journal of Distance Education, 21(1), 21-36. doi:10.1080/08923640701298688

Tagg, A. C. (1994). Leadership from within: Student moderation of computer conferences. American Journal of Distance Education, 8(3), 40-50. doi:10.1080/08923649409526865

Wang, Q. (2008). Student-facilitators' roles in moderating online discussions. British Journal of Educational Technology, 39(5), 859-874. doi:10.1111/j.1467-8535.2007.00781.x

Waugh, M. (1996). Group interaction and student questioning patterns in an instructional telecommunications course for teachers. Journal of Computers in Mathematics and Science Teaching, 15(4), 353-382. Retrieved from Ed/ITLib Digital Library. (15205)

Appendix A: Question-Type Examples

| Code | Example |
| --- | --- |
| DL | "Coiro (2003) asserts that we "can no longer allow the fears of adults to dictate or confine the literacy needs and desires of the young readers and writers of our future." With the wealth of resources available to students apart from the instructor, is it not possible for students to still have their needs met even if their instructor is fearful of using digital texts and tools? Is there any danger in this?" |
| BS | "This article [hyperlink to external article] describes a neomillennial learning style arising out of new technologies (p. 10). On the other hand, another study (Margaryan, Littlejohn, & Vojt, 2011) found that "Students in our sample appear to favor conventional, passive, and linear forms of learning and teaching. Indeed, their expectations of integration of digital technologies in teaching focus around the use of established tools within conventional pedagogies" (p. 439). What has been your experience in your own practice, as teacher and/or student, of students' learning styles? How do you see technology making a difference?" |
| LF | "In pages 503-506, Sherblom (2010) identifies some influences on instruction and learning in the CMC classroom. In your initial experience with the Community of Practice you selected for this course, which of the following influences most hindered/promoted your participation in the CoP and why?<br>- Interpersonal uncertainty reduction;<br>- Social presence, anxiety, and apprehension;<br>- Social interaction, experience and training;<br>- Social identity and anonymity;<br>- Identity in social relationship." |
| OF | "Brunvand and Abadeh's article also touches on the fact that several Web 2.0 resources are free for students. Unmentioned, however, is the fact that online resources (such as Google, YouTube, and Facebook) are often provided by private corporations, undoubtedly driven by profit incentives. Is there a concern of commercializing our students by embedding so much of their learning opportunities into private corporations?" |
| SG | "This article [hyperlink to external article] considers how "Second Life" or SL, an online virtual world, is a CoP [Community of Practice] environment which can have applications in education. It might be helpful to watch this virtual campus introductory video [link to external video].<br><br>In reference to Gorini et al. (2008) [external article], the article describes an example from the telehealth sector: "... compared with conventional telehealth applications such as emails, chat, and videoconferences, the interaction between real and 3-D virtual worlds may convey greater feelings of presence, facilitate the clinical communication process, positively influence group processes and cohesiveness in group-based therapies, and foster high levels of interpersonal trust between therapists and patients" (p. 2).<br><br>In the context of education, can you imagine virtual worlds building group trust and facilitating positive interactions? Could they be more effective at it than environments such as Pepper [institutional environment in which the course was run]? Is there anything in your own experience which could affirm or deny this? How might this course be different if it were conducted in Second Life?" |

Acknowledgments

The author would like to thank Murat Oztok for his help with this paper. His inhuman capacity to prioritize quantitative coding over playing Dangerous Dave in the Haunted Mansion is commendable and appreciated. Also instrumental to this work is the Ys IV video game soundtrack. Without that on repeat in the background there is no way the author could have focused sufficiently to write this paper.