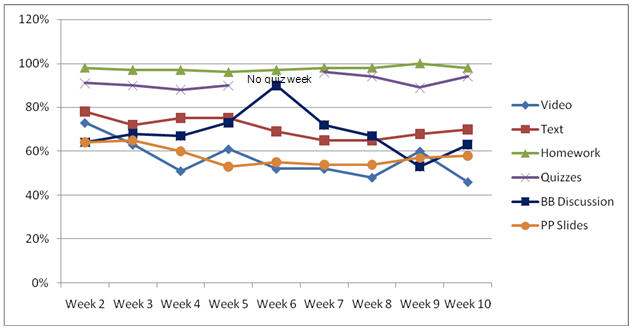

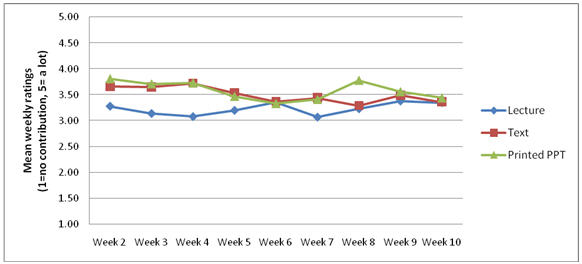

Figure 7. Contribution of Active LOs to Perceived Learning.

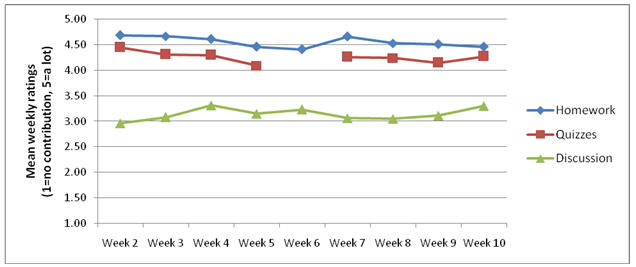

The active LOs, on the other hand, remained stable throughout the 10 weeks of the course. Homework was consistently reported to contribute the most to learning, quizzes were also reported to have an impact on learning, and, according to the students, Blackboard discussions consistently contributed less to their learning of the material. As previously noted, these active LOs also correspond to the required components of the course.

Although the students were given opportunities throughout the quarter to rate the contribution of each LO to their learning, they were asked to do so again on the final survey. The question on the final survey asked them to reflect globally on the contribution of each LO to their overall learning of the course material. The mean overall ratings are shown in Table 6.

The students reported that the homework assignments contributed the most to their overall learning of the material. This is consistent with responses from the weekly surveys, in which homework was reported as the LO contributing most to learning every week.

Table 6. Contribution of LOs to Overall Learning

| Learning Object | N | Mean (1 = not at all, 5 = a lot) | SD |
|---|---|---|---|
| Homework | 45 | 4.71 | .66 |
| Quizzes | 45 | 4.31 | 1.02 |
| PowerPoint slides | 45 | 4.02 | 1.25 |
| Lectures | 45 | 3.91 | 1.46 |
| Text | 45 | 3.33 | 1.38 |
| Blackboard discussions | 45 | 3.02 | 1.37 |

To validate the rating data, students were presented with five LOs (quizzes were not included in this ranking question) and asked to rank them according to which they believed had the greatest overall impact on them; the results of those rankings are in Table 7. In this ranking exercise, it was impossible to assign the same rank to more than one item; therefore, students who might have rated homework and lectures, for example, as both having a lot of impact on their learning were forced to choose which had the most impact, which had the second most impact, and so on. As with the quality and learning contribution ratings previously presented, homework emerged as the most important LO, ranking first.

Without this impact ranking data, one might conclude that the required LOs would always come out “on top” in terms of impact on learning; however, this is not the case. Homework came out on top for both usage and impact on learning, but the LOs ranked 2nd, 3rd, and 4th in overall impact (lectures, PowerPoint slides, and text) were not required LOs and did not rank high in terms of usage (see Figure 4). This is additional evidence of the importance and value of the homework support provided by the CC/MML site.

Table 7. Impact on Learning of LOs: Overall Rankings

| LO | Rank |
|---|---|
| Homework | 1st |
| Lectures | 2nd |
| PowerPoint slides | 3rd |
| Text | 4th |
| Blackboard discussions | 5th |

Quality.

In the final survey of the quarter, students were asked to rate the quality of each LO. Table 8 presents the mean ratings (on a 1-to-4-point scale) of each LO. Homework, the LO that students consistently used the most and felt contributed the most to their learning, was rated highest in quality; the text, which was used moderately, received the lowest rating (2.58, just below Blackboard discussions at 2.59).

Table 8. Overall Quality Ratings of LOs

| LO | N | Mean (1 = poor, 4 = excellent) | SD |
|---|---|---|---|
| Homework | 45 | 3.58 | .54 |
| Quizzes | 45 | 3.22 | .67 |
| PowerPoint slides | 45 | 3.11 | .91 |
| Lectures | 45 | 3.02 | .81 |
| Blackboard discussions | 44 | 2.59 | .84 |
| Text | 45 | 2.58 | .92 |

Satisfaction.

Two measures were used to determine overall student satisfaction with this online course: a standardized question on the general student course evaluation form distributed to all students at the end of every class, and a question on the final online survey of the quarter that asked students whether they would recommend this particular online course. Table 9 shows the frequencies of responses to both items. Of the 58 students who completed the course evaluation administered by the University, 86.2% (50 of 58) said that the course was outstanding or good. A similar percentage of the students who answered the recommendation question on the final course survey (86.7%, 39 of 45) said that they would recommend the course. Together, these two questions provide compelling evidence that students in this online course were overwhelmingly satisfied.

Table 9. Overall Rating and Likelihood to Recommend

| Evaluation item | Frequency (percent) |
|---|---|
| **Overall course rating** | |
| Outstanding | 26 (44.8%) |
| Good | 24 (41.4%) |
| Fair | 5 (8.6%) |
| Poor | 3 (5.2%) |
| **Would recommend the course** | |
| Yes | 39 (86.7%) |
| No | 6 (13.3%) |
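The percentages in Table 9 follow directly from the reported frequencies. The short Python sketch below recomputes them, including the combined “outstanding or good” share (50 of 58 students) cited in the text; the variable and function names are illustrative only and are not part of any course tool.

```python
# Frequencies from Table 9: course evaluation (N = 58) and recommend question (N = 45).
course_rating = {"Outstanding": 26, "Good": 24, "Fair": 5, "Poor": 3}
recommend = {"Yes": 39, "No": 6}

def percentages(counts):
    """Return each category's share of the total, as a percentage rounded to one decimal."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}

rating_pct = percentages(course_rating)
recommend_pct = percentages(recommend)

# "Outstanding or good" combines the two top categories before rounding.
top_two = course_rating["Outstanding"] + course_rating["Good"]
top_two_pct = round(100 * top_two / sum(course_rating.values()), 1)

print(rating_pct)            # {'Outstanding': 44.8, 'Good': 41.4, 'Fair': 8.6, 'Poor': 5.2}
print(top_two, top_two_pct)  # 50 86.2
print(recommend_pct)         # {'Yes': 86.7, 'No': 13.3}
```

Rounding the combined count (rather than adding the two rounded percentages) avoids accumulating rounding error; here both approaches happen to give 86.2%.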

Discussion

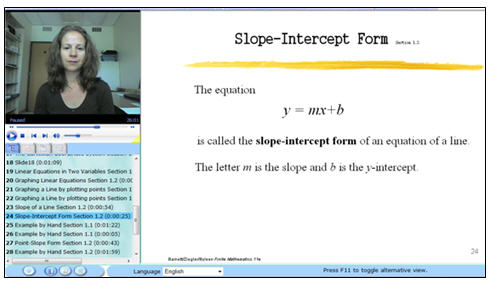

Because of the challenges of notation and intricacy

of content, mathematics is one of the most

challenging disciplines to offer online. However,

the availability of rich, publisher-supported online

homework sites such as CC/MML and software such as

Acustudio has made the creation of LOs for teaching

mathematics relatively easy. Acustudio makes

possible the creation of rich online lectures that,

in the past, would have required extensive

instructional technology design support. The

ability to show hand-worked examples using the

“whiteboard” feature was key to the successful

implementation of this software. Students reported

that the “examples by hand” created utilizing the

whiteboard were an especially useful component of

the lectures. However, far and away the most highly

utilized and consistently preferred LO was the

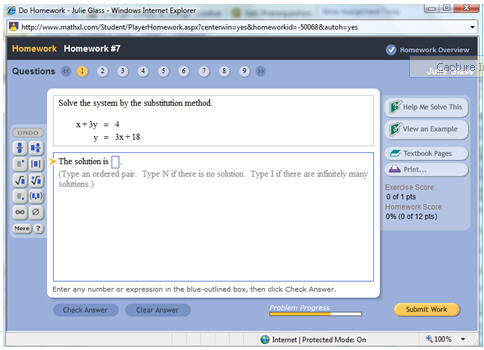

homework. As shown in the screenshot in Figure 3,

the CC/MML site offers a variety of tools for

students completing homework assignments. Students

are able to view examples, request help solving a

problem and link directly to relevant pages in the

e-text. In addition, students are given immediate

feedback on their solutions. This instant feedback

speaks also to student preferences as described by

Swan (2003). If an incorrect solution is entered,

students are able to solve a similar problem

(generated by MML) for credit. This rewards

persistence and helps students become familiar with

procedures and patterns found in solving certain

types of problems. CC/MML allows students to

interact with the material in a manner that

exemplifies the notion of active learning. There

is evidence (Bonwell & Eison, 1991; Sahin, 2007)

that active participation in content leads to

greater and longer lasting understanding of

material. Thus, in this online environment, the

fact that students engaged with, perceived the

values of, and spent a majority of their time doing

homework is a positive outcome in terms of student

learning online. It would be interesting to

compare student performance and preferences to that

of a face-to-face class with the same credit

structure that offers all the mentioned support

mechanisms (including videos) and regular (in terms

of time as well as delivery mode) class meetings. This is an area of great interest and may be pursued

for verification in future studies.
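The paper does not describe how MML generates its “similar” problems, so the following is only a sketch of the general idea under stated assumptions: an exercise template with randomized parameters, an immediate right/wrong check, and a fresh problem from the same template after an incorrect answer. The linear-equation template and the functions `make_linear_problem` and `check_answer` are hypothetical illustrations, not part of the CC/MML site.

```python
import random
from fractions import Fraction

def make_linear_problem(rng):
    """Hypothetical template: return (a, b, c) for the exercise 'solve a*x + b = c'."""
    a = rng.choice([n for n in range(-9, 10) if n != 0])  # nonzero coefficient
    x = rng.randint(-10, 10)  # choose the solution first so it is always an integer
    b = rng.randint(-20, 20)
    return a, b, a * x + b

def check_answer(problem, answer):
    """Immediate right/wrong feedback: compare the submission with the exact solution."""
    a, b, c = problem
    return Fraction(answer) == Fraction(c - b, a)

rng = random.Random(2008)  # fixed seed so the run is reproducible
problem = make_linear_problem(rng)
print("Solve {}x + ({}) = {}".format(*problem))

if not check_answer(problem, 999):  # a deliberately wrong submission
    # After an incorrect answer, offer a fresh problem from the same template.
    similar = make_linear_problem(rng)
    print("Incorrect. Try a similar problem: {}x + ({}) = {}".format(*similar))
```

Choosing the solution before the coefficients guarantees that every generated problem has a clean integer answer, which is one simple way a template can keep “similar” problems at the same difficulty.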

Another area of interest is the lack of value that students placed on the discussion portion of the class. There were, in fact, two aspects of the discussion: a required component and an optional component. The students were only asked to comment on the required component, which consisted of students responding to an instructor “prompt” (a question, problem, or statement). The 60 points for discussion participation were distributed as follows: 3 responses (chosen by the student) were graded by the instructor for quality (content and communication skills), for a total of 30 points (10 points each); the remaining 30 points were given for timely and consistent responses to the prompts throughout the quarter. The prompts were designed to encourage students to think more deeply about the material and its applications. Some sample prompts are: “We know that two points determine a unique line. What if you have 3 points? How many distinct lines pass through at least 2 of the given points? Is the answer always the same? How do the various transformations (shifts, stretches and shrinks) affect the equation of a line and the graph of that line (think about the slope and y-intercept)?” and “Find two examples in the newspaper or online of automobile loan offers that require periodic payments and compare the offers.” Thus, “discussion” is something of a misnomer for this portion of the course, which does not fit the traditional definition of a discussion. Students were encouraged to respond to each other’s postings but did not often do so.
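The first sample prompt rewards its “Is the answer always the same?” question: three distinct points determine one line if they are collinear and three lines otherwise. A minimal Python sketch, assuming distinct points with integer coordinates, counts the lines by reducing each pair’s line equation to a canonical form; the function name `count_lines` is illustrative.

```python
from itertools import combinations
from math import gcd

def count_lines(points):
    """Count distinct lines through at least two of the given points.

    Assumes distinct points with integer coordinates. Each line is stored as a
    normalized triple (A, B, C) with A*x + B*y = C, so the same line found from
    different pairs of points is counted only once.
    """
    lines = set()
    for (x1, y1), (x2, y2) in combinations(points, 2):
        A, B = y2 - y1, x1 - x2
        C = A * x1 + B * y1
        g = gcd(gcd(A, B), C)  # positive, since A and B are not both zero
        A, B, C = A // g, B // g, C // g
        # Fix the sign so (A, B, C) and (-A, -B, -C) give the same key.
        if A < 0 or (A == 0 and B < 0):
            A, B, C = -A, -B, -C
        lines.add((A, B, C))
    return len(lines)

print(count_lines([(0, 0), (1, 1), (2, 2)]))  # collinear points: 1
print(count_lines([(0, 0), (1, 0), (0, 1)]))  # triangle: 3
```

The same function also answers natural follow-up questions a student might raise, such as four points with three of them collinear (4 lines) or the corners of a square (6 lines).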

On the other hand, there were optional discussion boards where students could post questions and comments about the course. Because participation in these open boards was optional, they were not included in the weekly survey questions. However, in the final survey, “Instructor responses to your discussion postings” was among the course components rated for quality and contribution to overall learning. Note that this final survey did not distinguish between the optional and required discussion boards. In terms of quality, these instructor responses were rated second only to the homework, with a mean score of 3.25 (1 = poor, 4 = excellent; SD = .78), while the Blackboard discussions had a mean rating of 2.59 (SD = .84). In terms of contribution to overall learning, instructor responses ranked 5th of 7 with a mean of 3.64 (1 = not at all, 5 = a lot; SD = 1.46), while the Blackboard discussions ranked last overall with a mean of 3.02 (SD = 1.37).
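The ranks quoted for instructor responses come from placing their means alongside the LO means in Tables 6 and 8. The Python sketch below makes that merge explicit using only the values reported in this paper; the dictionary layout and the helper `rank_of` are illustrative choices, not part of the original analysis.

```python
# Means from Tables 6 and 8, plus the instructor-response means reported in the text.
contribution = {  # 1 = not at all, 5 = a lot
    "Homework": 4.71, "Quizzes": 4.31, "PowerPoint slides": 4.02, "Lectures": 3.91,
    "Instructor responses": 3.64, "Text": 3.33, "Blackboard discussions": 3.02,
}
quality = {  # 1 = poor, 4 = excellent
    "Homework": 3.58, "Instructor responses": 3.25, "Quizzes": 3.22,
    "PowerPoint slides": 3.11, "Lectures": 3.02, "Blackboard discussions": 2.59,
    "Text": 2.58,
}

def rank_of(item, means):
    """1-based position of `item` when items are sorted by mean, highest first."""
    ordered = sorted(means, key=means.get, reverse=True)
    return ordered.index(item) + 1

print(rank_of("Instructor responses", contribution), "of", len(contribution))  # 5 of 7
print(rank_of("Instructor responses", quality), "of", len(quality))            # 2 of 7
```

Ranking by mean alone ignores the fairly large standard deviations (up to 1.46 on the 5-point scale), so adjacent positions such as quizzes (3.22) and instructor responses (3.25) on quality should not be read as clearly different.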

Two features of a face-to-face class were lost in this online course: useful office-hour interactions between student and faculty, and partial credit on students’ solutions. While office hours were offered both online and face-to-face, students rarely took advantage of them. The online office hours were offered in chat format, which limited the ability to use the notation needed for useful interaction, and students generally could not travel to campus to attend face-to-face office hours. Chat is certainly a difficult mode of communication for mathematics. However, students did interact with the instructor and each other on the discussion boards. A potential solution to this problem is offered by a very promising communication software package called enVision. This software allows anonymous online communication between students and faculty with rich notational support. One study (Hooper et al., 2006) reports that enVision sessions are more effective than traditional office hours. The software (freeware) allows any number of students to “attend” an online office hour and participate, or lurk, as they choose. Several strengths of the software are described as “Anonymity,” “Engagement and multi-way dialog,” and “Passive participation.” Incorporating enVision into future offerings of the course is being considered.

Students’ open-ended comments about their online learning experience revealed a great deal of disappointment over the lack of partial credit in the online homework. This will be addressed in future offerings of the course by requiring the final exam to be a traditional “paper and pencil” exam, graded by the instructor. There is also impressive work being done on creating and incorporating partial credit in computer-aided homework grading (Ashton et al., 2006; Livne et al., 2007). If these efforts come to fruition, they would greatly enhance the online homework services currently offered.

Conclusions

The preference, satisfaction, and perceived impact on learning reported by students in this online class are encouraging for students and instructors of online mathematics courses. Students clearly felt that the course was demanding and time-consuming. A large majority of the students rated the class as good or outstanding (50 out of 58), and an even greater majority found the class to be intellectually challenging (54 out of 58). This indicates that the course, while requiring a lot of work, was perceived as successful by most students. The strong preference for homework, the active learning LO, coupled with the perceived impact of the lectures on learning, leads to the overall impression that the online environment offered these students an extensive, flexible, and rich learning experience. While there are some areas of concern, the rate at which tools for online instruction are being developed leads the authors to believe that many will be addressed in due time. The findings in this paper point to a best-practices model for online mathematics that relies heavily on practice problems with fast feedback and integrates tools for content delivery such as media-enhanced lectures. This combination of LOs will provide students with the tools that they need to succeed online.

Acknowledgements

Both authors are grateful for funding from the Faculty Support Grants Program at CSUEB. The first author also thanks the members of the FLC for Best Practices in Online Teaching and Learning and the members of the FLC for the Scholarship of Teaching and Learning. The authors appreciate the reviewers’ comments and have incorporated their suggestions, which we feel have made the paper stronger.

References

Ashton, H., Beevers, C., Korabinski, A., & Youngson, M. (2006). Incorporating partial credit in computer-aided assessment of Mathematics in secondary education. British Journal of Educational Technology, 37(1), 93-119.

Bennett, K., & McGee, P. (2005). Transformative power of the learning object debate. Open Learning, 20(1), 15-30.

Bonwell, C., & Eison, J. (1991). Active learning: Creating excitement in the classroom. ASHE-ERIC Higher Education Report No. 1, George Washington University, Washington, D.C.

Cuthrell, K., & Lyon, A. (2007). Instructional strategies: What do online students prefer? MERLOT Journal of Online Learning and Teaching, 3(4), 357-362 (https://jolt.merlot.org/documents/cuthrell.pdf)

Dennen, V. (2008). Pedagogical lurking: Student engagement in non-posting discussion behavior. Computers in Human Behavior, 24(4), 1624-1633.

Ellis, T., & Cohen, M. (2005). Building the better asynchronous computer mediated communication system for use in distributed education. Proceedings of the 35th Frontiers in Education Conference (pp. T3E15-T3E20). Piscataway, NJ: IEEE.

Friesen, N. (2003). Three objections to learning objects. Available online at: phenom.edu.ualberta.ca/~nfriesen (accessed May 2008).

Hodgins, W. (2000). Into the future. Available at: http://www.learnactivity.com/download/MP7.PDF, p. 27.

Hooper, J., Pollanen, M., & Teismann, H. (2006). Effective Online Office Hours in the Mathematical Sciences. MERLOT Journal of Online Learning and Teaching, 2(3), 187-194 (https://jolt.merlot.org/vol2no3/hooper.pdf)

Liber, O. (2005). Learning objects: Conditions for viability. Journal of Computer Assisted Learning, 21, 366-373.

Livne, N., Livne, O., & Wight, C. (2007). Can Automated Scoring Surpass Hand Grading of Students’ Constructed Responses and Error Patterns in Mathematics? MERLOT Journal of Online Learning and Teaching, 3(3), 295-306 (https://jolt.merlot.org/vol3no3/livne.pdf)

Parish, P. (2004). The trouble with learning objects. Educational Technology Research and Development, 52(1), 49-61.

Pelz, B. (2004). Three principles of effective online pedagogy. JALN, 8(3). Retrieved May 2008 from http://www.sloan-c.org/publications/JALN/v8n3/v8n3_pelz.asp

Prince, M. (2004). Does active learning work? A review of the research. Journal of Engineering Education, 93(3), 223-231.

Saba, F. (2005). Critical issues in distance education: A report from the United States. Distance Education, 26(2), 255-272.

Sahin, I. (2007). Predicting student satisfaction in distance education and learning environments. (ERIC Document Reproduction Service No. ED 496541).

Swan, K. (2003). Learning effectiveness: What the research tells us. In J. Bourne & J. Moore (Eds.), Elements of Quality Online Education, Practice and Directions (pp. 13-45). Needham, MA: Sloan Center for Online Education.

Wiley, D. (2002). Connecting learning objects to instructional design theory: A definition, a metaphor, and a taxonomy. In D. Wiley (Ed.), The Instructional Use of Learning Objects. The Agency for Instructional Technology, Bloomington, IN, 4.
