MERLOT Journal of Online Learning and Teaching

Vol. 2, No. 3, September 2006

High-Tech Tools for Teaching Physics: the

Physics Education Technology Project

Noah

Finkelstein, Wendy Adams, Christopher Keller, Katherine

Perkins, Carl Wieman

and the Physics Education Technology Project Team.

Department of Physics

University of Colorado at Boulder

Boulder, Colorado, USA

noah.finkelstein@colorado.edu

Abstract

This paper introduces a new suite of computer simulations from the

Physics Education Technology (PhET) project, identifies

features of these educational tools, and demonstrates their

utility. We compare the use of PhET simulations to the use of

more traditional educational resources in lecture, laboratory,

recitation and informal settings of introductory college

physics. In each case we demonstrate that simulations are as

productive, or more productive, for developing student

conceptual understanding as real equipment, reading resources,

or chalk-talk lectures. We further identify six key

characteristic features of these simulations that begin to

delineate why these are productive tools. The simulations:

support an interactive approach, employ dynamic feedback,

follow a constructivist approach, provide a creative

workplace, make explicit otherwise inaccessible models or

phenomena, and constrain students productively.

Introduction

While computer simulations have become relatively widespread

in college education (CERI, 2005; MERLOT, n.d.), the

evaluation and framing of their utility have been less

prevalent. This paper introduces the Physics Education

Technology (PhET) project (PhET, 2006), identifies some of the

key features of these educational tools, demonstrates their

utility, and examines why these are useful. Because it is

difficult (and, in this case, unproductive) to separate a tool

from its particular use, we examine the use of the interactive

PhET simulations in a variety of educational environments

typical of introductory college physics. At present,

comprehensive and well-controlled studies of the utility of

computer simulations in real educational environments remain

relatively sparse, particularly at the college level. This

paper summarizes the use of the PhET tools in lecture,

laboratory, recitation, and informal environments for a broad

range of students (from physics majors to non-science majors

with little or no background in science). We document some of

the features of the simulations (e.g., the critical role of

direct and dynamic feedback for students) and how these design

features are used (e.g., the particular tasks assigned to

students). We find that, for the wide variety of environments and

uses surveyed, PhET simulations are as productive as, or more

productive than, traditional educational tools, whether these

are physical equipment or textbooks.

Research and Design of PhET Simulations

The Physics Education Technology project at the University of

Colorado has developed a suite of physics simulations that

take advantage of the opportunities of computer technology

while addressing some of the limitations of these tools. The

suite includes over 50 research-based simulations that span

the curriculum of introductory physics as well as sample

topics from advanced physics and chemistry (PhET, 2006;

Perkins et al., 2006; Wieman & Perkins, 2006). All

simulations are free, and can be run from the internet or

downloaded for off-line use. The simulations are designed to

be highly interactive, engaging, and open learning

environments that provide animated feedback to the user. The

simulations are physically accurate, and provide highly

visual, dynamic representations of physics principles.

Simultaneously, the simulations seek to build explicit bridges

between students’ everyday understanding of the world and

the underlying physical principles, often by making the

physical models (such as current flow or electric field lines)

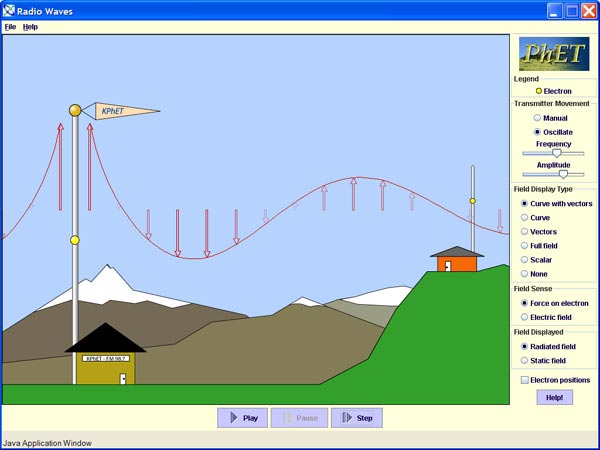

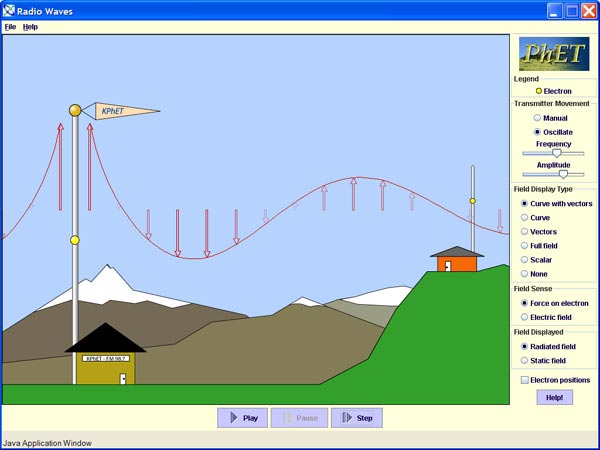

explicit. For instance, a student learning about

electromagnetic radiation starts with a radio station

transmitter and an antenna at a nearby house, shown in Figure

1. Students can force an electron to oscillate up and down at

the transmission station, and observe the propagation of the

electric field and the resulting motion of an electron at the

receiving antenna. A variety of virtual observation and

measurement tools are provided to encourage students to

explore properties of this micro-world (diSessa, 2000) and

allow quantitative analysis.

Figure 1. Screenshot of PhET simulation, Radio

Waves & Electromagnetic Fields.

We employ a research-based

approach in our design – incorporating findings from prior

research on student understanding (Bransford, Brown, &

Cocking, 2002; Redish, 2003),

simulation design (Clark & Mayer, 2003), and our own

testing – to create simulations that support student

engagement with and understanding of physics concepts. A

typical development team is composed of a programmer, a

content expert, and an education specialist. The iterative

design cycle begins by delineating the learning goals

associated with the simulation and constructing a storyboard

around these goals. The underpinning design builds on the idea

that students will discover the principles, concepts and

relations associated with the simulation through exploration

and play. For this

approach to be effective, careful choices must be made as to

which variables and behaviors are apparent to and controllable

by the user, and which are not. After a preliminary version of

the simulation is created, it is tested and presented to the

larger PhET team to discuss. Particular concerns, bugs, and

design features are addressed, as well as elements that need

to be identified by users (e.g. will students notice this

feature or that feature? will users realize the relations

among various components of the simulation?). After complete

coding, each simulation is then tested with multiple student

interviews, and summary reports are returned to the design team.

After the utility of the simulation to support the particular

learning goals is established (as assessed by student

interviews), the simulations are user-tested through in-class

and out-of-class activities. Based on findings from the

interviews, user testing, and class implementation, the

simulation is refined and re-evaluated as necessary. Knowledge

gained from these evaluations is incorporated into the

guidelines for general design and informs the development of

new simulations (Adams et al., n.d.). Ultimately, these

simulations are posted for free use on the internet. More on

the PhET project and the research methods used to develop the

simulations is available online (PhET, 2006).

From

the research literature and our evaluation of the PhET

simulations, we have identified a variety of characteristics

that support student learning. We make no claims that these

are necessary or sufficient for all learning environments –

student learning can occur in a myriad of ways and may depend

upon more than these characteristic features. However, these

features help us to understand why these simulations do (and

do not) support student learning in particular environments.

Our simulations incorporate:

An Engaging and Interactive Approach. The simulations encourage student engagement.

As is now thoroughly documented in the physics

education research community and elsewhere (Bransford, Brown,

& Cocking, 2002; Hake, 1998; Mazur, 1997; Redish, 2003),

environments that interactively engage students are supportive

of student learning. At start-up for instance, the simulations

literally invite users to engage with the components of the

simulated environment.

Dynamic feedback.

These simulations emphasize causal relations by linking ideas

temporally and graphically. Direct feedback to student

interaction with a simulation control provides a temporal and

visual link between related concepts. Such an approach, when

focused appropriately, facilitates student understanding of

the concepts and relations among them (Clark & Mayer,

2003). For instance, when a student moves an electron up and

down on an antenna, an oscillating electric field propagates

from the antenna, suggesting the causal relation between electron

acceleration and radio wave generation.

A constructivist approach.

Students learn by building on their prior understanding

through a series of constrained and supportive explorations

(von Glasersfeld, 1983). Furthermore, often students build

(virtual) objects in the simulation, which further serves to

motivate, ground, and support student learning (Papert &

Harel, 1991).

A

workspace for play and tinkering. Many

of the simulations create a self-consistent world, allowing

students to learn about key features of a system by engaging

them in systematic play, "messing about," and

open-ended investigation (diSessa, 2000).

Visual

models / access to conceptual physical models. Many

of the microscopic and temporally rich models of physics are

made explicit to encourage students to observe otherwise

invisible features of a system (Finkelstein, et al., 2005;

Perkins et al., 2006). This approach includes visual

representations of electrons, photons, air molecules, electric

fields etc., as well as the ability to slow down, reverse and

play back time.

Productive constraints

for students. By

simplifying the systems in which students engage, they are

encouraged to focus on physically relevant features rather

than ancillary or accidental conditions (Finkelstein, et al.,

2005). Carefully segmented features introduce relatively few

concepts at a time (Clark & Mayer, 2003) and allow for

students to build up understanding by learning key features

(e.g., current flow) before advanced features (e.g., internal

resistance of a battery) are added.

While

not an exhaustive study of the characteristics that promote

student learning, these key features serve to frame the

studies of student learning using the PhET simulations in

environments typical of college and other educational

institutions: lecture, lab, recitation, and informal settings.

Research Studies

Lecture

Simulations can be used in a variety of ways in the lecture

environment. Most often they are used to take the place of, or

augment, chalk-talk or demonstration activities. As such, they

fit within a number of pedagogical reforms found in physics

lectures, such as Interactive Lecture Demonstrations (Sokoloff & Thornton, 1998)

or Peer Instruction

(Mazur, 1997).

In a comparative study of the utility of demonstration with

real equipment versus simulation, we studied the effects in a

large-scale (200 person) introductory physics course for

non-science majors during lectures where students were taught

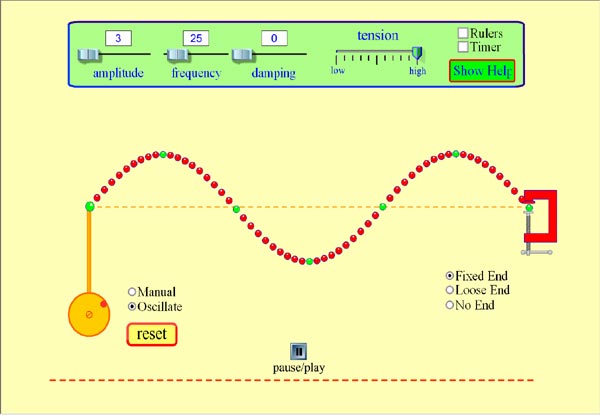

about standing waves. One year, students were taught with a

classic lecture demonstration, using Tygon tubing. The

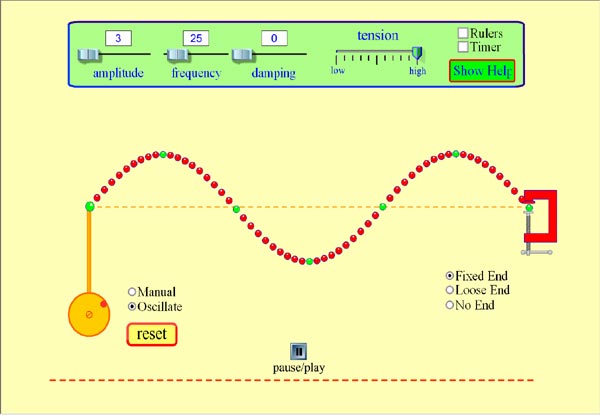

subsequent year a similar population of students was taught

the material using the Wave

on a String simulation (Figure 2) to demonstrate standing

waves.

Figure 2. Screenshot of Wave

On a String simulation.
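The physics that both the tubing demonstration and the simulation aim to show can be sketched numerically. The following is a minimal illustration, not code from the Wave on a String simulation: the function name `standing_wave` and the default amplitude, wavelength, and period are assumptions chosen for the example. It adds two equal waves traveling in opposite directions and samples the result at a node and at an antinode.

```python
import math


def standing_wave(x, t, amplitude=1.0, wavelength=2.0, period=1.0):
    """Displacement from two equal waves traveling in opposite directions.

    All parameter values are illustrative assumptions, not simulation settings.
    """
    k = 2 * math.pi / wavelength      # wavenumber
    omega = 2 * math.pi / period      # angular frequency
    rightward = amplitude * math.sin(k * x - omega * t)
    leftward = amplitude * math.sin(k * x + omega * t)
    return rightward + leftward


# Sample ten instants across one period at two fixed positions.
node = [standing_wave(1.0, t / 10) for t in range(10)]      # x = wavelength / 2
antinode = [standing_wave(0.5, t / 10) for t in range(10)]  # x = wavelength / 4

print("node:    ", [round(y, 3) for y in node])
print("antinode:", [round(y, 3) for y in antinode])
```

At the node the two waves cancel at every instant, while at the antinode the displacement swings between twice the single-wave amplitude and its negative, so each point of the string moves only up and down.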

Notably, just as with the lecture demonstration, [...] may be observed to

oscillate up and down. That is, by manipulating the simulation

parameters appropriately, the instructors constrain [...] vertically displaced in a standing wave), students from the

real equipment demonstration lecture answered 28% correctly

(N=163); whereas, students observing the course using the

simulation answered 71% correctly (N=173; statistically

different at p<0.001, via two-tailed

z-test) (Perkins et al., 2006). On a similar follow-up

question, students learning from equipment answered 23%

correctly, compared to 84% correctly when learning from the

simulation (N1=162, N2=165,

p<0.001).
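The significance level quoted for the first question can be checked with a pooled two-proportion z-test. This is a minimal sketch under stated assumptions: the rounded percentages (28% and 71%) are treated as exact proportions, the two lecture populations are treated as independent samples, and the function name `two_proportion_z_test` is hypothetical. The paper does not say whether its z-test pooled the variance, so this is one common form of the test rather than a reproduction of the authors' calculation.

```python
import math


def two_proportion_z_test(p1, n1, p2, n2):
    """Pooled two-tailed z-test for the difference between two proportions."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / std_err
    # Two-tailed tail area of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Real-equipment lecture: 28% correct (N=163); simulation lecture: 71% correct (N=173).
z, p_value = two_proportion_z_test(0.28, 163, 0.71, 173)
print(f"z = {z:.2f}, two-tailed p = {p_value:.1e}")
```

Under these assumptions the difference is close to eight standard errors, which is consistent with the reported p<0.001.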

In another investigation substituting simulations for real

demonstration equipment, we studied a several-hundred student

calculus-based second semester introductory course on

electricity and magnetism. The class was

composed of engineering and physics majors (typically

freshmen) who regularly interacted in class through Peer

Instruction (Mazur, 1997)

and personal response systems. The large class

necessitated two lecture sections (of roughly 175 students

each) taught by the same instructor. To study the impact of

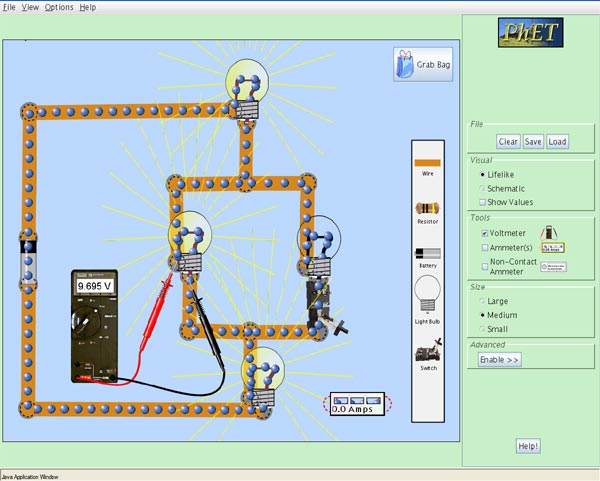

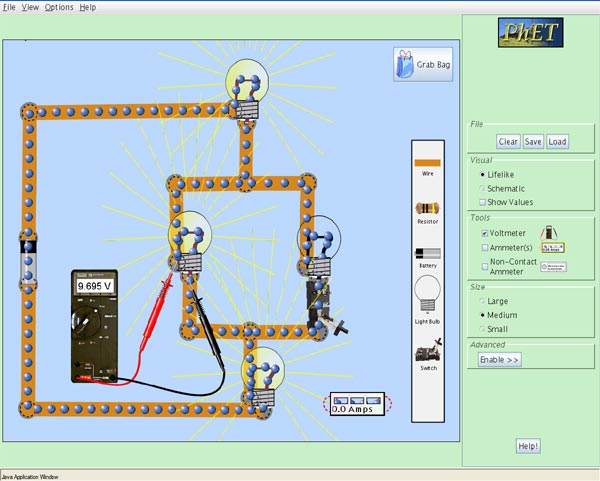

computer simulations, the Circuit

Construction Kit was substituted for chalk-talk or real

demonstration equipment in one of the two lectures.

The

Circuit Construction Kit (CCK) models the behavior of simple

electric circuits and includes an open workspace where

students can place resistors, light bulbs, wires and

batteries. Each element has operating parameters (such as

resistance or voltage) that may be varied by the user and

measured by a simulated voltmeter and ammeter. The underlying

algorithm uses Kirchhoff’s laws to calculate current and

voltage through the circuit. The batteries and wires are

designed to operate either as ideal components or as real

components, by including appropriate, finite resistance. The

light bulbs, however, are modeled as Ohmic, in order to

emphasize the basic models of circuits that are introduced in

introductory physics courses. Moving electrons are explicitly

shown to visualize current flow and current conservation. A

fair amount of attention has been placed on the user interface

to ensure that users may

easily interact with the simulation and to encourage users to

make observations that have been found to be important and

difficult for students (McDermott & Shaffer, 1992) as they

develop a robust conceptual understanding of electric

circuits. A screen shot appears in Figure 3.

Figure 3. Screenshot of Circuit

Construction Kit simulation.
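To illustrate what a Kirchhoff's-law calculation for such a circuit involves, the sketch below solves the two loop equations for one Ohmic bulb in series with two Ohmic bulbs in parallel. It is not the CCK algorithm: the function `solve_circuit`, its parameters, and the component values (a 9 V battery and 10-ohm bulbs) are assumptions made for the example.

```python
def solve_circuit(voltage, r_internal, r_a, r_b, r_c, switch_closed=True):
    """Return currents (i_a, i_b, i_c) in amperes.

    Bulb A is in series with the battery; bulbs B and C are in parallel,
    with a switch in the branch containing C. All bulbs are Ohmic.
    """
    if not switch_closed:
        # Single loop: battery, bulb A, bulb B.
        current = voltage / (r_internal + r_a + r_b)
        return current, current, 0.0
    # Kirchhoff's voltage law for two loops with loop currents i1 and i2:
    #   (r_internal + r_a + r_b) * i1 - r_b * i2 = voltage
    #   -r_b * i1 + (r_b + r_c) * i2             = 0
    a11, a12 = r_internal + r_a + r_b, -r_b
    a21, a22 = -r_b, r_b + r_c
    det = a11 * a22 - a12 * a21
    i1 = voltage * a22 / det        # Cramer's rule
    i2 = -a21 * voltage / det
    return i1, i1 - i2, i2


closed = solve_circuit(9.0, 0.0, 10.0, 10.0, 10.0, switch_closed=True)
opened = solve_circuit(9.0, 0.0, 10.0, 10.0, 10.0, switch_closed=False)
print("switch closed:", closed)
print("switch open:  ", opened)
```

With these illustrative values the current through bulb A drops when the switch opens, because removing a parallel branch raises the total resistance. Passing a nonzero `r_internal` models a real battery in place of an ideal one.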

In this study, students in both lecture sections first

participated in a control activity – a real demonstration not

related to circuits followed by Peer

Instruction. Subsequently the two parallel lectures were

divided by treatment – students in one lecture observed a

demonstration with chalk diagrams accompanying a real circuit

demonstration (traditional); students in the other lecture

observed the same circuits built using the CCK

simulation (experimental). Students in both lectures under

both conditions (traditional and experimental) participated in

the complete form of Peer Instruction.

In this method, the demonstration is given and a

question is presented. First the students answer the question

individually using personal response systems before any

class-wide discussion or instruction; then, students are

instructed to discuss the question with their neighbors and

answer a second time. These are referred to as “silent”

(answering individually) and “discussion”

(answering individually after discussing with peers)

formats.

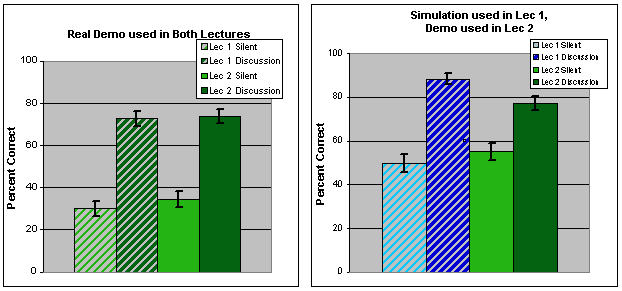

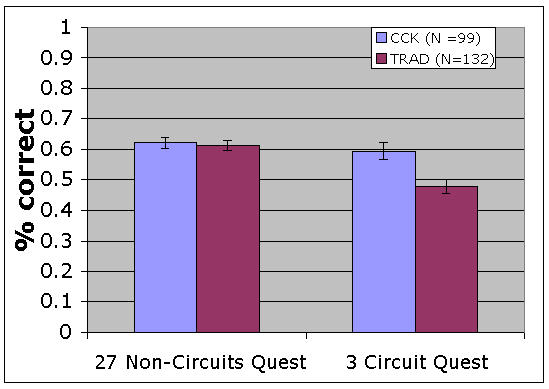

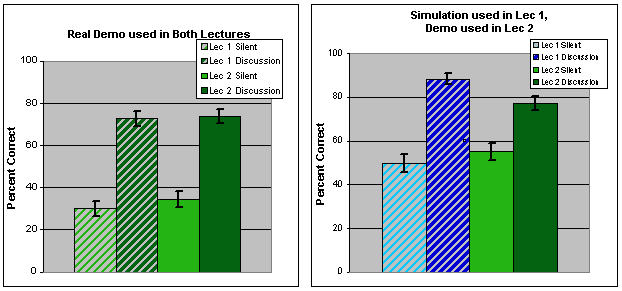

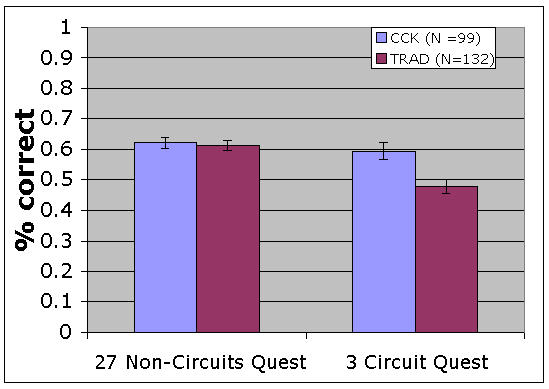

In the control condition, Figure 4a, there are no statistical

differences between the two lecture environments, as measured

by their pre- or post-scores,

or gain (p > 0.5). In the condition where different

treatments were used in the two lectures (Figure 4b) –

Lecture 1 using CCK and Lecture 2 using real equipment – a

difference was observed. While the CCK group (Lecture 1) is

somewhat lower in “silent” score, their final scores after

discussion are significantly higher than their counterparts

(as are their gains from pre- to post- scores, p<0.005, by two-tailed

z-test). Both sets of data (Figure 4a and 4b) corroborate

claims that discussion can dramatically facilitate student

learning (Mazur, 1997). However the data also illustrate that

what the students have to discuss is significant, with the

simulation leading to more fruitful discussions.

Figure 4. Student performance in control (left 4a) and

treatment (right 4b) conditions

to study the

effectiveness of computer simulation in Peer

Instruction activities. Standard error of the mean is indicated.

While we present data only from a small section of lecture

courses and environments, we note that the PhET simulations

can be productively used for other classroom interventions.

For example, PhET simulations may be used in addition to or

even in lieu of making microcomputer-based lab measurements of

position, velocity and acceleration of moving objects for the

1-D Interactive Lecture Demonstration (ILD) (Sokoloff &

Thornton, 1998). In PhET’s Moving

Man, we simulate the movement of a character, tracking

position, velocity and acceleration. Not only does the

simulation provide the same plotting of real time data that

occurs with the ILDs, but

Moving Man also allows for replaying data (synchronizing

movement and data display), as well as assigning pre-set plots

of position, velocity and acceleration and subsequently

observing the behavior (inverting the order of ILD data

collection). The utility of PhET simulations has been applied

beyond the introductory sequence in advanced courses, such as

junior-level undergraduate physical chemistry, where students

have used the Gas

Properties simulation to examine the dynamics of molecular

interaction to develop an understanding of the mechanisms and

meaning of the Boltzmann distribution.
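The inverted workflow described for Moving Man, in which an acceleration is specified first and the resulting motion is observed afterwards, amounts to integrating acceleration into velocity and position. The sketch below is an illustration of that idea only and is not taken from the simulation; `simulate_motion` and its parameters are hypothetical names.

```python
def simulate_motion(acceleration, x0=0.0, v0=0.0, dt=0.01, steps=100):
    """Integrate a(t) into position and velocity samples.

    `acceleration` is a function of time (s) returning m/s^2.
    Returns a list of (t, x, v, a) tuples, one per sample.
    """
    t, x, v = 0.0, x0, v0
    samples = [(t, x, v, acceleration(t))]
    for _ in range(steps):
        a = acceleration(t)
        x += v * dt + 0.5 * a * dt * dt  # exact when a is constant over the step
        v += a * dt
        t += dt
        samples.append((t, x, v, acceleration(t)))
    return samples


# Pre-set plot: constant acceleration of 2 m/s^2 from rest for one second.
track = simulate_motion(lambda t: 2.0, steps=100)
t_end, x_end, v_end, _ = track[-1]
print(f"t = {t_end:.2f} s, x = {x_end:.3f} m, v = {v_end:.3f} m/s")
```

The same list of samples can be replayed or plotted against time, which mirrors the synchronized replay of movement and data described above.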

In each of these instances, we observe the improved results

of students who are encouraged to construct ideas by providing

access to otherwise temporally obscured phenomena (e.g., Wave on a String), or invisible models (such as electron flow in CCK

or molecular interaction in Gas

Properties). These simulations effectively constrain

students and focus their attention on desired concepts,

relations, or processes. These findings come from original

interview testing and modification of the simulation to

achieve these results. We hypothesize that it is the

simulations' explicit focus of attention, productive

constraints, dynamic feedback, and explicit visualization of

the otherwise inaccessible phenomena that promote productive

student discussion, and the development of student ideas.

Laboratory

Can simulations be used productively in a laboratory where

the environment is decidedly hands-on and designed to give

students the opportunity to learn physics through direct

interaction with experimental practice and equipment?

In the laboratory segment of a traditional large-scale

introductory algebra-based physics course, we examined this

question. Most of the details of this study and some of the

data have been reported previously (Finkelstein et al., 2005),

so here we briefly summarize. In one of the two-hour long

laboratories, DC circuits, the class was divided into two

groups – those that only used a simulation (CCK)

and those that only used real equipment (bulbs, wires,

resistors, etc.). The lab activities and questions were

matched for the two groups.

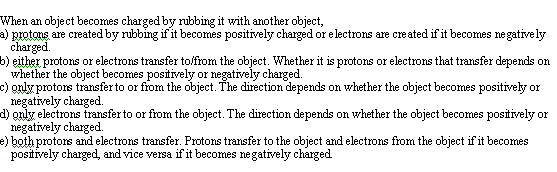

On the final

exam, three DC-circuits questions probed students’ mastery

of the basic concepts of current, voltage, and series and

parallel circuits. For a given series and parallel circuit,

students were asked to: (1) rank the currents through each of

the bulbs, (2) rank the voltage drops across the bulbs in the

same circuit, and (3) predict whether the current through the

first bulb increased, decreased, or remained the same when a

switch in the parallel section was opened.

In Figure 5, the average number of correct responses

for the DC-circuit and non-DC-circuit exam questions is

shown. The average on the final exam questions not relating to

the circuits was the

same for the

two groups

(0.62 for CCK, with N = 99, σ = 0.18; and 0.61 for TRAD, N = 132, σ = 0.17).

Figure 5. Student performance on final exam questions. CCK indicates student groups using

Circuit

Construction Kit simulation; TRAD indicates students using real lab equipment. Error

is the standard error of the mean.

The mean performance on the three circuits questions is 0.59 (σ = 0.27) for the CCK group and 0.48 (σ = 0.27) for the TRAD group. This is a statistically significant difference at the level of p<0.002 (by Fisher Test or one-tailed binomial distribution) (Finkelstein et al., 2005).
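As a rough consistency check on the size of this difference, the standard error of the mean for each group can be worked out from the reported values. The calculation assumes that the quoted 0.27 is each group's standard deviation (written $\sigma$ here) and that the group sizes are the N = 99 and N = 132 given for the final exam. It is an illustration only and does not reproduce the Fisher or binomial test used in the study.

$$
\mathrm{SEM}_{\mathrm{CCK}} = \frac{\sigma}{\sqrt{N}} = \frac{0.27}{\sqrt{99}} \approx 0.027,
\qquad
\mathrm{SEM}_{\mathrm{TRAD}} = \frac{0.27}{\sqrt{132}} \approx 0.024
$$

$$
\frac{0.59 - 0.48}{\sqrt{0.027^{2} + 0.024^{2}}} \approx \frac{0.11}{0.036} \approx 3.1
$$

Under these assumptions the two means differ by about three combined standard errors, which is in line with the small p-value reported.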

We also assessed the impact of using the simulation on

students’ abilities to manipulate physical equipment. During

the last 30 minutes of each lab class, all students engaged in

a common challenge worksheet requiring them to assemble a

circuit with real

equipment, show a TA, and write a description of the behavior of

the circuit. For all CCK sections, the average time to

complete the circuit challenge was 14.0 minutes; for the

Traditional sections, it was 17.7 minutes (statistically

significant difference at p<0.01 by two-tailed t-test of

pooled variance across sections). Also, the CCK group scored

62% correct on the written portion of the challenge, whereas

the traditional group scored 55% – a statistically

significant shift (p<0.03 by a two-tailed

z-test) (Finkelstein et al., 2005).

These data indicate that students learning with the

simulation are more capable at understanding, constructing,

and writing about real circuits than their counterparts who

had been working with real circuit elements all along. In this

application the computer simulations take advantage of the

features described above – they productively engage students

in building ideas by providing a workspace that is

simultaneously dynamic and constraining, and allows them to

mess about productively.

Recitation Section

Most

introductory college courses include 1-hour recitations or

weekly problem solving sections. Recently we have implemented Tutorials

in Introductory Physics (McDermott & Shaffer, 2002)

in the recitations of our calculus-based physics course. These

student-centered activities are known to improve student

understanding (McDermott & Shaffer, 1992),

and we have recently demonstrated that it is possible to

replicate the success of the curricular authors (Finkelstein

and Pollock, 2005). In addition to implementing these

Tutorials, which often involve student manipulation of

equipment, we have started to study how simulations might be

used to augment Tutorials or replace the equipment used in

recitation sections.

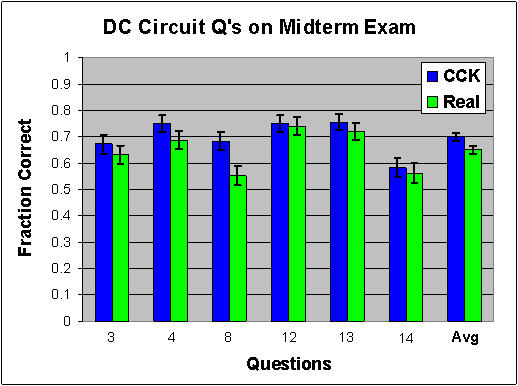

In two of the

most studied Tutorials, which focus on DC circuits, we

investigated how the Circuit

Construction Kit might be substituted for real light

bulbs, batteries and wires. In nine recitation sections

(N~160), CCK was

used in lieu of real equipment, while in the other nine

sections, real equipment was used. As described in Finkelstein

and Pollock (2005), this course included other reforms such as

Peer Instruction in

lecture. On the mid-term exam following the Tutorial, six

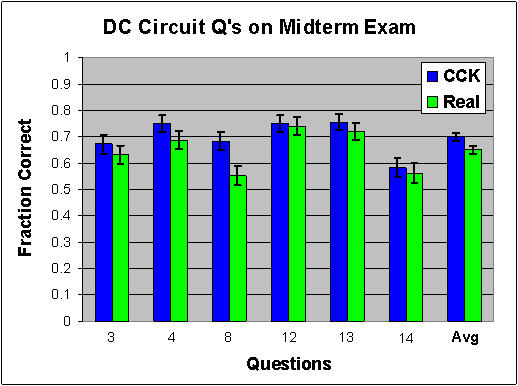

questions were directly related to DC circuits. In Figure 6,

student performance data on these questions are plotted by

treatment (CCK) and control (Real) along with the average

score across all these questions.

Figure 6. Student performance on midterm exam for students who learned about circuits in

recitation section using the Circuit Construction Kit simulation or real equipment. Std. error

of mean is indicated.

Students in the CCK group outperform their counterparts by an

average of approximately 5% (statistically significant p <

0.02 by two-tailed z-test).

We note that simply using simulations in these (or other)

environments does not guarantee success. How these simulations

are used is important. While the CCK

successfully replaced the bulbs and batteries in recitation,

we believe its success is due in part to the coupling of the

simulation with the pedagogical structure of the Tutorials.

Here, the students are encouraged to engage, by building

circuits (real or virtual) and are constrained in their

focus of attention (by the Tutorial structure). However, the

CCK group works with materials that explicitly model current

flow in a manner that real equipment cannot.

In other instances when these heuristics are not

followed, the results are more complex.

In another Tutorial on wave motion, students are asked

to observe an instructor demonstrating a transverse wave

(using a long slinky). Allowing students the direct

manipulation of the related simulation, Wave

on a String, does not improve student performance on

assessments of conceptual mastery. In fact, in some cases

these students did worse. We believe that in this case, not

having structure around the simulation (with the Tutorial

activity not written for direct student engagement) means that

students miss the purpose of the activity, or are not productively

constrained to focus attention on the concepts that were the

object of instruction. As a result, students were less likely

to stay on task.

Informal settings

We have briefly explored how effective

computer simulations might be for student learning of physics

concepts in informal unstructured use. These studies were

conducted by testing students on material that they had not

seen in any of their college courses. The students were

volunteers from two introductory physics courses, and they

were tested by being asked one or two questions on a basic

conceptual idea covered by the simulation.

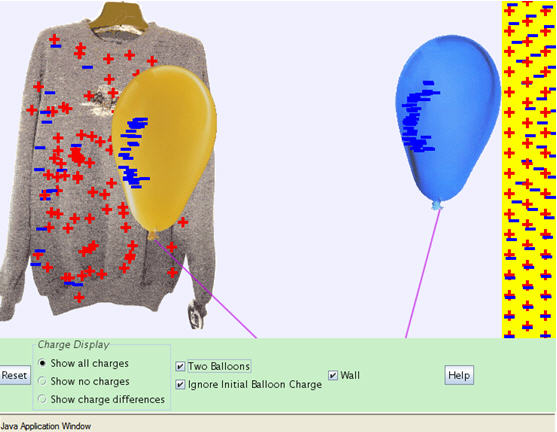

Students

in the treatment group were assigned to one of three

subgroups: i) a group that read a relevant text passage and

was asked a question (read),

ii) a group that played with the simulation and then was asked

the question (play first),

and iii) a group that was asked the question first as a

prediction, then played with the simulation and was asked the

question again (predict

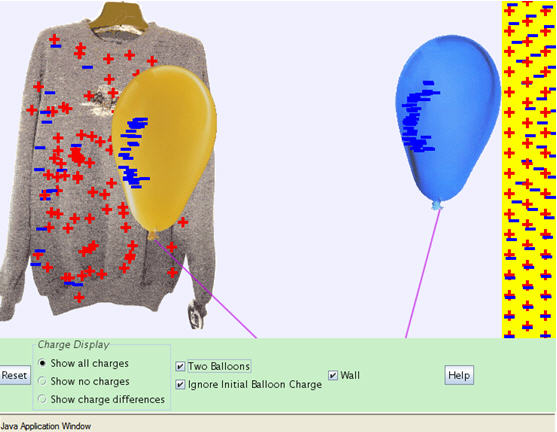

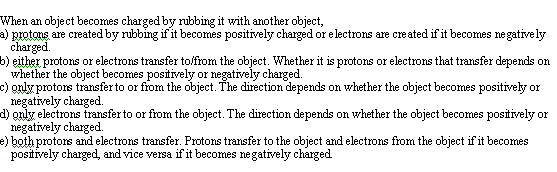

and play). A sample question for the static electricity

simulation is shown in Figure 7 below a snapshot of the

simulation. A control group selected from the two physics

courses was asked the same question for each simulation to

establish the initial state of student knowledge. There were

typically 30 to 50 students per group and tests were run on

five different simulations.

Figure 7. Screenshot from Balloons and Static Electricity simulation

and sample conceptual question.

We found that there was no statistically significant

difference for any individual simulation between the control

group and the group that played with the simulation with no

guidance (play first)

before being asked the question. Similarly, the group that

only read a text passage that directly gave the answer to the

question (read) also

showed no difference from the control group. When results were

averaged over all the simulations, both reading

and play groups

showed equivalent small improvements over the control group.

More significant was the comparison

between control group and the predict

and play group whose play with the simulation was

implicitly guided by the prediction question. The fraction

that answered questions correctly improved from 41% (control

group) to 63% (predict and play group), when averaged over all

five simulations (significant at p<0.001, two-tailed

z-test). Greater insight is provided, however, by looking at

performance on concept questions associated with a particular

simulation, rather than the aggregate. These are shown in

Table 1, with the uncertainties (standard error of the mean)

in parentheses.

Table 1. Student performance (% correct) on conceptual questions for each simulation.

| Simulation Topic | Energy Conservation | Balloons Static Elec | Signal Circuit | Radio Waves | Sound | Weighted average |
|---|---|---|---|---|---|---|
| Control | 56(7) | 29(8) | 35(9) | 18(7) | 60(8) | 41(3.7) |
| Predict & Play | 77(8) | 63(9) | 69(8) | 41(8) | 69(8) | 63(3.8) |
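The variation across simulations is easier to see when each gain is expressed with its propagated uncertainty. The sketch below uses only the values printed in Table 1. It assumes the control and predict-and-play groups are independent, so that their standard errors add in quadrature; the names `TABLE_1` and `gain_with_uncertainty` are hypothetical.

```python
import math

# (percent correct, standard error) for control and predict-and-play groups,
# transcribed from Table 1.
TABLE_1 = {
    "Energy Conservation": ((56, 7), (77, 8)),
    "Balloons Static Elec": ((29, 8), (63, 9)),
    "Signal Circuit": ((35, 9), (69, 8)),
    "Radio Waves": ((18, 7), (41, 8)),
    "Sound": ((60, 8), (69, 8)),
    "Weighted average": ((41, 3.7), (63, 3.8)),
}


def gain_with_uncertainty(control, treatment):
    """Return (gain, uncertainty) in percentage points for independent groups."""
    (c_mean, c_err), (t_mean, t_err) = control, treatment
    return t_mean - c_mean, math.hypot(c_err, t_err)


gains = {topic: gain_with_uncertainty(c, t) for topic, (c, t) in TABLE_1.items()}
for topic, (gain, err) in gains.items():
    ratio = gain / err
    print(f"{topic:22s} gain = {gain:4.0f} +/- {err:4.1f} ({ratio:.1f} standard errors)")
```

Read this way, the Sound gain is smaller than its own uncertainty, while the Balloons and Signal Circuit gains are each close to three standard errors.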

We believe these large variations in the impact of playing

with the simulation to be indications of the manner in which

the simulations are used and the particular concepts that are

addressed. That is, particular questions and concepts (e.g. on

the microscopic nature of charge) are better facilitated by a

simulation that makes explicit use of this microscopic model.

Furthermore, just as learning from all the simulations was

significantly improved by the simple guiding scaffolding of a

predictive question, some simulations require more substantial

scaffolding than others to be effective. For a simulation like

Balloons, where

students learn about charge transfer by manipulating a balloon

as they would in real life, little support is needed, but for

more complex simulations involving manipulations more removed

from everyday experience, more detailed exercises are

required. By observing students using these simulations to

solve homework problems in a number of courses, we have

extensive qualitative data corroborating the variation in

levels of scaffolding required for various simulations.

We

have noticed that simulation interface design and display

greatly impact the learning in these sorts of informal

settings, more so than they do in more structured settings. We

see this effect routinely in the preliminary testing of

simulations as part of their development. Student difficulties

with the use of the interface and confusion over what is being

displayed can result in negligible or even substantial

negative effects on learning. In observations to date, we have

found such undesirable outcomes are much less likely to occur

when the simulation is being used in a structured environment

where there is likely to be implicit or explicit clarification

provided by the instructor.

Conclusions

This

paper has introduced a new suite of computer simulations from

the Physics Education Technology project and demonstrated

their utility in a broad range of environments typical of

instruction in undergraduate physics. Under the appropriate

conditions, we demonstrate that these simulations can be as

productive as, and often more productive than, their traditional

educational counterparts, such as textbooks, live

demonstrations, and even real equipment.

We suspect that an optimal educational experience will

involve complementary and synergistic uses of traditional

resources and these new high-tech tools.

As

we seek to employ these new tools, we must consider how and

where they are used as well as for what educational goals they

are employed. As such, we have started to delineate some of

the key features of the PhET tools and their uses that make

them productive. The

PhET tools are designed to:

support an interactive approach, employ dynamic

feedback, follow a constructivist approach, provide a creative

workplace, make explicit otherwise inaccessible models or

phenomena, and constrain students productively. While not an

exhaustive list, we believe these elements to be critical in

the design and effective use of these simulations.

Acknowledgements

We thank our PhET teammates for their energy, creativity, and

excellence: Ron LeMaster, Samuel Reid, Chris Malley, Michael

Dubson, Danielle Harlow, Noah Podolefsky, Sarah McKagan, Linda

Koch, Chris Maytag, Trish Loeblein, and Linda Wellmann. We are

grateful for support from the National Science Foundation (DUE

#0410744, #0442841), Kavli Operating Institute, Hewlett

Foundation, PhysTEC (APS/AIP/AAPT), the CU Physics Department,

and the Physics Education Research at Colorado group (PER@C).

References

Adams, W.K., Reid, S., LeMaster, R., McKagan, S., Perkins, K. and

Wieman, C.E. (n.d.). A Study of Interface Design for

Engagement and Learning with Educational Simulations. In

preparation.

Bransford, J.D., Brown, A.L. and

Cocking, R.R. (eds.) (2002). How

People Learn, Expanded Edition. Washington, DC: Natl.

Acad. Press.

Clark, R.C. and Mayer, R.

(2003). e-Learning and

the Science of Instruction: Proven Guidelines for Consumers

and Designers of Multimedia Learning. San Francisco: John

Wiley and Sons.

CERI, OECD Centre for

Educational Research and Innovation (2005). E-learning

in Tertiary Education: Where Do We Stand? Paris, France: OECD

Publishing.

diSessa, A. A. (2000). Changing

Minds: Computers, Learning, and Literacy. Cambridge, MA:

MIT Press.

Finkelstein,

N.D.

and Pollock, S.J. (2005). Replicating and Understanding

Successful Innovations: Implementing Tutorials in Introductory

Physics, Physical

Review, Special Topics: Physics Education Research, 1, 010101.

Finkelstein,

N.D. et al. (2005). When learning about the real world is

better done virtually: a study of substituting computer

simulations for laboratory equipment, Physical

Review, Special Topics: Physics Education Research, 1,

010103.

Hake,

R.R. (1998). Interactive-engagement versus traditional

methods: a six-thousand-student survey of mechanics test data

for introductory physics courses. American

Journal of Physics, 66, 64-74.

Mazur,

E. (1997). Peer

Instruction. Upper Saddle River, NJ: Prentice Hall.

McDermott, L.C. and Shaffer,

P.S. (1992). Research as a guide for curriculum development:

an example from introductory electricity Parts I&II, Am J Phys 60(11), 994-1013.

McDermott,

L.C. and Shaffer, P.S. (2002). Tutorials in Introductory Physics. Upper Saddle River, NJ: Prentice

Hall.

MERLOT, Multimedia Educational

Resource for Learning and Online Teaching (n.d.).

Retrieved July 1, 2006.

https://www.merlot.org/

Papert, S. and Harel,

I.

(1991). Situating Constructionism in

S. Papert

and I. Harel (eds) Constructionism.

Westport, CT: Ablex Publishing Corporation.

Perkins, K. et al. (2006).

PhET: Interactive Simulations for Teaching and Learning

Physics, Physics Teacher,

44(1), 18-23.

PhET (2006). Physics Education

Technology Project. Retrieved June 1, 2006. http://phet.colorado.edu

Redish,

E.F. (2003). Teaching

Physics with the Physics Suite. New York, NY: John Wiley

and Sons.

Sokoloff, D. R. and Thornton,

R. K. (1998). Using Interactive Lecture Demonstrations to

Create an Active Learning Environment, Phys.

Teach 35, 340-347.

von Glasersfeld, E. (1983).

Learning as a constructive activity. In J. C. Bergeron and N.

Herscovics (Eds.), Proceedings

of the Fifth Annual Meeting of the North American Chapter of

the International Group for the Psychology of Mathematics

Education. Montreal: University of Montreal Press.

Wieman, C.E. and Perkins, K.K.

(2005). Transforming Physics Education. Physics Today, 58(11), 36-42.

Wieman,

C.E. and Perkins, K.K. (2006). A Powerful Tool for Teaching

Science. Nature: Physics,

2(5), 290-292.

Received

31 May 2006; revised manuscript received 1 Aug 2006

This work is licensed under a

Creative Commons Attribution-NonCommercial-ShareAlike 2.5 License.
